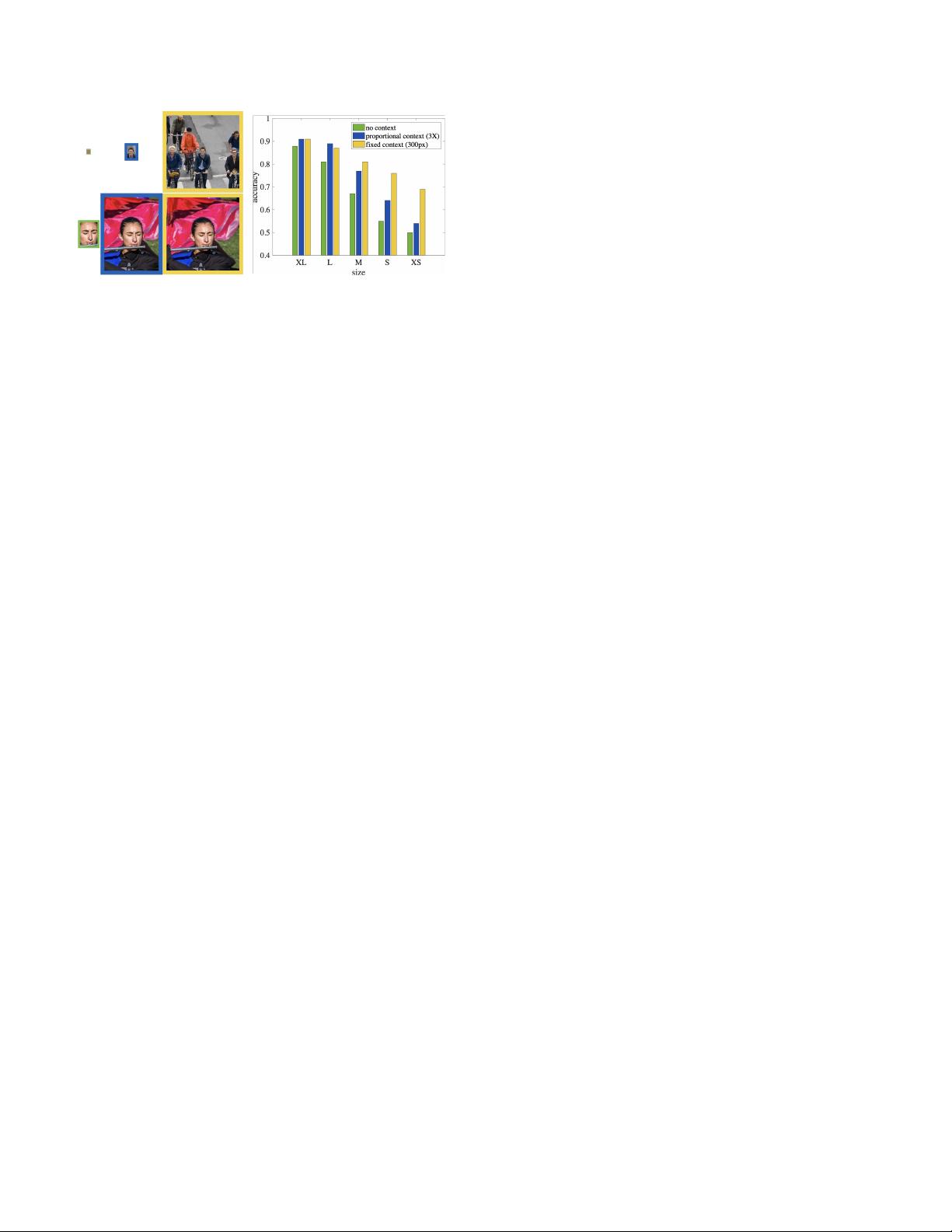

Figure 3: On the left, we visualize a large and a small face, both with and without context. One does not need context to recognize the large face, while the small face is dramatically unrecognizable without its context. We quantify this observation with a simple human experiment on the right, where users classify true and false positive faces of our proposed detector. Adding proportional context (by enlarging the window by 3X) provides a small improvement on large faces but is insufficient for small faces. Adding a fixed contextual window of 300 pixels dramatically reduces error on small faces by 20%. This suggests that context should be modeled in a scale-variant manner. We operationalize this observation with foveal templates of massively large receptive fields (around 300x300, the size of the yellow boxes).
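
To make the contrast in the figure concrete, the sketch below counts how many context pixels each strategy exposes around a face. It assumes that "25x20" and "250x200" are read as height x width and that the fixed contextual window is a roughly 300x300 box centered on the face (matching the yellow boxes). The names `Box`, `proportional_context`, `fixed_context`, and `context_pixels` are hypothetical and are not part of our detector.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned box: center (cx, cy) and size (w, h), in pixels."""
    cx: float
    cy: float
    w: float
    h: float

    @property
    def area(self) -> float:
        return self.w * self.h


def proportional_context(face: Box, factor: float = 3.0) -> Box:
    """Scale-invariant context: enlarge the face window by a constant factor."""
    return Box(face.cx, face.cy, face.w * factor, face.h * factor)


def fixed_context(face: Box, size: float = 300.0) -> Box:
    """Scale-variant context (assumed ~size x size window), never smaller than the face."""
    return Box(face.cx, face.cy, max(size, face.w), max(size, face.h))


def context_pixels(face: Box, window: Box) -> float:
    """Pixels visible in the window that do not belong to the face itself."""
    return window.area - face.area


# Small (25x20) and large (250x200) faces, read as height x width.
for face in (Box(0, 0, w=20, h=25), Box(0, 0, w=200, h=250)):
    prop = context_pixels(face, proportional_context(face))
    fixed = context_pixels(face, fixed_context(face))
    print(f"{face.h:g}x{face.w:g} face: 3X context {prop:g} px, fixed context {fixed:g} px")
# 25x20 face: 3X context 4000 px, fixed context 89500 px
# 250x200 face: 3X context 400000 px, fixed context 40000 px
```

Under these assumptions, proportional context gives the small face only a few thousand extra pixels, whereas a fixed window gives it roughly twenty times more; for the large face the ordering reverses. This is the sense in which a fixed context window is scale-variant.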

2. Related work

Scale-invariance: The vast majority of recognition pipelines focus on scale-invariant representations, dating back to SIFT [15]. Current approaches to detection such as Faster RCNN [18] subscribe to this philosophy as well, extracting scale-invariant features through ROI pooling or an image pyramid [19]. We provide an in-depth exploration of scale-variant templates, which have previously been proposed for pedestrian detection [17], sometimes in the context of improved speed [3]. SSD [13] is a recent technique based on deep features that makes use of scale-variant templates. Our work differs in its exploration of context for tiny object detection.

Context: Context is key to finding small instances, as shown in multiple recognition tasks. In object detection, [2] stacks spatial RNNs (IRNN [11]) to model context outside the region of interest and shows improvements on small object detection. In pedestrian detection, [17] uses ground plane estimation as contextual features and improves detection on small instances. In face detection, [28] simultaneously pools ROI features around faces and bodies for scoring detections, which significantly improves overall performance. Our proposed work makes use of large local context (as opposed to a global contextual descriptor [2, 17]) in a scale-variant way (as opposed to [28]). We show that context is mostly useful for finding low-resolution faces.

Multi-scale representation: Multi-scale representation has been proven useful for many recognition tasks. [8, 14, 1] show that deep multi-scale descriptors (known as “hypercolumns”) are useful for semantic segmentation. [2, 13] demonstrate improvements for such models on object detection. [28] pools multi-scale ROI features. Our model uses “hypercolumn” features, and we point out that fine-scale features are most useful for localizing small objects (Sec. 3.1 and Fig. 5).
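
A minimal sketch of how a hypercolumn descriptor can be assembled from the four ResNet-50 blocks named in the setup of Section 3: coarser maps are upsampled to the resolution of the finest one and concatenated along the channel axis, so every spatial position carries both fine-scale and coarse-scale features. It assumes channels-first arrays, the standard ResNet-50 output strides (4, 8, 16, 32) and channel widths, and nearest-neighbour upsampling as a stand-in for the bilinear or learned upsampling a real network would typically use. The function names are hypothetical and the features are random placeholders.

```python
import numpy as np


def upsample_nearest(feat: np.ndarray, factor: int) -> np.ndarray:
    """Nearest-neighbour upsampling of a (C, H, W) feature map by an integer factor."""
    return feat.repeat(factor, axis=1).repeat(factor, axis=2)


def hypercolumn(feats: dict, strides: dict) -> np.ndarray:
    """Concatenate feature maps of different strides at the finest stride.

    feats maps a block name to a (C, H, W) array; strides maps the same name
    to its output stride relative to the input image.
    """
    finest = min(strides.values())
    columns = [upsample_nearest(f, strides[name] // finest) for name, f in feats.items()]
    return np.concatenate(columns, axis=0)  # raises if spatial sizes disagree


# Standard ResNet-50 output strides and channel widths (assumed, channels-first).
STRIDES = {"res2": 4, "res3": 8, "res4": 16, "res5": 32}
CHANNELS = {"res2": 256, "res3": 512, "res4": 1024, "res5": 2048}

# Random placeholder features for a hypothetical 64x64 input image.
rng = np.random.default_rng(0)
feats = {
    name: rng.standard_normal((CHANNELS[name], 64 // s, 64 // s)).astype(np.float32)
    for name, s in STRIDES.items()
}

hc = hypercolumn(feats, STRIDES)
print(hc.shape)  # (3840, 16, 16): one 3840-d descriptor per res2 position
```

Because the concatenated descriptor lives on the res2 grid, the fine-scale channels keep the spatial precision needed to localize small objects, while the coarse channels contribute large receptive fields.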

RPN: Our model superficially resembles a region-proposal network (RPN) trained for a specific object class instead of a general “objectness” proposal generator [18]. The important differences are that we use foveal descriptors (implemented through multi-scale features), we select a range of object sizes and aspects through cross-validation, and our models make use of an image pyramid to find extreme scales. In particular, our approach for finding small objects makes use of scale-specific detectors tuned for interpolated images. Without these modifications, performance on small faces drops dramatically, by more than 10% (Table 1).
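
The role of interpolated images can be illustrated with a small calculation: to find a face smaller than a detector's canonical template, the image is upsampled until the face roughly matches the template. The sketch below assumes power-of-two pyramid levels and a single hypothetical 50-pixel template height chosen only for illustration; `pyramid_scale` is a hypothetical helper, and the actual resizing (e.g., bilinear interpolation) is omitted.

```python
import math


def pyramid_scale(face_height: float, template_height: float) -> float:
    """Power-of-two resize factor bringing face_height closest (in log space)
    to template_height. Factors > 1 mean the image must be upsampled
    (interpolated) before the template is applied."""
    return 2.0 ** round(math.log2(template_height / face_height))


# Hypothetical canonical template height, for illustration only.
TEMPLATE_HEIGHT = 50.0

for face_height in (12.5, 25.0, 50.0, 100.0, 250.0):
    s = pyramid_scale(face_height, TEMPLATE_HEIGHT)
    kind = "upsampled/interpolated" if s > 1 else "kept or downsampled"
    print(f"{face_height:g}px face -> resize image by {s:g}x ({kind})")
```

Under this assumption, the smallest faces are only reachable on interpolated pyramid levels, which is why a detector that has seen interpolated images at training time matters for the small-face regime.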

3. Exploring context and resolution

In this section, we present an exploratory analysis of the issues at play that will inform our final model. To frame the discussion, we ask the following simple question: what is the best way to find small faces of a fixed size (25x20)? By explicitly factoring out scale variation in terms of the desired output, we can explore the role of context and the canonical template size. Intuitively, context will be crucial for finding small faces. Canonical template size may seem like a strange dimension to explore: given that we want to find faces of size 25x20, why define a template of any size other than 25x20? Our analysis gives a surprising answer of when and why this should be done. To better understand the implications of our analysis, along the way we also ask the analogous question for a large object size: what is the best way to find large faces of a fixed size (250x200)?

Setup: We explore different strategies for building scanning-window detectors for fixed-size (e.g., 25x20) faces. We treat fixed-size object detection as a binary heatmap prediction problem, where the predicted heatmap at a pixel position (x, y) specifies the confidence of a fixed-size detection centered at (x, y). We train heatmap predictors using a fully convolutional network (FCN) [14] built on the state-of-the-art ResNet architecture [9]. We explore multi-scale features extracted from the last layer of each res-block, i.e., (res2cx, res3dx, res4fx, res5cx) in terms of ResNet-50. We will henceforth refer to these as (res2, res3, res4, res5) features. We discuss the remaining particulars of our training pipeline in Section 5.
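
The heatmap formulation can be sketched as follows: training targets mark every position (x, y) whose fixed-size box overlaps a ground-truth face sufficiently, and decoding turns every confident position back into a fixed-size box. This is a minimal illustration under stated assumptions, not the training pipeline of Section 5: the heatmap is computed at full pixel resolution rather than on a strided feature grid, 25x20 is read as height x width, ground-truth faces are assumed to share the fixed size, and the IoU threshold of 0.7, the score threshold of 0.5, and all function names are hypothetical. Non-maximum suppression, which would normally follow decoding, is omitted.

```python
import numpy as np

# Fixed detection size; "25x20" is read as height x width (an assumption).
FACE_H, FACE_W = 25, 20


def fixed_size_iou(dx, dy, w=FACE_W, h=FACE_H):
    """IoU of two w x h boxes whose centers are offset by (dx, dy); works on arrays."""
    inter = np.clip(w - np.abs(dx), 0, None) * np.clip(h - np.abs(dy), 0, None)
    return inter / (2 * w * h - inter)


def target_heatmap(shape, centers, thresh=0.7):
    """Binary training target: 1 at (x, y) if a fixed-size box centered there
    overlaps some ground-truth face (assumed fixed-size too) with IoU >= thresh."""
    H, W = shape
    ys, xs = np.mgrid[0:H, 0:W]
    label = np.zeros(shape, dtype=bool)
    for cx, cy in centers:
        label |= fixed_size_iou(xs - cx, ys - cy) >= thresh
    return label.astype(np.float32)


def decode(heatmap, score_thresh=0.5):
    """Turn every position with confidence >= score_thresh into a fixed-size
    box (x1, y1, x2, y2, score) centered at that position."""
    ys, xs = np.nonzero(heatmap >= score_thresh)
    scores = heatmap[ys, xs]
    return np.stack([xs - FACE_W / 2, ys - FACE_H / 2,
                     xs + FACE_W / 2, ys + FACE_H / 2, scores], axis=1)


# One ground-truth face centered at (x=50, y=50) in a 100x100 image.
label = target_heatmap((100, 100), [(50, 50)])
boxes = decode(label)
print(boxes.shape[1], int(label.sum()))
```

Because every detection has the same size, the heatmap only needs to encode *where* a face is, which is what lets the question of context and template size be studied separately from scale.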