Probabilistic Dense Reconstruction from a Moving Camera

Yonggen Ling¹, Kaixuan Wang², and Shaojie Shen²

Abstract— This paper presents a probabilistic approach for online dense reconstruction using a single monocular camera moving through the environment. Compared to spatial stereo, depth estimation from motion stereo is challenging because of insufficient parallax, visual scale changes, and pose errors. We exploit both the spatial and temporal correlations of consecutive depth estimates to increase the robustness and accuracy of monocular depth estimation. We propose an online, recursive, probabilistic scheme that computes depth estimates together with their covariances and inlier probability expectations. The resulting depth hypotheses are integrated into dense 3D models in an uncertainty-aware way. We show the effectiveness and efficiency of the proposed approach by comparing it with state-of-the-art methods on the TUM RGB-D SLAM and ICL-NUIM datasets. Online indoor and outdoor experiments are also presented to demonstrate performance.

I. INTRODUCTION

Accurate localization and dense mapping are fundamental components of autonomous robotic systems, as they serve as the perception input for obstacle avoidance and path planning. While localization from a monocular camera has been well studied [1]–[5], online dense reconstruction using a single moving camera is still under development [6]–[9]. Because monocular depth estimation relies on consecutive estimated poses and images, its main issues are imprecise poses caused by localization errors and inaccurate visual correspondences caused by insufficient parallax and visual scale changes. Depth estimation from traditional spatial stereo cameras (usually in the fronto-parallel setting), in contrast, avoids the issues met with motion stereo, and many algorithms based on stereo cameras have been developed over the past decades [10, 11]. The significant drawback of spatial stereo is its fixed baseline: distant objects are better estimated with longer baselines because of larger disparities, while close-up structures are better reconstructed with shorter baselines because of larger visual overlap. Moreover, for real-world platforms such as mobile robots, phones, and wearable devices, size constraints rule out long-baseline stereo cameras. If the baseline is small relative to the average scene depth of the perceived environment, the images captured by the two stereo cameras are nearly identical. As a result, the visual information from the stereo pair degrades to the same level as that obtained by a monocular camera.
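The baseline trade-off described above can be made concrete with the standard rectified-stereo relation d = f b / z, where d is the disparity in pixels, f the focal length in pixels, b the baseline, and z the depth. The following is a minimal illustrative sketch, not part of the proposed method: the function names, the 500-pixel focal length, and the 0.5-pixel disparity noise are assumptions chosen for the example. It uses first-order error propagation, sigma_z ≈ z^2 sigma_d / (f b), to show how depth uncertainty grows when the baseline is small relative to the scene depth.

```python
def disparity_px(focal_px: float, baseline_m: float, depth_m: float) -> float:
    """Disparity (pixels) of a point at depth_m for a rectified stereo pair."""
    return focal_px * baseline_m / depth_m


def depth_std_m(focal_px: float, baseline_m: float, depth_m: float,
                disparity_std_px: float = 0.5) -> float:
    """First-order depth standard deviation (meters).

    From z = f * b / d it follows that |dz/dd| = z**2 / (f * b), so a
    disparity error of disparity_std_px maps to the depth error below.
    The 0.5 px default is an assumed matching noise, not a measured value.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_std_px


if __name__ == "__main__":
    focal_px = 500.0  # assumed focal length in pixels
    for baseline_m in (0.1, 0.5):
        for depth_m in (2.0, 20.0):
            d = disparity_px(focal_px, baseline_m, depth_m)
            s = depth_std_m(focal_px, baseline_m, depth_m)
            print(f"baseline={baseline_m:.1f} m  depth={depth_m:5.1f} m  "
                  f"disparity={d:6.1f} px  depth_std={s:.3f} m")
```

Under these assumed values, a point at 20 m has a depth standard deviation of about 4 m with a 0.1 m baseline and about 0.8 m with a 0.5 m baseline, which illustrates why distant structure benefits from the longer baselines that a moving camera can provide.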

¹Tencent AI Lab, China. ²The Hong Kong University of Science and Technology, Hong Kong SAR, China. Correspondence to: Yonggen Ling (ylingaa@connect.ust.hk), Kaixuan Wang and Shaojie Shen ({kwangap, eeshaojie}@ust.hk). This work was partially supported by the HKUST institutional studentship.

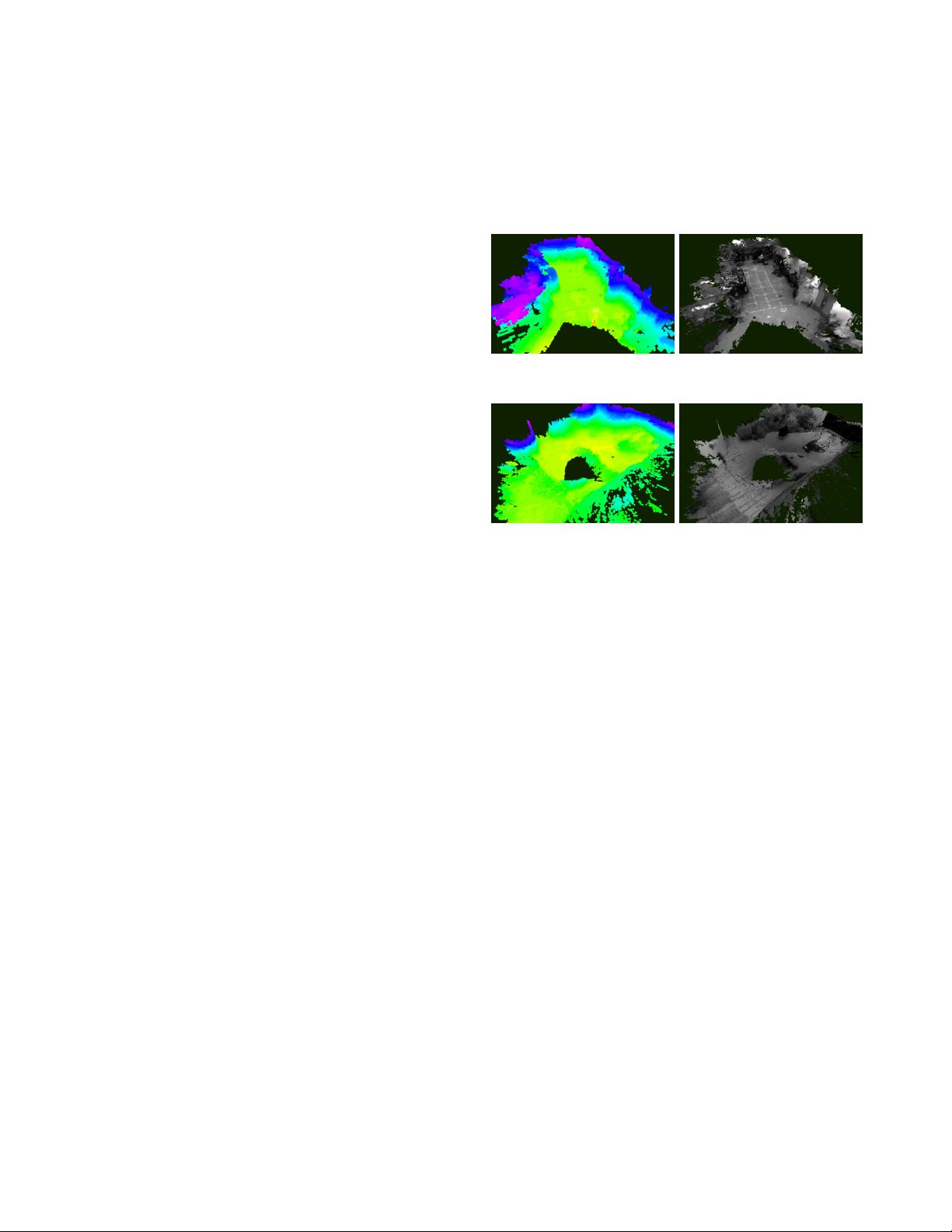

(a) Dense indoor reconstruction for motion planning.

(b) Meshing view of indoor reconstruction for visualization.

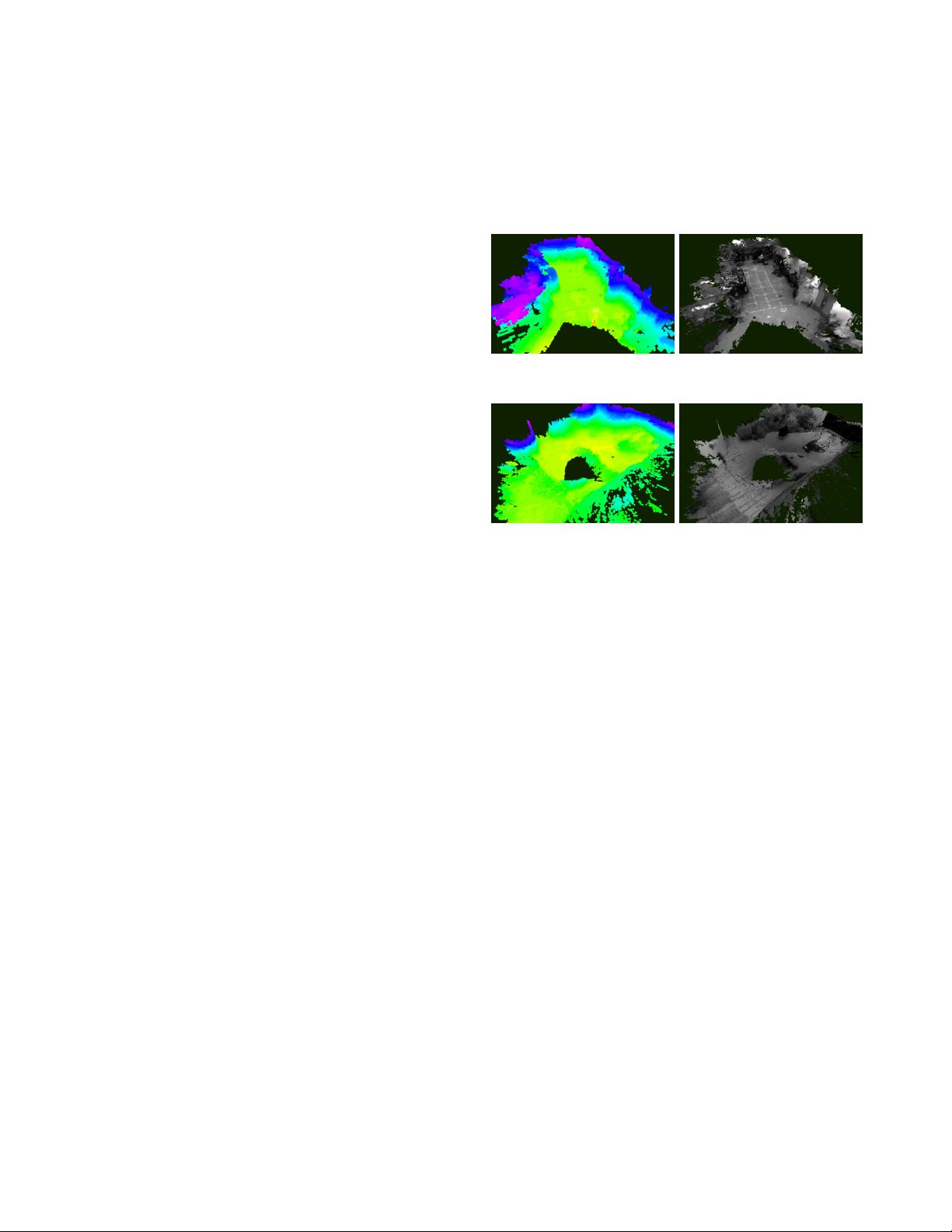

(c) Dense outdoor reconstruction for motion planning.

(d) Meshing view of outdoor reconstruction for visualization.

Fig. 1. Dense reconstruction of indoor and outdoor environments from a single moving camera. (a)(c) Reconstruction for robotic applications, such as motion planning and obstacle avoidance. Colors vary with height to show the structure of the reconstructed dense environment. (b)(d) Meshing view obtained by applying marching cubes [12] to truncated signed distance functions (TSDFs) for visualization. More details can be found at: https://1drv.ms/v/s!ApzRxvwAxXqQmlW9ZOrp9hdA7ude

Fundamentally different from passive cameras, time-of-flight (TOF) cameras and structured-light cameras emit light actively and can provide high-accuracy depth measurements. With the advent of the Microsoft Kinect and ASUS Xtion, dense reconstruction algorithms based on active depth cameras [13]–[15] have achieved impressive results in recent years. Unfortunately, active sensors do not work under strong sunlight, which limits their application to indoor environments.

This paper focuses on dense reconstruction using a single monocular camera, which adapts to both indoor and outdoor environments with various scene depth ranges. Compared to existing methods [7, 9, 13]–[16], we make careful improvements to multiple sub-modules of the whole mapping pipeline, resulting in substantial gains in mapping performance. The main contributions of this paper are as follows:

• A joint probabilistic consideration of depth estimation and integration.

• A detailed discussion of aggregated costs and their probability modeling.

• An online, recursive, probabilistic depth estimation scheme that utilizes both the spatial and temporal correlations of consecutive depth estimates.

• Open-source implementations available at

2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)

Madrid, Spain, October 1-5, 2018

978-1-5386-8093-3/18/$31.00 ©2018 IEEE