block in another stripe. That is, a parity block in a stripe can

either (i) be stored on a separate and dedicated node or (ii) be

mixed with a data block in another stripe across all nodes in

the cluster.

2.2. Replication redundancy

Replication schemes are widely used to ensure high-data reli-

ability and to facilitate I/O parallelisms in distributed storage

clusters like GFS [

1], HDFS [2] and QFS [24]. A cluster file

system maintains two or three replicas for each data block.

However, replicated data improve data reliability and I/O

performance at the cost of high-storage consumption. For

example, the storage capacity overhead of replication and

triplication is as high as 200% and 300%, respectively.

Archiving infrequently accessed data (a.k.a., unpopular data)

can improve storage utilization. Additionally, such an archiv-

ing operation has a little impact on I/O access services in clus-

ters since the data to be archived exhibit decreased access

frequency. In the Facebook’s BLOB storage clusters, the BLOB

storage workload changes along with Facebook’sgrowth.

Consequentially, newly created BLOBs (i.e. hot data) have three

replicas to support a high request rate; week-old BLOBs (i.e.

warm data) have two duplicates using Geo-replicated XOR cod-

ing; and 2

+

-month old data (i.e. cold data) are converted into

the format of (14,10) RS-coded data [

18, 25].

Replica placement plays a significant role in data reliabil-

ity. A typical example is the rack-aware replica placement

policy adopted by HDFS [

2]. With the rack-aware replica

placement in place, three replicas of a data block are orga-

nized as below: one replica is stored on one node in a local

rack, another replica is placed on a node in a remote rack and

the last one is kept on a separate node in the same remote

rack. When it comes to two-way replication, each primary

block and its replica block are stored on two distinct nodes.

When an erasure-coded data archival proced ure is launched,

it is challenging to determine the set of source data blocks for

an archival stripe from a group of data replicas, because repli-

cas of any two distinct data blocks are randomly distributed.

2.3. Distributed erasure-coded archiv al

Decentralized encoding [

12], parallel data archiving [15] and

pipelined archival [

14] belong to the camp of distributed data

archival schemes (i.e. DArch). In the DArch cases, two nodes

or more—acting as encoding nodes—accomplish the parity

generation. In particular, each encoding node retrieves k dif-

ferent data blocks from existing data replicas and computes r

parity blocks. In this section, we make use of a concrete

example to illustrate the DArch scheme.

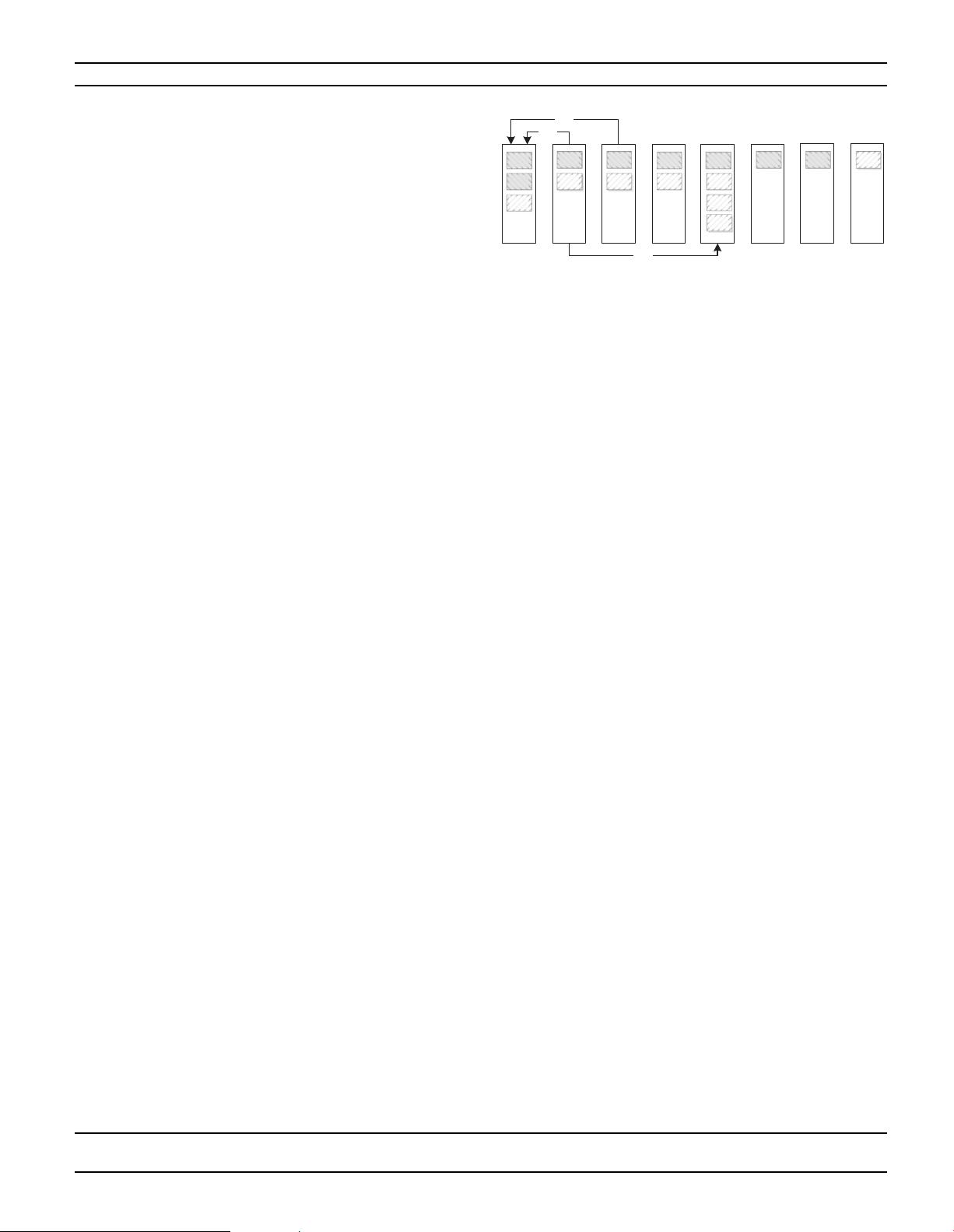

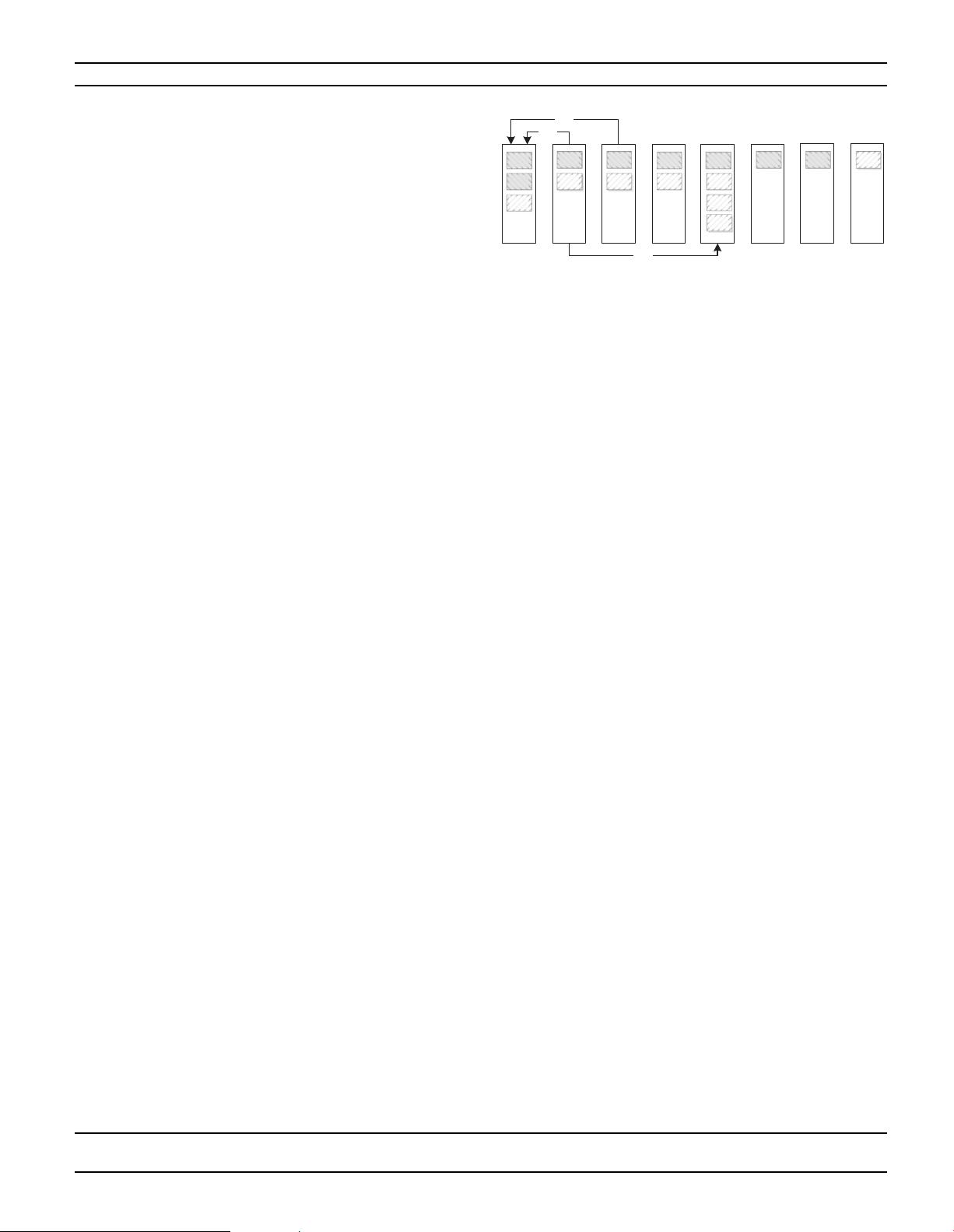

Figure

3 demonstrates how to archive two-replica data with

random distribution using RS codes with coding parameter

‘k = 4’ in a storage cluster, where

{

DDDD,,,

1,1 1,2 1,3 1,4

and

{

DDDD,,,

2,1 2,2 2,3 2,4

are the source data blocks of stripe 1

and 2, respectively. Data blocks are randomly distributed on

eight data nodes as long as two replicas of any data block are

stored on two different data nodes.

DArch embraces an idea of parallel archiving, namely,

multiple encoding nodes share the responsibility of parity-

block generation. For each archival stripe, a master node and

some provider nodes are chosen according to data locality. A

master node performs as an encoding node for a specific arch-

ival stripe. Specifically, given a stripe, a master node should

be a node that contains the most amount of source data blocks

in the stripe. For example, Fig.

3 illustrates that the two arch-

ival stripes are processed by two encoding nodes—

N

1

and

N

5

. After a master node is selected for an archival stripe,

DArch nominates associated data-block provider nodes as fol-

lows: a higher priority is assigned to a node storing a larger

amount of source data blocks; the master node fetches data

blocks from the remote node with a high priority in the first

place, and so forth.

In this study, we investigate the optimization approaches

on the basis of DArch, which is capable of accomplishing

erasure-coded archival for replicas managed by random data

placement strategies. After a thorough analysis, we observe

that non-sequential disk reads coupled with imbalanced I/O

loads suppress overall archiving performance. To alleviate the

above bottlenecks, we propose to incorporate a prefetching

mechanism to the data-block-reading stage to enhance disk I/

O throughput (see Section

3), and adopt a load-balancing

strategy to evenly distribute archival tasks among multiple

nodes to increase the utilization of network bandwidth (see

Section

4).

3. PREFETCHING-ENABLED ARCHIVING

3.1. Performance bottlenecks in data archival

In a handful of replica-based storage clusters (e.g. GFS [

8],

HDFS [

9] and QFS [24]), files are organized in form of large

D1,3

D1,4

D2,4

SN2 SN3 SN4 SN5

#

SN6 SN7 SN8

(

*

EN for stripe 1) (

#

EN for stripe 2)

SN1

*

D1,1

D1,2

D1,3 D1,4

D2,1

D2,2

D2,3

D2,4

D1,1 D1,2

D1,3 D1,4

D2,1

D2,2D2,3

D2,4

FIGURE 3. Distributed archiving scheme (DArch) enables multiple

encoding nodes (e.g.

N

1

and

N

5

) to share the data archival respon-

sibility for all archival stripes.

4J.HUANG et al.

SECTION B: COMPUTER AND COMMUNICATIONS NETWORKS AND SYSTEMS

THE COMPUTER JOURNAL, 2018

Downloaded from https://academic.oup.com/comjnl/advance-article-abstract/doi/10.1093/comjnl/bxy079/5065104 by Huazhong University of Science and Technology user on 02 January 2019