IEEE TRANSACTIONS ON IMAGE PROCESSING, VOL. XXX, NO. XXX, XXXXX 2014 3

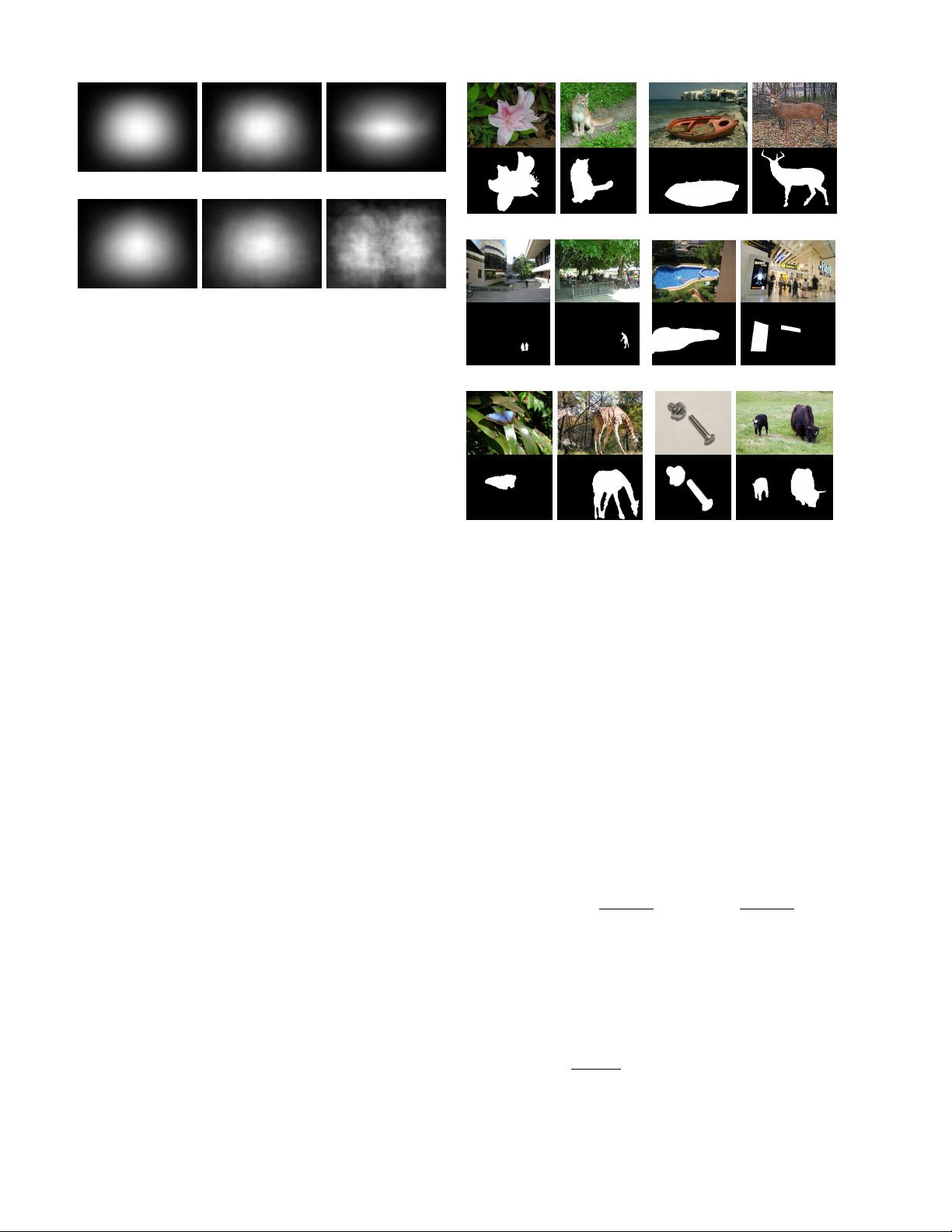

(a) MSRA10K (b) ECSSD (c) THUR15K

(d) DUT-OMRON (e) JuddDB (f) SED2

Fig. 2. Average annotation maps of six datasets used in benchmarking.

images in SED2 usually have two objects aligned around opposite image borders. Moreover, the spatial distribution of salient objects in JuddDB varies more than in the other datasets, indicating that this dataset has a smaller positional bias (i.e., center-bias of salient objects and border-bias of background regions).
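Average annotation maps like those in Fig. 2 can be approximated with the minimal sketch below. It assumes that each binary mask is rescaled to a common grid with nearest-neighbour sampling before averaging; the 64 × 64 grid and the helper names `resize_nearest` and `average_annotation_map` are hypothetical choices for illustration, not the benchmark's own code. A sharp central peak in the result signals center-bias, while a flatter map (as described for JuddDB) signals a smaller positional bias.

```python
import numpy as np


def resize_nearest(mask: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbour resize of a 2-D mask to (out_h, out_w)."""
    h, w = mask.shape
    rows = np.arange(out_h) * h // out_h  # source row for each output row
    cols = np.arange(out_w) * w // out_w  # source column for each output column
    return mask[rows[:, None], cols[None, :]]


def average_annotation_map(masks, size=(64, 64)) -> np.ndarray:
    """Average binary ground-truth masks after resizing them to a common grid.

    Each output value is the fraction of images in which that relative
    location is annotated as salient (hypothetical helper, assumed grid size).
    """
    out_h, out_w = size
    acc = np.zeros((out_h, out_w), dtype=np.float64)
    for m in masks:
        binary = (np.asarray(m) > 0).astype(np.float64)
        acc += resize_nearest(binary, out_h, out_w)
    return acc / len(masks)
```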

In Fig. 4(b), we show the complexity of images in the six benchmark datasets. To this end, we apply the segmentation algorithm of Felzenszwalb et al. [101] and count how many super-pixels (i.e., homogeneous regions) are obtained on average from the salient objects and from the background regions of each image. This count reflects how challenging a benchmark is, since a large number of super-pixels often indicates complex foreground objects and cluttered backgrounds. From Fig. 4(b), we can see that JuddDB is the most challenging benchmark, with an average of 493 super-pixels in the background of each image. In contrast, SED2 yields fewer super-pixels in both foreground and background regions, indicating that its images often contain uniform regions and are relatively easy to process.
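The super-pixel statistic can be sketched as follows, assuming a segment label map is already available (for example, from Felzenszwalb-Huttenlocher graph-based segmentation). The rule that assigns a segment to the foreground when more than half of its pixels lie inside G is an assumption made for illustration, as is the helper name `count_superpixels`; the text does not say how segments straddling the object boundary are counted.

```python
import numpy as np


def count_superpixels(labels, gt):
    """Return (#foreground segments, #background segments) for one image.

    labels: 2-D integer map of segment ids from any over-segmentation.
    gt:     2-D binary ground-truth mask (non-zero = salient object).
    Assumption: a segment counts as foreground if more than half of its
    pixels lie inside gt; otherwise it counts as background.
    """
    flat_labels = np.asarray(labels).ravel()
    fg = (np.asarray(gt) > 0).ravel().astype(np.float64)
    ids, inverse = np.unique(flat_labels, return_inverse=True)
    total = np.bincount(inverse)               # pixels per segment
    inside = np.bincount(inverse, weights=fg)  # salient pixels per segment
    n_fg = int(np.sum(inside > total / 2))
    return n_fg, len(ids) - n_fg
```

In practice the label map could come from `skimage.segmentation.felzenszwalb`; any parameter values chosen for it would be a further assumption, since they are not given here.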

In Fig. 4(c), we show the average object sizes of these benchmarks, where the size of each object is normalized by the size of the corresponding image. MSRA10K and ECSSD have larger objects, while SED2 has smaller ones. In particular, some benchmarks contain images with a limited number of regions and large foreground objects. Combined with the center-bias property, this makes it very easy to achieve high precision on these images.
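The normalized object size is a simple ratio, |G| / (W × H), averaged over a dataset. The sketch below is a minimal version under one stated assumption: it treats all salient pixels of an image as a single object, so datasets with several objects per image (such as SED2) would additionally need connected-component labelling to obtain per-object sizes. The function names are hypothetical.

```python
import numpy as np


def normalized_object_size(gt) -> float:
    """Fraction of the image covered by salient pixels: |G| / (W x H).

    Assumption: all salient pixels in the mask are treated as one object.
    """
    mask = np.asarray(gt) > 0
    return float(mask.sum()) / mask.size


def average_object_size(masks) -> float:
    """Mean normalized object size over a list of ground-truth masks."""
    return float(np.mean([normalized_object_size(m) for m in masks]))
```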

C. Evaluation Measures

There are several ways to measure the agreement between model predictions and human annotations [21]. Some metrics evaluate the overlap between a tagged region and the ground truth, while others assess how accurately the drawn shapes follow the object boundary. In addition, some metrics consider both boundary and shape [102].

Here, we use three universally-agreed, standard, and

easy-to-understand measures for evaluating a salient object

detection model. The first two evaluation metrics are based

on the overlapping area between subjective annotation and

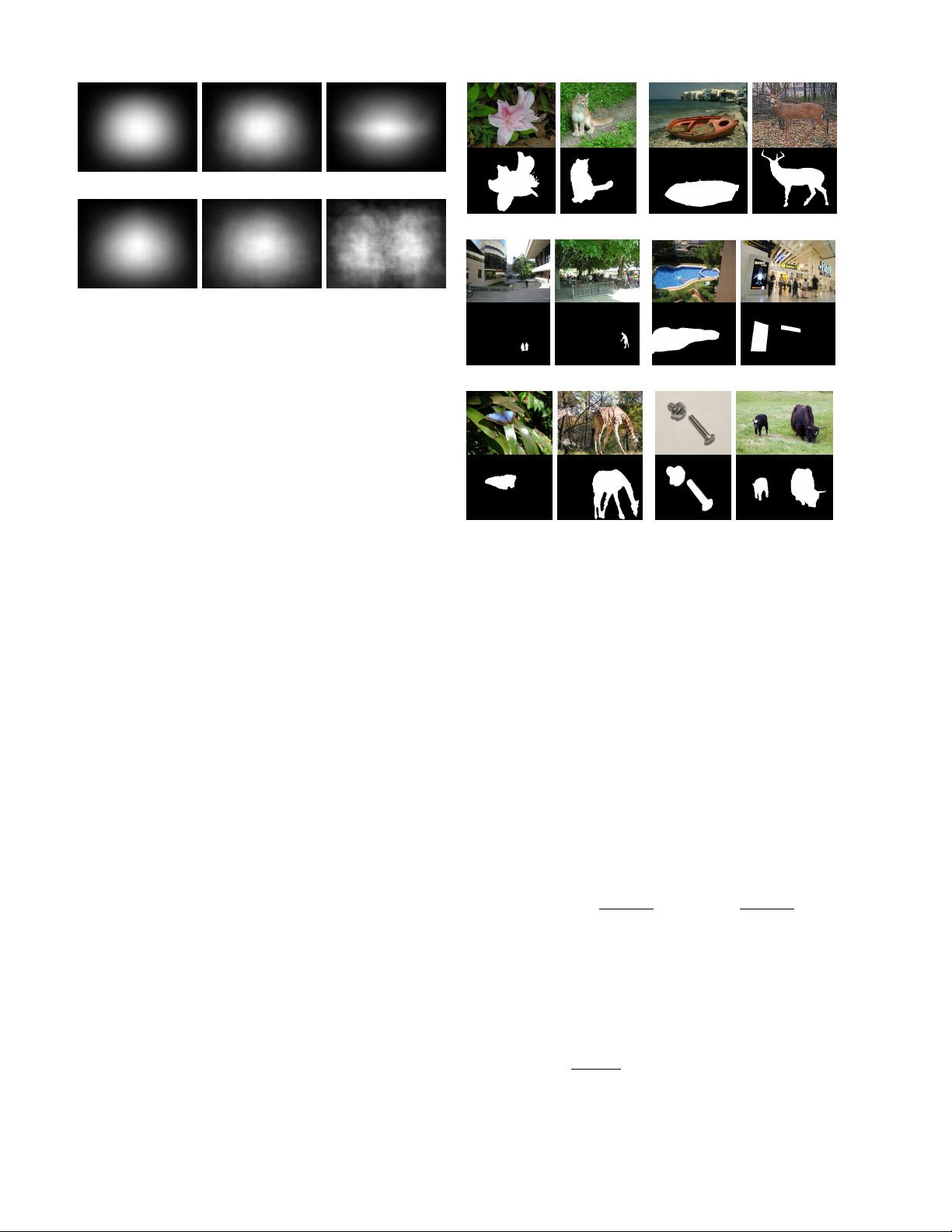

(a) MSRA10K (b) ECSSD

(c) JuddDB (d) DUT-OMRON

(e) THUR15K (f) SED2

Fig. 3. Images and pixel-level annotations from six salient object datasets.

saliency prediction, including the precision-recall (PR) and

the receiver operating characteristics (ROC). From these

two metrics, we also report the F-Measure, which jointly

considers recall and precision, and AUC, which is the area

under the ROC curve. Moreover, we also use the third

measure which directly computes the mean absolute error

(MAE) between the estimated saliency map and ground-

truth annotation. For the sake of simplification, we use S to

represent the predicted saliency map normalized to [0, 255]

and G to represent the ground-truth binary mask of salient

objects. For a binary mask, we use | · | to represent the

number of non-zero entries in the mask.
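The MAE measure named above can be sketched as the mean of |S(x, y) − G(x, y)| over all pixels. Rescaling S from [0, 255] to [0, 1] so that it is directly comparable with the binary mask G is an assumption of this sketch, as is the function name; the exact normalization is not given in this section.

```python
import numpy as np


def mean_absolute_error(s, g) -> float:
    """MAE between a saliency map S (values in [0, 255]) and a binary mask G.

    Assumption: S is divided by 255 so both maps lie in [0, 1];
    the score is then also in [0, 1], and lower is better.
    """
    s = np.asarray(s, dtype=np.float64) / 255.0
    g = (np.asarray(g) > 0).astype(np.float64)
    if s.shape != g.shape:
        raise ValueError("S and G must have the same shape")
    return float(np.mean(np.abs(s - g)))
```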

Precision-recall (PR). For a saliency map S, we can convert it to a binary mask M and compute Precision and Recall by comparing M with ground-truth G:

$$\mathrm{Precision} = \frac{|M \cap G|}{|M|}, \qquad \mathrm{Recall} = \frac{|M \cap G|}{|G|} \tag{1}$$
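Eq. (1) maps directly onto a few array operations once M and G are available as binary masks. In the minimal sketch below, returning 0 for an empty mask (instead of dividing by zero) is an assumption, and the function name is hypothetical.

```python
import numpy as np


def precision_recall(m, g):
    """Precision = |M ∩ G| / |M| and Recall = |M ∩ G| / |G|, as in Eq. (1)."""
    m = np.asarray(m) > 0
    g = np.asarray(g) > 0
    tp = np.logical_and(m, g).sum()  # |M ∩ G|
    n_m, n_g = m.sum(), g.sum()      # |M| and |G|
    # Assumption: an empty mask yields 0 rather than a division by zero.
    precision = float(tp / n_m) if n_m > 0 else 0.0
    recall = float(tp / n_g) if n_g > 0 else 0.0
    return precision, recall
```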

From this definition, we can see that the binarization of S is the key step in the evaluation. There are three popular ways to perform the binarization. In the first, Achanta et al. [18] proposed an image-dependent adaptive threshold for binarizing S, computed as twice the mean saliency of S:

$$T_a = \frac{2}{W \times H} \sum_{x=1}^{W} \sum_{y=1}^{H} S(x, y), \tag{2}$$

where W and H are the width and the height of the saliency

map S, respectively.
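Eq. (2) amounts to twice the mean of S. The sketch below computes T_a and the resulting mask M; treating pixels with S(x, y) ≥ T_a as salient is an assumption about the comparison operator, and both function names are hypothetical.

```python
import numpy as np


def adaptive_threshold(s) -> float:
    """T_a = 2 / (W * H) * sum_{x,y} S(x, y), i.e. twice the mean saliency (Eq. 2)."""
    return float(2.0 * np.asarray(s, dtype=np.float64).mean())


def binarize_adaptive(s) -> np.ndarray:
    """Binary mask M marking pixels whose saliency reaches T_a.

    Assumption: the comparison is S(x, y) >= T_a.
    """
    s = np.asarray(s, dtype=np.float64)
    return s >= adaptive_threshold(s)
```

The resulting mask can then be passed as M to Eq. (1).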

The second way to binarize S is to use a fixed threshold that varies from 0 to 255. At each threshold, a pair