
Full Proceedings of the 2016 USENIX FAST Storage Technology Conference

"FAST 2016 Storage Conference: Full Papers"

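FAST (Conference on File and Storage Technologies) is one of the top conferences in the storage field. It is organized by the USENIX Association with the support of ACM SIGOPS. The 2016 conference was held in Santa Clara, California, from February 22 to 25. This resource contains all of that year's conference papers, which cover a broad range of file and storage technology topics and present the latest advances and innovations in the field at that time.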

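Attendees included experts from academia and industry. They discussed key topics such as storage system design, performance optimization, data persistence, fault tolerance, storage security, flash memory, distributed storage, cloud storage, and emerging storage architectures. The papers reflect current research trends in storage and also give industry both theoretical and practical guidance.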

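The conference sponsors were one platinum sponsor plus gold, silver, and bronze sponsors, which shows how much the storage industry values and supports the event. Media sponsors and industry partners such as ACM Queue, ADMIN magazine, and Linux Pro Magazine also broadened the conference's reach through coverage and promotion, helping spread knowledge of storage technology.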

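FAST'16 participants could learn about the latest research results on topics including, but not limited to, the following:

1. **High-performance storage systems**: raising the throughput and I/O efficiency of storage systems and reducing latency to meet the demands of big data and cloud computing environments.

2. **New storage media**: flash and other non-volatile memory technologies, and how they change traditional storage architectures.

3. **Data protection and recovery**: backup strategies, failure recovery techniques, and data redundancy to ensure data integrity and availability.

4. **Storage security**: encryption, access control, and privacy protection in response to growing cybersecurity threats.

5. **Distributed storage**: the design and implementation of distributed file systems for large-scale data management and sharing.

6. **Energy efficiency**: energy-saving storage solutions that reduce data center power consumption.

7. **Software-defined storage**: configuring and managing storage resources flexibly through software.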

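These papers and discussions are valuable learning material for researchers, engineers, and system administrators. Readers can draw inspiration from them, improve existing systems, or develop new storage solutions. The full paper collection of FAST 2016 is an important resource for understanding the frontier of storage technology.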

USENIX Association 14th USENIX Conference on File and Storage Technologies (FAST ’16) 5

second pass replays the logical entries in the log. After the next checkpoint, the log is discarded, and the reference counts on all nodes referenced by the log are decremented. Any nodes whose reference count hits zero (i.e., because they are no longer referenced by other nodes in the tree) are garbage collected at that time.
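The checkpoint step above is ordinary reference counting. Below is a minimal sketch. It assumes hypothetical `Node` and `Tree` classes and models the log as the list of node IDs it references. It illustrates the idea and is not BetrFS code.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    node_id: int
    refcount: int  # references from other tree nodes plus references from the log


@dataclass
class Tree:
    nodes: Dict[int, Node] = field(default_factory=dict)
    log_refs: List[int] = field(default_factory=list)  # node IDs referenced by the log

    def checkpoint(self) -> List[int]:
        """Discard the log, drop the references it held, and collect dead nodes."""
        collected = []
        for node_id in self.log_refs:
            node = self.nodes[node_id]
            node.refcount -= 1
            if node.refcount == 0:
                # Neither the tree nor the (now discarded) log refers to this node.
                del self.nodes[node_id]
                collected.append(node_id)
        self.log_refs.clear()
        return collected


tree = Tree({1: Node(1, refcount=1), 2: Node(2, refcount=2)}, log_refs=[1, 2])
print(tree.checkpoint())  # node 1 was referenced only by the log
```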

Implementation. BetrFS 0.2 guarantees consistent recovery up until the last log flush or checkpoint. By default, a log flush is triggered on a sync operation, every second, or when the 32 MB log buffer fills up. Flushing a log buffer with unbound log entries also requires searching the in-memory tree nodes for nodes containing unbound messages, in order to first write these nodes to disk. Thus, BetrFS 0.2 also reserves enough space at the end of the log buffer for the binding log messages. In practice, the log-flushing interval is long enough that most unbound inserts are written to disk before the log flush, minimizing the delay for a log write.
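The three default triggers can be folded into a single predicate. This is a hedged sketch. The constants restate the defaults above, with 32 MB read as 32 MiB. The function name, its arguments, and the choice to treat the reserved binding space as unusable buffer capacity are assumptions for illustration.

```python
LOG_BUFFER_BYTES = 32 * 1024 * 1024  # default log buffer size (32 MB)
FLUSH_INTERVAL_S = 1.0               # default periodic flush interval (1 second)


def should_flush_log(sync_requested: bool, seconds_since_flush: float,
                     buffered_bytes: int, reserved_binding_bytes: int) -> bool:
    """Return True if any of the default log-flush triggers fires.

    reserved_binding_bytes models the space kept free at the end of the buffer
    for the binding log messages written when unbound inserts are flushed.
    """
    usable_bytes = LOG_BUFFER_BYTES - reserved_binding_bytes
    return (sync_requested
            or seconds_since_flush >= FLUSH_INTERVAL_S
            or buffered_bytes >= usable_bytes)
```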

Additional optimizations. Section 5 explains some optimizations where logically obviated operations can be discarded as part of flushing messages down one level of the tree. One example is when a key is inserted and then deleted; if the insert and delete are in the same message buffer, the insert can be dropped, rather than flushed to the next level. In the case of unbound inserts, we allow a delete to remove an unbound insert before the value is written to disk under the following conditions: (1) all transactions involving the unbound key-value pair have committed, (2) the delete transaction has committed, and (3) the log has not yet been flushed. If these conditions are met, the file system can be consistently recovered without this unbound value. In this situation, BetrFS 0.2 binds obviated inserts to a special NULL node, and drops the insert message from the Bε-tree.
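The three conditions for discarding an unbound insert can be written as one predicate. This sketch assumes hypothetical `UnboundInsert` and `Delete` records that carry commit status as booleans. A string `NULL_NODE` stands in for the special NULL node. A real implementation would consult transaction and log state directly.

```python
from dataclasses import dataclass
from typing import Dict

NULL_NODE = "NULL"  # hypothetical marker for the special NULL node


@dataclass
class UnboundInsert:
    key: str
    all_txns_committed: bool  # every transaction touching this key-value pair committed


@dataclass
class Delete:
    key: str
    committed: bool           # the delete's own transaction committed


def can_drop_unbound_insert(ins: UnboundInsert, delete: Delete,
                            log_flushed: bool) -> bool:
    """Check conditions (1)-(3) for removing an unbound insert before it is written."""
    return (ins.key == delete.key
            and ins.all_txns_committed   # condition (1)
            and delete.committed         # condition (2)
            and not log_flushed)         # condition (3)


def bind_obviated_insert(ins: UnboundInsert, delete: Delete, log_flushed: bool,
                         bindings: Dict[str, str]) -> bool:
    """Bind an obviated insert to NULL_NODE; True means its message can be dropped."""
    if can_drop_unbound_insert(ins, delete, log_flushed):
        bindings[ins.key] = NULL_NODE
        return True
    return False
```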

4 Balancing Search and Rename

In this section, we argue that there is a design trade-off between the performance of renames and recursive directory scans. We present an algorithmic framework for picking a point along this trade-off curve.

Conventional file systems support fast renames at the expense of slow recursive directory traversals. Each file and directory is assigned its own inode, and names in a directory are commonly mapped to inodes with pointers. Renaming a file or directory can be very efficient, requiring only creation and deletion of a pointer to an inode, and a constant number of I/Os. However, searching files or subdirectories within a directory requires traversing all these pointers. When the inodes under a directory are not stored together on disk, for instance because of renames, then each pointer traversal can require a disk seek, severely limiting the speed of the traversal.

BetrFS 0.1 and TokuFS are at the other extreme. They index every directory, file, and file block by its full path in the file system. The sort order on paths guarantees that all the entries beneath a directory are stored contiguously in logical order within nodes of the Bε-tree, enabling fast scans over entire subtrees of the directory hierarchy. Renaming a file or directory, however, requires physically moving every file, directory, and block to a new location.

This trade-off is common in file system design. Intermediate points between these extremes are possible, such as embedding inodes in directories but not moving data blocks of renamed files. Fast directory traversals require on-disk locality, whereas renames must issue only a small number of I/Os to be fast.

BetrFS 0.2’s schema makes this trade-off parameterizable and tunable by partitioning the directory hierarchy into connected regions, which we call zones. Figure 2a shows how files and directories within subtrees are collected into zones in BetrFS 0.2. Each zone has a unique zone-ID, which is analogous to an inode number in a traditional file system. Each zone either contains a single file or has a single root directory, which we call the root of the zone. Files and directories are identified by their zone-ID and their relative path within the zone.

Directories and files within a zone are stored together, enabling fast scans within that zone. Crossing a zone boundary potentially requires a seek to a different part of the tree. Renaming a file under a zone root moves the data, whereas renaming a large file or directory (a zone root) requires only changing a pointer.

Zoning supports a spectrum of trade-off points between the two extremes described above. When zones are restricted to size 1, the BetrFS 0.2 schema is equivalent to an inode-based schema. If we set the zone size bound to infinity (∞), then BetrFS 0.2’s schema is equivalent to BetrFS 0.1’s schema. At an intermediate setting, BetrFS 0.2 can balance the performance of directory scans and renames.

The default zone size in BetrFS 0.2 is 512 KiB. Intuitively, moving a very small file is sufficiently inexpensive that indirection would save little, especially in a write-optimized dictionary (WOD). At the other extreme, once a file system is reading several MB between each seek, the dominant cost is transfer time, not seeking. Thus, one would expect the best zone size to be between tens of KB and a few MB. We also note that this trade-off is somewhat implementation-dependent: the more efficiently a file system can move a set of keys and values, the larger a zone can be without harming rename performance. Section 7 empirically evaluates these trade-offs.
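A back-of-the-envelope model makes the "tens of KB to a few MB" estimate concrete. The seek cost (8 ms) and bandwidth (100 MiB/s) below are assumed round numbers for a 7200 RPM disk. They are not values measured in this paper.

```python
SEEK_S = 0.008               # assumed seek plus rotational delay (8 ms)
BANDWIDTH_BPS = 100 * 2**20  # assumed sequential bandwidth (100 MiB/s)


def seek_fraction(bytes_per_seek: int) -> float:
    """Fraction of scan time spent seeking when each seek is followed by a
    sequential transfer of bytes_per_seek bytes."""
    transfer_s = bytes_per_seek / BANDWIDTH_BPS
    return SEEK_S / (SEEK_S + transfer_s)


for kib in (4, 64, 512, 4096):
    print(f"{kib:>5} KiB per seek: {seek_fraction(kib * 2**10):.0%} of time seeking")
```

Under these assumptions, about 62% of scan time is still spent seeking with 512 KiB read per seek. The share drops below 20% at 4 MiB, which is consistent with transfer time dominating once several MB are read per seek.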

As an effect of zoning, BetrFS 0.2 supports hard links by placing a file with more than one link into its own zone.

[Figure 2a diagram: a zone tree in which Zone 0 is rooted at “/” and holds “/local”, “/local/bin”, “/local/bin/ed”, “/local/bin/vi”, and “/src”; Zone 1 is rooted at “/docs” and holds “a.tex” and “b.tex”; Zone 2 holds the file “/src/abc.c”.]

(a) An example zone tree in BetrFS 0.2.

Metadata Index

| Key | Value |
| --- | --- |
| (0, “/”) | stat info for “/” |
| (0, “/docs”) | zone 1 |
| (0, “/local”) | stat info for “/local” |
| (0, “/src”) | stat info for “/src” |
| (0, “/local/bin”) | stat info for “/local/bin” |
| (0, “/local/bin/ed”) | stat info for “/local/bin/ed” |
| (0, “/local/bin/vi”) | stat info for “/local/bin/vi” |
| (0, “/src/abc.c”) | zone 2 |
| (1, “/”) | stat info for “/docs” |
| (1, “/a.tex”) | stat info for “/docs/a.tex” |
| (1, “/b.tex”) | stat info for “/docs/b.tex” |
| (2, “/”) | stat info for “/src/abc.c” |

Data Index

| Key | Value |
| --- | --- |
| (0, “/local/bin/ed”, i) | block i of “/local/bin/ed” |
| (0, “/local/bin/vi”, i) | block i of “/local/bin/vi” |
| (1, “/a.tex”, i) | block i of “/docs/a.tex” |
| (1, “/b.tex”, i) | block i of “/docs/b.tex” |
| (2, “/”, i) | block i of “/src/abc.c” |

(b) Example metadata and data indices in BetrFS 0.2.

Figure 2: Pictorial and schema illustrations of zone trees in BetrFS 0.2.

Metadata and data indexes. The BetrFS 0.2 metadata index maps (zone-ID, relative-path) keys to metadata about a file or directory, as shown in Figure 2b. For a file or directory in the same zone, the metadata includes the typical contents of a stat structure, such as owner, modification time, and permissions. For instance, in zone 0, path “/local” maps onto the stat info for this directory. If this key (i.e., relative path within the zone) maps onto a different zone, then the metadata index maps onto the ID of that zone. For instance, in zone 0, path “/docs” maps onto zone-ID 1, which is the root of that zone.

The data index maps (zone-ID, relative-path, block-number) to the content of the specified file block.
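To show how the two indexes work together, the sketch below resolves an absolute path to its metadata key. It walks the Figure 2b metadata index and follows zone pointers into child zones. The tuple encoding `("zone", id)` for zone pointers, the string stand-ins for stat info, and the helper names `resolve` and `data_key` are assumptions for illustration.

```python
from typing import Dict, Tuple, Union

ZoneRef = Tuple[str, int]  # ("zone", zone_id): hypothetical encoding of a zone pointer

# Metadata index from Figure 2b: (zone_id, relative_path) -> stat info or zone pointer.
METADATA: Dict[Tuple[int, str], Union[str, ZoneRef]] = {
    (0, "/"): "stat /", (0, "/docs"): ("zone", 1), (0, "/local"): "stat /local",
    (0, "/src"): "stat /src", (0, "/local/bin"): "stat /local/bin",
    (0, "/local/bin/ed"): "stat /local/bin/ed",
    (0, "/local/bin/vi"): "stat /local/bin/vi", (0, "/src/abc.c"): ("zone", 2),
    (1, "/"): "stat /docs", (1, "/a.tex"): "stat /docs/a.tex",
    (1, "/b.tex"): "stat /docs/b.tex", (2, "/"): "stat /src/abc.c",
}


def resolve(path: str) -> Tuple[int, str]:
    """Map an absolute path to the (zone_id, relative_path) key of its metadata."""
    zone, rel = 0, "/"
    for component in (c for c in path.split("/") if c):
        rel = rel.rstrip("/") + "/" + component
        value = METADATA[(zone, rel)]  # raises KeyError if the path does not exist
        if isinstance(value, tuple):
            zone, rel = value[1], "/"  # cross into the child zone at its root
    return zone, rel


def data_key(path: str, block: int) -> Tuple[int, str, int]:
    """Data-index key for block number `block` of the file at `path`."""
    zone, rel = resolve(path)
    return zone, rel, block
```

For example, `resolve("/docs/a.tex")` crosses the zone pointer stored at (0, “/docs”) and returns (1, "/a.tex"). `data_key("/src/abc.c", 3)` yields (2, "/", 3), which matches the shape of the last data-index row in Figure 2b.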

Path sorting order. BetrFS 0.2 sorts keys by zone-ID first, and then by their relative path. Since all the items in a zone will be stored consecutively in this sort order, recursive directory scans can visit all the entries within a zone efficiently. Within a zone, entries are sorted by path in a “depth-first-with-children” order, as illustrated in Figure 2b. This sort order ensures that all the entries beneath a directory are stored logically contiguously in the underlying key-value store, followed by recursive listings of the subdirectories of that directory. Thus an application that performs readdir on a directory and then recursively scans its sub-directories in the order returned by readdir will effectively perform range queries on that zone and each of the zones beneath it.
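One way to get a "depth-first-with-children" order is to compare keys by zone-ID, then by the tuple of the parent directory's path components, then by the entry's own name. BetrFS defines its own comparator over encoded keys. This tuple-based key function is an illustrative assumption, chosen because it reproduces the metadata-index order of Figure 2b.

```python
from typing import Tuple


def sort_key(zone_id: int, rel_path: str) -> Tuple[int, Tuple[str, ...], str]:
    """Sort key: zone-ID first, then parent directory components, then name.

    The zone root "/" gets an empty parent and an empty name, so it sorts first.
    Comparing parents as tuples keeps every key under a directory contiguous,
    with a directory's children listed before its subdirectories' contents.
    """
    parts = tuple(p for p in rel_path.split("/") if p)
    if not parts:
        return zone_id, (), ""
    return zone_id, parts[:-1], parts[-1]


keys = [(0, "/local/bin/vi"), (0, "/src/abc.c"), (0, "/"), (1, "/a.tex"),
        (0, "/local/bin"), (0, "/docs"), (0, "/src"), (0, "/local/bin/ed"),
        (0, "/local"), (1, "/")]
for zone_id, path in sorted(keys, key=lambda k: sort_key(*k)):
    print(zone_id, path)
```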

Rename. Renaming a file or directory that is the root of its zone requires simply inserting a reference to its zone at its new location and deleting the old reference. So, for example, renaming “/src/abc.c” to “/docs/def.c” in Figure 2 requires deleting key (0, “/src/abc.c”) from the metadata index and inserting the mapping (1, “/def.c”) → Zone 2.

Renaming a file or directory that is not the root of its zone requires copying the contents of that file or directory to its new location. So, for example, renaming “/local/bin” to “/docs/tools” requires (1) deleting all the keys of the form (0, “/local/bin/p”) in the metadata index, (2) reinserting them as keys of the form (1, “/tools/p”), (3) deleting all keys of the form (0, “/local/bin/p”, i) from the data index, and (4) reinserting them as keys of the form (1, “/tools/p”, i). Note that renaming a directory never requires recursively moving into a child zone. Thus, by bounding the size of the directory subtree within a single zone, we also bound the amount of work required to perform a rename.
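The two rename cases can be modeled over plain dictionaries. This simplified sketch assumes the metadata index maps (zone, path) keys to stat stand-ins or `("zone", id)` pointers, and that the data index uses (zone, path, block) keys as in Figure 2b. The function names are hypothetical. The real system issues Bε-tree messages rather than rewriting a dict.

```python
from typing import Dict, Optional, Tuple

MetaKey = Tuple[int, str]        # (zone_id, relative_path)
DataKey = Tuple[int, str, int]   # (zone_id, relative_path, block_number)


def rename_zone_root(meta: Dict[MetaKey, object], old: MetaKey, new: MetaKey) -> None:
    """Zone root: delete the old pointer and insert it at the new location."""
    meta[new] = meta.pop(old)


def _rewrite(path: str, old: str, new: str) -> Optional[str]:
    """Rewrite path from prefix `old` to prefix `new`, or None if not under `old`."""
    if path == old:
        return new
    if path.startswith(old + "/"):
        return new + path[len(old):]
    return None


def rename_within_zone(meta: Dict[MetaKey, object], data: Dict[DataKey, bytes],
                       old: MetaKey, new: MetaKey) -> int:
    """Non-root: delete and reinsert every metadata and data key under `old`.

    Pointers to child zones are moved like any other metadata entry, so the
    child zones' own keys are never touched. Returns the number of keys moved.
    """
    (old_zone, old_path), (new_zone, new_path) = old, new
    moved = 0
    for zone, path in [k for k in meta if k[0] == old_zone]:
        target = _rewrite(path, old_path, new_path)
        if target is not None:
            meta[(new_zone, target)] = meta.pop((zone, path))
            moved += 1
    for zone, path, block in [k for k in data if k[0] == old_zone]:
        target = _rewrite(path, old_path, new_path)
        if target is not None:
            data[(new_zone, target, block)] = data.pop((zone, path, block))
            moved += 1
    return moved
```

The returned count makes the cost difference visible. A zone-root rename touches one key. A non-root rename touches every key beneath the renamed entry within its zone, which the zone size bounds.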

Splitting and merging. To maintain a consistent rename and scan performance trade-off throughout the system’s lifetime, zones must be split and merged so that the following two invariants are upheld:

ZoneMin: Each zone has size at least $C_0$.

ZoneMax: Each directory that is not the root of its zone has size at most $C_1$.

The ZoneMin invariant ensures that recursive directory traversals will be able to scan through at least $C_0$ consecutive bytes in the key-value store before initiating a scan of another zone, which may require a disk seek. The ZoneMax invariant ensures that no directory rename will require moving more than $C_1$ bytes.

The BetrFS 0.2 design upholds these invariants as follows. Each inode maintains two counters to record the number of data and metadata entries in its subtree. Whenever a data or metadata entry is added or removed, BetrFS 0.2 recursively updates counters from the corresponding file or directory up to its zone root. If either of a file or directory’s counters exceeds $C_1$, BetrFS 0.2 creates a new zone for the entries in that file or directory. When a zone’s size falls below $C_0$, that zone is merged with its parent. BetrFS 0.2 avoids cascading splits and merges by merging a zone with its parent only when doing so would not cause the parent to split. To avoid unnecessary merges during a large directory deletion, BetrFS 0.2 defers merging until writing back dirty inodes.
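The split and merge rules can be sketched over an in-memory inode tree. This is a simplification under stated assumptions. Each hypothetical `Inode` keeps a single byte counter instead of separate data and metadata entry counters. A split happens at the deepest non-root inode that exceeds $C_1$. `try_merge` is meant to be called lazily, for example when dirty inodes are written back.

```python
from dataclasses import dataclass
from typing import Optional

C0 = C1 = 512 * 1024  # bytes; the text gives C0 = C1 = 512 KiB


@dataclass
class Inode:
    name: str
    parent: Optional["Inode"] = None
    size: int = 0           # bytes in this subtree that belong to this inode's zone
    zone_root: bool = False


def _add_up_to_zone_root(node: Inode, delta: int) -> None:
    """Apply delta to node and each ancestor, stopping at the zone root."""
    while True:
        node.size += delta
        if node.zone_root:
            return
        node = node.parent


def account(node: Inode, delta: int) -> None:
    """Record delta bytes added (or removed, if negative) at node; uphold ZoneMax."""
    _add_up_to_zone_root(node, delta)
    cur = node
    while not cur.zone_root:
        if cur.size > C1:  # ZoneMax violated: make cur the root of a new zone
            cur.zone_root = True
            _add_up_to_zone_root(cur.parent, -cur.size)
            return
        cur = cur.parent


def try_merge(root: Inode) -> bool:
    """Uphold ZoneMin by merging an undersized zone into its parent's zone,
    unless that would push some non-root ancestor over C1 (a cascading split)."""
    if root.parent is None or root.size >= C0:
        return False
    cur = root.parent
    while not cur.zone_root:
        if cur.size + root.size > C1:
            return False
        cur = cur.parent
    root.zone_root = False
    _add_up_to_zone_root(root.parent, root.size)
    return True
```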

We can tune the trade-off between rename and directory traversal performance by adjusting $C_0$ and $C_1$. A larger $C_0$ will improve recursive directory traversals. However, increasing $C_0$ beyond the block size of the underlying data structure will have diminishing returns, since the system will have to seek from block to block during the scan of a single zone. A smaller $C_1$ will improve rename performance. All objects larger than $C_1$ can be renamed in a constant number of I/Os, and the worst-case rename requires moving only $C_1$ bytes. In the current implementation, $C_0 = C_1 = 512$ KiB.

The zone schema enables BetrFS 0.2 to support a spectrum of trade-offs between rename performance and directory traversal performance. We explore these trade-offs empirically in Section 7.

5 Efficient Range Deletion

This section explains how BetrFS 0.2 obtains nearly flat deletion times by introducing a new rangecast message type to the Bε-tree, and implementing several Bε-tree-internal optimizations using this new message type.

BetrFS 0.1 file and directory deletion performance is linear in the amount of data being deleted. Although this is true to some extent in any file system, as the freed disk space will be linear in the file size, the slope for BetrFS 0.1 is alarming. For instance, unlinking a 4GB file takes 5 minutes on BetrFS 0.1!

Two underlying issues are the sheer volume of delete messages that must be inserted into the Bε-tree and missed optimizations in the Bε-tree implementation. Because the Bε-tree implementation does not bake in any semantics about the schema, the Bε-tree cannot infer that two keys are adjacent in the keyspace. Without hints from the file system, a Bε-tree cannot optimize for the common case of deleting large, contiguous key ranges.

5.1 Rangecast Messages

In order to support deletion of a key range in a single message, we added a rangecast message type to the Bε-tree implementation. In the baseline Bε-tree implementation, updates of various forms (e.g., insert and delete) are encoded as messages addressed to a single key, which, as explained in §2, are flushed down the path from root to leaf. A rangecast message can be addressed to a contiguous range of keys, specified by the beginning and ending keys, inclusive. These beginning and ending keys need not exist, and the range can be sparse; the message will be applied to any keys in the range that do exist. We have currently added rangecast delete messages, but we can envision range insert and upsert [12] being useful.
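Because the endpoints of a rangecast delete need not exist, applying one to a leaf amounts to two binary searches. The sketch below models a leaf as parallel sorted lists and uses Python's standard `bisect` module. The `RangeDelete` type and `apply_to_leaf` function are hypothetical names.

```python
import bisect
from dataclasses import dataclass
from typing import Any, List


@dataclass(frozen=True)
class RangeDelete:
    begin: Any  # inclusive; need not exist in the tree
    end: Any    # inclusive; need not exist in the tree


def apply_to_leaf(keys: List[Any], values: List[Any], msg: RangeDelete) -> int:
    """Remove every existing key in [msg.begin, msg.end] from a sorted leaf.

    Returns the number of key/value pairs removed, which may be all of them.
    """
    lo = bisect.bisect_left(keys, msg.begin)
    hi = bisect.bisect_right(keys, msg.end)
    del keys[lo:hi]
    del values[lo:hi]
    return hi - lo
```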

Rangecast message propagation. When single-key messages are propagated from a parent to a child, they are simply inserted into the child’s buffer space in logical order (or in key order when applied to a leaf). Rangecast message propagation is similar to regular message propagation, with two differences.

First, rangecast messages may be applied to multiple children at different times. When a rangecast message is flushed to a child, the propagation function must check whether the range spans multiple children. If so, the rangecast message is transparently split and copied for each child, with appropriate subsets of the original range. If a rangecast message covers multiple children of a node, the rangecast message can be split and applied to each child at different points in time, most commonly deferring until there are enough messages for that child to amortize the flushing cost. As messages propagate down the tree, they are stored and applied to leaves in the same commit order. Thus, any updates to a key or reinsertions of a deleted key maintain a global serial order, even if a rangecast spans multiple nodes.

Second, when a rangecast delete is flushed to a leaf, it may remove multiple key/value pairs, or even an entire leaf. Because unlink uses rangecast delete, all of the data blocks for a file are freed atomically with respect to a crash.
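The splitting step from the first difference can be sketched with pivot keys. The sketch makes two assumptions. First, child i holds keys k with pivots[i-1] <= k < pivots[i]. Second, keys are integers, so the largest key below a pivot p is p - 1 (real keys are byte strings). Deferral is modeled by appending each clipped copy to a per-child buffer that can be flushed later. Each copy keeps its original commit sequence number. The function names are hypothetical.

```python
import bisect
from typing import Dict, List, Tuple


def split_rangecast(begin: int, end: int, pivots: List[int]) -> Dict[int, Tuple[int, int]]:
    """Clip the inclusive range [begin, end] to each child it overlaps.

    Child i holds keys k with pivots[i-1] <= k < pivots[i]; child 0 has no lower
    bound and the last child has no upper bound.
    """
    first = bisect.bisect_right(pivots, begin)
    last = bisect.bisect_right(pivots, end)
    parts = {}
    for child in range(first, last + 1):
        lo = begin if child == first else pivots[child - 1]
        hi = end if child == last else pivots[child] - 1
        parts[child] = (lo, hi)
    return parts


def flush_rangecast(seq: int, begin: int, end: int, pivots: List[int],
                    child_buffers: List[List[tuple]]) -> None:
    """Append one copy per overlapped child, each keeping the original commit
    sequence number so every child applies it in the same global order."""
    for child, (lo, hi) in split_rangecast(begin, end, pivots).items():
        child_buffers[child].append((seq, "range_delete", lo, hi))
```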

Query. A Bε-tree query must apply all pending modifications in node buffers to the relevant key(s). Applying these modifications is efficient because all relevant messages will be in a node’s buffer on the root-to-leaf search path. Rangecast messages maintain this invariant.

Each Bε-tree node maintains a FIFO queue of pending messages, and, for single-key messages, a balanced binary tree sorted by the messages’ keys. For rangecast messages, our current prototype checks a simple list of rangecast messages and interleaves the messages with single-key messages based on commit order. This search takes time linear in the number of rangecast messages. A faster implementation would store the rangecast messages in each node using an interval tree, enabling it to find all the rangecast messages relevant to a query in $O(k + \log n)$ time, where $n$ is the number of rangecast messages in the node and $k$ is the number of those messages relevant to the current query.
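A point query that follows the linear-scan approach described above might look like the sketch below. It assumes a hypothetical `NodeBuffer` in which a dict stands in for the balanced binary tree of single-key messages. Each message carries a global commit sequence number, so interleaving by commit order becomes a sort. An interval tree would replace the list comprehension over `rangecasts`.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional, Tuple


@dataclass
class NodeBuffer:
    # Single-key messages: key -> [(seq, op, value)], op in {"insert", "delete"}.
    # (A dict stands in for the balanced binary tree keyed by message key.)
    single: Dict[Any, List[Tuple[int, str, Any]]] = field(default_factory=dict)
    # Rangecast deletes as (seq, begin, end), inclusive, kept in a plain list.
    rangecasts: List[Tuple[int, Any, Any]] = field(default_factory=list)


def query(key: Any, path: List[NodeBuffer], leaf: Dict[Any, Any]) -> Optional[Any]:
    """Return key's value after applying pending messages on the root-to-leaf path."""
    pending = []
    for node in path:
        pending.extend(node.single.get(key, []))
        # Linear scan over the node's rangecasts, as in the prototype.
        pending.extend((seq, "delete", None)
                       for seq, lo, hi in node.rangecasts if lo <= key <= hi)
    value = leaf.get(key)
    for _, op, new_value in sorted(pending, key=lambda m: m[0]):  # commit order
        value = new_value if op == "insert" else None
    return value
```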


Rangecast unlink and truncate. In the BetrFS 0.2 schema, 4KB data blocks are keyed by a concatenated tuple of zone ID, relative path, and block number. Unlinking a file involves one delete message to remove the file from the metadata index and, in the same Bε-tree-level transaction, a rangecast delete to remove all of the blocks. Deleting all data blocks in a file is simply encoded by using the same prefix, with a block range from 0 to infinity. Truncating a file works the same way, but can start with a block number other than zero, and does not remove the metadata key.
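Encoding unlink and truncate as messages can be sketched with the key tuple (zone-ID, relative path, block number) from the schema above. `math.inf` stands in for the "infinity" block number. The message tuples and function names are hypothetical. The last line counts how many per-block deletes a 4 GiB unlink would need without rangecast.

```python
import math
from typing import List

BLOCK_SIZE = 4096  # 4 KB data blocks, as in the schema above


def unlink_messages(zone: int, path: str) -> List[tuple]:
    """One metadata delete plus one rangecast over blocks 0..infinity (one transaction)."""
    return [
        ("delete", (zone, path)),
        ("range_delete", (zone, path, 0), (zone, path, math.inf)),
    ]


def truncate_messages(zone: int, path: str, new_size: int) -> List[tuple]:
    """Delete every block that starts at or beyond new_size; the metadata key is kept.

    Zeroing the tail of a partially kept last block is not modeled here.
    """
    first_dropped = -(-new_size // BLOCK_SIZE)  # ceiling division
    return [("range_delete", (zone, path, first_dropped), (zone, path, math.inf))]


# Without rangecast, unlinking a 4 GiB file needs one delete per 4 KiB block:
per_block_deletes = 4 * 2**30 // BLOCK_SIZE  # 1048576 messages instead of 2
```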

5.2 Bε-Tree-Internal Optimizations

The ability to group a large range of deletion messages not only reduces the total number of delete messages required to remove a file, but also creates new opportunities for Bε-tree-internal optimizations.

Leaf Pruning. When a Bε-tree flushes data from one level to the next, it must first read the child, merge the incoming data, and rewrite the child. In the case of a large, sequential write, a large range of obviated data may be read from disk, only to be overwritten. In the case of BetrFS 0.1, unnecessary reads make overwriting a 10 GB file 30–63 MB/s slower than the first write of the file.

The leaf pruning optimization identifies when an entire leaf is obviated by a range delete, and elides reading the leaf from disk. When a large range of consecutive keys and values is inserted, such as when overwriting a large file region, BetrFS 0.2 includes a range delete for the key range in the same transaction. This range delete message is necessary because the Bε-tree cannot infer that the inserted keys are contiguous; the range delete communicates information about the keyspace. On flushing messages to a child, the Bε-tree can detect when a range delete encompasses the child’s keyspace. BetrFS 0.2 uses transactions inside the Bε-tree implementation to ensure that the removal and overwrite are atomic: at no point can a crash lose both the old and new contents of the modified blocks. Stale leaf nodes are reclaimed as part of normal Bε-tree garbage collection.

Thus, this leaf pruning optimization avoids expensive reads when a large file is being overwritten. This optimization is both essential to sequential I/O performance and possible only with rangecast delete.
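The heart of leaf pruning is an interval-containment test performed before reading the child. This is a sketch with hypothetical names. It assumes inclusive key bounds for the child and that every range delete precedes the inserts in commit order, as in an overwrite transaction. `read_child` stands in for the disk read that pruning avoids.

```python
from typing import Any, Callable, Dict, List, Tuple


def covers_child(child_lo: Any, child_hi: Any,
                 range_deletes: List[Tuple[Any, Any]]) -> bool:
    """True if some inclusive range delete [b, e] spans the child's whole key
    interval [child_lo, child_hi]."""
    return any(b <= child_lo and child_hi <= e for b, e in range_deletes)


def flush_to_child(child_lo: Any, child_hi: Any,
                   range_deletes: List[Tuple[Any, Any]], inserts: Dict[Any, Any],
                   read_child: Callable[[], Dict[Any, Any]]) -> Dict[Any, Any]:
    """Build the child's new contents, reading the old child only if needed.

    Assumes every range delete precedes the inserts in commit order.
    """
    if covers_child(child_lo, child_hi, range_deletes):
        contents: Dict[Any, Any] = {}  # leaf pruning: old leaf is obviated, skip the read
    else:
        contents = dict(read_child())  # normal flush: read the child, then merge
        for b, e in range_deletes:
            for k in [k for k in contents if b <= k <= e]:
                del contents[k]
    contents.update(inserts)
    return contents
```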

Pac-Man. A rangecast delete can also obviate a significant number of buffered messages. For instance, if a user creates a large file and immediately deletes the file, the Bε-tree may include many obviated insert messages that are no longer profitable to propagate to the leaves.

BetrFS 0.2 adds an optimization to message flushing, where a rangecast delete message can devour obviated messages ahead of it in the commit sequence. We call this optimization “Pac-Man”, in homage to the arcade game character known for devouring ghosts. This optimization further reduces background work in the tree, eliminating “dead” messages before they reach a leaf.
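Pac-Man can be sketched as a filter over a buffer held in commit order. When a rangecast delete is seen, earlier single-key messages whose keys fall in its range are discarded. The message layout is an assumption for illustration, and the sketch ignores transaction state. The range delete itself is kept, because it must still remove older values stored in the leaves.

```python
from typing import List, Tuple

Message = Tuple[int, str, object, object]  # (seq, op, key or (begin, end), value)


def pac_man(buffer: List[Message]) -> List[Message]:
    """Drop single-key messages devoured by a later rangecast delete.

    buffer is in commit order; op is "insert", "delete", or "range_delete",
    and a range_delete carries an inclusive (begin, end) pair instead of a key.
    """
    kept: List[Message] = []
    for msg in buffer:
        if msg[1] == "range_delete":
            lo, hi = msg[2]
            # Earlier messages for keys in [lo, hi] can never become visible.
            kept = [m for m in kept
                    if m[1] == "range_delete" or not lo <= m[2] <= hi]
        kept.append(msg)  # the range delete itself must still reach the leaves
    return kept
```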

6 Optimized Stacking

BetrFS has a stacked file system design [12]; Bε-tree nodes and the journal are stored as files on an ext4 file system. BetrFS 0.2 corrects two points where BetrFS 0.1 was using the underlying ext4 file system suboptimally.

First, in order to ensure that nodes are physically placed together, TokuDB writes zeros into the node files to force space allocation in larger extents. For sequential writes to a new FS, BetrFS 0.1 zeros these nodes and then immediately overwrites the nodes with file contents, wasting up to a third of the disk’s bandwidth. We replaced this with the newer fallocate API, which can physically allocate space but logically zero the contents.

Second, the I/O to flush the BetrFS journal file was being amplified by the ext4 journal. Each BetrFS log flush appended to a file on ext4, which required updating the file size and allocation. BetrFS 0.2 reduces this overhead by pre-allocating space for the journal file and using fdatasync.
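The same pattern can be illustrated from Python on Linux. `os.posix_fallocate` reserves space without writing zeros from user space; glibc implements it with the `fallocate` system call where the file system supports it. `os.fdatasync` flushes file data without forcing unrelated metadata such as timestamps. Records are written in place with `os.pwrite` inside the preallocated region, so the file size never changes. This is a sketch of the pattern, not BetrFS code. The 1 MiB journal size and the function names are illustrative, and the calls are Unix-only.

```python
import os
import tempfile

JOURNAL_BYTES = 1 << 20  # illustrative 1 MiB journal


def open_preallocated_journal(path: str) -> int:
    """Create a journal file whose space is reserved up front."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    os.posix_fallocate(fd, 0, JOURNAL_BYTES)  # allocated; reads back as zeros
    return fd


def log_flush(fd: int, offset: int, record: bytes) -> int:
    """Write a record in place inside the preallocated region, then sync data.

    The file size does not change, so fdatasync has no size update to persist.
    """
    if offset + len(record) > JOURNAL_BYTES:
        raise ValueError("journal full")
    os.pwrite(fd, record, offset)
    os.fdatasync(fd)
    return offset + len(record)


with tempfile.TemporaryDirectory() as d:
    fd = open_preallocated_journal(os.path.join(d, "journal"))
    end = log_flush(fd, 0, b"txn-1;")
    print(os.fstat(fd).st_size, end)  # size stays at JOURNAL_BYTES
    os.close(fd)
```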

7 Evaluation

Our evaluation targets the following questions:

• How does one choose the zone size?

• Does BetrFS 0.2 perform comparably to other file systems on the worst cases for BetrFS 0.1?

• Does BetrFS 0.2 perform comparably to BetrFS 0.1 on the best cases for BetrFS 0.1?

• How do BetrFS 0.2 optimizations impact application performance? Is this performance comparable to other file systems, and as good as or better than BetrFS 0.1?

• What are the costs of background work in BetrFS 0.2?

All experimental results were collected on a Dell Opti-

plex 790 with a 4-core 3.40 GHz Intel Core i7 CPU, 4

GB RAM, and a 500 GB, 7200 RPM ATA disk, with

a 4096-byte block size. Each file system’s block size

is 4096 bytes. The system ran Ubuntu 13.10, 64-bit,

with Linux kernel version 3.11.10. Each experiment is

compared with several file systems, including BetrFS

0.1 [12], btrfs [26], ext4 [20], XFS [31], and zfs [4].

We use the versions of XFS, btrfs, ext4 that are part

of the 3.11.10 kernel, and zfs 0.6.3, downloaded from

www.zfsonlinux.org. The disk was divided into 2 par-

titions roughly 240 GB each; one for the root FS and the

other for experiments. We use default recommended file

system settings unless otherwise noted. Lazy inode table

and journal initialization were turned off on ext4. Each

8

USENIX Association 14th USENIX Conference on File and Storage Technologies (FAST ’16) 9

40

80

120

160

4KiB

8KiB

16KiB

32KiB

64KiB

128KiB

256KiB

512KiB

1MiB

2MiB

4MiB

File Size (KiB) − log scale

Time (ms)

BetrFS_0.2 (0)

BetrFS_0.2 (∞)

Single File Rename (warm cache)

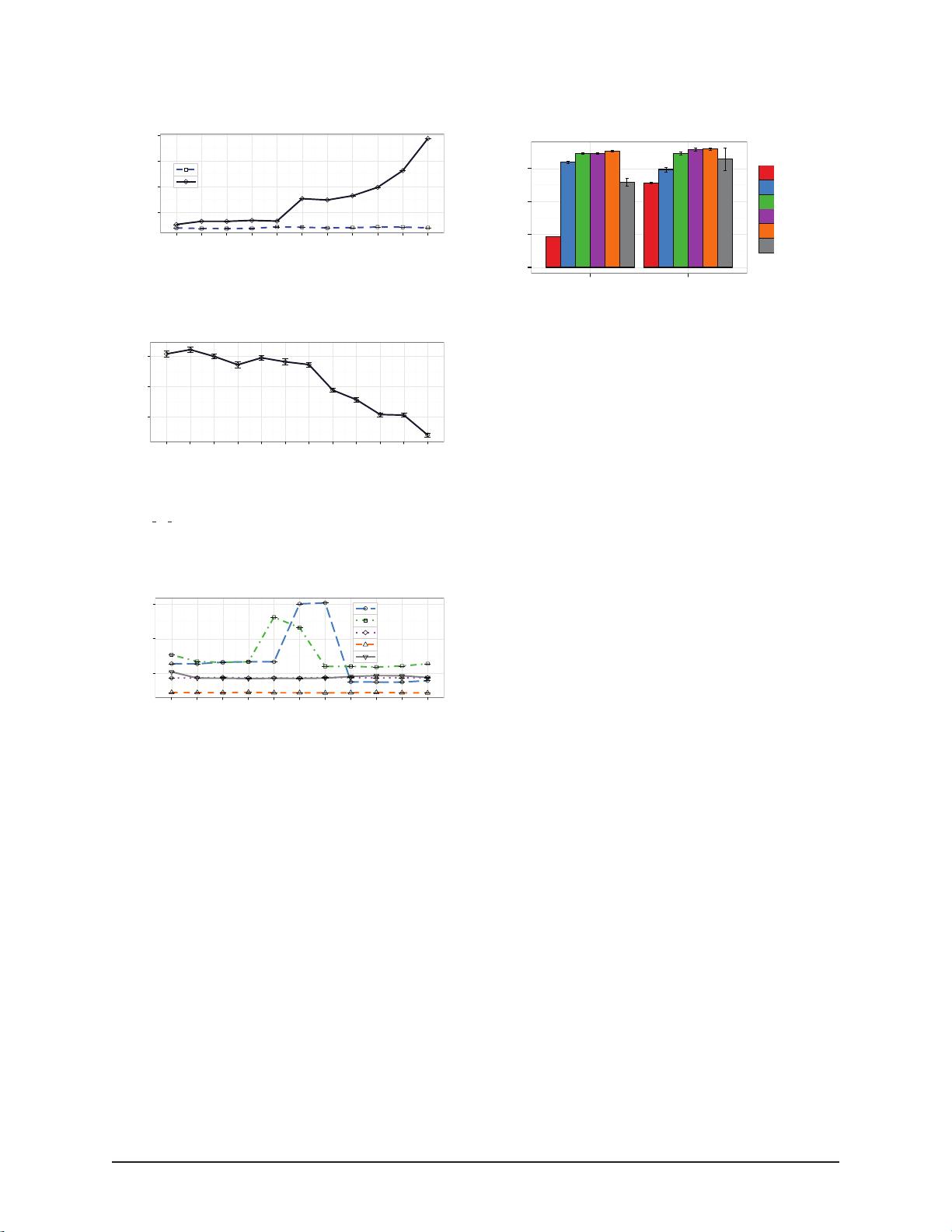

(a) BetrFS 0.2 file renames with zone size ∞ (all data must

be moved) and zone size 0 (inode-style indirection).

5

6

7

4KiB

8KiB

16KiB

32KiB

64KiB

128KiB

256KiB

512KiB

1MiB

2MiB

4MiB

8MiB

Zone Size (KiB) − log scale

Time (s)

Recursive Directory Scan (grep −r)

(b) Recursive scans of the Linux 3.11.10 source for

“cpu

to be64” with different BetrFS 0.2 zone sizes.

Figure 3: The impact of zone size on rename and scan

performance. Lower is better.

20

40

60

4KiB

8KiB

16KiB

32KiB

64KiB

128KiB

256KiB

512KiB

1MiB

2MiB

4MiB

File Size (KiB) − log scale

Time (ms)

BetrFS_0.2

btrfs

ext4

xfs

zfs

Single File Rename (warm cache)

Figure 4: Time to rename single files. Lower is better.

experiment was run a minimum of 4 times. Error bars

and ± ranges denote 95% confidence intervals. Unless

noted, all benchmarks are cold-cache tests.

7.1 Choosing a Zone Size

This subsection quantifies the impact of zone size on rename and scan performance.

A good zone size limits the worst-case cost of rename but maintains data locality for fast directory scans. Figure 3a shows the average cost to rename a file and fsync the parent directory, over 100 iterations, plotted as a function of file size. We show BetrFS 0.2 with an infinite zone size (no zones are created, so rename moves all file contents) and with a zone size of 0 (every file is in its own zone, so rename is a pointer swap). Once a file is in its own zone, the performance is comparable to most other file systems (16ms on BetrFS 0.2 compared to 17ms on ext4).

[Figure 5 plot: “Sequential I/O”; x-axis: Operation (write, read); y-axis: MiB/s; series: BetrFS_0.1, BetrFS_0.2, btrfs, ext4, xfs, zfs.]

Figure 5: Large file I/O performance. We sequentially read and write a 10GiB file. Higher is better.

This is balanced against Figure 3b, which shows grep performance versus zone size. As predicted in Section 4, directory-traversal performance improves as the zone size increases.

We select a default zone size of 512 KiB, which enforces a reasonable bound on worst-case rename (compared to an unbounded BetrFS 0.1 worst case) and keeps search performance within 25% of the asymptote. Figure 4 compares BetrFS 0.2 rename time to other file systems. Specifically, worst-case rename performance at this zone size is 66ms, 3.7× slower than the median file system’s rename cost of 18ms. However, renames of files 512 KiB or larger are comparable to other file systems, and search performance is 2.2× the best baseline file system and 8× the median. We use this zone size for the rest of the evaluation.

7.2 Improving the Worst Cases

This subsection measures BetrFS 0.1’s three worst cases, and shows that, for typical workloads, BetrFS 0.2 is either faster or within roughly 10% of other file systems.

Sequential Writes. Figure 5 shows the throughput to sequentially read and write a 10GiB file (more than twice the size of the machine’s RAM). The optimizations described in §3 improve the sequential write throughput of BetrFS 0.2 to 96MiB/s, up from 28MiB/s in BetrFS 0.1. Except for zfs, the other file systems realize roughly 10% higher throughput. We also note that these file systems offer different crash consistency properties: ext4 and XFS only guarantee metadata recovery, whereas zfs, btrfs, and BetrFS guarantee data recovery.

The sequential read throughput of BetrFS 0.2 is improved over BetrFS 0.1 by roughly 12MiB/s, which is attributable to streamlining the code. This places BetrFS 0.2 within striking distance of other file systems.

Rename. Table 1 shows the execution time of several common directory operations on the Linux 3.11.10
