6

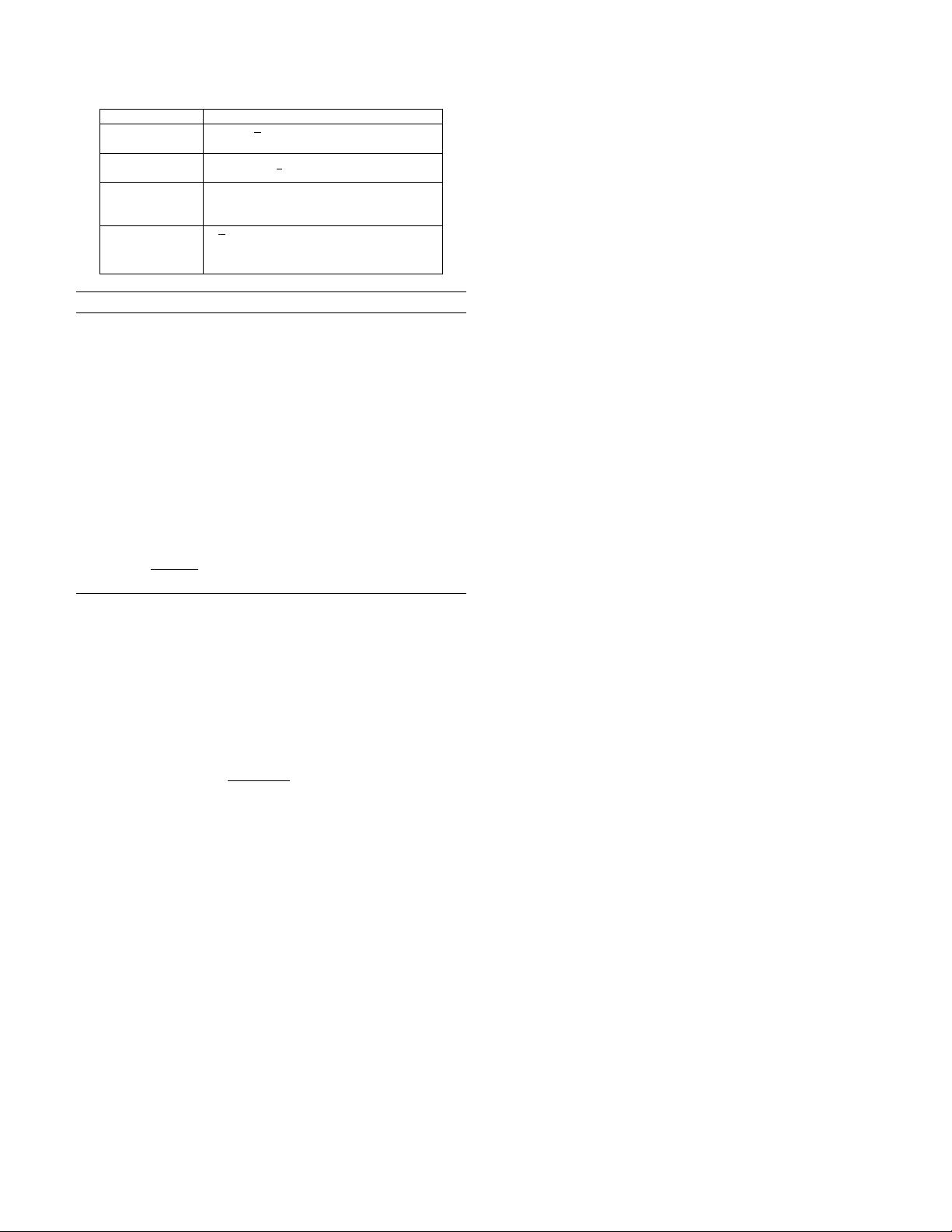

TABLE II: Loss functions of common ML models

Model

Loss function L(w

t

i

)

Neural network

1

n

P

n

j=1

(y

i

− f(x

j

; w))

2

(Mean Squared Error)

Linear

regression

1

2

y

j

− w

T

x

j

2

K-means

P

j

kx

j

− f(x

j

; w)k

(f(x

j

; w) is the centroid of all objects

assigned to x

j

’s class)

squared-SVM

[

1

n

P

n

j=1

max(0, 1 − y

j

(w

T

x

j

− bias))]

+λ

w

T

2

(bias is the bias parameter and

λ is const.)

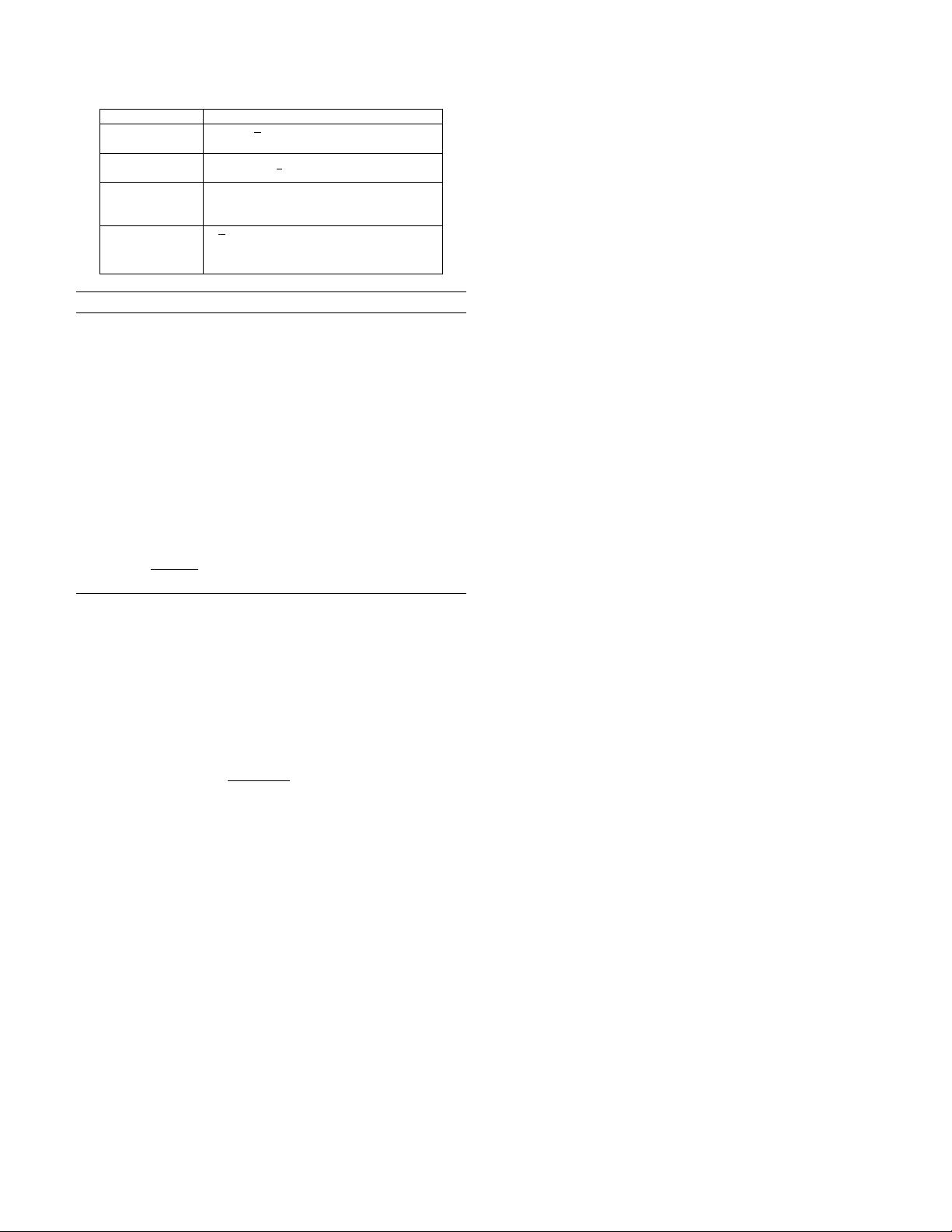

Algorithm 1 Federated averaging algorithm [23]

Require: Local minibatch size B, number of participants m per iteration, number of

local epochs E, and learning rate η.

Ensure: Global model w

G

.

1: [Participant i]

2: LocalTraining(i, w):

3: Split local dataset D

i

to minibatches of size B which are included into the set B

i

.

4: for each local epoch j from 1 to E do

5: for each b ∈ B

i

do

6: w ← w − η∆L(w; b) (η is the learning rate and ∆L is the gradient

of L on b.)

7: end for

8: end for

9:

10: [Server]

11: Initialize w

0

G

12: for each iteration t from 1 to T do

13: Randomly choose a subset S

t

of m participants from N

14: for each partipant i ∈ S

t

parallely do

15: w

t+1

i

← LocalTraining(i, w

t

G

)

16: end for

17: w

t

G

=

1

P

i∈N

D

i

P

N

i=1

D

i

w

t

i

(Averaging aggregation)

18: end for

Thereafter, in Step 2, the participant i implements the local

training and optimizes the target in (3) on minibatches from

the original local dataset (lines 2-8). Note that a minibatch

refers to a randomized subset of each participant’s dataset. At

the t

th

iteration (line 17), the server minimizes the global loss

in (4) by the averaging aggregation which is formally defined

as

w

t

G

=

1

P

i∈N

D

i

N

X

i=1

D

i

w

t

i

. (5)

The FL training process is iterated till the global loss function

converges, or a desirable accuracy is achieved.

C. Statistical Challenges of FL

Following an elaboration of the FL training process in the

previous section, we now proceed to discuss the statistical

challenges faced in FL.

In traditional distributed ML, the central server has access

to the whole training dataset. As such, the server can split the

dataset into subsets that follow similar distributions. The sub-

sets are subsequently sent to participating nodes for distributed

training. However, this approach is impractical for FL since

the local dataset is only accessible by the data owner.

In the FL setting, the participants may have local datasets

that follow different distributions, i.e., the datasets of partic-

ipants are non-IID. While the authors in [23] show that the

aforementioned FedAvg algorithm is able to achieve desirable

accuracy even when data is non-IID across participants, the

authors in [66] found otherwise. For example, the accuracy

of a FedAvg-trained CNN model has 51% lower accuracy

than centrally-trained CNN model for CIFAR-10 [67]. This

deterioration in accuracy is further shown to be quantified by

the earth mover’s distance (EMD) [68], i.e., difference in FL

participant’s data distribution as compared to the population

distribution. As such, when data is non-IID and highly skewed,

data-sharing is proposed in which a shared dataset with

uniform distribution across all classes is sent by the FL server

to each FL participant. Then, the participant trains its local

model on its private data together with the received data. The

simulation result shows that accuracy can be increased by

30% with 5% shared data due to reduced EMD. However,

a common dataset may not always be available for sharing by

the FL server. An alternative solution is subsequently discussed

in section IV.

The authors in [69] also find that global imbalance, i.e.,

the situation in which the collection of data held across all

FL participants is class imbalanced, also leads to a deteri-

oration in model accuracy. As such, the Astraea framework

is proposed. On initialization, the FL participants first send

their data distribution to the FL server. A rebalancing step is

introduced before training begins in which each participant

performs data augmentation [70] on the minority classes, e.g.,

through random rotations and shifts. After training on the aug-

mented data, a mediator is created to coordinate intermediate

aggregation, i.e., before sending the updated parameters to

the FL server for global aggregation. The mediator selects

participants with data distributions that best contributes to an

uniform distribution when aggregated. This is done through a

greedy algorithm approach to minimize the Kullback-Leibler

Divergence [71] between local data and uniform distribution.

The simuation results show accuracy improvement when tested

on imbalanced datasets.

The data on each participant’s device can also be het-

erogeneous in other ways, e.g., the number of training data

owned across participants can differ. The authors in [72]

propose learning separate, but structurally related models for

each participant. As such, concepts in multi-task learning

[73] can naturally be adopted to model such relationships.

Instead of minimizing the conventional loss function presented

previously in Table II, the loss function is modified to also

model the relationship amongst tasks. Then, the MOCHA

algorithm is proposed in which an alternating optimization

approach [74] is used to approximately solve the minimization

problem. Interestingly, MOCHA can be calibrated based on

the resource constraints of a participating device. For example,

the quality of approximation can be adaptively adjusted based

on network conditions and CPU states of the participating

devices. However, MOCHA cannot be applied to non-convex

DL models.

Apart from data heterogeneity, the convergence of a dis-

tributed learning algorithm is always a concern. Higher con-

vergence rate helps to save a large amount of time and

resources for the FL participants, and also significantly in-

creases the success rate of the federated training since fewer

communication rounds will reduce participant dropouts. To

ensure convergence, the study in [75] propose FedProx, which