76 July/August 2008

Mobile Graphics Survey

Compression

Compression not only saves storage space, but it also reduces the amount of data sent over a network or a memory bus. For GPUs, compression and decompression (codec) have two major targets: textures and buffers.

Textures are read-only images glued onto geometrical primitives such as triangles. A texture codec algorithm's core requirements include fast random access to the texture data, fast decompression, and inexpensive hardware implementation. The random-access requirement usually implies that a block of pixels is compressed to a fixed size. For example, a group of 4 × 4 pixels can be compressed from 3 × 8 = 24 bits per pixel down to 4 bits per pixel, requiring only 16 × 4 = 64 bits to represent the whole group. As a consequence of this fixed-rate compression, most texture compression algorithms are lossy (as JPEG is): not every block can be represented exactly in a fixed number of bits, so the decompressed texture usually doesn't reproduce the original image exactly. Because textures are read-only data and usually compressed offline, the time spent compressing the image isn't as important as the decompression time, which must be fast. Such algorithms are sometimes called asymmetric.
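The arithmetic above, and the reason a fixed block size gives fast random access, can be sketched in C. The memory layout is an assumption for illustration only: 4 × 4 blocks of exactly 64 bits each, stored block row by block row, with a texture width that is a multiple of 4. Real formats and GPUs may use other (for example, swizzled) layouts, and the function names are hypothetical.

```c
#include <stddef.h>

/* Assumed layout (illustrative only): 4 x 4 texel blocks, each compressed to
   exactly 64 bits (8 bytes), stored block row by block row. */
enum { BLOCK_DIM = 4, BLOCK_BYTES = 8 };

/* 64 bits spread over 16 texels gives 4 bits per texel (versus 24 uncompressed). */
static unsigned bits_per_texel(void) {
    return (BLOCK_BYTES * 8u) / (BLOCK_DIM * BLOCK_DIM);
}

/* Byte offset of the block that holds texel (x, y); width is assumed to be a
   multiple of 4. Because every block has the same size, the address is computed
   directly, without decoding or scanning any other block. */
static size_t block_offset(unsigned width, unsigned x, unsigned y) {
    size_t blocks_per_row = width / BLOCK_DIM;
    size_t bx = x / BLOCK_DIM;
    size_t by = y / BLOCK_DIM;
    return (by * blocks_per_row + bx) * BLOCK_BYTES;
}
```

A variable-rate codec would instead need an extra index structure to find where block (bx, by) starts, which is the indirection fixed-rate schemes avoid.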

To meet these requirements, Ericsson Texture Compression (ETC) has been adopted as a new codec for OpenGL ES.⁶ ETC stores a base color for each half (a 2 × 4 or 4 × 2 subblock) of a 4 × 4 block of texels and modifies the luminance using only a 2-bit lookup index per pixel. This technique keeps the hardware decompressor small. Currently, no desktop graphics APIs use this algorithm.
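A minimal sketch of how an ETC-style decoder reconstructs one texel, in C. It assumes the base color, the 3-bit table codeword, and the 2-bit pixel index have already been unpacked from the 64-bit block; the bit layout (including the individual/differential base-color modes and the flip bit that selects 2 × 4 or 4 × 2 subblocks) is omitted. The modifier magnitudes follow the ETC1 definition in the OpenGL ES extension OES_compressed_ETC1_RGB8_texture; the function and type names are hypothetical.

```c
#include <stdint.h>

/* Per table codeword: small (a) and large (b) modifier magnitudes, as in ETC1. */
static const int etc1_modifiers[8][2] = {
    {2, 8},   {5, 17},  {9, 29},   {13, 42},
    {18, 60}, {24, 80}, {33, 106}, {47, 183}
};

typedef struct { uint8_t r, g, b; } rgb8;

static uint8_t clamp255(int v) {
    return (uint8_t)(v < 0 ? 0 : (v > 255 ? 255 : v));
}

/* Decode one texel. The same signed modifier is added to R, G, and B, so only
   the luminance changes. Pixel index: 0 -> +a, 1 -> +b, 2 -> -a, 3 -> -b. */
static rgb8 etc1_decode_texel(rgb8 base, unsigned table, unsigned index) {
    int a = etc1_modifiers[table & 7u][0];
    int b = etc1_modifiers[table & 7u][1];
    int m;
    switch (index & 3u) {
    case 0:  m = a;  break;
    case 1:  m = b;  break;
    case 2:  m = -a; break;
    default: m = -b; break;
    }
    rgb8 out = { clamp255(base.r + m), clamp255(base.g + m), clamp255(base.b + m) };
    return out;
}
```

The decoder is just a table lookup, three additions, and clamping, which is why the hardware stays small.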

Buffers are more symmetric than textures in terms of compression and decompression because both processes must occur in hardware in real time. For example, the color buffer can be compressed: when a triangle is rendered to a block of pixels (say, 4 × 4) in the color buffer, the hardware attempts to compress this block. If this succeeds, the block is marked as compressed, sent back to main memory over the bus in compressed form, and stored in that form. Most buffer compression algorithms are exact, to avoid error accumulation. If the algorithm is lossy, however, a block can be lossily compressed, later decompressed, rendered to again, and recompressed, and so on, as long as the accumulated error stays below the threshold of what's visible. This is called tandem compression. Whenever compression fails, whether because the block doesn't fit the bit budget or because further loss would exceed the error threshold, the hardware must have a fallback that guarantees an exact color buffer, namely sending the data uncompressed.⁷
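The compress-or-fall-back path can be sketched with a deliberately simple, hypothetical exact scheme: a 4 × 4 block of 32-bit RGBA pixels compresses to a single word when all 16 pixels share one color (for example, an area still holding the clear color) and is otherwise sent raw. Real color-buffer codecs are far more capable; the sketch only shows the per-block compressed flag and the guaranteed-exact fallback.

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

enum { BLOCK_PIXELS = 16 }; /* one 4 x 4 block */

typedef struct {
    bool compressed;             /* per-block flag, so the reader knows how to decode */
    uint32_t data[BLOCK_PIXELS]; /* 1 word used if compressed, all 16 if not */
} color_block;

/* Exact encoder. Returns the number of 32-bit words that would cross the bus. */
static size_t encode_block(const uint32_t px[BLOCK_PIXELS], color_block *out) {
    for (int i = 1; i < BLOCK_PIXELS; ++i) {
        if (px[i] != px[0]) {
            /* Compression failed: fall back to the raw, exact pixels. */
            out->compressed = false;
            memcpy(out->data, px, sizeof out->data);
            return BLOCK_PIXELS;
        }
    }
    out->compressed = true;      /* single-color block */
    out->data[0] = px[0];
    return 1;
}

/* Lossless in both modes: decoding always reproduces the original pixels. */
static void decode_block(const color_block *in, uint32_t px[BLOCK_PIXELS]) {
    if (in->compressed) {
        for (int i = 0; i < BLOCK_PIXELS; ++i)
            px[i] = in->data[0];
    } else {
        memcpy(px, in->data, sizeof in->data);
    }
}
```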

Depth and stencil buffers might also be compressed. The depth buffer deserves special mention because its contents depend linearly on 1/z, and 1/z varies linearly in screen space across a triangle, even under perspective projection. Depth-buffer compression algorithms heavily exploit this property, which allows higher compression ratios. A survey of existing algorithms appears elsewhere.⁸
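Because the stored depth values vary linearly across a triangle in screen space, a block covered by a single triangle can be described by a plane d(x, y) = a + b·x + c·y. The sketch below is a hypothetical simplification: it derives that plane from three pixels of a 4 × 4 block of fixed-point depth values and accepts it only if all 16 values match exactly. Practical depth codecs typically also store small per-pixel corrections for rounding and handle blocks covered by more than one triangle.

```c
#include <stdbool.h>
#include <stdint.h>

enum { DB = 4 }; /* block is DB x DB pixels */

typedef struct { int64_t a, b, c; } depth_plane; /* d(x, y) = a + b*x + c*y */

/* d holds 16 fixed-point depth values in row-major order: d[y * 4 + x]. */
static bool fit_plane(const int32_t d[DB * DB], depth_plane *p) {
    p->a = d[0];                  /* value at (0, 0) */
    p->b = (int64_t)d[1] - d[0];  /* step in x: (1, 0) minus (0, 0) */
    p->c = (int64_t)d[DB] - d[0]; /* step in y: (0, 1) minus (0, 0) */
    for (int y = 0; y < DB; ++y)
        for (int x = 0; x < DB; ++x)
            if (p->a + p->b * x + p->c * y != d[y * DB + x])
                return false;     /* not a single plane: store uncompressed */
    return true;                  /* 3 values replace 16 */
}
```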

Interestingly, all buffer codec algorithms are transparent to the user. All action takes place in the GPU and is never exposed to the user or programmer, so there's no need for standardization. Buffer codecs on mobile devices don't differ substantially from those on desktops, but mobile graphics has renewed interest in such techniques.

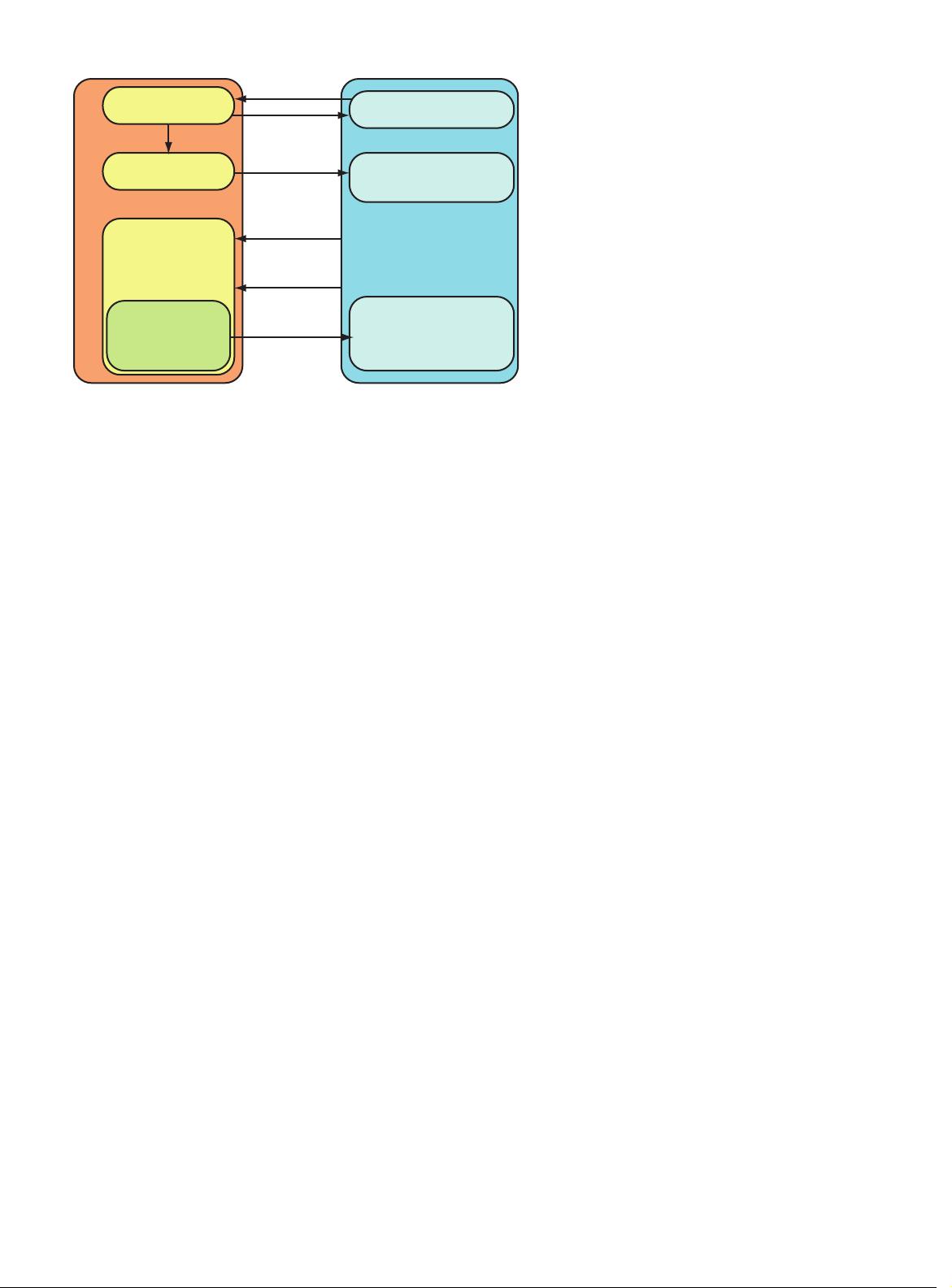

Tiling architectures

Tiling architectures aim to reduce the memory traffic related to frame-buffer accesses using a completely different approach. Tiling the frame buffer so that a small tile (such as a rectangular block of pixels) is stored on the graphics chip provides many optimization and culling possibilities. Commercially, Imagination Technologies and ARM offer mobile 3D accelerators using tiling architectures. Their core insight is that a large share of memory accesses go to buffers such as color, depth, and stencil. Ideally, we'd like the memory for the entire frame buffer on-chip, which would make such memory accesses extremely inexpensive. That isn't practical for the whole frame buffer, but storing a small tile of, say, 16 × 16 pixels of the frame buffer on-chip is feasible. When all rendering to a particular tile has finished, its contents can be written to the external frame buffer in an efficient block transfer. Figure 2 illustrates this concept.
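The tile-at-a-time rendering loop can be sketched as follows. To keep the rasterizer trivial, the primitives are hypothetical axis-aligned rectangles, and every tile tests every primitive instead of reading a per-tile list; a real tiler consults the tile lists produced by the sorting step. The point of the sketch is that overdraw happens in the small on-chip array, while each frame-buffer pixel is written to external memory exactly once.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

enum { TILE_SIZE = 16 };

/* Hypothetical stand-in primitive: covers x0 <= x < x1, y0 <= y < y1. */
typedef struct { int x0, y0, x1, y1; uint32_t color; } rect_prim;

/* Returns the number of pixels written to the external frame buffer fb. */
static long render_tiled(const rect_prim *prims, int n, uint32_t *fb, int width, int height) {
    uint32_t tile[TILE_SIZE][TILE_SIZE]; /* stands in for the on-chip color buffer */
    long external_writes = 0;
    for (int ty = 0; ty < height; ty += TILE_SIZE) {
        for (int tx = 0; tx < width; tx += TILE_SIZE) {
            int tw = width - tx < TILE_SIZE ? width - tx : TILE_SIZE;
            int th = height - ty < TILE_SIZE ? height - ty : TILE_SIZE;
            memset(tile, 0, sizeof tile); /* clear color = 0 */
            for (int i = 0; i < n; ++i)
                for (int y = 0; y < th; ++y)
                    for (int x = 0; x < tw; ++x) {
                        int sx = tx + x, sy = ty + y;
                        if (sx >= prims[i].x0 && sx < prims[i].x1 &&
                            sy >= prims[i].y0 && sy < prims[i].y1)
                            tile[y][x] = prims[i].color; /* cheap on-chip write */
                    }
            /* Tile finished: copy it out row by row to external memory. */
            for (int y = 0; y < th; ++y) {
                memcpy(&fb[(size_t)(ty + y) * (size_t)width + (size_t)tx], tile[y],
                       (size_t)tw * sizeof(uint32_t));
                external_writes += tw;
            }
        }
    }
    return external_writes;
}
```

For example, drawing a full-screen rectangle and then a 20 × 20 rectangle on a 32 × 32 frame buffer touches 1,424 fragments on-chip but writes only 1,024 pixels externally.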

However, tiling has the overhead that all the triangles must be buffered and sorted into the correct tiles after they're transformed to screen space. A tiling

[Figure 2 diagram. GPU: Geometry, Tiling, Rasterizer/pixel shader, On-chip buffers. Memory: Scene data, Transformed scene data, Tile lists (primitives per tile), Frame buffer. Data flows: primitives, texture reads, RGBA/Z writes.]

Figure 2. A tiling architecture. The primitives are transformed and stored in external memory, where they are sorted into tile lists; each list contains the triangles overlapping that tile. This makes it possible to store the frame buffer for a tile (for example, 16 × 16 pixels) in on-chip memory, which makes accesses to the tile's frame buffer extremely inexpensive.
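Sorting triangles into tile lists (binning) can be sketched as follows. This version is conservative: it adds each triangle to every tile that its screen-space bounding box overlaps, so a thin diagonal triangle may land in some tiles it doesn't actually touch, which costs extra work but not correctness. The 16 × 16 tile size matches the example in the text; the data structures and names are hypothetical.

```c
#include <stdlib.h>

enum { TILE_PX = 16 };

typedef struct { float x[3], y[3]; } tri2d;             /* screen-space vertices */
typedef struct { int *ids; int count, cap; } tile_list;  /* triangle indices for one tile */

static float min3(float a, float b, float c) { float m = a < b ? a : b; return m < c ? m : c; }
static float max3(float a, float b, float c) { float m = a > b ? a : b; return m > c ? m : c; }
static int clampi(int v, int lo, int hi) { return v < lo ? lo : (v > hi ? hi : v); }

static void list_push(tile_list *l, int id) {
    if (l->count == l->cap) {
        int new_cap = l->cap ? 2 * l->cap : 4;
        int *p = realloc(l->ids, (size_t)new_cap * sizeof *p);
        if (!p) abort();
        l->ids = p;
        l->cap = new_cap;
    }
    l->ids[l->count++] = id;
}

/* lists must hold tiles_x * tiles_y zero-initialized entries in row-major order. */
static void bin_triangles(const tri2d *t, int n, int width, int height, tile_list *lists) {
    int tiles_x = (width + TILE_PX - 1) / TILE_PX;
    int tiles_y = (height + TILE_PX - 1) / TILE_PX;
    for (int i = 0; i < n; ++i) {
        float minx = min3(t[i].x[0], t[i].x[1], t[i].x[2]);
        float maxx = max3(t[i].x[0], t[i].x[1], t[i].x[2]);
        float miny = min3(t[i].y[0], t[i].y[1], t[i].y[2]);
        float maxy = max3(t[i].y[0], t[i].y[1], t[i].y[2]);
        if (maxx < 0 || maxy < 0 || minx >= width || miny >= height)
            continue; /* entirely off-screen: appears in no list */
        /* Truncation toward zero is harmless: negative values clamp to tile 0. */
        int tx0 = clampi((int)minx / TILE_PX, 0, tiles_x - 1);
        int tx1 = clampi((int)maxx / TILE_PX, 0, tiles_x - 1);
        int ty0 = clampi((int)miny / TILE_PX, 0, tiles_y - 1);
        int ty1 = clampi((int)maxy / TILE_PX, 0, tiles_y - 1);
        for (int ty = ty0; ty <= ty1; ++ty)
            for (int tx = tx0; tx <= tx1; ++tx)
                list_push(&lists[ty * tiles_x + tx], i);
    }
}
```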