Murthy, Kasif & Salzberg

On the other hand, it is possible to dene impurity measures for which the problem

of nding optimal hyperplanes can be solved in polynomial time. For example, if one

minimizes the sum of distances of mis-classied examples, then the optimal solution can

be found using linear programming methods (if distance is measured along one dimension

only). However, classiers are usually judged byhow many points they classify correctly,

regardless of how close to the decision boundary a pointmay lie. Thus most of the standard

measures for computing impurity base their calculation on the discrete numb er of examples

of each category on either side of the hyperplane. Section 3.3 discusses several commonly

used impurity measures.

Now let us address the second issue, that of the complexity of building a small tree.

It is easy to show that the problem of inducing the smallest axis-parallel decision tree is

NP-hard. This observation follows directly from the work of Hyal and Rivest (1976). Note

that one can generate the smallest axis-parallel tree that is consistent with the training

set in polynomial time

if

the numb er of attributes is a constant. This can b e done by

using dynamic programming or branch and bound techniques (see Moret (1982) for several

pointers). But when the tree uses oblique splits, it is not clear, even for a xed number

of attributes, how to generate an optimal (e.g., smallest) decision tree in p olynomial time.

This suggests that the complexity of constructing go od oblique trees is greater than that

for axis-parallel trees.

It is also easy to see that the problem of constructing an optimal (e.g., smallest) oblique

decision tree is NP-hard. This conclusion follows from the work of Blum and Rivest (1988).

Their result implies that in

d

dimensions (i.e., with

d

attributes) the problem of producing

a 3-no de oblique decision tree that is consistent with the training set is NP-complete. More

specically, they show that the following decision problem is NP-complete: given a training

set

T

with

n

examples and

d

Boolean attributes, do es there exist a 3-no de neural network

consistent with

T

?From this it is easy to show that the following question is NP-complete:

given a training set

T

, does there exist a 3-leaf-node oblique decision tree consistent with

T

?

As a result of these complexity considerations, we to ok the pragmatic approach of trying

to generate small trees, but not looking for the smallest tree. The greedy approach used by

OC1 and virtually all other decision tree algorithms implicitly tries to generate small trees.

In addition, it is easy to construct example problems for which the optimal split at a no de

will not lead to the b est tree; thus our philosophyasembo died in OC1 is to nd locally

goo d splits, but not to spend excessive computational eort on improving the qualityof

these splits.

2. Previous Work on Oblique Decision Tree Induction

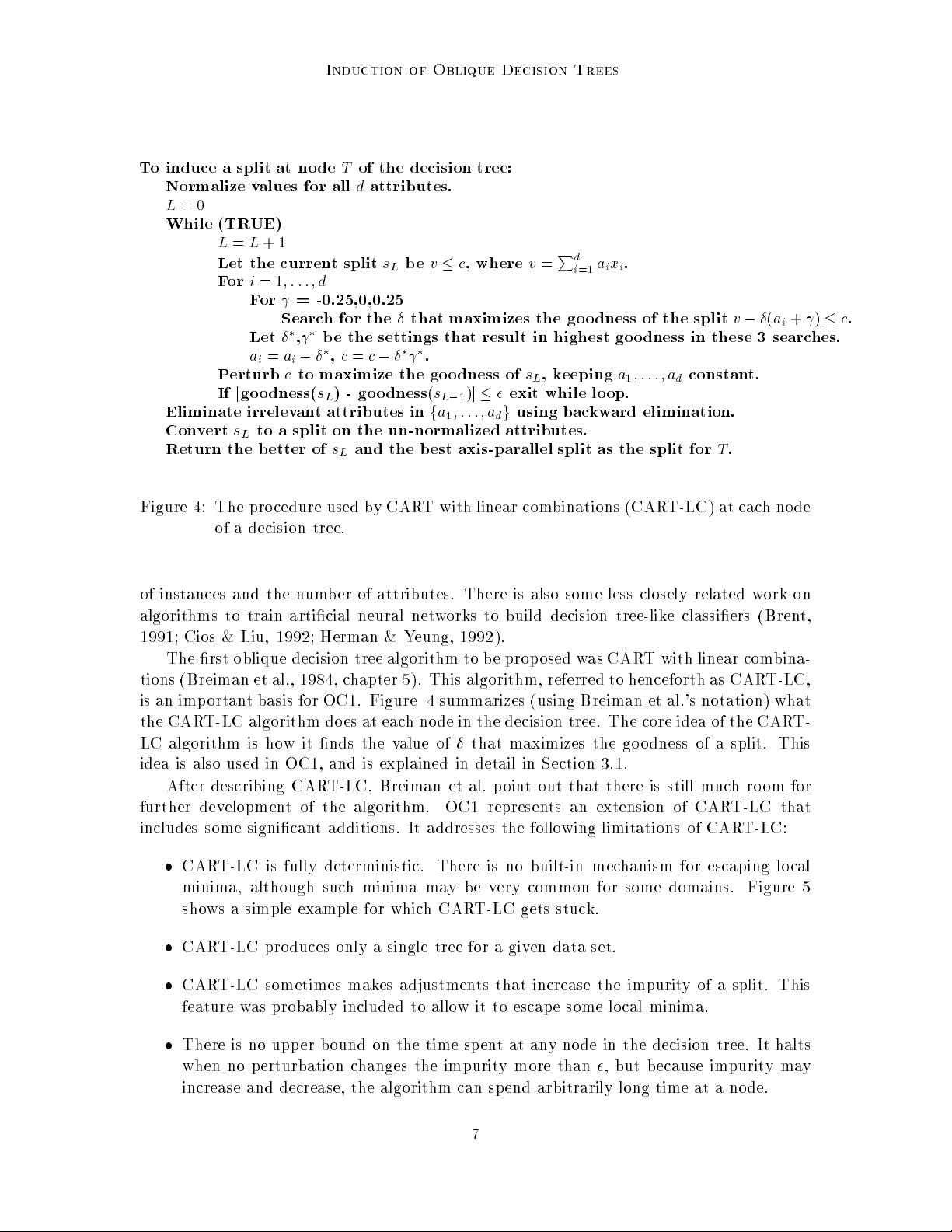

Before describing the OC1 algorithm, we will briey discuss some existing oblique DT

induction metho ds, including CART with linear combinations, Linear Machine Decision

Trees, and Simulated Annealing of Decision Trees. There are also methods that induce

tree-like classiers with linear discriminants at each node, most notably methods using

linear programming (Mangasarian, Setiono, & Wolb erg, 1990; Bennett & Mangasarian,

1992, 1994a, 1994b). Though these metho ds can nd the optimal linear discriminants for

specic goo dness measures, the size of the linear program grows very fast with the number

6