
2023 IEEE International Solid-State Circuits Conference Special Issue Papers


"JSSC 2023.11 all papers"

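This is the November 2023 special issue of the IEEE Journal of Solid-State Circuits (JSSC). JSSC is a leading journal in the field of solid-state circuits. It covers a broad range of electronic circuit design and technology, including analog, digital, mixed-signal, and memory integrated circuits. This special issue focuses on the 2023 IEEE International Solid-State Circuits Conference (ISSCC), a major global conference that showcases the latest advances in solid-state circuits.

The issue announces D. Sylvester as a new associate editor. M. Kiani and K. Sengupta contribute a guest editorial that introduces the highlights and significance of ISSCC 2023. The special issue contains a number of technical papers presenting innovations across many areas of solid-state circuits.

First, one paper presents a three-wafer-stacked hybrid 15-Mpixel CIS (CMOS image sensor) + 1-Mpixel EVS (event-based vision sensor). The design offers 4.6-G events/s readout, an in-pixel time-to-digital converter (TDC), and on-chip ISP and ESP functions. It shows how high integration and high-speed data processing can be brought to image sensors.

Second, another paper describes a 113.3-dB dynamic range, 600 frames/s SPAD (single-photon avalanche diode) X-ray detector. It features a seamless global shutter and a time-encoded extrapolation counter, which is significant for high-precision imaging and medical examination.

In addition, a subretinal prosthesis system-on-chip (SoC) with low-stimulation-scattering pixel sharing is presented. It uses time-based photodiode sensing and pixel-level dynamic voltage scaling. The design aims to improve the performance of retinal prostheses and to give people with visual impairments better prospects for vision restoration.

These papers illustrate recent progress in solid-state circuits for imaging, medical applications, and biomedical engineering, ranging from new device architectures to advanced signal-processing techniques. The November 2023 JSSC special issue therefore gives researchers, engineers, and students in related fields a useful overview of current trends in solid-state circuit technology.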

2962 IEEE JOURNAL OF SOLID-STATE CIRCUITS, VOL. 58, NO. 11, NOVEMBER 2023

V. CONCLUSION

We have introduced a state-of-the-art hybrid EVS/CIS image sensor, realized using advanced three-wafer-stacking technology. Compared with a two-sensor solution, the hybrid sensor simplifies synchronization between EVS and CIS. Furthermore, a hybrid sensor avoids parallax/occlusion errors and requires only one package and lens. This enables new use cases such as rolling-shutter correction or deblur image enhancement, video frame interpolation for slow motion, and simultaneous localization and mapping.

The CIS pixel array sacrifices only one out of 16 color channels for EVS functionality. It still achieves image quality comparable to state-of-the-art sparse phase-detection autofocus systems. The EVS pixel was evaluated at a linear NCT setting of 15% and achieved a CTNU of ∼3%, which is comparable to stand-alone EVS sensors. A very low noise rate of <1 Hz is reported.

In contrast to arbiter-based readout, the content-aware scan operation achieves fair, low-latency readout in two ways. It bypasses rows and columns without events, and it uses a skip function that reads out only the first and last events of a connected group of events with the same polarity. Using these methods, we report a maximum event rate of up to 4.6 GEps. At 55 pJ/event, the power consumption outperforms recently published stand-alone EVS sensors with at least VGA resolution by up to 200%, depending on the actual event distribution in the array.

We thus report three leading figures of merit (FOMs). The first is the product of the event-pixel resolution and the event rate, yielding 4.4 MP × GEps. The second normalizes the first FOM by the energy per event, achieving 0.08 MP × GEps/pJ. The last multiplies the CIS and EVS resolutions, the CIS frame rate, and the EVS event rate to form a hybrid measure of 1200 MP² × frames/s × GEps.
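The Python sketch below makes the bypass-and-skip readout concrete on a single snapshot of the event array. It is not the sensor's actual scan logic. The function name `content_aware_scan`, the 2-D array representation, and the assumption that a "connected group" is a run of horizontally adjacent same-polarity events within one row are all illustrative choices. In this model, rows and columns without events are never visited, and each group costs at most two reads.

```python
from typing import List, Tuple

# A read-out event: (row, column, polarity), polarity +1 = ON, -1 = OFF.
Event = Tuple[int, int, int]


def content_aware_scan(frame: List[List[int]]) -> List[Event]:
    """Toy model of a content-aware scan with row/column bypass and a skip function.

    frame[r][c] is 0 (no event), +1 (ON event) or -1 (OFF event).
    ASSUMPTION: a "connected group" is a run of horizontally adjacent pixels
    in one row with the same polarity; only its first and last events are read.
    """
    n_rows = len(frame)
    n_cols = len(frame[0]) if n_rows else 0
    # Column bypass: only columns holding at least one event are visited.
    active_cols = [c for c in range(n_cols) if any(row[c] for row in frame)]
    readout: List[Event] = []
    for r, row in enumerate(frame):
        if not any(row):
            continue  # row bypass: no events in this row
        i = 0
        while i < len(active_cols):
            c = active_cols[i]
            pol = row[c]
            if pol == 0:
                i += 1
                continue
            # Find the end of the same-polarity run starting at column c.
            end = c
            while end + 1 < n_cols and row[end + 1] == pol:
                end += 1
            readout.append((r, c, pol))  # first event of the group
            if end != c:
                readout.append((r, end, pol))  # last event of the group
            # Skip: jump past the rest of the group without reading it.
            while i < len(active_cols) and active_cols[i] <= end:
                i += 1
    return readout


frame = [
    [0, 0, 0, 0, 0, 0, 0],
    [1, 1, 1, 1, 0, -1, 0],
    [0, 0, 0, 0, 0, 0, 0],
    [0, -1, -1, -1, 1, 0, 0],
]
print(content_aware_scan(frame))  # 6 reads for the 9 events in this frame
```

In this toy frame, nine events are represented by six reads. The saving grows with the length of same-polarity runs, which is one way to read the statement that the energy advantage depends on the actual event distribution.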


Menghan Guo received the M.E. degree in circuits and systems from Southeast University, Nanjing, China, in 2013.

From 2013 to 2017, he was a Research Associate with Nanyang Technological University, Singapore. In 2017, he joined CelePixel Technologies, Shanghai, China, as the IC Design Manager for smart vision sensors. He is currently with OMNIVISION, Shanghai. His research interests mainly focus on the design of event-based vision sensors.

Shoushun Chen (Senior Member, IEEE) received the B.S. degree from Peking University, Beijing, China, in 2000, the M.E. degree from the Chinese Academy of Sciences, Beijing, in 2003, and the Ph.D. degree from the Hong Kong University of Science and Technology, Hong Kong, in 2007.

He held postdoctoral positions with the Hong Kong University of Science and Technology and Yale University, New Haven, CT, USA. From July 2009 to April 2023, he was an Assistant Professor, and later a tenured Associate Professor, with Nanyang Technological University, Singapore. He is the Founder of CelePixel Technology, Shanghai, China, which was acquired by OMNIVISION, Shanghai, in 2020. His research focuses on various areas, including smart vision sensors, motion detection sensors, energy-efficient algorithms for bioinspired vision, and analog/mixed-signal VLSI circuits and systems.

Dr. Chen serves as the Chair of the Technical Committee of Sensory Systems for the IEEE Circuits and Systems Society (CASS).

GUO et al.: THREE-WAFER-STACKED HYBRID 15-MPixel CIS + 1-MPixel EVS 2963

Zhe Gao received the M.S. and Ph.D. degrees in electrical engineering from the University of Rochester, Rochester, NY, USA, in 2009 and 2013, respectively.

He is currently a Research and Development Manager with OMNIVISION, Santa Clara, CA, USA. He holds more than ten issued/pending U.S. patents. His research interests include CMOS image sensors and related system design.

Wenlei Yang received the B.S. degree in optics and optical engineering from the University of Science and Technology of China, Anhui, China, in 2014, and the M.Sc. degree in telecommunications from the Hong Kong University of Science and Technology, Hong Kong, in 2019.

He has been working in the field of event-based vision sensors and related application development since 2015. He was one of the key members of CelePixel Technology, Shanghai, China. He is currently an Innovation Hardware Engineer with OMNIVISION, Shanghai. His work focuses on event-based vision sensor design, with an emphasis on digital design and event-based application development.

Peter Bartkovjak received the M.S. degree in electrical engineering–microelectronics from the Slovak Technical University, Bratislava, Slovakia, in 1993.

He has been working in image sensor design since 2004. During his employment with BAE Systems (formerly Fairchild Imaging), he served as analog design lead for a 4k electron counting noise floor scientific sensor. He is currently a Staff Analog Design Engineer with OMNIVISION, Santa Clara, CA, USA, where he is responsible for various research and development and product-related innovative designs. He was a key contributor to several high-speed SERDES interface designs currently in production. His focus is primarily on analog and mixed-mode design of image sensor column readout circuitry and related circuits and systems.

Qing Qin received the B.S. degree in electrical engineering from the University of Virginia, Charlottesville, VA, USA, in 2015, and the M.S. degree in electrical engineering from the University of California at San Diego, San Diego, CA, USA, in 2017.

She is currently working as a Staff Analog Design Engineer with OMNIVISION, Santa Clara, CA, USA, with design focuses on CMOS image sensing and display-related circuitry and systems.

Xiaoqin Hu received the M.Sc. degree in microelectronics and solid-state electronics from Xiangtan University, Xiangtan, China, in 2010.

In 2017, she joined CelePixel Technologies, Shanghai, China, as a Sr. Software Engineer for smart vision sensors, and is currently with OMNIVISION, Shanghai.

Dahai Zhou received the bachelor’s degree in applied electronic technology from the Changsha Aeronautical Vocational and Technical College, Changsha, China, in 2003, and the B.Sc. degree in communication engineering from the University of Electronic Science and Technology of China, Chengdu, China.

He is currently a Senior Engineer with OMNIVISION, Shanghai, China, working on EVS sensor characterization tests and the design of testing platforms.

Qiping Huang received the M.E. degree in automation from Southeast University, Nanjing, China, in 2016.

In 2019, she joined CelePixel Technologies, Shanghai, China, as a Software Engineer, and is currently with OMNIVISION, Shanghai. Her research interests mainly focus on performance testing of event-based vision sensors.

Masayuki Uchiyama received the M.E. degree in electrical engineering from Osaka University, Osaka, Japan, in 2009.

In 2009, he joined Sony, Kanagawa, Japan, where he worked on the pixel design of CIS products. In 2012, he joined TSMC, Hsinchu, Taiwan, where he was responsible for CIS product development as a Product Engineer. In 2019, he joined OMNIVISION, Santa Clara, CA, USA, where he currently works on CIS development as a Process Integration Engineer. His research interests include CIS simulation, submicrometer pixel development, and wafer-stacking technology.

Yoshiharu Kudo photograph and biography not available at the time of publication.

Shimpei Fukuoka received the B.S. degree in electronics from the Tokyo University of Agriculture and Technology, Fuchu, Japan, in 2009.

From 2012 to 2021, he was an Image Sensor Process Integration Engineer with Sony, Kanagawa, Japan. Since 2021, he has been working in research and development with OMNIVISION, Yokohama, Japan. His work has focused on image sensor development and process integration.

Chengcheng Xu received the B.S. degree in electronic science and technology from the Huazhong University of Science and Technology, Wuhan, China, in 2007, and the M.S. degree in electrical engineering from the University of California at Santa Cruz, Santa Cruz, CA, USA, in 2015.

He is currently a Senior Staff Analog Design Engineer with OMNIVISION, Santa Clara, CA, USA. He is working on mixed-signal circuit design for CMOS image sensors.


Hiroaki Ebihara received the B.S. and M.S. degrees from Waseda University, Tokyo, Japan, in 2000 and 2002, respectively.

He has worked in the field of CMOS image sensors for the past 16 years. He is currently the Director of Array Design with OMNIVISION, Santa Clara, CA, USA, where he primarily works on the design and development of readout circuits for CIS. He holds more than 40 U.S. patents.

Xueqing (Andy) Wang received the B.Sc. degree in electronic engineering from the Huazhong University of Science and Technology, Wuhan, China, the M.S. degree in data communications from the Nanjing University of Posts and Telecommunications, Nanjing, China, and the M.S. degree in electrical engineering from the University of Florida, Gainesville, FL, USA.

He started his career with National Semiconductor, Santa Clara, CA, USA, working on high-speed link circuit design. He later worked with OmniVision Technologies, Santa Clara; Fairchild Imaging, San Jose, CA, USA; and Marvell Semiconductor, Wilmington, DE, USA. He is currently a Principal Design Engineer and a Design Manager with OMNIVISION, Santa Clara, responsible for advanced image sensor circuit design and product development. His research interests range from high-precision/low-noise signal paths to high-speed data link circuit/system design.

Peiwen Jiang photograph and biography not available at the time of publication.

Bo Jiang received the B.S. degree in electrical engineering from the Xi’an University of Technology, Xi’an, China, in 2003, the M.S. degree from Xi’an Jiaotong University, Xi’an, in 2006, and the Ph.D. degree in electrical engineering from the University of Vermont, Burlington, VT, USA, in 2016.

He is currently working with OMNIVISION, Santa Clara, CA, USA, as a Sensor Characterization Engineer.

Bo Mu received the Ph.D. degree in imaging science from the Rochester Institute of Technology, Rochester, NY, USA, in 2007.

He is currently a Director of Algorithm Development with OMNIVISION, Santa Clara, CA, USA. His research interests include color image and video processing, computer vision, computational imaging, and image quality metrics.

Huan Chen received the B.Sc. degree from Peking University, Beijing, China, in 2000.

He has held positions as Senior Process Research and Development Engineer with SMIC, Data Analysis Group Director with PDF Solutions, Inc., San Jose, CA, USA, and Investment Director with Lighthouse Capital, Palm Beach Gardens, FL, USA. Since 2019, he has been with CelePixel Technology, Shanghai, China, as a Group Manager for EVS systems and algorithm development, and is currently with OMNIVISION, Shanghai.

Jason Yang photograph and biography not available at the time of publication.

T. J. Dai received the B.Sc. degree in semiconductors from the Huazhong University of Science and Technology, Wuhan, China, in 1994, and the M.Sc. degree in microelectronics from Tsinghua University, Beijing, China, in 1997.

Since 2001, he has been with OMNIVISION, Santa Clara, CA, USA, where he is currently a VP for sensor-related circuit design.

Andreas Suess (Member, IEEE) received the B.Sc.E.E. degree (with honors) from the University of Applied Sciences Düsseldorf, Düsseldorf, Germany, in 2008, and the Ph.D. degree (summa cum laude) from University Duisburg-Essen, Duisburg, Germany, in 2014.

From 2009 to 2014, he was with Fraunhofer IMS, Duisburg, where he worked primarily on indirect time-of-flight imaging. In 2014, he joined imec, Leuven, Belgium, where he worked on numerous technologies, most notably global shutter, indirect and direct time-of-flight, and thin-film photodetectors for SWIR. Since 2018, he has been with OMNIVISION, Santa Clara, CA, USA, where he currently manages a system design team within the office of the CTO. He has authored or coauthored more than 30 scientific articles and holds more than 30 pending or issued patents.

Dr. Suess currently serves on the Technical Program Committee of the International Image Sensor Workshop (IISW) and the subcommittee on Imagers, Medical, MEMS, and Displays of the International Solid-State Circuits Conference (ISSCC).

IEEE JOURNAL OF SOLID-STATE CIRCUITS, VOL. 58, NO. 11, NOVEMBER 2023 2965

A 113.3-dB Dynamic Range 600 Frames/s SPAD X-Ray Detector With Seamless Global Shutter and Time-Encoded Extrapolation Counter

Byungchoul Park, Member, IEEE, Hyun-Seung Choi, Student Member, IEEE, Jinwoong Jeong, Member, IEEE, Taewoo Kim, Member, IEEE, Myung-Jae Lee, Member, IEEE, and Youngcheol Chae, Senior Member, IEEE

Abstract— This article presents a 400 × 200 single-photon avalanche diode (SPAD) X-ray detector that supports seamless global shutter operation. The pixel consists of an SPAD with two time-encoded extrapolation counters. For use under low X-ray dose conditions, the pixels and readout circuitry operate in a fully digital manner, avoiding the noise penalty of the readout. Under high X-ray dose conditions, the proposed counter operates in the extrapolation mode and thus achieves a high dynamic range (HDR), while reducing the counter’s power consumption. This is achieved by capturing the time of the counter overflow ($T_{\mathrm{OF}}$) and expanding the counter depth by the ratio between the integration time ($T_{\mathrm{INT}}$) and $T_{\mathrm{OF}}$. To reconstruct $T_{\mathrm{OF}}$, a $\phi_{\mathrm{GCLK}}$ signal is distributed during $T_{\mathrm{INT}}$ and accumulated from $T_{\mathrm{OF}}$ to the end of $T_{\mathrm{INT}}$ by reusing the counter. This only requires distribution of the $\phi_{\mathrm{GCLK}}$ across the pixel array. Furthermore, seamless global shutter operation is realized through the use of two counters, which achieves a 100% temporal aperture for the X-ray photon. The proposed X-ray detector is implemented in a 1P5M 65-nm CMOS process. To be used for both medical diagnosis and industrial inspection, the pixel pitch is selected as 49.5 µm. The 400 × 200 detector achieves a dynamic range (DR) of 113.3 dB, while consuming only 127.2 mW. Compared with previous X-ray detectors, the proposed detector exhibits a 24.9-dB improvement in DR.

Index Terms— CMOS image sensor, digital photon counter, extrapolation counter, seamless global shutter, single-photon avalanche diode (SPAD), SPAD X-ray detector.

I. INTRODUCTION

RECENTLY, the demand for X-ray detectors using hard X-rays (λ < 0.1 nm) has increased. Because hard X-rays can penetrate dense substances, they are suitable for many applications such as medical diagnosis (computed tomography (CT), projection radiography, fluoroscopy, etc.) and industrial inspection (non-destructive testing, airport security, etc.) [1], [2], [3], [4], [5]. In both applications, X-ray detectors require a high frame rate and a high dynamic range (HDR) at the same time. Conventional approaches to HDR imaging, such as switching the full-well capacity of the photodetector [1] or synthesizing images with different integration times [6], [7], make X-ray detectors susceptible to motion artifacts that degrade the quality of the captured images. This is critical for CT and security applications, as the detectors need to capture images of moving objects. To address this problem, a global shutter X-ray detector with multiple integration capacitors that achieves HDR has been proposed [3]. However, conventional global shutter detectors require a certain amount of readout time, thus wasting temporal aperture during pixel readout [3], [8], [9]. Furthermore, because of its slow response, the X-ray source cannot be turned off immediately during pixel readout. Therefore, to achieve a 100% temporal aperture, a seamless global shutter operation was proposed [10]. It used an additional memory to store the result of the previous image and thus realized simultaneous photon integration and pixel readout. In addition, using a photodiode as the detector has a fundamental limitation: noise in the readout circuitry, e.g., 8.1 e⁻ in [3] and 67 e⁻ in [4], limits the minimum X-ray dose.

Manuscript received 11 May 2023; revised 11 July 2023; accepted 26 July 2023. Date of publication 21 August 2023; date of current version 24 October 2023. This article was approved by Associate Editor Mehdi Kiani. This work was supported by the Korea Medical Device Development Fund grant funded by the Korean Government (the Ministry of Science and ICT, the Ministry of Trade, Industry and Energy, the Ministry of Health and Welfare, and the Ministry of Food and Drug Safety) under Project RS_2020_KD000048 and Project 1711138024. (Corresponding author: Youngcheol Chae.)

Byungchoul Park and Youngcheol Chae are with the Department of Electrical and Electronic Engineering, Yonsei University, Seoul 03722, South Korea (e-mail: ychae@yonsei.ac.kr).

Hyun-Seung Choi and Myung-Jae Lee are with the Post-Silicon Semiconductor Institute, Korea Institute of Science and Technology (KIST), Seoul 02792, South Korea.

Jinwoong Jeong and Taewoo Kim are with Rayence, Hwaseong 18449, South Korea.

Color versions of one or more figures in this article are available at https://doi.org/10.1109/JSSC.2023.3302849.

Digital Object Identifier 10.1109/JSSC.2023.3302849
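A toy timing model can illustrate the benefit of a seamless (two-counter) global shutter. The Python sketch below assumes ideal photon arrival times and instantaneous handover between counters; the function names are hypothetical. One counter integrates the current frame while the other holds the previous frame for readout. For comparison, the sketch also models a single-counter pixel that is blind during a readout interval `t_readout`.

```python
from typing import List


def two_counter_seamless(photon_times: List[float], frame_time: float, n_frames: int) -> List[int]:
    """Ping-pong model: two counters alternate between integrating and holding a frame.

    Photon arrival times are assumed to be >= 0. While counter k % 2 integrates
    frame k, the other counter holds frame k - 1 for readout, so integration
    never pauses (100% temporal aperture).
    """
    counters = [0, 0]
    frames: List[int] = []
    times = sorted(photon_times)
    i = 0
    for k in range(n_frames):
        active = k % 2
        counters[active] = 0  # the integrating counter starts each frame from zero
        frame_end = (k + 1) * frame_time
        while i < len(times) and times[i] < frame_end:
            counters[active] += 1
            i += 1
        frames.append(counters[active])  # handed over for readout during frame k + 1
    return frames


def single_counter_with_readout(photon_times: List[float], frame_time: float,
                                t_readout: float, n_frames: int) -> List[int]:
    """One counter per pixel: it is blind while being read out at the end of each frame."""
    frames = []
    for k in range(n_frames):
        start = k * frame_time
        stop = start + frame_time - t_readout  # integration window shrinks by t_readout
        frames.append(sum(1 for t in photon_times if start <= t < stop))
    return frames


photons = [0.1, 0.5, 1.2, 1.3, 1.9, 2.5]
print(two_counter_seamless(photons, 1.0, 3))              # [2, 3, 1]
print(single_counter_with_readout(photons, 1.0, 0.3, 3))  # [2, 2, 1]
```

With a 0.3-unit readout interval, the single-counter pixel misses the photon arriving at t = 1.9. The ping-pong arrangement counts all six photons, which is what a 100% temporal aperture means in this model.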

To lower the minimum X-ray dose, a single-photon avalanche diode (SPAD) can be used together with a digital counter and a fully digital readout, which is free from readout noise. However, many counter bits are required to increase the dynamic range (DR) of a photon-counting detector. This significantly increases both the area and the power consumption of the counter. To overcome this problem, an extrapolation counting (EXT) method has been proposed [11], [12], [13]. It limits the activation of the SPAD and counter while preserving their DR. This method has several advantages for conventional imagers, since the pixel circuit can be implemented with a compact pixel pitch. However, X-ray photons cannot be focused with conventional lenses, so the detector must be as large as the target, which poses additional challenges. Therefore, no clinical-grade SPAD X-ray detectors have been reported yet.
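A back-of-the-envelope calculation shows why plain photon counting needs many bits. Assume the DR of an ideal counter is $20\log_{10}$ of its full-scale count, with a floor of one count. This definition is an assumption; the article does not define DR this way here. Under it, each bit adds about 6.02 dB. Covering the 113.3 dB reported for this detector with a plain counter would then take 19 bits. The function names below are illustrative.

```python
import math


def counter_dr_db(n_bits: int) -> float:
    """DR of an ideal N-bit photon counter, taking one count as the noise floor (assumption)."""
    return 20 * math.log10(2 ** n_bits)


def bits_for_dr(dr_db: float) -> int:
    """Smallest plain-counter depth whose full scale spans the requested DR."""
    ratio = 10 ** (dr_db / 20)  # max-to-min count ratio implied by the DR
    return math.ceil(math.log2(ratio))


print(round(counter_dr_db(10), 1))  # 60.2
print(bits_for_dr(113.3))           # 19
```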

0018-9200 © 2023 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See https://www.ieee.org/publications/rights/index.html for more information.

This article presents the first SPAD X-ray detector. It extends [14] with a comprehensive analysis of the extrapolation counter, a detailed discussion of its implementation, and additional measurement results. The 400 × 200 detector is implemented in a 1P5M 65-nm CMOS process and achieves a 49.5-µm pixel pitch. It uses an SPAD as the detector and captures its output pulses with two counters, realizing seamless global shutter operation. In addition, the proposed pixel and its readout circuitry are implemented in a fully digital manner, achieving a frame rate of 600 frames/s. To achieve HDR and low power consumption, a time-encoded extrapolation counter is proposed. It reuses the counter to capture the time of counter overflow ($T_{\mathrm{OF}}$) and to estimate the number of incident photons during the integration time ($T_{\mathrm{INT}}$). This allows the DR to be extended by the ratio between $T_{\mathrm{INT}}$ and $T_{\mathrm{OF}}$, resulting in a DR of 113.3 dB while consuming only 127.2 mW. Compared with previous X-ray detectors [1], [2], this work achieves a 24.9-dB higher DR. Compared with the state-of-the-art global shutter X-ray detector [3], this work achieves a competitive DR while supporting seamless global shutter operation and providing digital outputs. Compared with state-of-the-art SPAD imagers, this work achieves a competitive DR and a high frame rate. In addition, the proposed detector provides good radiation hardness and can guarantee operation up to 8000 Gy.
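The abstract outlines the time-encoded reconstruction of $T_{\mathrm{OF}}$: after overflow, the counter is reused to accumulate $\phi_{\mathrm{GCLK}}$ until the end of $T_{\mathrm{INT}}$. The Python sketch below models this behaviorally under stated assumptions; it is not the pixel circuit. The number of $\phi_{\mathrm{GCLK}}$ edges per frame ($2^N$), their timing, and the counter clear at overflow are assumptions made for illustration. The name `time_encoded_extrapolation` is hypothetical.

```python
import math
from typing import List


def time_encoded_extrapolation(photon_times: List[float], t_int: float, n_bits: int) -> float:
    """Behavioral sketch of a time-encoded extrapolation counter (one pixel, one frame).

    ASSUMPTIONS (not taken from the article): phi_GCLK has exactly 2**n_bits
    rising edges per T_INT, at k * T_INT / 2**n_bits for k = 1..2**n_bits,
    and the counter is cleared at overflow before it starts counting those edges.
    """
    full_scale = 2 ** n_bits
    t_gclk = t_int / full_scale  # assumed phi_GCLK period
    count = 0
    for t in sorted(photon_times):
        if t >= t_int:
            break
        count += 1
        if count == full_scale:  # overflow at T_OF = t
            # Reuse the counter: count phi_GCLK edges between T_OF and the end of T_INT.
            gclk_edges = full_scale - math.floor(t / t_gclk)
            t_of_est = t_int - gclk_edges * t_gclk  # reconstructed (quantized) T_OF
            if t_of_est <= 0:
                raise ValueError("overflow within the first phi_GCLK period: out of range")
            return t_int / t_of_est * full_scale  # extrapolate with the reconstructed T_OF
    return float(count)  # no overflow: the photon count itself is the result


photons = [0.01 * i for i in range(1, 100)]  # 99 evenly spaced photons, T_INT = 1
print(time_encoded_extrapolation(photons, 1.0, 4))     # 128.0
print(time_encoded_extrapolation([0.2, 0.4], 1.0, 4))  # 2.0
```

The reconstructed $T_{\mathrm{OF}}$ is quantized downward, so the estimate errs high. In this deliberately coarse 4-bit example it gives 128 against 99 true photons. Under the assumptions above, a deeper counter reduces this error. The circuit-level point in the text is that only one global clock line, rather than a multi-bit code, has to be routed to the pixels.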

This article is organized as follows. Section II describes the background of the extrapolation counting method. Section III presents the implementation of the proposed time-encoded extrapolation counter. Section IV describes the implementation of the proposed SPAD X-ray detector. The measurement results are presented in Section V. Finally, Section VI concludes the article.

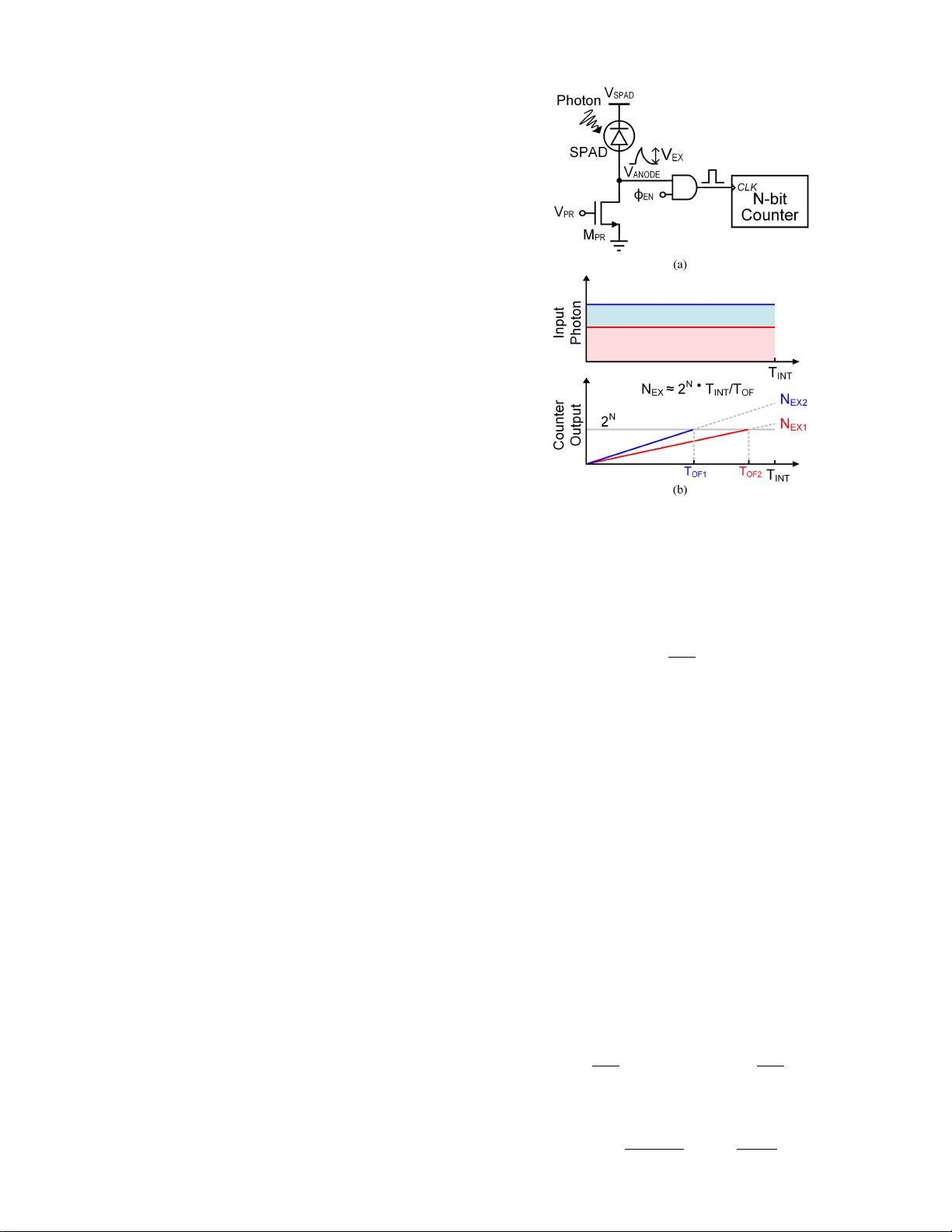

II. EXTRAPOLATION COUNTING METHOD

A. Operation Principle

An SPAD is a reverse-biased photodiode biased by the sum of an excess bias voltage ($V_{\mathrm{EX}}$) and the breakdown voltage ($V_{\mathrm{BR}}$) of the junction. To detect a photon, a passive recharge transistor ($M_{\mathrm{PR}}$) and an AND gate are attached to the SPAD [see Fig. 1(a)]. An incident photon and the resulting impact ionization generate a voltage pulse with a magnitude of $V_{\mathrm{EX}}$ at the SPAD’s anode. This pulse propagates to an $N$-bit counter, which is enabled by a control signal ($\phi_{\mathrm{EN}}$). The anode of the SPAD is then recharged to ground by $M_{\mathrm{PR}}$ for the next photon detection. Thanks to their ability to detect single photons, SPADs are used in many applications [11], [12], [13], [14], [15], [16], [17], [18], [19]. An analog counter is a possible candidate for single-photon counting thanks to its compact design [19], [20], [21], [22], [23]. However, the analog counter has non-idealities such as limited linearity (∼9-bit) [20] and non-uniformity (∼1.9%) [21], so it is not a promising solution for X-ray applications. A digital counter with a large bit depth can accommodate the HDR. However, the power consumption of the counter is proportional to the number of incident photons, so the counter consumes a significant amount of power, especially under high X-ray dose conditions.

To circumvent this, an extrapolation counting method has been proposed [11], [13] that measures $T_{\mathrm{OF}}$ and extends the counter's effective depth by the ratio between $T_{\mathrm{INT}}$ and $T_{\mathrm{OF}}$, since $T_{\mathrm{OF}}$ is inversely proportional to the intensity of the incident light, as shown in Fig. 1(b). The extrapolated count during $T_{\mathrm{INT}}$ ($N_{\mathrm{EX}}$) can be calculated as follows:

$$N_{\mathrm{EX}} = \frac{T_{\mathrm{INT}}}{T_{\mathrm{OF}}} \cdot 2^{N}. \tag{1}$$

Fig. 1. (a) Single-photon counting with SPAD and counter and (b) concept of the extrapolation counting method.

This allows the power consumption of the counter to be reduced by the ratio $T_{\mathrm{INT}}/T_{\mathrm{OF}}$, which can reach several hundred times, while preserving the overall DR.
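Equation (1) and the power-reduction ratio can be written directly as a small Python sketch; the function names are illustrative. The reduction factor assumes, as the text implies, that the counter stops toggling on photons once it overflows. It therefore toggles $2^N$ times instead of $N_{\mathrm{EX}}$ times.

```python
def extrapolated_count(t_int: float, t_of: float, n_bits: int) -> float:
    """Eq. (1): N_EX = (T_INT / T_OF) * 2**N."""
    if not 0 < t_of <= t_int:
        raise ValueError("T_OF must satisfy 0 < T_OF <= T_INT")
    return t_int / t_of * 2 ** n_bits


def counter_activity_reduction(t_int: float, t_of: float) -> float:
    """Factor by which counter toggling (and hence counter power) drops: T_INT / T_OF."""
    return t_int / t_of


print(extrapolated_count(1.0, 0.25, 10))      # 4096.0
print(counter_activity_reduction(1.0, 0.25))  # 4.0
```

For example, suppose a 10-bit counter overflows at one quarter of $T_{\mathrm{INT}}$. The count extrapolates to 4096, while the counter toggles only 1024 times, a fourfold reduction.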

B. Conventional Extrapolation Counting Method

Fig. 2(a) shows the implementation of the conventional extrapolation counting method [11]. The pixel consists of an SPAD, a passive recharge circuit, an $N$-bit counter, and an overflow latch. To recover $T_{\mathrm{OF}}$, $N$-bit time codes (TCs) are applied globally to the pixel array. The TCs divide $T_{\mathrm{INT}}$ into equal intervals of $T_{\mathrm{INT}}/2^{N}$, and their value increases linearly from 0 to $2^{N} - 1$. During $T_{\mathrm{INT}}$, the SPAD captures incident photons, which are accumulated in the counter. When the counter has accumulated $2^{N}$ photons, it overflows (at $T_{\mathrm{OF}}$), and the overflow latch stores the counter’s overflow information (OF latch = 1). Subsequently, the counter is reconfigured as a latch. It captures the TC value at that moment ($M_1$) as its output ($N_{\mathrm{OUT1}}$), as shown in Fig. 2(b). In this way, $T_{\mathrm{OF}}$ is measured, and its range can be represented as

$$M_1 \cdot \frac{T_{\mathrm{INT}}}{2^{N}} < T_{\mathrm{OF}} < (M_1 + 1) \cdot \frac{T_{\mathrm{INT}}}{2^{N}}. \tag{2}$$

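As a result, $N_{\mathrm{EX}}$ can be calculated from (1) and rounded up as

$$N_{\mathrm{EX}} = \frac{T_{\mathrm{INT}} \cdot 2^{N}}{M_1 \cdot T_{\mathrm{INT}}} \cdot 2^{N} = \frac{2^{N}}{N_{\mathrm{OUT1}}} \cdot 2^{N}. \tag{3}$$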
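The conventional TC-based scheme of (2) and (3) can be modeled in a behavioral Python sketch. The function name is hypothetical, photon times are ideal, and the TC value at time $t$ is taken as $\lfloor t/(T_{\mathrm{INT}}/2^N)\rfloor$; these are assumptions for illustration. At overflow, the counter latches the TC value $M_1$ and the sketch evaluates (3). The case $N_{\mathrm{OUT1}} = 0$, for which (3) is undefined, is simply rejected.

```python
import math
from typing import List


def conventional_extrapolation(photon_times: List[float], t_int: float, n_bits: int) -> float:
    """Behavioral sketch of the conventional TC-based extrapolation counter, eqs. (2)-(3)."""
    full_scale = 2 ** n_bits
    step = t_int / full_scale  # one TC step: T_INT / 2**N
    count = 0
    for t in sorted(photon_times):
        if t >= t_int:
            break
        count += 1
        if count == full_scale:  # overflow: OF latch = 1
            m1 = math.floor(t / step)  # TC value latched at T_OF, so N_OUT1 = M_1
            if m1 == 0:
                raise ValueError("N_OUT1 = 0: eq. (3) is undefined")
            # Eq. (2) bounds T_OF between M_1 * step and (M_1 + 1) * step;
            # eq. (3) uses the lower bound, which rounds N_EX up.
            return full_scale / m1 * full_scale
    return float(count)  # no overflow: read the photon count directly


photons = [0.01 * i for i in range(1, 100)]  # 99 evenly spaced photons, T_INT = 1
print(conventional_extrapolation(photons, 1.0, 4))  # 128.0
```

With 99 evenly spaced photons in $T_{\mathrm{INT}} = 1$ and $N = 4$, the 16th photon arrives at 0.16, so $M_1 = 2$. Equation (3) then gives $(16/2)\cdot 16 = 128$, an overestimate caused by rounding $T_{\mathrm{OF}}$ down to the TC boundary.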
