6

function. KG2E uses two scoring methods, i.e, asymmetric

KL-divergence and symmetric expected likelihood. While the

scoring function of ManifoldE is defined as

f

r

(h, t) =

M(h, r, t) − D

2

r

2

, (7)

where

M

is the manifold function, and

D

r

is a relation-specific

manifold parameter.

2) Semantic Matching: Another direction is to calculate

the semantic similarity. SME [39] proposes to semantically

match separate combinations of entity-relation pairs of

(h, r)

and

(r, t)

. Its scoring function is defined with two versions of

matching blocks - linear and bilinear block, i.e.,

f

r

(h, t) = g

left

(h, r)

>

g

right

(r, t). (8)

The linear matching block is defined as

g

left

(h, t) = M

l,1

h

>

+

M

l,2

r

>

+ b

>

l

, and the bilinear form is

g

left

(h, r) = (M

l,1

h) ◦

(M

l,2

r)+b

>

l

. By restricting relation matrix

M

r

to be diagonal

for multi-relational representation learning, DistMult [32]

proposes a simplified bilinear formulation defined as

f

r

(h, t) = h

>

diag(M

r

)t. (9)

To capture productive interactions in relational data and

compute efficiently, HolE [20] introduces a circular correlation

of embedding, which can be interpreted as a compressed

tensor product, to learn compositional representations. By

defining a perturbed holographic compositional operator as

p(a, b; c) = (c ◦a)? b

, where

c

is a fixed vector, the expanded

holographic embedding model HolEx [40] interpolates the

HolE and full tensor product method. It can be viewed as linear

concatenation of perturbed HolE. Focusing on multi-relational

inference, ANALOGY [21] models analogical structures of

relational data. It’s scoring function is defined as

f

r

(h, t) = h

>

M

r

t, (10)

with relation matrix constrained to be normal matrices in linear

mapping, i.e.,

M

>

r

M

r

= M

r

M

>

r

for analogical inference.

Crossover interactions are introduced by CrossE [41] with an

interaction matrix

C ∈ R

n

r

×d

to simulate the bi-directional

interaction between entity and relation. The relation specific

interaction is obtained by looking up interaction matrix as

c

r

= x

>

r

C

. By combining the interactive representations and

matching with tail embedding, the scoring function is defined

as

f(h, r, t) = σ

tanh (c

r

◦ h + c

r

◦ h ◦ r + b) t

>

. (11)

The semantic matching principle can be encoded by neural

networks further discussed in Sec. III-C.

The two methods mentioned above in Sec.

III-A

4 with group

representation also follow the semantic matching principle. The

scoring function of TorusE [30] is defined as:

min

(x,y)∈([h]+[r])×[t]

kx − yk

i

. (12)

By modeling

2L

relations as group elements, the scoring

function of DihEdral [31] is defined as the summation of

components:

f

r

(h, t) = h

>

Rt =

L

X

l=1

h

(l)>

R

(l)

t

(l)

, (13)

where the relation matrix

R

is defined in block diagonal form

for

R

(l)

∈ D

K

, and entities are embedded in real-valued space

for h

(l)

and t

(l)

∈ R

2

.

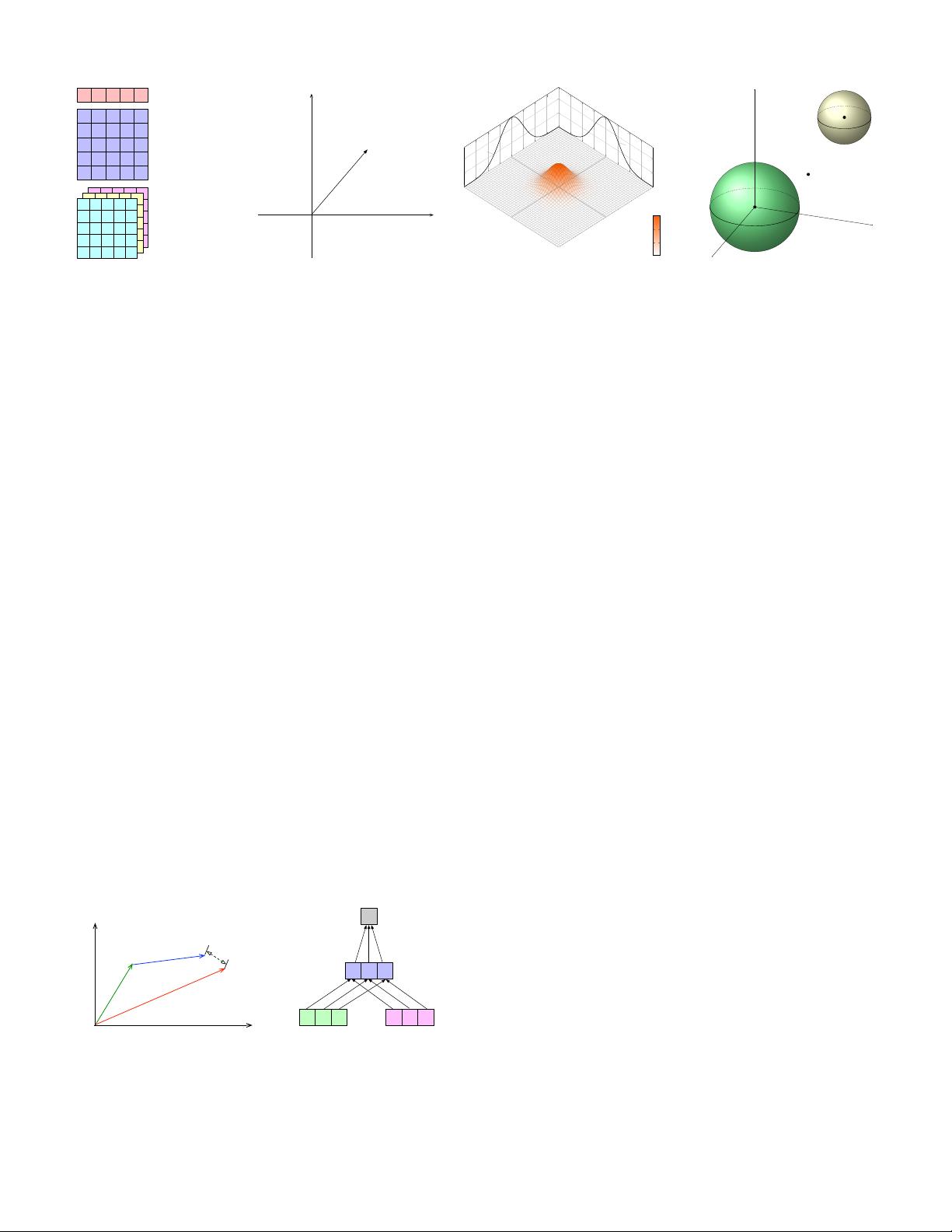

C. Encoding Models

This section introduces models that encode the interactions

of entities and relations through specific model architectures,

including linear/bilinear models, factorization models, and

neural networks. Linear models formulate relations as a

linear/bilinear mapping by projecting head entities into a

representation space close to tail entities. Factorization aims

to decompose relational data into low-rank matrices for

representation learning. Neural networks encode relational data

with non-linear neural activation and more complex network

structures. Several neural models are illustrated in Fig. 6.

1) Linear/Bilinear Models: Linear/bilinear models encode

interactions of entities and relations by applying linear operation

as:

g

r

(h, t) = M

T

r

h

t

, (14)

or bilinear transformation operations as Eq. 10. Canonical

methods with linear/bilinear encoding include SE [8], SME [39],

DistMult [32], ComplEx [22], and ANALOGY [21]. For

TransE [15] with L2 regularization, the scoring function can

be expanded to the form with only linear transformation with

one-dimensional vectors, i.e.,

kh + r − tk

2

2

= 2r

T

(h − t) − 2h

T

t + krk

2

2

+ khk

2

2

+ ktk

2

2

. (15)

Wang et al. [46] studied various bilinear models and eval-

uated their expressiveness and connections by introducing

the concepts of universality and consistency. The authors

further showed that the ensembles of multiple linear models

can improve the prediction performance through experiments.

Recently, to solve the independence embedding issue of entity

vectors in canonical Polyadia decomposition, SimplE [47]

introduces the inverse of relations and calculates the average

canonical Polyadia score of (h, r, t) and (t, r

−1

, h) as

f

r

(h, t) =

1

2

h ◦ rt + t ◦ r

0

t

, (16)

where

r

0

is the embedding of inversion relation. More bilinear

models are proposed from a factorization perspective discussed

in the next section.

2) Factorization Models: Factorization methods formulated

KRL models as three-way tensor

X

decomposition. A general

principle of tensor factorization can be denoted as

X

hrt

≈

h

>

M

r

t

, with the composition function following the semantic

matching pattern. Nickel et al. [48] proposed the three-way

rank-

r

factorization RESCAL over each relational slice of

knowledge graph tensor. For

k

-th relation of

m

relations, the

k-th slice of X is factorized as

X

k

≈ AR

k

A

T

. (17)

The authors further extended it to handle attributes of entities

efficiently [49]. Jenatton et al. [50] then proposed a bilinear

structured latent factor model (LFM), which extends RESCAL

by decomposing

R

k

=

P

d

i=1

α

k

i

u

i

v

>

i

. By introducing three-

way Tucker tensor decomposition, TuckER [51] learns to