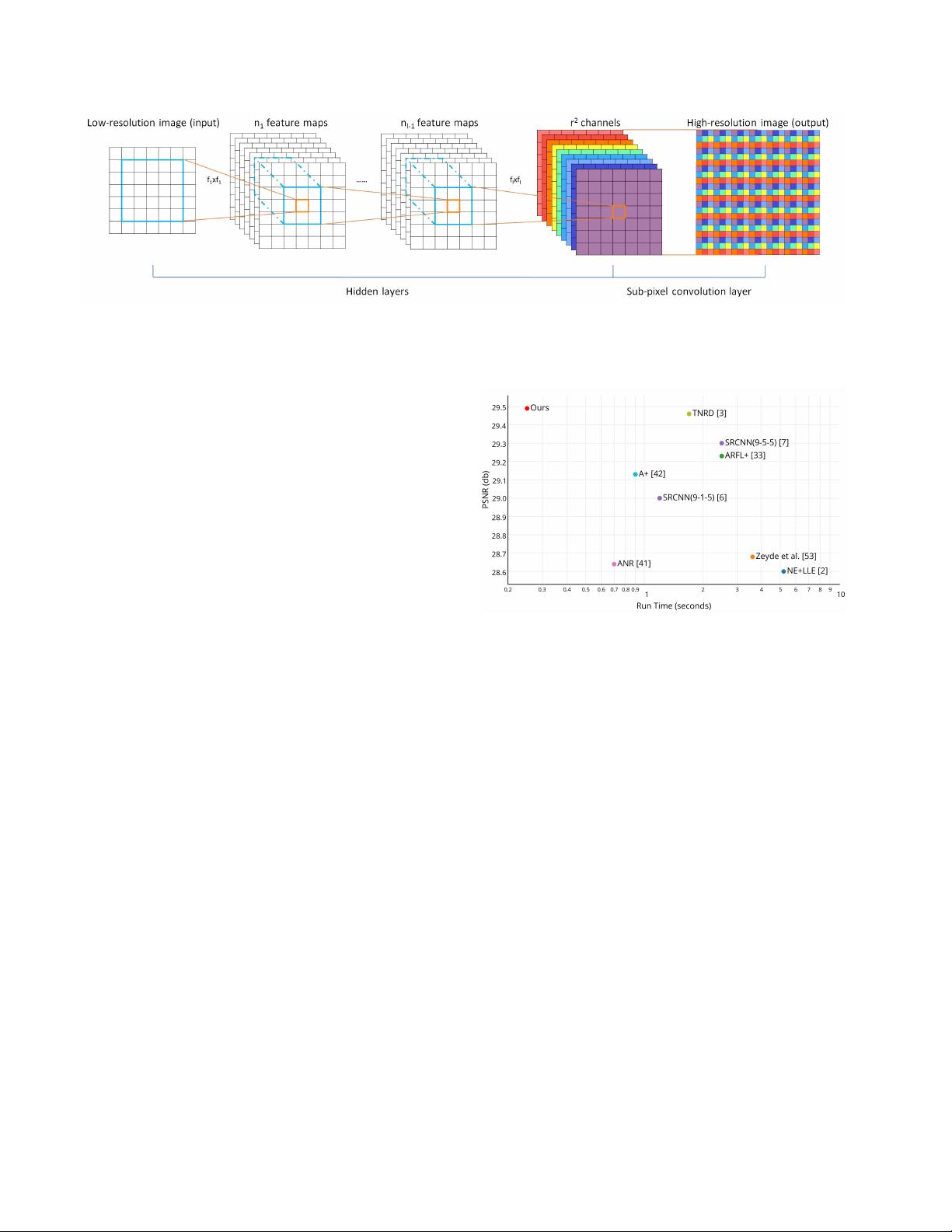

Figure 1. The proposed efficient sub-pixel convolutional neural network (ESPCN), with two convolution layers for feature map extraction and a sub-pixel convolution layer that aggregates the feature maps from LR space and builds the SR image in a single step.

based [9, 18, 46, 12] and patch-based [2, 43, 52, 13, 54, 40, 5] methods. A detailed review of more generic SISR methods can be found in [45]. One family of approaches that has recently thrived in tackling the SISR problem is sparsity-based techniques. Sparse coding is an effective mechanism that assumes any natural image can be sparsely represented in a transform domain. This transform domain is usually a dictionary of image atoms [25, 10], which can be learnt through a training process that tries to discover the correspondence between LR and HR patches. This dictionary is able to embed the prior knowledge necessary to constrain the ill-posed problem of super-resolving unseen data. This approach is adopted in the methods of [47, 8]. A drawback of sparsity-based techniques is that enforcing the sparsity constraint requires a nonlinear reconstruction, which is generally computationally expensive.
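To make the cost of this nonlinear reconstruction concrete, the sketch below computes the sparse code of a single patch with the iterative shrinkage-thresholding algorithm (ISTA), one standard solver for the ℓ1-regularised least-squares problem. It is an illustrative assumption, not necessarily the solver used in [47, 8]; the dictionary `D`, the weight `lam`, the iteration count and the helper names are hypothetical. Every patch requires a loop of matrix products and shrinkage steps, which is where the expense comes from.

```python
import numpy as np


def soft_threshold(x, tau):
    # Proximal operator of tau * ||x||_1: shrinks every entry toward zero by tau
    # and sets entries whose magnitude is below tau exactly to zero.
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)


def ista_sparse_code(D, y, lam, n_iter=200):
    """Approximately solve min_a 0.5 * ||y - D a||^2 + lam * ||a||_1 with ISTA.

    D: (patch_dim, n_atoms) dictionary whose columns are the atoms.
    y: (patch_dim,) vectorised image patch.
    Returns the sparse code a with shape (n_atoms,).
    """
    # Step size 1/L, where L is the largest eigenvalue of D^T D
    # (the squared spectral norm of D).
    L = np.linalg.norm(D, ord=2) ** 2
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        grad = D.T @ (D @ a - y)                     # gradient of the data-fit term
        a = soft_threshold(a - grad / L, lam / L)    # nonlinear shrinkage step
    return a


def objective(D, y, a, lam):
    # Value of the l1-regularised least-squares cost being minimised.
    return 0.5 * np.sum((y - D @ a) ** 2) + lam * np.sum(np.abs(a))


# Usage: a random, column-normalised dictionary and a patch built from 3 atoms.
rng = np.random.default_rng(0)
D = rng.standard_normal((16, 64))
D /= np.linalg.norm(D, axis=0)
a_true = np.zeros(64)
a_true[[3, 17, 40]] = [1.0, -0.5, 2.0]
y = D @ a_true
a_hat = ista_sparse_code(D, y, lam=0.05)
print(np.count_nonzero(a_hat), objective(D, y, a_hat, 0.05))
```

With step size 1/L each ISTA iteration cannot increase the objective, so the returned code is always at least as good as the all-zero code; how many iterations are needed in practice depends on the dictionary and on `lam`.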

Image representations derived via neural networks [21, 49, 34] have recently also shown promise for SISR. These methods employ the back-propagation algorithm [22] to train on large image databases such as ImageNet [30] in order to learn nonlinear mappings between LR and HR image patches. Stacked collaborative local auto-encoders are used in [4] to super-resolve the LR image layer by layer. Osendorfer et al. [27] suggested a method for SISR based on an extension of the predictive convolutional sparse coding framework [29]. A multi-layer convolutional neural network (CNN) inspired by sparse-coding methods is proposed in [7]. Chen et al. [3] proposed to use multi-stage trainable nonlinear reaction diffusion (TNRD) as an alternative to CNNs, where both the weights and the nonlinearity are trainable. Wang et al. [44] trained a cascaded sparse coding network end to end, inspired by LISTA (learned iterative shrinkage and thresholding algorithm) [16], to fully exploit the natural sparsity of images. The learned model is not limited to neural networks; for example, a random forest [31] has also been successfully used for SISR.
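The unrolling idea behind LISTA [16] can be sketched in a few lines: each ISTA iteration becomes a network layer consisting of a linear part followed by a shrinkage nonlinearity, and the matrices become trainable. This is a hedged illustration of that idea only, not the cascaded network of [44]. It shows the forward pass, with hypothetical helpers `lista_init_from_ista` and `lista_forward`, and initialises the weights so that an untrained network reproduces a fixed number of ISTA iterations; training would then adjust `W`, `S` and `theta` by back-propagation.

```python
import numpy as np


def soft_threshold(x, theta):
    # Elementwise shrinkage: the nonlinearity applied in every LISTA layer.
    return np.sign(x) * np.maximum(np.abs(x) - theta, 0.0)


def lista_init_from_ista(D, lam):
    """Weights for which an untrained LISTA network reproduces ISTA exactly.

    In LISTA, W, S and theta would subsequently be learned by back-propagation;
    this function only provides that starting point.
    """
    L = np.linalg.norm(D, ord=2) ** 2           # Lipschitz constant of the data term
    W = D.T / L                                 # maps the input patch to code space
    S = np.eye(D.shape[1]) - (D.T @ D) / L      # couples code entries across layers
    theta = lam / L                             # shrinkage threshold
    return W, S, theta


def lista_forward(y, W, S, theta, n_layers):
    """Forward pass of an n_layers-deep LISTA network (ISTA unrolled n_layers times)."""
    b = W @ y                                   # computed once, shared by all layers
    a = soft_threshold(b, theta)                # layer 1 (ISTA step starting from a = 0)
    for _ in range(n_layers - 1):
        a = soft_threshold(b + S @ a, theta)    # layers 2..n_layers
    return a


# Usage: a fixed-depth network produces a code with a fixed amount of computation.
rng = np.random.default_rng(1)
D = rng.standard_normal((16, 32))
D /= np.linalg.norm(D, axis=0)
y = rng.standard_normal(16)
W, S, theta = lista_init_from_ista(D, lam=0.1)
code = lista_forward(y, W, S, theta, n_layers=5)
print(code.shape)
```

Because `S a + b` equals `a - D^T (D a - y) / L`, each layer performs exactly one ISTA step at initialisation; the appeal of learning the weights is that a shallow network can then approximate the result of many more iterations.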

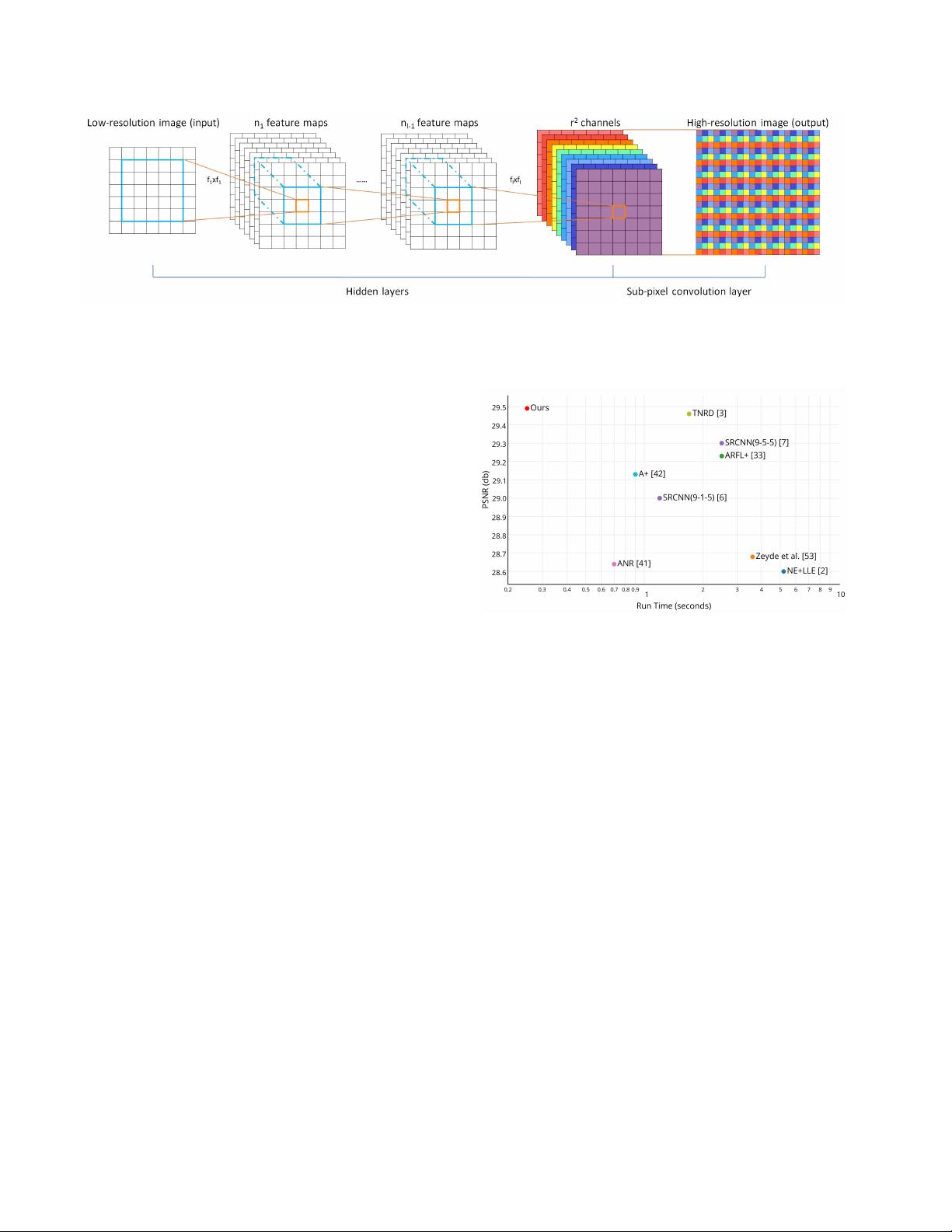

Figure 2. Plot of the trade-off between accuracy and speed for different methods when performing SR upscaling with a scale factor of 3. The results present the mean PSNR and run-time over the images from Set14, run on a single CPU core clocked at 2.0 GHz.
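For reference, the accuracy axis of such a plot is the peak signal-to-noise ratio, PSNR = 10 log10(MAX² / MSE), averaged over the test images. The sketch below assumes 8-bit images (MAX = 255); evaluation details such as the colour channel used and any border cropping are not stated in the caption and are deliberately left out. The helper names and the synthetic arrays are hypothetical placeholders, not Set14 data.

```python
import numpy as np


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(max_value^2 / MSE)."""
    ref = np.asarray(reference, dtype=np.float64)
    est = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((ref - est) ** 2)
    if mse == 0:
        return float("inf")                     # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)


def mean_psnr(pairs, max_value=255.0):
    # Average PSNR over (reference, estimate) pairs, e.g. the images of a test set.
    return float(np.mean([psnr(r, e, max_value) for r, e in pairs]))


# Usage with two synthetic 8x8 "images" that differ by a constant offset.
hr = np.full((8, 8), 100.0)
pairs = [(hr, hr + 1.0), (hr, hr + 10.0)]
print(round(mean_psnr(pairs), 2))
```

Since PSNR is logarithmic in the MSE, a tenfold increase in per-pixel error lowers it by 20 dB.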

1.2. Motivations and contributions

With the development of CNNs, the efficiency of the algorithms, especially their computational and memory cost, has gained importance [36]. The flexibility of deep network models to learn nonlinear relationships has been shown to attain superior reconstruction accuracy compared to previous hand-crafted models [27, 7, 44, 31, 3]. To super-resolve an LR image into HR space, it is necessary to increase the resolution of the LR image to match that of the HR image at some point.
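Where in the network this increase happens matters for cost, because a convolution layer's work is proportional to the number of spatial positions it is applied at. The sketch below counts multiply-accumulate operations (MACs) for stride-1 convolutions with "same" padding and a hypothetical three-layer configuration chosen only for illustration; it ignores biases, nonlinearities, the cost of the upscaling itself, and how a network running purely in LR space would produce its HR output.

```python
def conv_macs(height, width, k, c_in, c_out):
    # Multiply-accumulates for a stride-1, "same"-padded k x k convolution:
    # one k*k*c_in dot product per output pixel and per output channel.
    return height * width * k * k * c_in * c_out


def network_macs(height, width, layers):
    # layers: list of (k, c_in, c_out) tuples, all applied at the same resolution.
    return sum(conv_macs(height, width, k, c_in, c_out) for k, c_in, c_out in layers)


# Hypothetical 3-layer configuration, chosen only for illustration.
layers = [(5, 1, 64), (3, 64, 32), (3, 32, 1)]
r = 3                     # upscaling factor
h_lr, w_lr = 100, 100     # LR input size in pixels

cost_lr = network_macs(h_lr, w_lr, layers)            # convolutions run in LR space
cost_hr = network_macs(r * h_lr, r * w_lr, layers)    # input upscaled before layer 1
print(cost_lr, cost_hr, cost_hr / cost_lr)
```

Every term is proportional to height × width, so upscaling by r before the first layer multiplies the cost of every layer by r², a factor of 9 for the scale factor of 3 used in Figure 2.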

In Osendorfer et al. [27], the image resolution is increased gradually in the middle of the network. Another popular approach is to increase the resolution before or at the first layer of the network [7, 44, 3]. However, this approach has a number of drawbacks. Firstly, increasing the resolution of the LR images before the image enhancement step increases the computational complexity. This is especially problematic for convolutional networks, where the processing speed directly depends on the input