

HDL Model Functional Verification: Writing Testbenches, Second Edition

Updated 2024-07-21 · PDF, 6.08 MB

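*Writing Testbenches: Functional Verification of HDL Models, Second Edition* is a classic text by Janick Bergeron of Qualis Design Corporation, published by Kluwer Academic Publishers. It focuses on testbench design and functional verification of hardware description language (HDL) models, and this second edition aims to help readers understand and practice building effective and efficient hardware verification environments.

The core content of the book covers the following areas:

1. **Testbench fundamentals**: The author first introduces the concept of a testbench and stresses its importance in hardware design verification, particularly for complex integrated circuits (ICs). A testbench is more than program code: it is the key tool for modeling system behavior and for driving and monitoring the design components.

2. **Functional verification of HDL models**: A detailed explanation of how to use HDLs such as VHDL or Verilog to build and verify hardware models, including structured design, modular development, interface definition, and test-data generation.

3. **Test strategies and methods**: The book discusses different test strategies, such as boundary-value analysis, path coverage, and random testing, and how to choose appropriate methods to ensure that the model is correct and complete.

4. **Building the test environment**: Covers the selection of test tools, the use of debuggers, simulator configuration, and interface design with real hardware, so that readers can build a complete verification flow.

5. **Case studies and practical examples**: The book provides many examples showing how to apply the theory to real projects, deepening understanding of testbench design techniques through hands-on practice.

6. **Newer techniques and trends**: As a second edition, the book likely covers HDL language features, tool updates, and industry standards that were current at the time, reflecting the practice of its day.

The copyright notice states that the book was published in 2003 by Kluwer Academic Publishers. All rights are reserved: no part may be reproduced, stored, or transmitted without permission.

*Writing Testbenches* is an accessible yet thorough guide that offers a valuable learning resource to HDL design and verification professionals, with high reference value in both academia and industry. For students and engineers who want to study testbench design systematically, it is an indispensable reference.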

What is Verification?

model of the universe as far as the design is concerned. The verification challenge is to determine what input patterns to supply to the design and what output a properly working design should produce in response to those input patterns.

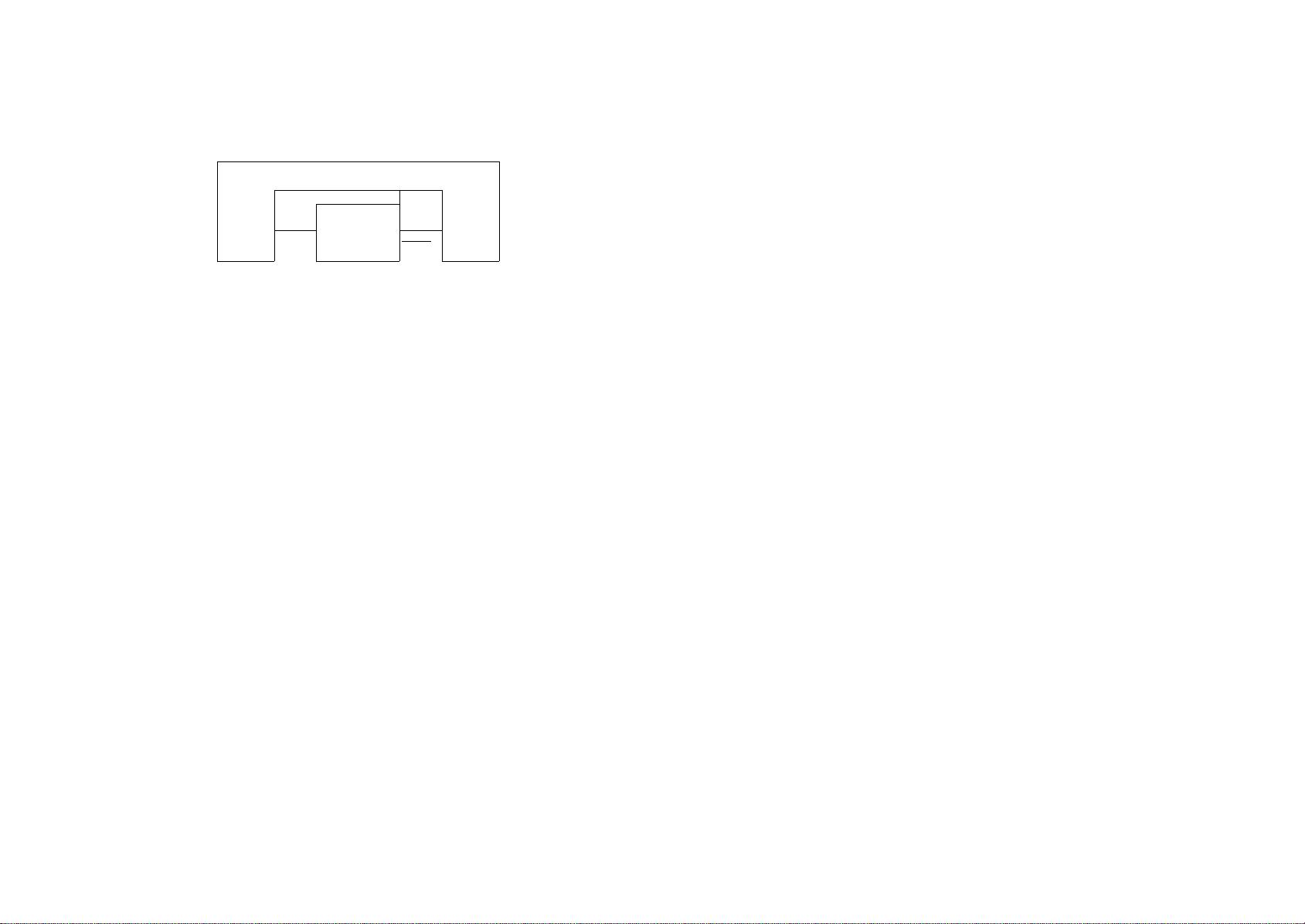

Testbench

Design

under

Verification

Design

under

Verification

P
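To make the closed-loop structure concrete, here is a minimal sketch in Python rather than an HDL, so that it runs stand-alone. The design under verification is a hypothetical 4-bit adder model (`duv_add4`), and the expected responses come from a separately written function (`expected_add4`); both names are illustrative assumptions, not code from the book. The testbench chooses the input patterns, drives them into the design, and compares every output with the expected one.

```python
from typing import Callable, Iterable, List, Tuple

Result = Tuple[int, int]  # (sum, carry_out)

def duv_add4(a: int, b: int) -> Result:
    """Hypothetical design under verification: a 4-bit adder."""
    total = a + b
    return total & 0xF, (total >> 4) & 0x1

def expected_add4(a: int, b: int) -> Result:
    """Expected response of a properly working adder, written independently."""
    return (a + b) % 16, int(a + b > 15)

def run_testbench(
    duv: Callable[[int, int], Result],
    expected: Callable[[int, int], Result],
    patterns: Iterable[Tuple[int, int]],
) -> List[Tuple[int, int, Result, Result]]:
    """Drive each input pattern into the design and collect every mismatch."""
    mismatches = []
    for a, b in patterns:
        got, want = duv(a, b), expected(a, b)
        if got != want:
            mismatches.append((a, b, got, want))
    return mismatches

if __name__ == "__main__":
    # The testbench is a closed system: it creates all inputs and checks all outputs.
    patterns = [(a, b) for a in range(16) for b in range(16)]
    errors = run_testbench(duv_add4, expected_add4, patterns)
    print(f"{len(patterns)} patterns applied, {len(errors)} mismatches")
```

With the two models as written, all 256 patterns match; replacing `duv_add4` with a faulty model makes the mismatch list non-empty.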

THE IMPORTANCE OF VERIFICATION

If you look at a typical book on Verilog or VHDL, you will find that most of the chapters are devoted to describing the syntax and semantics of the language. You will also invariably find two or three chapters on synthesizable coding style or the Register Transfer Level (RTL) subset.

Most often, only a single chapter is dedicated to testbenches. Very little can be adequately explained in one chapter, and these explanations are usually very simplistic. In nearly all cases, these books limit the techniques described to applying simple sequences of vectors in a synchronous fashion. The output is then verified using a waveform viewing tool. Most books also take advantage of the topic to introduce the file input mechanisms offered by the language, devoting yet more content to detailed syntax and semantics.

Given the significant proportion of literature devoted to writing synthesizable VHDL or Verilog code compared to writing testbenches to verify their functional correctness, you could be tempted to conclude that the former is a more daunting task than the latter. The evidence found in all hardware design teams points to the contrary.

Today, in the era of multi-million gate ASICs, reusable intellectual property (IP), and system-on-a-chip (SoC) designs, verification consumes about 70% of the design effort. Design teams, properly staffed to address the verification challenge, include engineers dedicated to verification. The number of verification engineers can be up to twice the number of RTL designers.

2 Writing Testbenches: Functional Verification of HDL Models

Figure 1-1. Generic structure of a testbench and design under verification

Most books focus on syntax, semantics and the RTL subset.

70% of design effort goes to verification.


Verification is on the critical path.

Verification time can be reduced through parallelism.

Verification time can be reduced through abstraction.

Using abstraction reduces control over low-level details.

Verification time can be reduced through automation.

Given the amount of effort demanded by verification, the shortage of qualified hardware design and verification engineers, and the quantity of code that must be produced, it is no surprise that, in all projects, verification rests squarely on the critical path. The fact that verification is often considered after the design has been completed, when the schedule has already been ruined, compounds the problem. It is also the reason verification is currently the target of new tools and methodologies. These tools and methodologies attempt to reduce the overall verification time by enabling parallelism of effort, higher abstraction levels and automation.

If efforts can be parallelized, additional resources can be applied effectively to reduce the total verification time. For example, digging a hole in the ground can be parallelized by providing more workers armed with shovels. To parallelize the verification effort, it is necessary to be able to write (and debug) testbenches in parallel with each other as well as in parallel with the implementation of the design.

Providing higher abstraction levels enables you to work more efficiently without worrying about low-level details. Using a backhoe to dig the same hole mentioned above is an example of using a higher abstraction level.

Higher abstraction levels are usually accompanied by a reduction in control and therefore must be chosen wisely. These higher abstraction levels often require additional training to understand the abstraction mechanism and how the desired effect can be produced. Using a backhoe to dig a hole suffers from the same loss-of-control problem: the worker is no longer directly interacting with the dirt; instead the worker is manipulating levers and pedals. Digging happens much faster, but with lower precision and only by a trained operator. The verification process can use higher abstraction levels by working at the transaction- or bus-cycle levels (or even higher ones), instead of always dealing with low-level zeroes and ones.
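A minimal Python sketch of the transaction-level idea, assuming a hypothetical synchronous bus with `sel`, `wr`, `addr` and `wdata` pins, on which a write occupies one access cycle followed by one idle cycle. The test writer describes `BusWrite` transactions; a small bus-functional model (`expand_write`, also hypothetical) turns each one into per-cycle pin values.

```python
from dataclasses import dataclass
from typing import Dict, List

Pins = Dict[str, int]  # pin name -> value driven during one clock cycle

@dataclass(frozen=True)
class BusWrite:
    """Transaction-level view of one write: what happens, not how."""
    addr: int
    data: int

def expand_write(txn: BusWrite) -> List[Pins]:
    """Bus-functional model for the hypothetical bus: one access cycle with
    sel/wr asserted, then one idle cycle."""
    access = {"sel": 1, "wr": 1, "addr": txn.addr, "wdata": txn.data}
    idle = {"sel": 0, "wr": 0, "addr": 0, "wdata": 0}
    return [access, idle]

def expand_sequence(txns: List[BusWrite]) -> List[Pins]:
    """Concatenate the pin-level cycles of a whole transaction sequence."""
    cycles: List[Pins] = []
    for txn in txns:
        cycles.extend(expand_write(txn))
    return cycles

if __name__ == "__main__":
    # The test is written as two transactions...
    program = [BusWrite(addr=0x10, data=0xAB), BusWrite(addr=0x14, data=0xCD)]
    # ...and the bus-functional model supplies the zeroes and ones.
    for cycle, pins in enumerate(expand_sequence(program)):
        print(f"cycle {cycle}: {pins}")
```

The loss of control shows up as soon as a test needs something the transaction level does not express, such as back-to-back accesses without the idle cycle: the writer must then drop back to the pin level or extend the bus-functional model.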

Automation lets you do something else while a machine completes a task autonomously, faster and with predictable results. Automation requires standard processes with well-defined inputs and outputs. Not all processes can be automated. For example, holes must be dug in a variety of shapes, sizes, depths, locations and in varying soil conditions, which render general-purpose automation impossible.

Randomization can be used as an automation tool.

Verification faces similar challenges. Because of the variety of functions, interfaces, protocols and transformations that must be verified, it is not possible to provide a general-purpose automation solution for verification, given today's technology. It is possible to automate some portion of the verification process, especially when applied to a narrow application domain. For example, trenchers have automated digging holes used to lay down conduits or cables at shallow depths. Tools automating various portions of the verification process are being introduced. For example, there are tools that will automatically generate bus-functional models from a higher-level abstract specification.

For specific domains, automation can be emulated using randomization. By constraining a random generator to produce valid inputs within the bounds of a particular domain, it is possible to automatically produce almost all of the interesting conditions. For example, the tedious process of vacuuming the bottom of a pool can be automated using a broom head that, constrained by the vertical walls, randomly moves along the bottom. After a few hours, only the corners and a few small spots remain to be cleaned manually. This type of automation process takes more computation time to achieve the same result, but it is completely autonomous, freeing valuable resources to work on other critical tasks. Furthermore, this process can be parallelized¹ easily by concurrently running several random generators. They can also operate overnight, increasing the total number of productive hours.

1. Optimizing these concurrent processes to reduce the amount of overlap is another question!
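A Python sketch of constrained randomization, assuming a hypothetical frame-based interface whose legal inputs are lengths of 64 to 1518 bytes, an optional VLAN tag, and 4-byte-aligned 32-bit addresses. Every generated frame is legal by construction, coverage is recorded as a set of (length bin, VLAN) pairs, and several seeds stand in for generators that could run concurrently overnight. Like the broom in the pool, uniform randomness rarely reaches the corners: a length of exactly 64 or exactly 1518 bytes has a 1-in-1455 chance per frame, so those bins may still need directed tests.

```python
import random
from dataclasses import dataclass
from typing import Set, Tuple

@dataclass(frozen=True)
class Frame:
    length: int  # bytes, constrained to 64..1518
    vlan: bool   # whether a VLAN tag is present
    addr: int    # 32-bit address, constrained to a multiple of 4

def random_frame(rng: random.Random) -> Frame:
    """Constrained random generator: only legal frames are produced."""
    return Frame(
        length=rng.randint(64, 1518),
        vlan=rng.random() < 0.25,
        addr=rng.randrange(0, 2**32, 4),
    )

def length_bin(frame: Frame) -> str:
    """Classify the length into coverage bins, with the boundaries as corners."""
    if frame.length == 64:
        return "min"
    if frame.length == 1518:
        return "max"
    return "small" if frame.length < 512 else "large"

Bin = Tuple[str, bool]
ALL_BINS: Set[Bin] = {(b, v) for b in ("min", "small", "large", "max") for v in (False, True)}

def run_generator(seed: int, count: int) -> Set[Bin]:
    """One independent generator: returns the coverage bins its frames hit."""
    rng = random.Random(seed)
    return {(length_bin(f), f.vlan) for f in (random_frame(rng) for _ in range(count))}

if __name__ == "__main__":
    # Each seed could run as a separate simulation; here they run one after
    # another and their coverage sets are merged.
    merged: Set[Bin] = set()
    for seed in range(4):
        merged |= run_generator(seed, 1000)
    print("hit", len(merged), "of", len(ALL_BINS), "bins; missing:", sorted(ALL_BINS - merged))
```

Because each generator is driven only by its seed, a failing run can be reproduced exactly by rerunning that seed.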


RECONVERGENCE MODEL

Do you know what you are actually verifying?

The reconvergence model is a conceptual representation of the verification process. It is used to illustrate what exactly is being verified.

One of the most important questions you must be able to answer is: What are you verifying? The purpose of verification is to ensure that the result of some transformation is as intended or as expected. For example, the purpose of balancing a checkbook is to ensure that all transactions have been recorded accurately and confirm that the balance in the register reflects the amount of available funds.

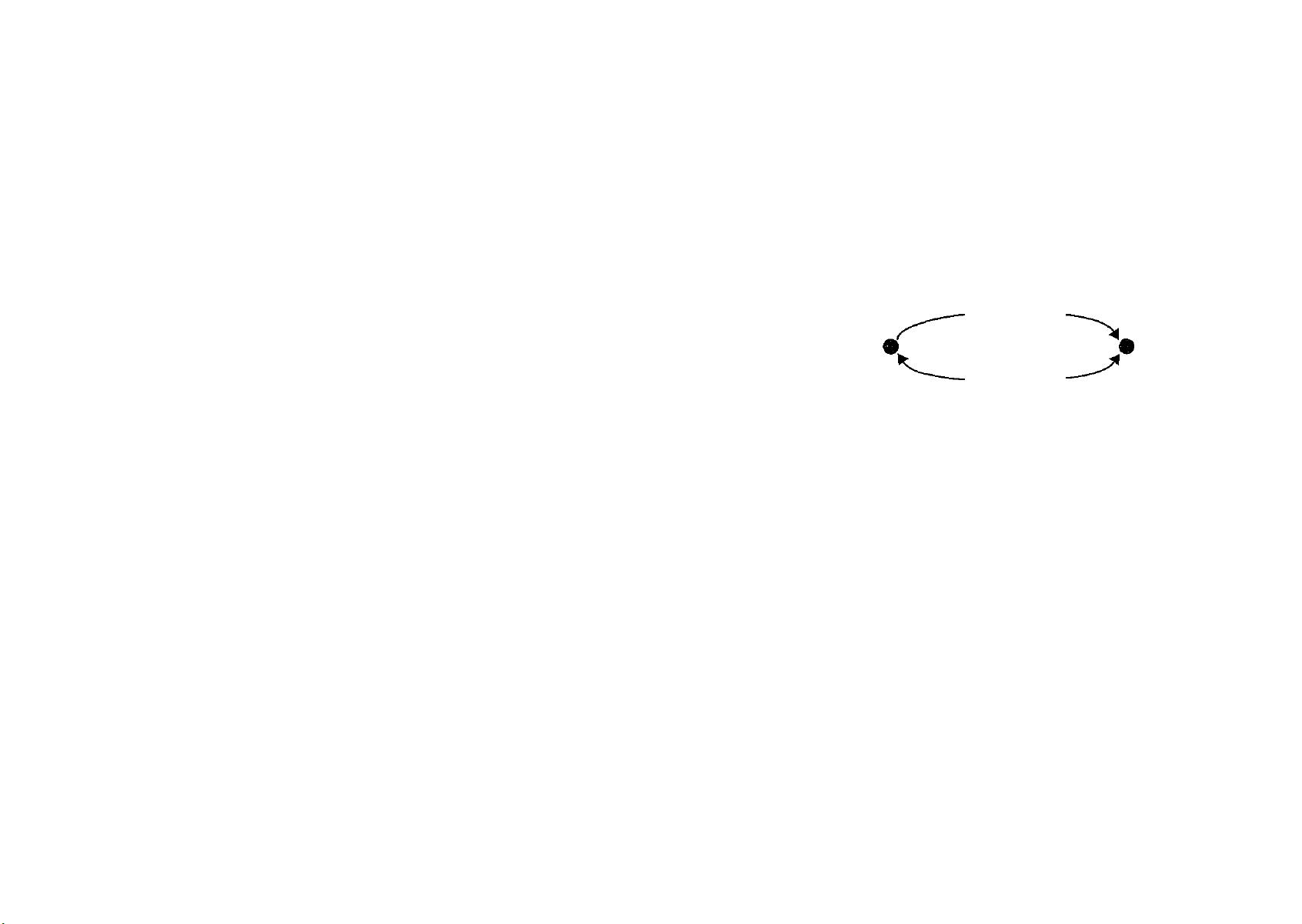

Figure 1-2.

Reconvergent

paths in

verification

Transformation

Verification

Verification is the reconciliation, through different means, of a specification and an output.

Figure 1-2 shows that verification of a transformation can be accomplished only through a second reconvergent path with a common source. The transformation can be any process that takes an input and produces an output. RTL coding from a specification, insertion of a scan chain, synthesizing RTL code into a gate-level netlist and layout of a gate-level netlist are some of the transformations performed in a hardware design project. The verification process reconciles the result with the starting point. If there is no starting point common to the transformation and the verification, no verification takes place.
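A small Python illustration of the two reconvergent paths, using a transformation chosen only for the example: binary-to-Gray-code conversion. The second path is an independently written Gray-to-binary decoder, and both paths start from the same common origin, every binary value of a given width. Using the inverse function as the verification path is an assumption of this sketch; any independent means of getting back to the common origin would serve.

```python
from typing import List

def to_gray(n: int) -> int:
    """Transformation being verified: binary to reflected Gray code."""
    return n ^ (n >> 1)

def from_gray(g: int) -> int:
    """Independent path back to the origin: XOR of all right shifts of g."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n

def reconcile(width: int) -> List[int]:
    """Apply both paths to every value of the common origin and return the
    values for which the two paths do not reconverge."""
    return [n for n in range(2 ** width) if from_gray(to_gray(n)) != n]

if __name__ == "__main__":
    print("non-reconverging values:", reconcile(8))
```

If the second path had no access to the original values, that is, no common origin, there would be nothing to reconcile the Gray-coded output against.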

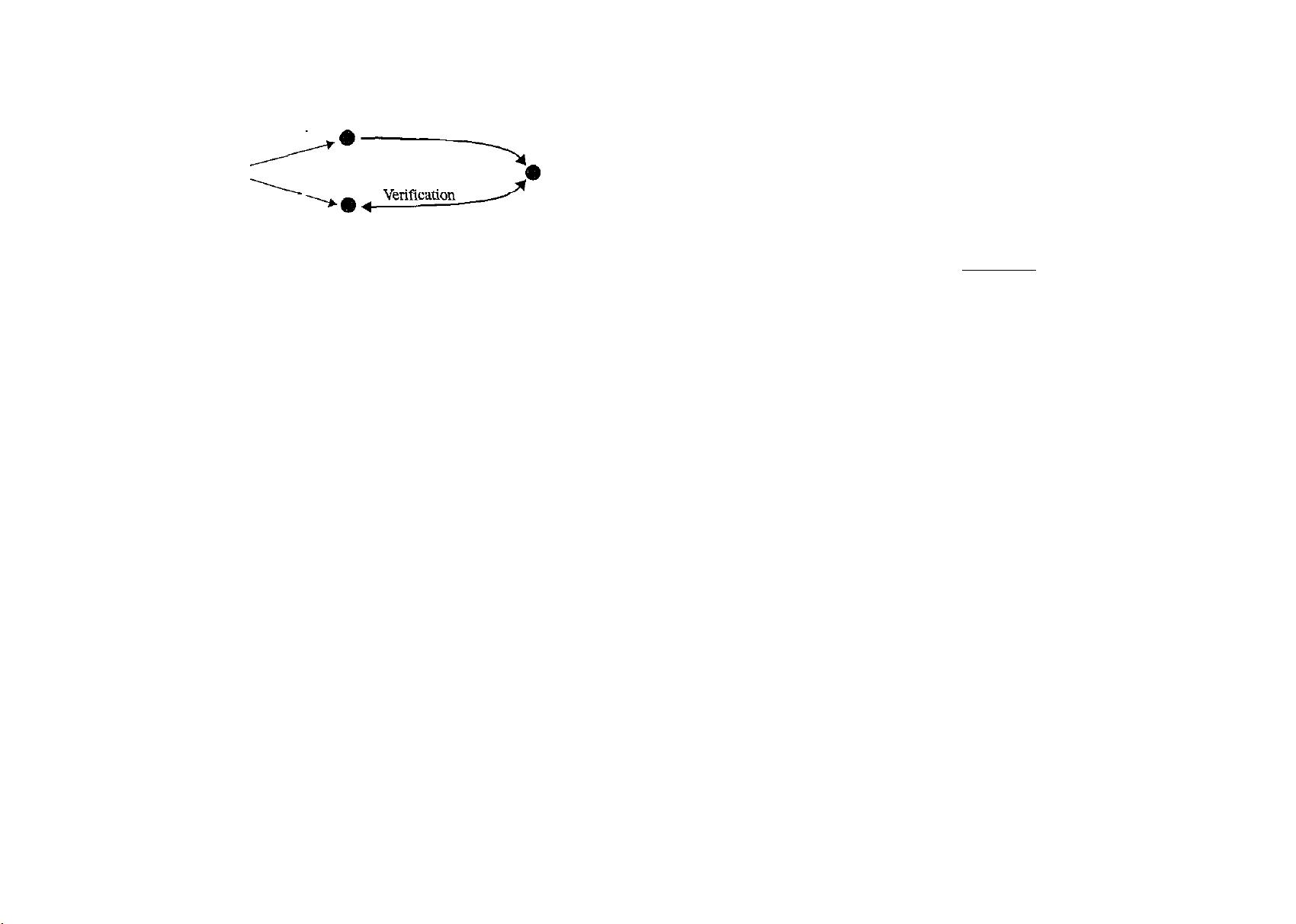

The reconvergence model can be described using the checkbook example as illustrated in Figure 1-3. The common origin is the previous month's balance in the checking account. The transformation is the writing, recording and debiting of several checks during a one-month period. The verification reconciles the final balance in the checkbook register using this month's bank statement.

Figure 1-3. Balancing a checkbook is a verification process (labels: Balance from last month's statement; Recording Checks; Reconciliation; Balance from latest statement)
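The checkbook case can be sketched the same way in Python. The assumptions are illustrative: amounts are in integer cents, checks are keyed by check number, and every check written this month has cleared, so there are no outstanding items to account for. The register is the transformation path from last month's balance; the bank statement is the independent path that reconverges with it.

```python
from typing import Dict, List

Checks = Dict[int, int]  # check number -> amount in cents

def register_balance(opening: int, register: Checks) -> int:
    """Transformation path: start from last month's statement balance and
    debit every check recorded in the register."""
    return opening - sum(register.values())

def reconcile(opening: int, register: Checks, statement: Checks, closing: int) -> List[str]:
    """Verification path: compare the register against this month's statement."""
    problems = []
    for number in sorted(register.keys() | statement.keys()):
        mine, bank = register.get(number), statement.get(number)
        if mine != bank:
            problems.append(f"check {number}: register {mine}, statement {bank}")
    if register_balance(opening, register) != closing:
        problems.append("closing balances differ")
    return problems

if __name__ == "__main__":
    opening = 100_000                     # $1,000.00 carried over from last month
    register = {101: 2_500, 102: 4_999}   # check 102 recorded as $49.99
    statement = {101: 2_500, 102: 4_990}  # the bank debited $49.90
    closing = opening - sum(statement.values())
    for line in reconcile(opening, register, statement, closing):
        print(line)
```

Run as a script, this reports the disagreement on check 102 and the resulting difference in closing balances.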

THE HUMAN FACTOR

If the transformation process is not completely automated from end to end, it is necessary for an individual (or group of individuals) to interpret a specification of the desired outcome and then perform the transformation. RTL coding is an example of this situation. A design team interprets a written specification document and produces what they believe to be functionally correct synthesizable HDL code. Usually, each engineer is left to verify that the code written is indeed functionally correct.

Figure 1-4. Reconvergent paths in an ambiguous situation (labels: Specification; Interpretation; RTL coding)

Verifying your own design verifies against your interpretation, not against the specification.

Figure 1-4 shows the reconvergent path model of the situation described above. If the same individual performs the verification of the RTL coding that initially required interpretation of a specification, then the common origin is that interpretation, not the specification.

In this situation, the verification effort verifies whether the design accurately represents the implementer's interpretation of that specification. If that interpretation is wrong in any way, then this verification activity will never highlight it.

Any human intervention in a process is a source of uncertainty and unrepeatability. The probability of human-introduced errors in a process can be reduced through several complementary mechanisms: automation, poka-yoke or redundancy.

Automation

Eliminate human intervention.

Automation is the obvious way to eliminate human-introduced errors in a process. Automation takes human intervention completely out of the process. However, automation is not always possible, especially in processes that are not well-defined and continue to require human ingenuity and creativity, such as hardware design.

Poka-Yoke

Make human intervention foolproof.

Another possibility is to mistake-proof the human intervention by reducing it to simple, foolproof steps. Human intervention is needed only to decide on the particular sequence or steps required to obtain the desired results. This mechanism is also known as poka-yoke in Total Quality Management circles. It is usually the last step toward complete automation of a process. However, just like automation, it requires a well-defined process with standard transformation steps. The verification process remains an art that, to this day, does not lend itself to well-defined steps.

Redundancy

Have two individuals check each other's work.

The final alternative to removing human errors is redundancy. It is the simplest, but also the most costly mechanism. Redundancy requires every transformation resource to be duplicated. Every transformation accomplished by a human is either independently verified by another individual, or two complete and separate transformations are performed with each outcome compared to verify that both produced the same or equivalent output. This mechanism is used in high-reliability environments, such as airborne and space systems. It is also used in industries where later redesign and replacement of a defective product would be more costly than the redundancy itself, such as ASIC design.

Figure 1-5. Redundancy in an ambiguous situation enables accurate verification (labels: Specification; Interpretation; Interpretation; RTL coding)

A different person should be in charge of verification.

Figure 1-5 shows the reconvergent paths model where redundancy is used to guard against misinterpretation of an ambiguous specification document. When used in the context of hardware design, where the transformation process is writing RTL code from a written specification document, this mechanism implies that a different individual must be in charge of the verification.

WHAT IS BEING VERIFIED?

Choosing the common origin and reconvergence points determines what is being verified. These origin and reconvergence points are often determined by the tool used to perform the verification. It is important to understand where these points lie to know which transformation is being verified. Formal verification, model checking, functional verification, and rule checkers verify different things because they have different origin and reconvergence points.

Formal Verification

Formal verification does not eliminate the need to write testbenches.

Formal verification is often misunderstood initially. Engineers unfamiliar with the formal verification process often imagine that it is a tool that mathematically determines whether their design is correct, without having to write testbenches. Once you understand what the end points of the formal verification reconvergent paths are, you know exactly what is being verified.

The application of formal verification falls under two broad categories: equivalence checking and model checking.


Equivalence Checking

Equivalence checking compares two models.

Figure 1-6 shows the reconvergent path model for equivalence checking. This formal verification process mathematically proves that the origin and output are logically equivalent and that the transformation preserved its functionality.

Figure 1-6. Equivalence checking paths (labels: RTL or Netlist; Synthesis; Equivalence Checking; RTL or Netlist)

It can compare two netlists.

It can detect bugs in the synthesis software.

In its most common use, equivalence checking compares two netlists to ensure that some netlist post-processing, such as scan-chain insertion, clock-tree synthesis or manual modification², did not change the functionality of the circuit.

Another popular use of equivalence checking is to verify that the netlist correctly implements the original RTL code. If one trusted the synthesis tool completely, this verification would not be necessary. However, synthesis tools are large software systems that depend on the correctness of algorithms and library information. History has shown that such systems are prone to error. Equivalence checking is used to keep the synthesis tool honest. In some rare instances, this form of equivalence checking is used to verify that manually written RTL code faithfully represents a legacy gate-level design.

Less frequently, equivalence checking is used to verify that two RTL descriptions are logically identical, sometimes to avoid running lengthy regression simulations when only minor non-functional changes are made to the source code to obtain better synthesis results, or when a design is translated from one HDL to another.

2. Text editors remain the greatest design tools!
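A brute-force Python sketch of the comparison an equivalence checker performs, on a hypothetical 4-bit adder: an RTL-style model that simply uses `+`, and a gate-level netlist model built from XOR, AND and OR gates as a ripple-carry chain. For small input widths, enumerating every input combination is itself a complete proof of equivalence; production equivalence checkers reach the same kind of conclusion for real designs with symbolic techniques such as BDDs and SAT solving rather than enumeration.

```python
from itertools import product
from typing import Callable, Optional, Tuple

WIDTH = 4  # operand width in bits; kept tiny so enumeration is feasible
Adder = Callable[[int, int], int]

def rtl_add(a: int, b: int) -> int:
    """RTL-style model: behavioral addition producing WIDTH + 1 bits."""
    return a + b

def full_adder(x: int, y: int, cin: int) -> Tuple[int, int]:
    """One full-adder cell built from XOR, AND and OR gates."""
    s = x ^ y ^ cin
    cout = (x & y) | (cin & (x ^ y))
    return s, cout

def netlist_add(a: int, b: int) -> int:
    """Gate-level model: a ripple-carry chain of WIDTH full-adder cells."""
    carry, result = 0, 0
    for i in range(WIDTH):
        s, carry = full_adder((a >> i) & 1, (b >> i) & 1, carry)
        result |= s << i
    return result | (carry << WIDTH)

def check_equivalence(f: Adder, g: Adder) -> Optional[Tuple[int, int]]:
    """Compare f and g on every input pair; return the first counterexample,
    or None if the two models agree everywhere."""
    for a, b in product(range(2 ** WIDTH), repeat=2):
        if f(a, b) != g(a, b):
            return a, b
    return None

if __name__ == "__main__":
    cex = check_equivalence(rtl_add, netlist_add)
    print("equivalent" if cex is None else f"counterexample: a={cex[0]}, b={cex[1]}")
```

Enumeration grows as 2^(2·WIDTH) input pairs, so this approach only works for toy widths.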


Equivalence checking found a bug in an arithmetic operator.

Equivalence checking is a true alternative path to the logic synthesis transformation being verified. It is only interested in comparing Boolean and sequential logic functions, not in mapping these functions to a specific technology while meeting stringent design constraints. Engineers using equivalence checking found that a design at Digital Equipment Corporation (now part of HP) had been synthesized incorrectly. The design used a synthetic operator that was functionally incorrect when handling more than 48 bits. In the synthesis tool's defense, the documentation of the operator clearly stated that correctness was not guaranteed above 48 bits. Since the synthesis tool had no knowledge of the documentation, it could not know it was generating invalid logic. Equivalence checking quickly identified a problem that could have been very difficult to detect using gate-level simulation.

Model Checking

Model checking proves assertions about the behavior of the design.

Model checking is a more recent application of formal verification technology. In it, assertions or characteristics of a design are formally proven or disproved. For example, all state machines in a design could be checked for unreachable or isolated states. A more powerful model checker may be able to determine if deadlock conditions can occur.
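Explicit-state exploration gives the flavor of the unreachable-state check. The sketch below assumes a hypothetical state machine given as a Python transition table (each state maps to the set of next states possible over all inputs). It searches breadth-first from the reset state, reports states that can never be reached, and flags reachable states with no way out, a simple lockup condition. Production model checkers typically rely on symbolic or other state-space-reduction techniques to handle far larger designs, so this illustrates the question being asked rather than how the tools answer it.

```python
from collections import deque
from typing import Dict, Set, Tuple

Fsm = Dict[str, Set[str]]  # state -> every next state reachable in one clock

def explore(fsm: Fsm, reset: str) -> Tuple[Set[str], Set[str]]:
    """Return (unreachable states, reachable states that can never leave)."""
    reached = {reset}
    frontier = deque([reset])
    while frontier:  # breadth-first search from the reset state
        state = frontier.popleft()
        for nxt in fsm.get(state, set()):
            if nxt not in reached:
                reached.add(nxt)
                frontier.append(nxt)
    unreachable = set(fsm) - reached
    stuck = {s for s in reached if fsm.get(s, set()) <= {s}}
    return unreachable, stuck

if __name__ == "__main__":
    # Hypothetical bus-controller state machine.
    controller: Fsm = {
        "IDLE": {"IDLE", "REQ"},
        "REQ": {"REQ", "GRANT"},
        "GRANT": {"IDLE", "ERROR"},
        "ERROR": {"ERROR"},  # no way back out once entered
        "DEBUG": {"IDLE"},   # no transition ever enters this state
    }
    unreachable, stuck = explore(controller, "IDLE")
    print("unreachable:", sorted(unreachable), "stuck:", sorted(stuck))
```

For the table above, `DEBUG` is reported as unreachable and `ERROR` as a state the machine can never leave.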

Another type of assertion that can be formally verified relates to interfaces. Using a formal description language, assertions about the interfaces of a design are stated and the tool attempts to prove or disprove them. For example, an assertion might state that, whenever signal ALE is asserted, either the DTACK or the ABORT signal will eventually be asserted.
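The ALE assertion can at least be checked against a simulation trace, which is a different and weaker activity than proving it formally: a trace can only show that the property held, or failed, on the cycles that were simulated. The sketch below assumes a trace given as a list of per-cycle samples of the three signals (the dictionary representation and the `sample` helper are assumptions) and treats "eventually" as "on this cycle or any later cycle of the trace".

```python
from typing import Dict, List

Sample = Dict[str, int]  # signal name -> value sampled at one clock edge

def sample(ale: int, dtack: int, abort: int) -> Sample:
    """Hypothetical helper building one per-cycle sample of the three signals."""
    return {"ALE": ale, "DTACK": dtack, "ABORT": abort}

def check_ale_response(trace: List[Sample]) -> List[int]:
    """Return cycles where ALE was asserted but neither DTACK nor ABORT was
    asserted on that cycle or on any later cycle of the trace."""
    failures = []
    for cycle, s in enumerate(trace):
        if s["ALE"]:
            answered = any(t["DTACK"] or t["ABORT"] for t in trace[cycle:])
            if not answered:
                failures.append(cycle)
    return failures

if __name__ == "__main__":
    trace = [
        sample(1, 0, 0), sample(0, 0, 0), sample(0, 1, 0),  # ALE at 0, DTACK at 2
        sample(1, 0, 0), sample(0, 0, 0),                   # ALE at 3, no response
    ]
    print("unanswered ALE at cycles:", check_ale_response(trace))
```

This checks the property exactly as stated, so a single DTACK satisfies every earlier ALE; pairing each ALE with its own response would be a stronger, different assertion. A failure near the end of a trace may also mean only that the simulation stopped too early.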

Figure 1-7. Model checking paths (labels: Specification; Interpretation; Assertions; RTL Coding; RTL; Model Checking)


Knowing which assertions to prove and expressing them correctly is the most difficult part.

The reconvergent path model for model checking is shown in Figure 1-7. The greatest obstacle for model checking technology is identifying, through interpretation of the design specification, which assertions to prove. Of those assertions, only a subset can be proven feasibly. Current technology cannot prove high-level assertions about a design to ensure that complex functionality is correctly implemented. It would be nice to be able to assert that, given specific register settings, a set of asynchronous transfer mode (ATM) cells will end up at a set of outputs in some relative order. Unfortunately, model checking technology is not at that level yet.

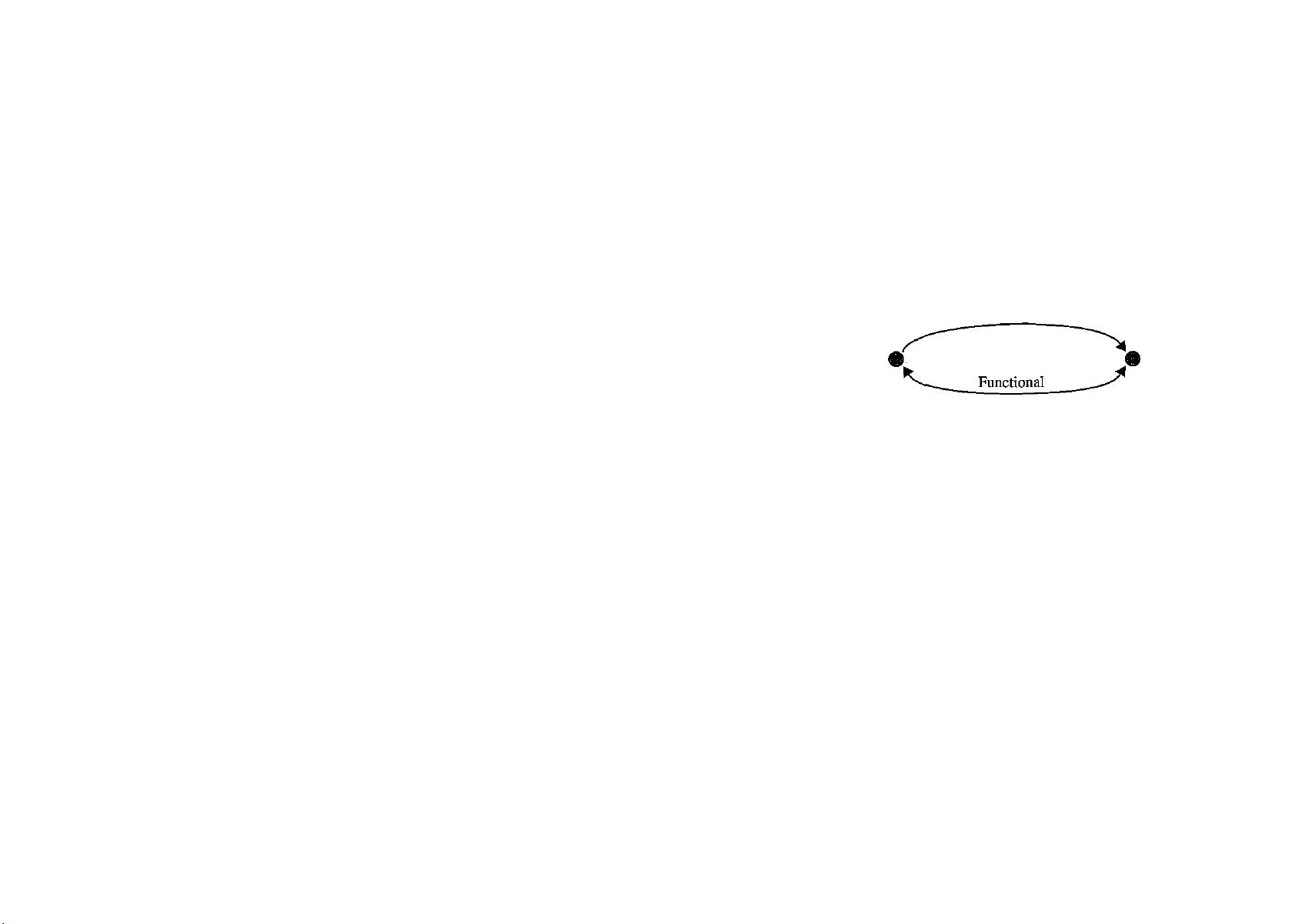

Functional Verification

Figure 1-8. Functional verification paths (labels: Specification; RTL Coding; RTL; Verification)

Functional verification verifies design intent.

You can prove the presence of bugs, but you cannot prove their absence.

The main purpose of functional verification is to ensure that a design implements intended functionality. As shown by the reconvergent path model in Figure 1-8, functional verification reconciles a design with its specification. Without functional verification, one must trust that the transformation of a specification document into RTL code was performed correctly, without misinterpretation of the specification's intent.

It is important to note that, unless a specification is written in a formal language with precise semantics³, it is impossible to prove that a design meets the intent of its specification. Specification documents are written using natural languages by individuals with varying degrees of ability in communicating their intentions. Any document is open to interpretation. Functional verification, as a process, can show that a design meets the intent of its specification, but it cannot prove it. One can easily prove that the design does not implement the intended function by identifying a single discrepancy.

3. Even if such a language existed, one would eventually have to show that this description is indeed an accurate description of the design intent, based on some higher-level ambiguous specification.
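A toy Python illustration of that asymmetry, using a hypothetical 8-bit saturating adder whose specification is `min(a + b, 255)`. The design model contains a deliberate off-by-one error in its saturation test. A handful of directed tests all pass, which proves nothing about the absence of bugs; one counterexample is enough to prove the design wrong. Exhaustive search is only possible here because the input space is tiny (65,536 pairs).

```python
from typing import List, Optional, Tuple

def spec(a: int, b: int) -> int:
    """Specification: 8-bit saturating addition."""
    return min(a + b, 255)

def design(a: int, b: int) -> int:
    """Hypothetical design with an off-by-one bug: it should saturate when
    a + b > 255 but tests a + b > 256, so a + b == 256 wraps to 0."""
    return 255 if a + b > 256 else (a + b) & 0xFF

DIRECTED: List[Tuple[int, int]] = [(0, 0), (1, 2), (100, 100), (200, 100), (255, 255)]

def first_discrepancy() -> Optional[Tuple[int, int]]:
    """Search the whole input space for one input where design and spec differ."""
    for a in range(256):
        for b in range(256):
            if design(a, b) != spec(a, b):
                return a, b
    return None

if __name__ == "__main__":
    passed = all(design(a, b) == spec(a, b) for a, b in DIRECTED)
    print("directed tests pass:", passed)            # True
    print("first discrepancy:", first_discrepancy())  # (1, 255)
```

For a realistic design the input space cannot be enumerated, which is exactly why passing tests can show, but never prove, that a design meets its intent.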
