to generate high resolution 1024 × 1024 images; we believe Imagen is much simpler, as Imagen

does not need to learn a latent prior, yet achieves better results in both MS-COCO FID and human

evaluation on DrawBench. GLIDE [41] also uses cascaded diffusion models for text-to-image, but

we use large pretrained frozen language models, which we found to be instrumental to both image

fidelity and image-text alignment. XMC-GAN [81] also uses BERT as a text encoder, but we scale to

much larger text encoders and demonstrate the effectiveness thereof. The use of cascaded models is

also popular throughout the literature [14, 39] and has been used with success in diffusion models to

generate high resolution images [16, 29].

6 Conclusions, Limitations and Societal Impact

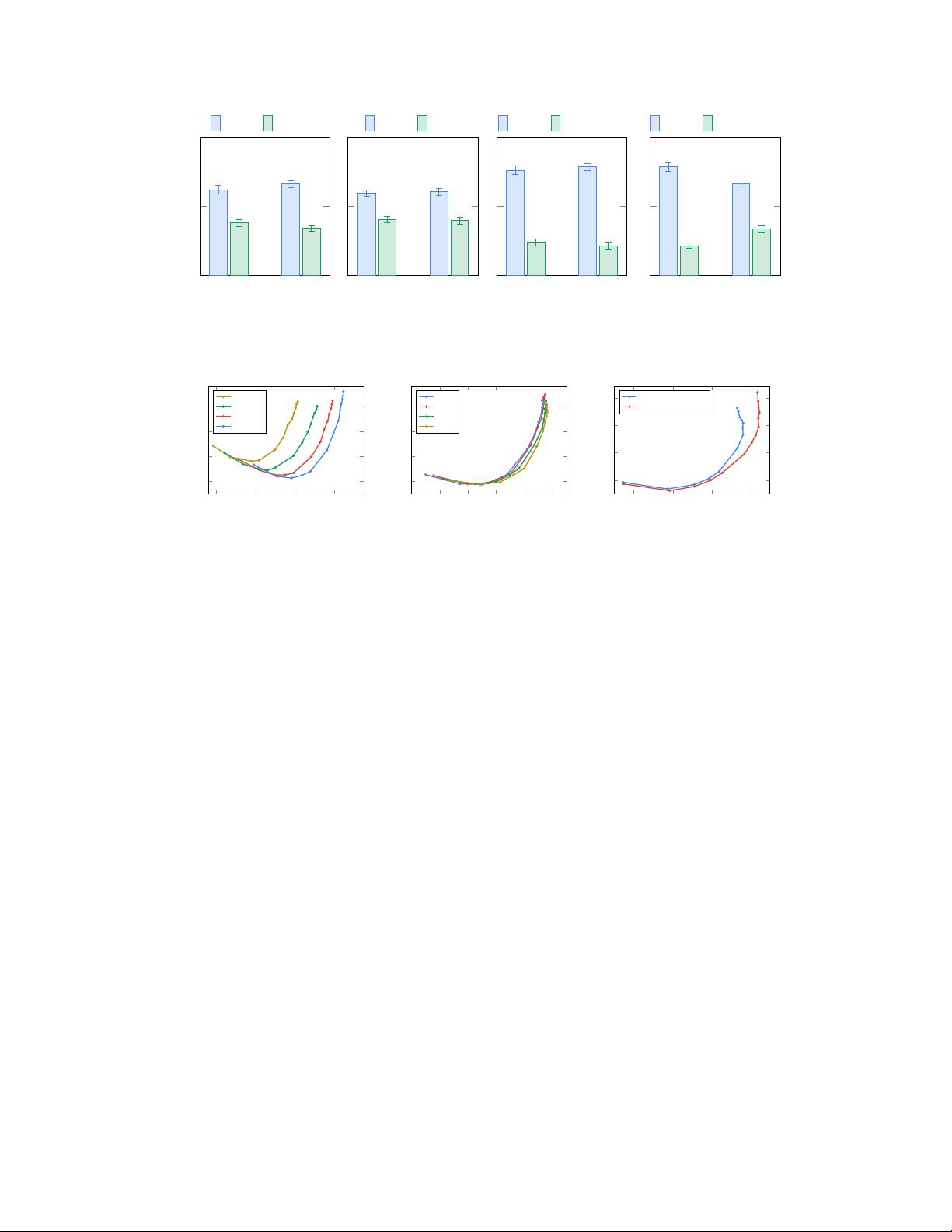

Imagen showcases the effectiveness of frozen large pretrained language models as text encoders for

text-to-image generation using diffusion models. Our observation that scaling the size of these

language models has significantly more impact than scaling the U-Net size on overall performance

encourages future research directions on exploring even bigger language models as text encoders.

Furthermore, through Imagen we re-emphasize the importance of classifier-free guidance, and we

introduce dynamic thresholding, which allows usage of much higher guidance weights than seen

in previous works. With these novel components, Imagen produces 1024 × 1024 samples with

unprecedented photorealism and alignment with text.
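The two sampling components named above can be illustrated with a minimal sketch. It is not the authors' implementation: it operates on flat Python lists rather than image tensors, the function names are hypothetical, and the default percentile and the nearest-rank percentile rule are assumptions chosen for illustration. Classifier-free guidance combines conditional and unconditional noise predictions with a guidance weight w, and dynamic thresholding clips the predicted image to [-s, s] and divides by s, where s is a percentile of the absolute pixel values and is never allowed to fall below 1.

```python
import math


def classifier_free_guidance(eps_cond, eps_uncond, w):
    """Blend noise predictions: w * conditional + (1 - w) * unconditional.

    w = 1 recovers the conditional prediction; w > 1 strengthens guidance.
    """
    return [w * c + (1.0 - w) * u for c, u in zip(eps_cond, eps_uncond)]


def dynamic_threshold(x0_pred, percentile=0.995):
    """Clip a predicted image to [-s, s], then rescale it by s.

    s is a percentile of the absolute pixel values, floored at 1.0 so that
    predictions already inside [-1, 1] are left unchanged. The default
    percentile and the nearest-rank rule are assumptions of this sketch.
    """
    abs_sorted = sorted(abs(v) for v in x0_pred)
    rank = max(1, math.ceil(percentile * len(abs_sorted)))
    s = max(1.0, abs_sorted[rank - 1])
    return [max(-s, min(s, v)) / s for v in x0_pred]


if __name__ == "__main__":
    guided = classifier_free_guidance([1.0, 0.5], [0.0, 0.5], w=3.0)
    print(guided)
    print(dynamic_threshold([0.5, -2.0, 4.0, 1.0], percentile=0.75))
```

In this sketch, large guidance weights push predicted pixel values well outside [-1, 1]; rescaling by s pulls saturated pixels back inward instead of hard-clipping them at the boundary, which is the intuition behind permitting higher guidance weights.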

Our primary aim with Imagen is to advance research on generative methods, using text-to-image

synthesis as a test bed. While end-user applications of generative methods remain largely out of

scope, we recognize the potential downstream applications of this research are varied and may impact

society in complex ways. On the one hand, generative models have a great potential to complement,

extend, and augment human creativity [30]. Text-to-image generation models, in particular, have

the potential to extend image-editing capabilities and lead to the development of new tools for

creative practitioners. On the other hand, generative methods can be leveraged for malicious purposes,

including harassment and misinformation spread [20], and raise many concerns regarding social and

cultural exclusion and bias [67, 62, 68]. These considerations inform our decision not to release

code or a public demo. In future work we will explore a framework for responsible externalization

that balances the value of external auditing with the risks of unrestricted open-access.

Another ethical challenge relates to the large-scale data requirements of text-to-image models, which

have led researchers to rely heavily on large, mostly uncurated, web-scraped datasets. While this

approach has enabled rapid algorithmic advances in recent years, datasets of this nature have been

critiqued and contested along various ethical dimensions. For example, public and academic discourse

regarding appropriate use of public data has raised concerns regarding data subject awareness and

consent [24, 18, 60, 43]. Dataset audits have revealed these datasets tend to reflect social stereotypes,

oppressive viewpoints, and derogatory, or otherwise harmful, associations to marginalized identity

groups [44, 4]. Training text-to-image models on this data risks reproducing these associations

and causing significant representational harm that would disproportionately impact individuals and

communities already experiencing marginalization, discrimination and exclusion within society. As

such, there are a multitude of data challenges that must be addressed before text-to-image models like

Imagen can be safely integrated into user-facing applications. While we do not directly address these

challenges in this work, an awareness of the limitations of our training data guides our decision not to

release Imagen for public use. We strongly caution against the use of text-to-image generation methods

for any user-facing tools without close care and attention to the contents of the training dataset.

Imagen’s training data was drawn from several pre-existing datasets of image and English alt-text pairs.

A subset of this data was filtered to remove noise and undesirable content, such as pornographic

imagery and toxic language. However, a recent audit of one of our data sources, LAION-400M [61],

uncovered a wide range of inappropriate content including pornographic imagery, racist slurs, and

harmful social stereotypes [4]. This finding informs our assessment that Imagen is not suitable for

public use at this time and also demonstrates the value of rigorous dataset audits and comprehensive

dataset documentation (e.g. [23, 45]) in informing consequent decisions about the model’s appropriate

and safe use. Imagen also relies on text encoders trained on uncurated web-scale data, and thus

inherits the social biases and limitations of large language models [5, 3, 50].

While we leave an in-depth empirical analysis of social and cultural biases encoded by Imagen to

future work, our small-scale internal assessments reveal several limitations that guide our decision

not to release Imagen at this time. First, all generative models, including Imagen, may

run the risk of dropping modes of the data distribution, which may further compound the social
