An Introduction to Digital Image Processing

Bill Silver

Chief Technology Officer

Cognex Corporation, Modular Vision Systems Division

Digital image processing allows one to enhance image features of interest while attenuating detail irrelevant to a given application, and then extract useful information about the scene from the enhanced image. This introduction is a practical guide to the challenges, and to the hardware and algorithms used to meet them.

mages are produced by a variety of

physical devices, including still

and video cameras, x-ray devices,

electron microscopes, radar, and

ultrasound, and used for a variety of

purposes, including entertainment,

medical, business (e.g. documents),

industrial, military, civil (e.g. traffic),

security, and scientific. The goal in

each case is for an observer, human or

machine, to extract useful information

about the scene being imaged. An

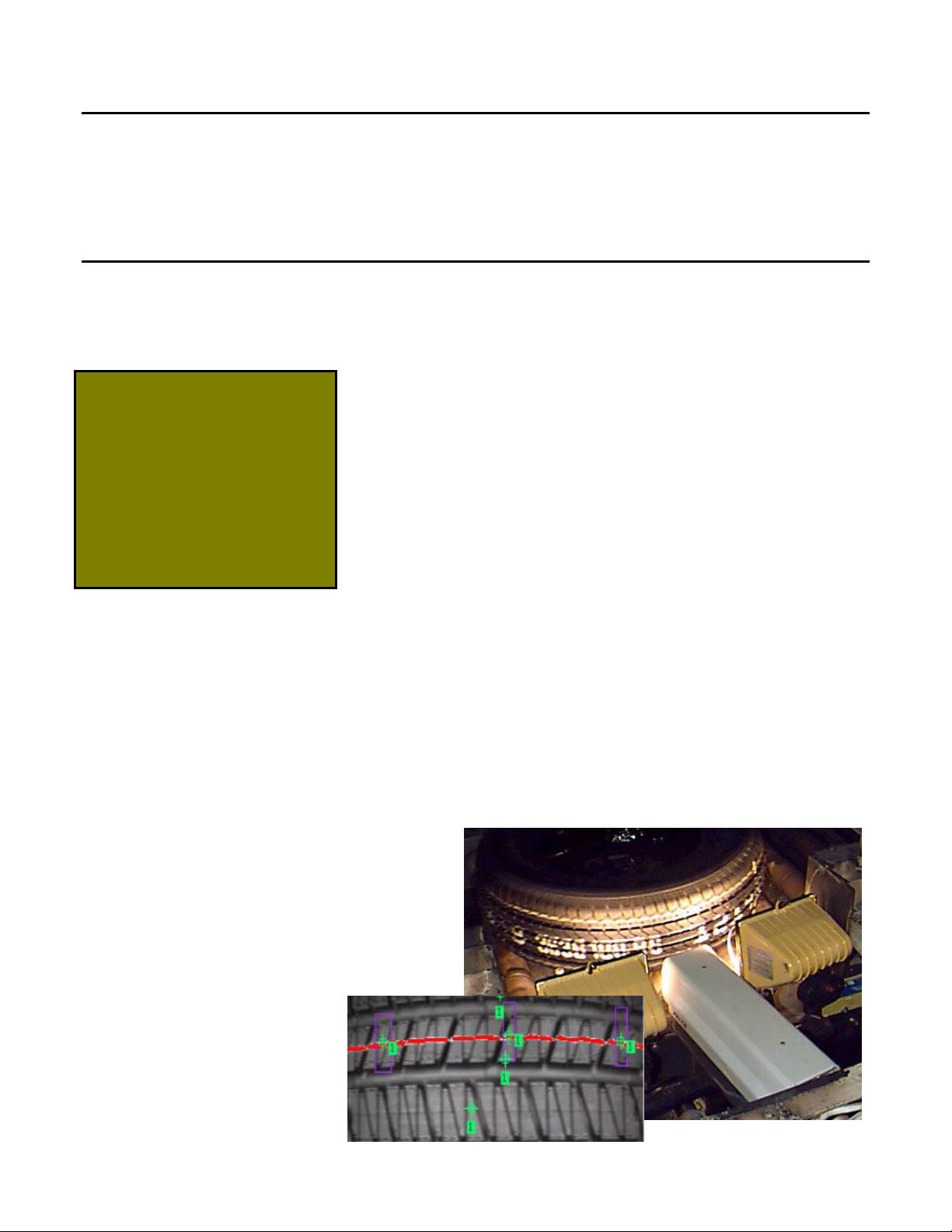

example of an industrial application is

shown in figure 1.

Often the raw image is not directly suitable for this purpose, and must be processed in some way. Such processing is called image enhancement; processing by an observer to extract information is called image analysis. Enhancement and analysis are distinguished by their output, images vs. scene information, and by the challenges faced and methods employed.

Image enhancement has been done by chemical, optical, and electronic means, while analysis has been done mostly by humans and by electronic means.

Digital image processing is a subset of the electronic domain wherein the image is converted to an array of small integers, called pixels, representing a physical quantity such as scene radiance, stored in a digital memory, and processed by computer or other digital hardware. Digital image processing, whether performing enhancement for human observers or autonomous analysis, offers advantages in cost, speed, and flexibility, and with the rapidly falling price and rising performance of personal computers it has become the dominant method in use.
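To make the pixel-array idea and the enhancement/analysis distinction concrete, here is a minimal sketch in plain Python. It assumes 8-bit pixels (0 to 255); the sample image, the function names `stretch_contrast` and `bright_region_centroid`, and the threshold value are illustrative choices, not methods taken from this article.

```python
# A tiny 4x6 grayscale image: each pixel is a small integer
# (assumed here to be 8-bit, 0-255) standing in for scene radiance.
image = [
    [10, 10, 12, 11, 10, 10],
    [10, 60, 62, 61, 10, 11],
    [11, 61, 63, 60, 12, 10],
    [10, 10, 11, 10, 10, 10],
]


def stretch_contrast(img):
    """Enhancement: image in, image out.

    Linearly maps the range [min, max] of the input onto [0, 255].
    """
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    if hi == lo:
        # Flat image: nothing to stretch, return a copy.
        return [row[:] for row in img]
    return [[(p - lo) * 255 // (hi - lo) for p in row] for row in img]


def bright_region_centroid(img, threshold):
    """Analysis: image in, scene information out.

    Returns the (row, col) centroid of all pixels above `threshold`,
    or None if no pixel qualifies.
    """
    coords = [
        (r, c)
        for r, row in enumerate(img)
        for c, p in enumerate(row)
        if p > threshold
    ]
    if not coords:
        return None
    n = len(coords)
    return (sum(r for r, _ in coords) / n, sum(c for _, c in coords) / n)


enhanced = stretch_contrast(image)                # still an image
position = bright_region_centroid(enhanced, 128)  # a fact about the scene
print(position)
```

The first function returns another array of pixels, while the second returns a position, which is the difference in output that separates enhancement from analysis.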

The Challenge

An image is not a direct measurement of the properties of the physical objects being viewed. Rather, it is the result of a complex interaction among several physical processes: the intensity and distribution of illuminating radiation, the physics of the interaction of the radiation with the matter comprising the scene, the geometry of projection of the reflected or transmitted radiation from 3 dimensions to the 2 dimensions of the image plane, and the electronic characteristics of the sensor. Unlike, for example, writing a compiler, where an algorithm backed by formal theory exists for translating a high-level computer language to machine language, there is no algorithm and no comparable theory for extracting scene information of interest, such as the position or quality of an article of manufacture, from an image.
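The projection step alone shows why an image is not a direct measurement. The sketch below assumes an idealized pinhole camera, a model this article does not name; the function `project`, the `focal_length` parameter, and the two sample points are hypothetical and chosen only for illustration.

```python
def project(point, focal_length=1.0):
    """Perspective projection of a 3-D point (X, Y, Z) onto a 2-D image plane.

    Assumes an idealized pinhole camera looking along the +Z axis.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (Z > 0)")
    return (focal_length * x / z, focal_length * y / z)


# Two different scene points that lie on the same ray through the pinhole.
near = (1.0, 2.0, 4.0)
far = (2.0, 4.0, 8.0)

# Both land on the same image location, so depth cannot be recovered
# from a single image point without further knowledge of the scene.
print(project(near), project(far))
```

Because distinct scene points map to one image point, going from image back to scene requires assumptions or extra information that the image itself does not carry.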

The challenge is often underappreciated by novice users because of the seeming effortlessness with which their own visual system extracts information from scenes. Human vision is enormously more sophisticated than anything we can engineer at present or in the foreseeable future. Thus one must be

image processing is used to verify that the correct tire is installed on vehicles at GM.