In the case of real data, we observe that $\alpha_m/\alpha_m^\perp$ starts off low (around 1) in early training, increases to a maximum (about 40) within the first few epochs, and then returns to a low value (around 1) at the end of training.[^18] In the plot of $\alpha_m/\alpha_m^\perp$ against training loss, we see that when actual learning happens, that is, when the loss comes down, $\alpha_m/\alpha_m^\perp$ stays around 20. In other words, when training with real labels, each training example in our set of 50K examples used to measure coherence helps many other examples.
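The reading of $\alpha_m/\alpha_m^\perp$ as "how many examples each example helps" can be illustrated with a small sketch. It assumes the definition of coherence used earlier in the paper, namely $\alpha_m = \|\frac{1}{m}\sum_i g_i\|^2 / (\frac{1}{m}\sum_i \|g_i\|^2)$ for per-example gradients $g_i$, with $\alpha_m^\perp = 1/m$; the function name `coherence_ratio` and the toy gradients are hypothetical and not taken from the experiments.

```python
def coherence_ratio(grads):
    """Return alpha_m / alpha_m_perp for a list of per-example gradients.

    Assumption (not restated in this section): alpha_m is the squared norm
    of the mean gradient divided by the mean squared gradient norm, and
    alpha_m_perp = 1/m is its value for pairwise orthogonal gradients.
    """
    m = len(grads)
    dim = len(grads[0])
    mean_grad = [sum(g[j] for g in grads) / m for j in range(dim)]
    sq_norm_of_mean = sum(x * x for x in mean_grad)
    mean_sq_norm = sum(sum(x * x for x in g) for g in grads) / m
    alpha = sq_norm_of_mean / mean_sq_norm
    return alpha * m  # dividing by alpha_m_perp = 1/m


# Toy data: six examples forming two groups of three identical gradients,
# with the two groups orthogonal to each other.
clustered = [[1.0, 0.0]] * 3 + [[0.0, 1.0]] * 3
ratio_clustered = coherence_ratio(clustered)

# Toy data: four examples that all share the same gradient.
aligned = [[1.0, 2.0]] * 4
ratio_aligned = coherence_ratio(aligned)

print(ratio_clustered, ratio_aligned)
```

In the clustered toy case the ratio equals the group size (3), and in the fully aligned case it equals the sample size (4), which is consistent with reading the ratio as roughly the number of examples, counting itself, that each example's gradient step helps.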

In contrast, for random data, although the evolution of $\alpha_m/\alpha_m^\perp$ is similar to that of real data, the actual values, particularly the peak, are very different. $\alpha_m/\alpha_m^\perp$ starts off low (around 1), increases slightly (usually staying below 5), and then returns to a low value (around 1). Therefore, each training example in the case of random data helps only one or two other examples during training; that is, the 50K random examples used to estimate coherence are more or less orthogonal to each other.[^19]
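To make the link between a ratio near 1 and orthogonality explicit, here is a short worked derivation. It assumes the definition of coherence from earlier in the paper, restated here as an assumption: for per-example gradients $g_1, \dots, g_m$,

$$
\alpha_m = \frac{\left\| \frac{1}{m} \sum_{i=1}^{m} g_i \right\|^2}{\frac{1}{m} \sum_{i=1}^{m} \| g_i \|^2}.
$$

If the gradients are pairwise orthogonal, that is, $g_i \cdot g_j = 0$ for $i \neq j$, the cross terms vanish and $\left\| \sum_i g_i \right\|^2 = \sum_i \| g_i \|^2$, so

$$
\alpha_m = \frac{\frac{1}{m^2} \sum_{i=1}^{m} \| g_i \|^2}{\frac{1}{m} \sum_{i=1}^{m} \| g_i \|^2} = \frac{1}{m} = \alpha_m^\perp,
\qquad \text{and hence} \qquad \frac{\alpha_m}{\alpha_m^\perp} = 1.
$$

Under this assumption, a measured ratio between 1 and 5 on random data means the sample is close to this orthogonal limit.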

In summary,

With a ResNet-50 model on real ImageNet data, in a sample of 50K examples, each example helps tens of other examples during training, whereas on random data, each example only helps one or two others.

This provides evidence that the difference in generalization between real and random data stems from a difference in similarity between the per-example gradients in the two cases, that is, from a difference in coherence.

While experiments with other architectures and datasets also show similar differences between real and random datasets (see Appendix E), there are cases when the coherence of random data as measured by $\alpha_m/\alpha_m^\perp$ over the entire network can be surprisingly high for an extended period during training.[^20]

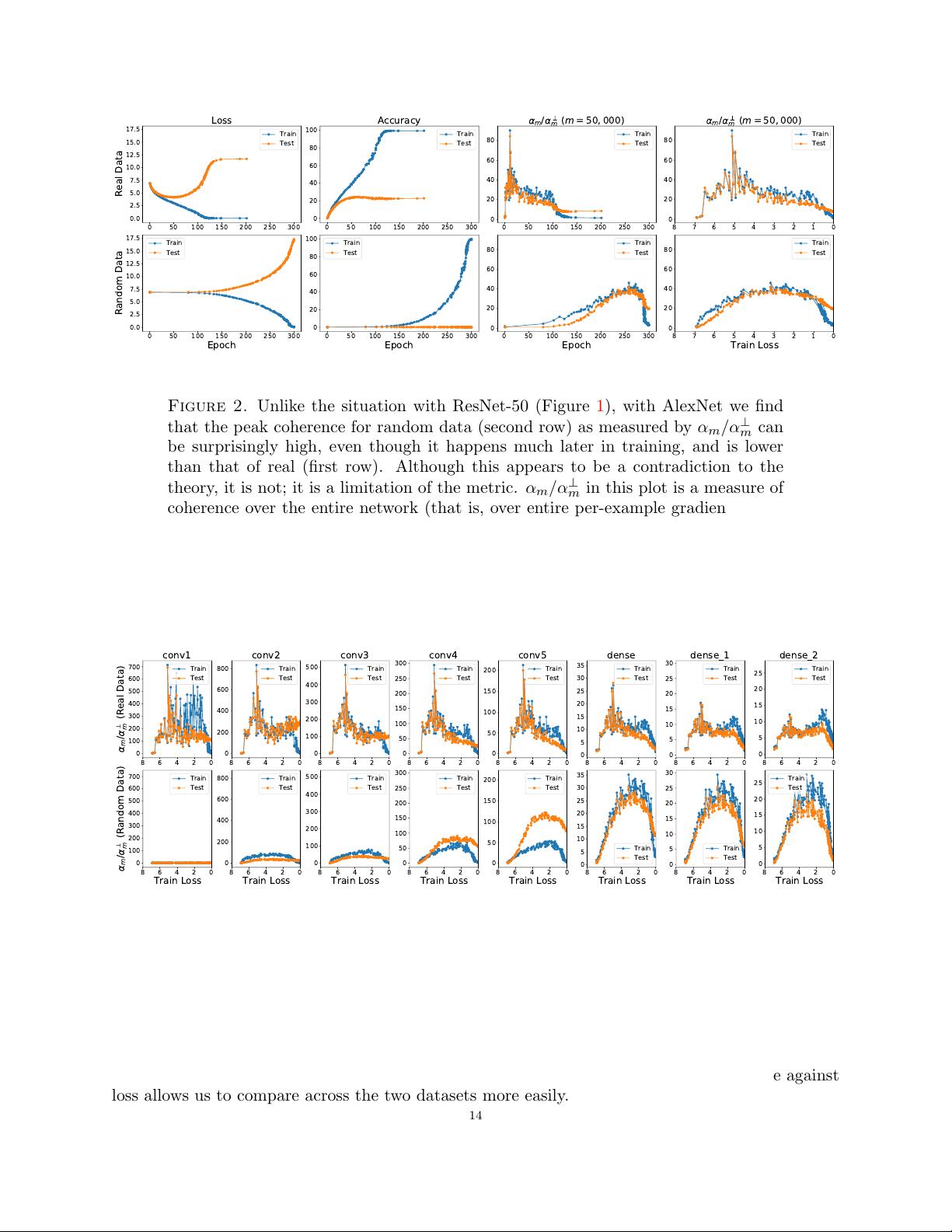

In our experiments, we found an extreme case of this when we replaced the ResNet-50 network in the previous experiment with an AlexNet network (learning rate of 0.01). The training curves and measurements of $\alpha_m/\alpha_m^\perp$ in this case are shown in Figure 2. As we can see, unlike the ResNet-50 case, $\alpha_m/\alpha_m^\perp$ for random data reaches a value of 40 for $m = 50{,}000$. In other words, in a sample of 50K examples, at peak coherence, each random example helps 40 other examples![^21]

What is going on? An examination of the per-layer values of $\alpha_m/\alpha_m^\perp$ provides some insight. These are shown in Figure 3. We see that for the first convolution layer (conv1), in the case of random data and only in that case, $\alpha_m/\alpha_m^\perp$ is approximately 1, indicating that the per-example gradients in that layer are pairwise orthogonal (at least over the sample used to measure coherence).[^22] This indicates that the first layer plays an important role in “memorizing” the random data, since each example is pushing the parameters of the layer in a different direction (orthogonal to the rest). This is not surprising since the images are composed of random pixels.
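A per-layer measurement of this kind can be sketched by restricting each per-example gradient to the coordinates of one layer before computing the ratio. The sketch below is illustrative only: it assumes the same definition of $\alpha_m$ as the squared norm of the mean gradient over the mean squared gradient norm, with $\alpha_m^\perp = 1/m$, and the helper names, the layer names `conv1` and `fc`, and the toy gradients are hypothetical.

```python
def coherence_ratio(grads):
    """alpha_m / alpha_m_perp, assuming alpha_m = ||mean g||^2 / mean ||g||^2
    and alpha_m_perp = 1/m (an assumption restated from earlier in the paper)."""
    m = len(grads)
    dim = len(grads[0])
    mean_grad = [sum(g[j] for g in grads) / m for j in range(dim)]
    sq_norm_of_mean = sum(x * x for x in mean_grad)
    mean_sq_norm = sum(sum(x * x for x in g) for g in grads) / m
    return m * sq_norm_of_mean / mean_sq_norm


def per_layer_ratios(grads, layer_slices):
    """Compute the ratio separately on each layer's slice of the gradient.

    layer_slices maps a (hypothetical) layer name to the (start, stop)
    index range that the layer occupies in the flattened gradient.
    """
    return {
        name: coherence_ratio([g[start:stop] for g in grads])
        for name, (start, stop) in layer_slices.items()
    }


# Toy gradients for three examples over five parameters: the first three
# coordinates (labelled "conv1") are pairwise orthogonal across examples,
# while the last two (labelled "fc") are identical across examples.
grads = [
    [1.0, 0.0, 0.0, 1.0, 1.0],
    [0.0, 1.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0, 1.0],
]
layers = {"conv1": (0, 3), "fc": (3, 5)}

layer_ratios = per_layer_ratios(grads, layers)
whole_network_ratio = coherence_ratio(grads)
print(layer_ratios, whole_network_ratio)
```

In this toy setup the whole-network ratio is well above 1 even though the `conv1` slice sits at the orthogonal limit, which mirrors how a network-wide measurement can hide a layer whose per-example gradients are pairwise orthogonal.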

[^18]: For now, we ignore the small differences in training and test coherence.

[^19]: We note here that very early in training, that is, in the first few steps (not shown in Figure 1, but presented in Figure 16 instead), $\alpha_m/\alpha_m^\perp$ can be very high even for random data due to imperfect initialization. All the training examples are coordinated in moving the network to a more reasonable point in parameter space. As may be expected from our theory, this movement generalizes well: the test loss decreases in concert with the training loss in this period. Rapid changes to the network early in training are well documented (see, for example, the need for learning rate warmup in He et al. [2016] and Goyal et al. [2017]).

[^20]: As we discussed earlier, coherence even for random data can be high for a short period early in training due to imperfections in initialization. The difference here is that the high coherence is sustained.

[^21]: That said, note that (1) even in this case, the peak $\alpha_m/\alpha_m^\perp$ for real data is more than 2× the peak for random data; and (2) the high coherence of random data occurs much later in training than that of real data, which possibly indicates the importance of the “expansion term” ($[\eta_k \beta]_{k=t+1}^{T}$) in the bound of Theorem 1 (see discussion in Section 5).

[^22]: The difference in $\alpha_m/\alpha_m^\perp$ in the first layer between real and random is also seen when the entire training set is used to measure $\alpha_m/\alpha_m^\perp$ (Figure 19).
