Draft

Copyright of Marco Zuliani 2008–2011 Draft

θ. We define the error associated with the datum d with respect to the model space

as the distance from d to M(θ):

e

M

(d, θ)

def

= min

d

0

∈M(θ)

dist(d, d

0

)

where dist(·, ·) is an appropriate distance function. Using this error metric, we define

the CS as:

S (θ)

def

= {d ∈ D : e

M

(d; θ) ≤ δ} (3.1)

where δ is a threshold that can either be inferred from the nature of the problem

or, under certain hypothesis, estimated automatically [WS04] (see Figure 3.1 for a

pictorial representation of the previous definitions). In the former case, if we want

Draft

Copyright of Marco Zuliani 2008–2011 Draft

more than 50% of outliers.

Despite many modifications, the RANSAC algorithm is essentially composed of

two steps that are repeated in an iterative fashion (hypothesize–and–test framework):

• Hypothesize. First minimal sample sets (MSSs) are randomly selected from

the input dataset and the model parameters are computed using only the el-

ements of the MSS. The cardinality of the MSS is the smallest sufficient t o

determine the model parameters

2

(as opposed to other approaches, such as

least squares, where the parameters are estimated using all the data available,

possibly with appr o p r i a t e weights).

• Test. In the second step RANSAC checks wh ich elements of the entir e dataset

are consistent with the mo d e l instantiated with the parameters estimated in

the first step. The set of such elements is called consensus set (CS).

RANSAC terminates wh en the proba b i l i ty of finding a better ranked CS d r op s be-

low a certain threshold. In the origin a l formulation the ranking of the CS was its

cardinality ( i.e. CSs that contain more elements are ranked better than CSs t hat

contain fewer elem ents).

3.2 Preliminaries

To facilitate the discussion that follows, it is convenient to intro duce a suitable

formalism to describe the steps for the estimation of the mod el param et er s and for the

construction of the CS. As usual we will denot e vectors with boldface letters and the

superscript

(h)

will indicate the h

th

iteration. The symbol ˆx indicates the estimated

value of the quantity x. The input dataset which is composed of N elements is

indicated by D = {d

1

,...,d

N

} and we will indicate a MSS with the letter s. Let

θ ({d

1

,...,d

h

})betheparametervectorestimatedusingthesetofdata{d

1

,...,d

h

},

where h ≥ k and k is the cardin a l i ty of the MSS. The model space M is defined as:

M(θ)

def

=

d ∈ R

d

: f

M

(d; θ)=0

where θ is a parameter vector and f

M

is a smooth function whose zero level set

contains all the points that fit the model M in st a ntiated with the parameter vector

2

Suppose we want to estimate a line: in this case the cardinality of the MSS is 2, since at least

two distinct points are needed to uniquely define a line.

16

Draft

Copyright of Marco Zuliani 2008–2011 Draft

more than 50% of out l i er s.

Despite many modifications, th e RANSAC algori th m is essential l y composed of

two steps that are repeated in an iterative fashion (hypothesize–and–test framework):

• Hypothesize. First minimal sample sets (MSSs) are randomly selected from

the input dataset and the model parameters are computed using only the el-

ements of the MSS. The cardinality of the MSS is the smallest sufficient to

determine th e model parameters

2

(as opposed to other approaches, such as

least squares, where the parameter s are estimated using all the data available,

possibly with appr o p r i a t e wei g hts) .

• Test. In the second step RANSAC ch ecks which elements of t h e entire dataset

are consist ent with th e model instantiated with t h e parameters estimated in

the first step. The set of such elements is called consensus set (CS).

RANSAC terminates wh en the probabili ty of finding a better ranked CS drops be-

low a certain thr esh o l d. In the original formulation the ranking of the CS was its

cardinality ( i.e. CSs that contain more elements are ranked bett er than CSs that

contain fewer elements).

3.2 Preliminaries

To facilitate the discussion that follows, it is convenient to introduce a suitable

formalism to describe the steps for t h e estimati on of the model parameters and for the

construction of the CS. As usual we will denote vectors with boldface letters and the

superscript

(h)

will indicate the h

th

iteration. The symbol ˆx indicates the estimat ed

value of t h e quantity x. The inpu t dataset whi ch is composed of N el ements is

indicated by D = {d

1

,...,d

N

} and we will indicate a MSS with the letter s. Let

θ ({d

1

,...,d

h

})betheparametervectorestimatedusingthesetofdata{d

1

,...,d

h

},

where h ≥ k and k is the cardinality of t h e MSS. The model space M is defined as:

M(θ)

def

=

d ∈ R

d

: f

M

(d; θ)=0

where θ is a parameter vector and f

M

is a smooth function whose zero level set

contains all the points that fit the model M instantiated with t h e par a m et er vector

2

Suppose we want to estimate a line: in this case the cardinality of the MSS is 2, since at least

two distinct points are needed to uniquely define a line.

16

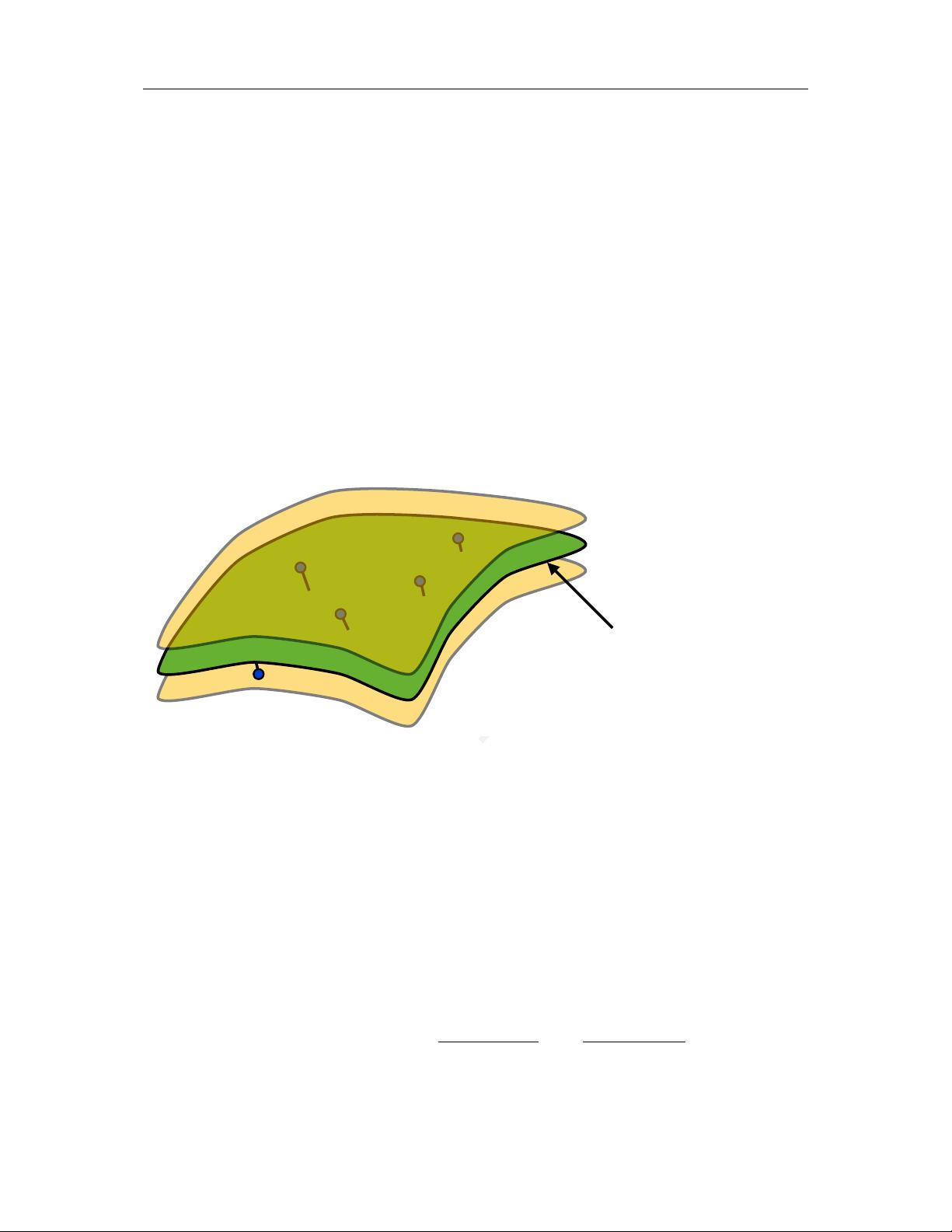

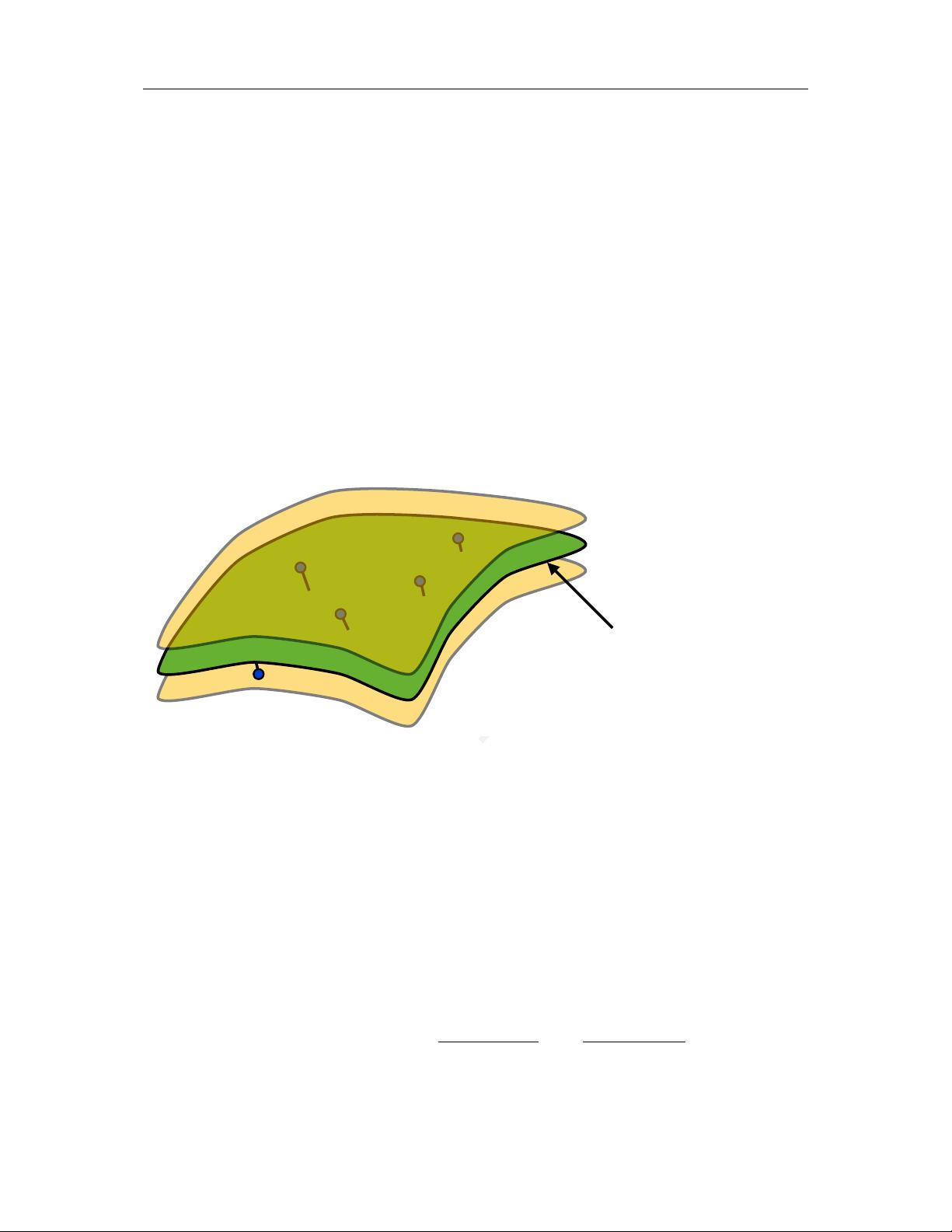

Figure 3.1: This figure pictorially displays the model space M as a green surface (the

locus for which f

M

(d; θ) = 0). The yellow surfaces represent the boundaries for a

datum to be considered an inlier (imagine that the distance function is the Euclidean

distance, hence the smallest distance between any two points on the yellow surface

and green surface is δ). Note that the structure of the green surface is both defined

by the model M and by the parameter vector θ. The inliers, represented as blue

dots, lie in between the two yellow “crusts”.

to relate the value of δ to the statistics of the noise that affects the data and the

distance function is the Euclidean norm, we can write:

e

M

(d, θ) = min

d

0

∈M(θ)

v

u

u

t

n

X

i=1

(d

i

− d

0

i

)

2

=

v

u

u

t

n

X

i=1

(d

i

− d

∗

i

)

2

17