BUCH et al.: REVIEW OF COMPUTER VISION TECHNIQUES FOR ANALYSIS OF URBAN TRAFFIC 923

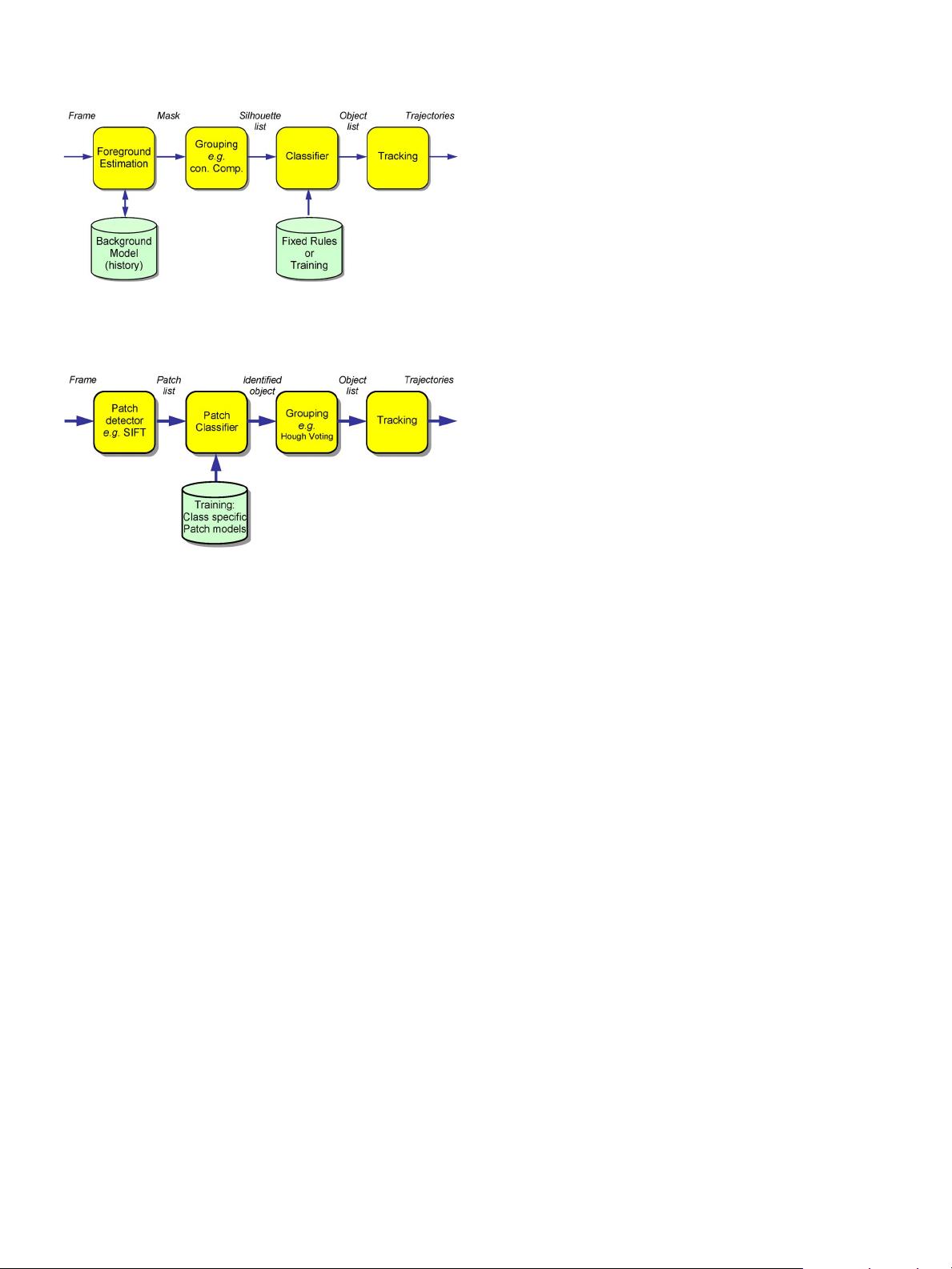

Fig. 2. Block diagram for a top–down surveillance system. The grouping of

pixels in the foreground mask into silhouettes that represent objects is done

early with a simple algorithm without knowledge of object classes.

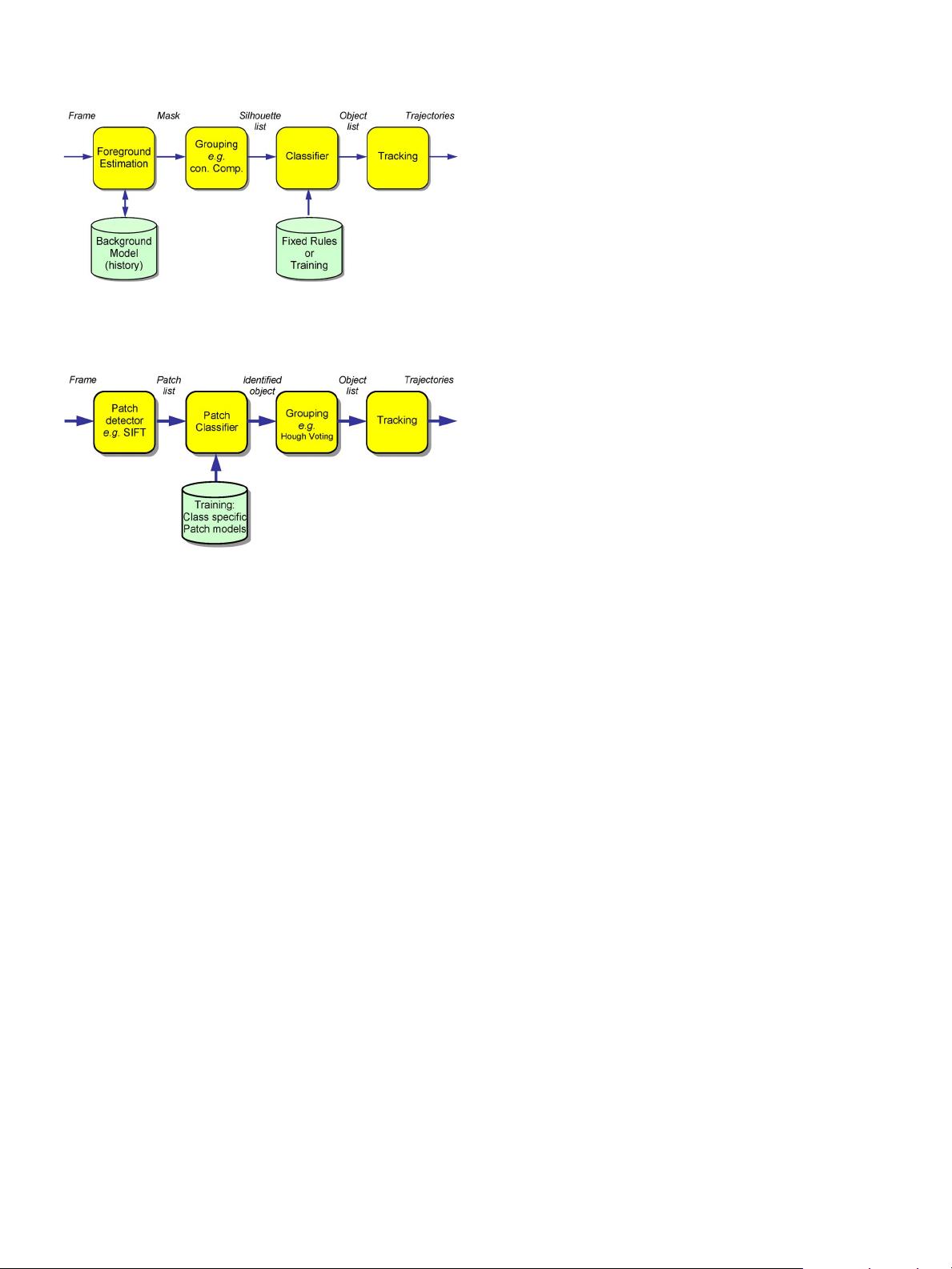

Fig. 3. Block diagram for a bottom–up surveillance system. Local image

patches are first extracted from the input image and classified as a specific

part of a trained object class. The identified parts are combined into objects

based on the class through a grouping or voting process. Advanced tracking

concepts [83] allow this grouping to be performed in the spatial–temporal

domain, which directly produces an object trajectory rather than frame-per-

frame object detections.

the processing pipeline of a typical video analytics application:

foreground estimation (see Section III-A), classification (see

Section III-B), and tracking (see Section III-D). See Fig. 2

for a block diagram. A statistical model typically estimates

foreground pixels, which are then grouped with a basic model

(e.g., connected regions) and propagated through the system

until the classification stage; for example, see [14], [21], [29],

[45], [51], [56], and [98]. Classification then uses prior infor-

mation (previously learned or preprogrammed) about the object

classes to assign a class label. For the remainder of this paper,

we will refer to this class of algorithms as “top–down” or

“object based,” because pixels are grouped into objects early

during the processing.
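
The early grouping step of the top–down pipeline can be illustrated with a small sketch. It labels 4-connected regions of a binary foreground mask, which is one common reading of the "connected regions" model mentioned above. The function name `label_connected_regions`, the choice of 4-connectivity, and the use of nested lists as a stand-in for a mask image are assumptions made for illustration, not details taken from the cited systems.

```python
from collections import deque


def label_connected_regions(mask):
    """Group foreground pixels (non-zero) into 4-connected regions.

    Returns a label image (0 = background, 1..n = region index) and n.
    No knowledge of object classes is used at this stage.
    """
    rows, cols = len(mask), len(mask[0])
    labels = [[0] * cols for _ in range(rows)]
    region_count = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or labels[r][c]:
                continue
            # New unlabeled foreground pixel: flood-fill its region.
            region_count += 1
            labels[r][c] = region_count
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and mask[ny][nx] and not labels[ny][nx]:
                        labels[ny][nx] = region_count
                        queue.append((ny, nx))
    return labels, region_count


# Toy foreground mask with two separate silhouettes.
mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 1, 1],
]
labels, region_count = label_connected_regions(mask)
```

Each labeled region would then be passed on as one candidate object to the classification stage.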

In contrast, we define a “bottom–up” approach as one that

first detects and classifies parts of an object (see

Fig. 3). This initial classification of the parts uses learned prior

information (e.g., training) about the final object classes (e.g.,

an image area is classified to be a car wheel or a pedestrian

head based on previously learned appearances of wheels and

heads). The combination of these parts into valid objects and

trajectories is the final step of the algorithm; for example, see

[83], [85], and [104]. This type of approach is typically used in

generic object recognition.
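
As a rough illustration of combining parts into objects by voting, the sketch below lets each classified part cast a vote for an object centre and keeps the centres that collect enough votes. This is a generic voting scheme and not the method of any cited reference. The offset table `PART_TO_CENTRE`, the part names, the bin size, and the vote threshold are all hypothetical values chosen for the example.

```python
from collections import Counter

# Hypothetical "learned" offsets (dx, dy) in pixels from a part to the
# centre of its object; image y grows downward.
PART_TO_CENTRE = {
    ("car", "front_wheel"): (40, -20),
    ("car", "rear_wheel"): (-40, -20),
    ("pedestrian", "head"): (0, 60),
}


def vote_for_objects(parts, bin_size=20, min_votes=2):
    """Accumulate centre votes from classified parts in coarse bins.

    `parts` holds (object_class, part_name, x, y) tuples. Bins that
    receive at least `min_votes` votes are returned as detections.
    """
    votes = Counter()
    for obj_class, part_name, x, y in parts:
        dx, dy = PART_TO_CENTRE[(obj_class, part_name)]
        cell = (obj_class, (x + dx) // bin_size, (y + dy) // bin_size)
        votes[cell] += 1
    return [cell for cell, count in votes.items() if count >= min_votes]


# Two wheels that agree on one car centre, plus an isolated head.
parts = [
    ("car", "front_wheel", 100, 120),
    ("car", "rear_wheel", 182, 121),
    ("pedestrian", "head", 300, 50),
]
objects = vote_for_objects(parts)
```

Hard bins are used only to keep the sketch short; votes that fall near a bin boundary can be split between neighbouring bins, so a practical system would likely smooth or cluster the votes instead.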

In the next section, we will first describe the top–down

approach in more detail, including foreground segmentation

and top–down vehicle classification. This description is followed

by relevant bottom–up classification approaches for traffic

surveillance. The last section considers tracking, which can

equally be applied after either classification method.

A. Foreground Segmentation

Foreground estimation and segmentation is the first stage of

several visual surveillance systems. The foreground regions are

marked (e.g., mask image) for processing in the subsequent

steps. The foreground is defined as every object that is

not part of the fixed furniture of the scene, where fixed could normally

mean months or years. This definition conforms to human

understanding, but it is difficult to algorithmically implement.

There are two main approaches to estimating the foreground,

both of which use strong assumptions to comply with the

aforementioned definition. First, a background model of some

kind can be used to accumulate information about the scene

background of a video sequence. The model is then compared

to the current frame to identify differences (or “motion”),

provided that the camera is stationary. This concept lends itself

well to computer implementation but leads to problems with

slow-moving traffic. Any car should be considered foreground,

but stationary objects are missed due to the lack of motion. The

next five sections discuss different solutions for using motion as

the main cue for foreground segmentation.

A different approach performs segmentation based on whole

object appearances and will be discussed in Section VI. This

approach can be used for moving and for stationary cameras but

requires prior information for foreground object appearances.

This way, the review moves from the simple frame difference

method in the next section to learning-based methods in

Section VI.

1) Frame Differencing: Possibly, the simplest method for

foreground segmentation is frame differencing. A pixel-by-

pixel difference map is computed between two consecutive

frames. This difference is thresholded and used as the fore-

ground mask. This algorithm is very fast; however, it can-

not cope with noise, abrupt illumination changes, or periodic

movements in the background such as trees. In [110], frame

differencing is used to detect street-parking vehicles. Special

care is taken in the algorithm to suppress the influence of noise.

Motorcycles are detected in [102] based on frame differencing.

However, using more information than only the last frame for

subtraction is preferable. This approach leads to the background

subtraction techniques described in the next sections.
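
A minimal sketch of frame differencing as described above: the absolute pixel-by-pixel difference of two consecutive grayscale frames is thresholded to give the foreground mask. The function name `frame_difference_mask`, the threshold of 25 gray levels, and the use of nested lists for frames are assumptions for illustration only.

```python
def frame_difference_mask(prev_frame, curr_frame, threshold=25):
    """Binary foreground mask from two consecutive grayscale frames.

    A pixel is foreground (1) when its absolute intensity change
    between the two frames exceeds `threshold`.
    """
    return [
        [1 if abs(curr - prev) > threshold else 0
         for prev, curr in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev_frame, curr_frame)
    ]


# A bright object moves one pixel to the right; one pixel has mild noise.
prev_frame = [
    [10, 10, 10, 10],
    [10, 200, 10, 10],
    [10, 10, 10, 10],
]
curr_frame = [
    [10, 10, 10, 10],
    [10, 10, 200, 10],
    [10, 10, 12, 10],
]
mask = frame_difference_mask(prev_frame, curr_frame)
```

In this toy case the mask marks both the pixel the object left and the pixel it entered, while the small noise value stays below the threshold. An object that stops moving would produce no difference at all and would vanish from the mask.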

2) Background Subtraction: This group of background

models estimates a background image (i.e., fixed scene), which

is subtracted from the current video frame. A threshold is

applied to the resulting difference image to give the foreground

mask. The threshold can be constant or dynamic, as used in

[51]. The following methods differ in the way the background

picture is obtained, resulting in different levels of image quality

for different levels of computational complexity.
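
The common subtraction and thresholding step can be sketched as follows, with the background image taken as given. The constant threshold of 30 and the dynamic rule (mean plus `k` standard deviations of the difference image) are assumptions chosen for illustration; in particular, the dynamic rule is not the one used in [51].

```python
from statistics import mean, pstdev


def abs_difference(frame, background):
    """Absolute per-pixel difference between a frame and the background."""
    return [[abs(f - b) for f, b in zip(frame_row, bg_row)]
            for frame_row, bg_row in zip(frame, background)]


def dynamic_threshold(diff, k=2.0):
    """Hypothetical adaptive threshold: mean + k * std of the difference."""
    values = [v for row in diff for v in row]
    return mean(values) + k * pstdev(values)


def foreground_mask(diff, threshold):
    """Mark pixels whose difference exceeds the threshold as foreground."""
    return [[1 if v > threshold else 0 for v in row] for row in diff]


background = [[50, 50, 50], [50, 50, 50]]
frame = [[52, 49, 50], [50, 180, 51]]

diff = abs_difference(frame, background)
mask_constant = foreground_mask(diff, threshold=30)
adaptive_t = dynamic_threshold(diff)
mask_dynamic = foreground_mask(diff, adaptive_t)
```

Only the way `background` is obtained changes between the methods that follow; the subtraction and thresholding stay the same.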

a) Averaging: In the background averaging method, all

video frames are summed up into a weighted running average. The learning rate specifies the

weight between a new frame and the background. This algorithm

has little computational cost; however, it is likely to

produce tails behind moving objects due to the contamination

of the background with the appearance of the moving objects.
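
One way to read the averaging method is as an exponential running average, B_t = (1 - a) B_(t-1) + a I_t, where a is the learning rate. The sketch below assumes that form, and it uses an exaggerated learning rate of 0.5 and a threshold of 20 purely to make the tail effect visible on a one-row toy scene.

```python
def update_background(background, frame, learning_rate):
    """Blend the new frame into the background (running average)."""
    return [
        [(1 - learning_rate) * b + learning_rate * f
         for b, f in zip(bg_row, frame_row)]
        for bg_row, frame_row in zip(background, frame)
    ]


def subtract(frame, background, threshold):
    """Foreground mask: pixels that differ from the background estimate."""
    return [
        [1 if abs(f - b) > threshold else 0
         for b, f in zip(bg_row, frame_row)]
        for bg_row, frame_row in zip(background, frame)
    ]


# A bright object (200) moves right over a uniform background (100).
background = [[100.0, 100.0, 100.0, 100.0]]
frames = [
    [[200, 100, 100, 100]],
    [[100, 200, 100, 100]],
    [[100, 100, 200, 100]],
]

# The first two frames contaminate the background estimate.
for frame in frames[:2]:
    background = update_background(background, frame, learning_rate=0.5)

# Segment the third frame against the contaminated background.
mask = subtract(frames[2], background, threshold=20)
```

The object occupies only the third pixel in the last frame, yet the first two pixels are also marked as foreground because the background estimate still contains traces of the object. A smaller learning rate weakens this tail but makes the model slower to absorb genuine scene changes.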