51 52 53 54

41 42 43 44

31 32 33 34

21 22 23 24

EonEonEon

SonEon

NonEon

EonSonEon

SonSonEon

WonSonEon

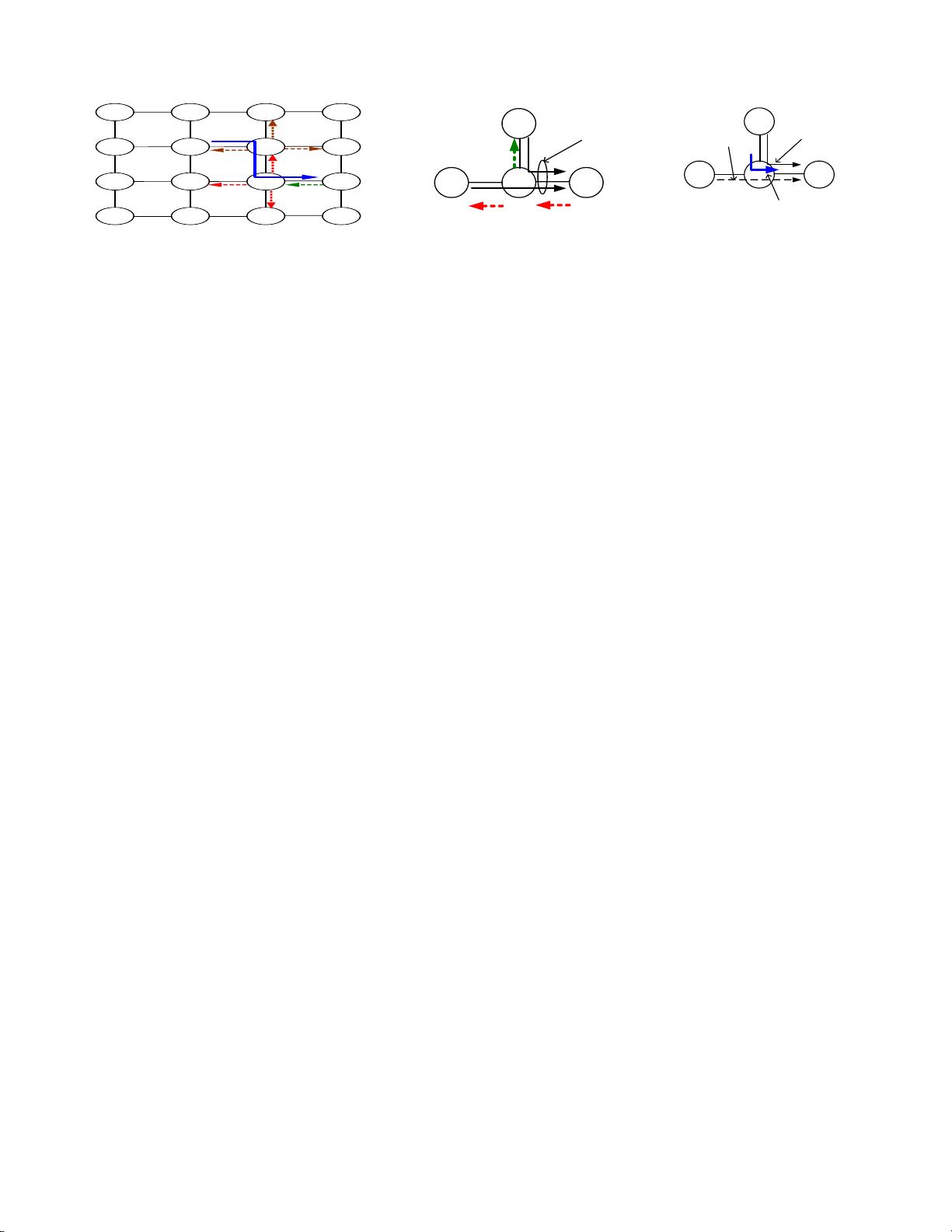

Figure 2. Token forwarding in a network with d

max

=3

32 33 34

EonEon

43

SonEon

Conflict

LA

F1

Eon

LA

F2

Figure 3. Example token conflict

32 33 34

43

LA

F2

LA

F1

LA

F1

successful

LA

F2

killed

Local priority setting at node 33: North

input has priority to use East output

Figure 4. Token conflict resolution

checks to see if there are sufficient resources (greater than b

thr

buffers and v

thr

VCs) at that corresponding input port. For

instance, node 33 is allowed to forward the E

on

token to its

North neighbor (node 43) using an appended S

on

E

on

token,

only if there are sufficient resources at its North input port

(South output port of node 43). All nodes which receive tokens

from node 33 do a similar forwarding to their neighbors by

appending their own tokens, as shown in Fig. 2. This token

forwarding takes place for up to d

max

hops in the network,

thus allowing each node to gather knowledge about resource

availability at all other nodes within its d

max

-hop vicinity.

Propagation of tokens between adjacent nodes uses separate

point-to-point wires which are much narrower than the data

channels. Hence, in a way, TFC makes use of tokens traveling

on separate narrow channels between nodes to optimize com-

munication energy/delay on the wider data channels.

How are tokens used? Tokens provide knowledge of conges-

tionlevelsinad

max

-hop neighborhood. Packets at these nodes

thus use these tokens during RC to form a token route – partial

route of the packet computed for up to the next d

max

hops at

once along less congested sections of the network. Thus, for

instance, the E

on

S

on

E

on

token at node 42 in Fig. 2 can be

used by a packet at that node to travel to node 34 (assuming

node 34 is closer to the packet’s destination) along a three-hop

partial route (assuming d

max

≥ 3) through the East output to

reach node 43, followed by a South hop to node 33 and then

an East hop to node 34 (route shown in bold), thus making use

of the E

on

S

on

E

on

token to route through a path along which

sufficient resources are available.

Once these token routes are known, lookaheads are sent (on

separate channels) ahead of the data flits along these routes

to do control setup at intermediate nodes one cycle prior to

data arrival, thereby allowing the data flit to bypass the router

pipeline and right away traverse the crossbar when it arrives

at these intermediate nodes. In contrast to the baseline, which

allows lookaheads to succeed only under very low loads when

router ports are empty, lookaheads here hold tokens. When such

a lookahead arrives at an intermediate router, it is prioritized to

use its desired switch port over any locally-buffered flits at that

router and hence succeeds in control setup even at high loads.

Moreover, the likelihood of conflicts with other lookaheads is

low along token routes as tokens are forwarded only along less

congested sections in the network. Hence, in the example of

Fig. 2, a packet using the E

on

S

on

E

on

token at node 42 can

bypass intermediate nodes 43 and 33 on its way to node 34.

Are tokens used on a per-flit basis? Yes, tokens are used by

individual flits of a packet. A header flit starting at a node is

free to use any of the available tokens during route computation.

Body/tail flits, on the other hand, do not go through route

computation and need to follow this same route and use tokens

to bypass intermediate routers along that route if such tokens

are available or else they traverse that route without tokens.

Similar to the baseline VC flow control design which uses a

VC control table at each router to store information about each

traversing packet [7], a packet’s route in the token scheme is

stored in a control table at each intermediate router as its header

flit traverses it, which allows the subsequent body/tail flits to

follow the same route as the header.

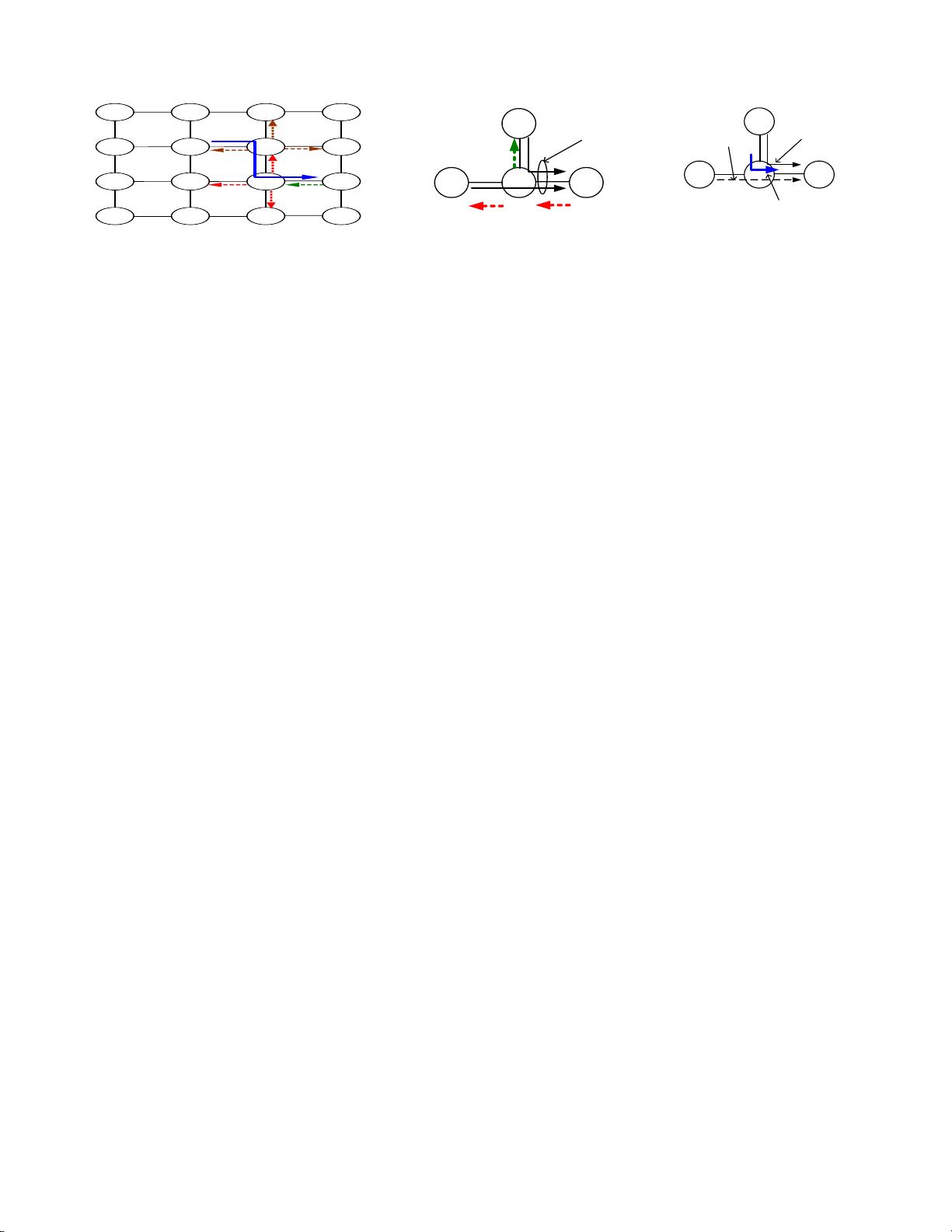

How are conflicts between multiple flits using tokens re-

solved? As discussed above, tokens are forwarded by broadcast-

ing them in the network. However, since broadcasting involves

forwarding the same token along multiple directions, it can

lead to conflicts when multiple lookaheads (corresponding to

different flits) arrive at a router from different input directions

holding the token for the same output port to bypass that

router. Fig. 3 shows an example of such a conflict where two

lookaheads for flit F1 (using token S

on

E

on

) and flit F2 (using

token E

on

E

on

) arrive simultaneously at router 33 at its North

and West input ports, respectively, holding the token to go out

at the East output.

To resolve such conflicts, each router maintains a local switch

port priority vector which has one entry for each output port

which denotes the particular input port which has priority to

use that output port. If an incoming lookahead matches the

priority setting for its desired output port at that router, it is

deemed to have won the switch. For instance, as shown in Fig. 4,

the current priority setting at node 33 denotes the North input

having priority to use the East output. Hence, the lookahead for

F1 (which matches this priority setting) is allowed to succeed

while the lookahead for F2 is killed. This priority vector is

varied dynamically to balance the request queues at each input

based on the observed traffic, as explained in Section 4.4.

What happens when a lookahead carrying a token

gets killed? Normal tokens only act as hints of resource

(buffers/VCs) availability in the network. They do not need to

guarantee resources at the endpoint node of a flit’s token route.

Hence, a check for a free buffer/VC is done at each intermediate

node along the token route and a lookahead carrying a token is

killed at an intermediate node if there are no free buffers or VCs

available at the next hop along the token route. A lookahead

might also be killed due to conflicts with other lookaheads

(as described above in the answer to the previous question).

When a flit’s lookahead gets killed at an intermediate node,

its corresponding data flit cannot bypass that node and needs

to get buffered there while going through the normal pipeline.

The key thing to note here is that since a check for a free