Deep Learning Fundamentals and Applications of Convolutional Neural Networks Explained

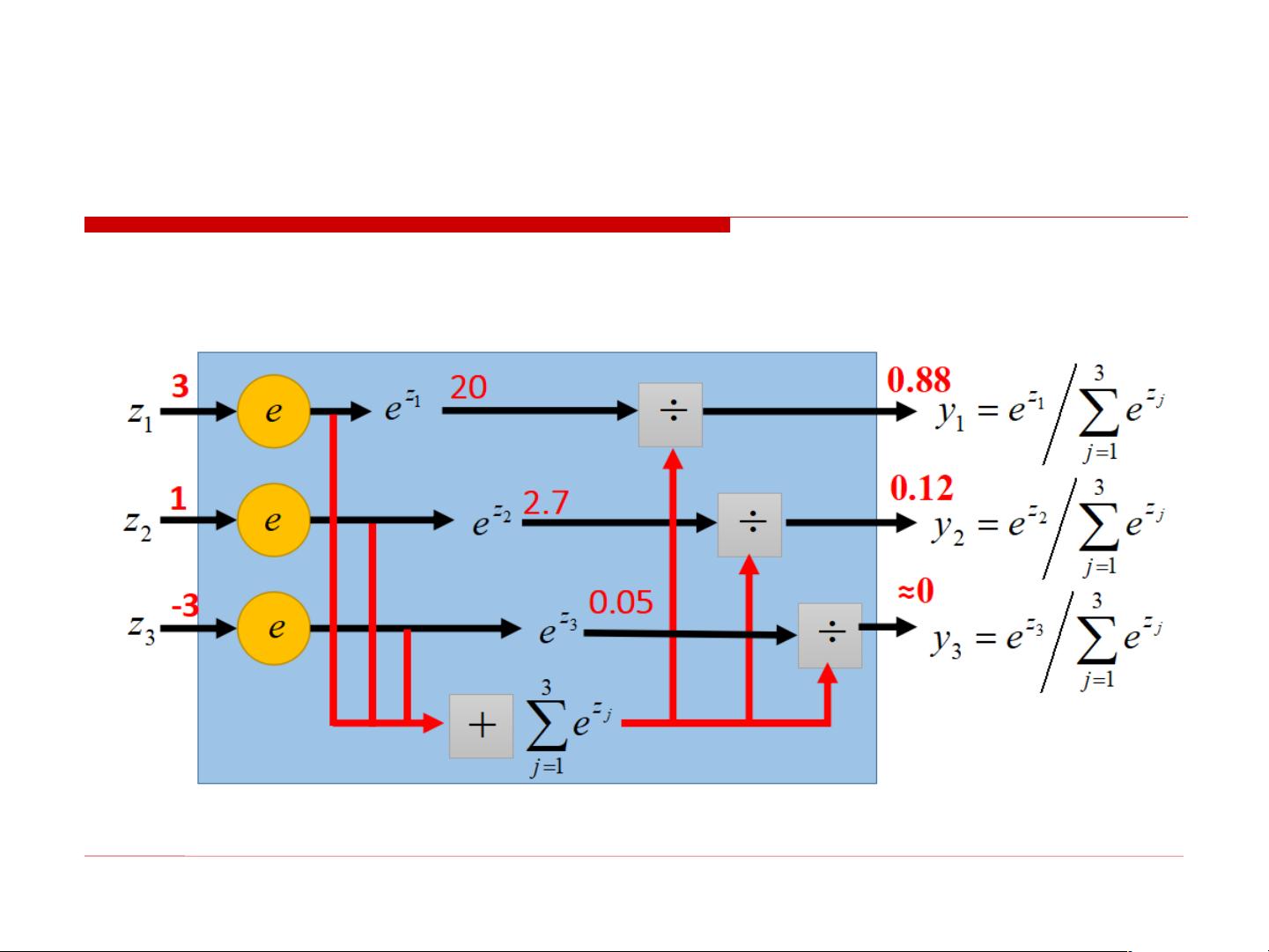

The first lecture on deep learning fundamentals focused on convolutional neural networks (CNNs), covering their structure and practical applications. It also introduced loss functions, which measure the error between a model's predicted values and the actual (target) values. In particular, it covered mean squared error (MSE) and error backpropagation, in which the chain rule of differentiation is used to compute the gradient of the loss with respect to each weight and gradient descent uses those gradients to update the weights.
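
To show how MSE, the chain rule, and gradient descent fit together, here is a minimal sketch in plain Python. It assumes a toy one-variable linear model ŷ = w·x + b and toy data generated from y = 2x + 1; neither comes from the lecture. The function names `mse`, `gradient_step`, and `train`, as well as the learning rate and step count, are hypothetical choices. The loss is L = (1/n) Σᵢ (ŷᵢ − yᵢ)², and the chain rule gives ∂L/∂w = Σᵢ (∂L/∂ŷᵢ)(∂ŷᵢ/∂w).

```python
def mse(y_pred, y_true):
    """Mean squared error: the average of the squared prediction errors."""
    n = len(y_true)
    return sum((p - t) ** 2 for p, t in zip(y_pred, y_true)) / n


def gradient_step(w, b, xs, ys, lr):
    """One gradient-descent step for the (assumed, toy) model y_hat = w * x + b.

    Chain rule: dL/dw = sum_i dL/dy_hat_i * dy_hat_i/dw, where
    dL/dy_hat_i = 2 * (y_hat_i - y_i) / n, dy_hat_i/dw = x_i, dy_hat_i/db = 1.
    """
    n = len(xs)
    preds = [w * x + b for x in xs]                         # forward pass
    dl_dpred = [2 * (p - y) / n for p, y in zip(preds, ys)]  # dL/dy_hat_i
    grad_w = sum(d * x for d, x in zip(dl_dpred, xs))        # chain rule for w
    grad_b = sum(dl_dpred)                                   # chain rule for b
    return w - lr * grad_w, b - lr * grad_b                  # move against the gradient


def train(xs, ys, lr=0.05, steps=500):
    """Repeat gradient-descent steps starting from w = b = 0 (hypothetical settings)."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        w, b = gradient_step(w, b, xs, ys, lr)
    return w, b


if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0]
    ys = [1.0, 3.0, 5.0, 7.0]  # toy data generated from y = 2x + 1
    w, b = train(xs, ys)
    print(f"w={w:.3f}, b={b:.3f}, loss={mse([w * x + b for x in xs], ys):.6f}")
```

Because the toy data lie exactly on y = 2x + 1, the trained parameters should end up close to w = 2 and b = 1, with the MSE close to zero.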

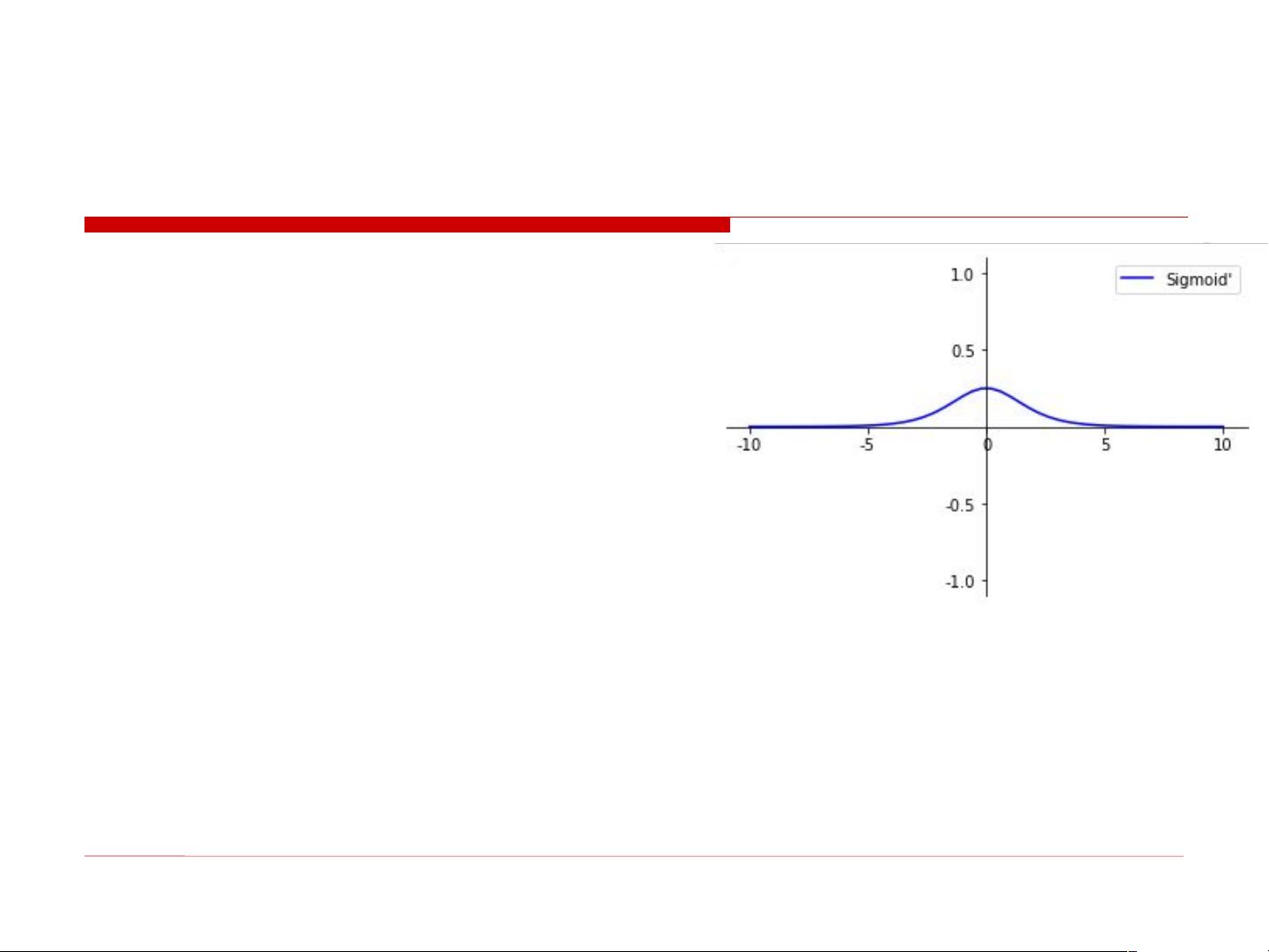

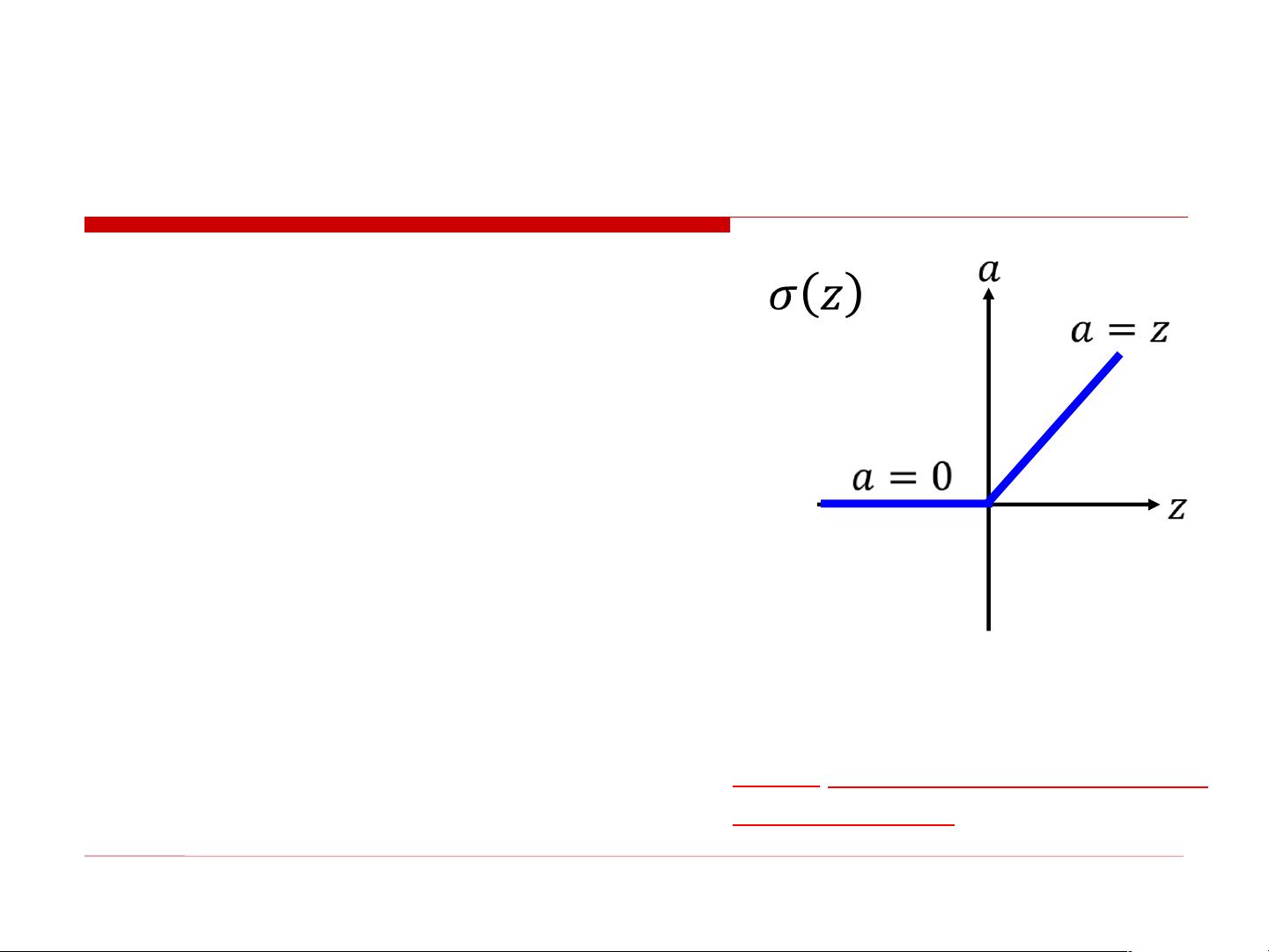

The lecture also reviewed traditional neural network structures and the limits of the backpropagation algorithm in training them, especially the vanishing gradient problem in deep networks. Because the chain rule multiplies together one derivative per layer, the gradient shrinks as it is propagated back toward the early layers whenever those per-layer derivatives are small. The Sigmoid activation function is a common example: its derivative never exceeds 0.25, so with moderate weights the gradient can shrink exponentially with network depth.
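
The effect can be sketched numerically. The example below assumes a hypothetical stack of scalar layers aₖ = σ(w·aₖ₋₁) with weight w = 1 and input 0. This is a toy setup for illustration, not the lecture's network. `chain_gradient` is a hypothetical helper that multiplies the per-layer derivatives as the chain rule prescribes, using σ′(z) = σ(z)(1 − σ(z)).

```python
import math


def sigmoid(z):
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_derivative(z):
    """sigma'(z) = sigma(z) * (1 - sigma(z)); its maximum is 0.25, reached at z = 0."""
    s = sigmoid(z)
    return s * (1.0 - s)


def chain_gradient(depth, x=0.0, weight=1.0):
    """d(output)/d(input) through `depth` stacked toy layers a_k = sigmoid(weight * a_{k-1}).

    By the chain rule this equals the product over layers of weight * sigma'(z_k).
    """
    a = x
    grad = 1.0
    for _ in range(depth):
        z = weight * a
        grad *= weight * sigmoid_derivative(z)  # multiply in this layer's local derivative
        a = sigmoid(z)                          # forward to the next layer
    return grad


if __name__ == "__main__":
    for depth in (1, 5, 10, 20):
        print(f"depth={depth:2d}  gradient={chain_gradient(depth):.3e}")
```

The printed gradient shrinks rapidly as depth grows. With w = 1, every factor is at most 0.25, so the gradient through 10 such layers is already below 0.25¹⁰ (about 10⁻⁶).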

Overall, the lecture stressed the importance of understanding the fundamentals of deep learning, convolutional neural networks, and loss functions, as well as the challenges of training deep neural networks, and gave an overview of key concepts and practical applications in the field.
