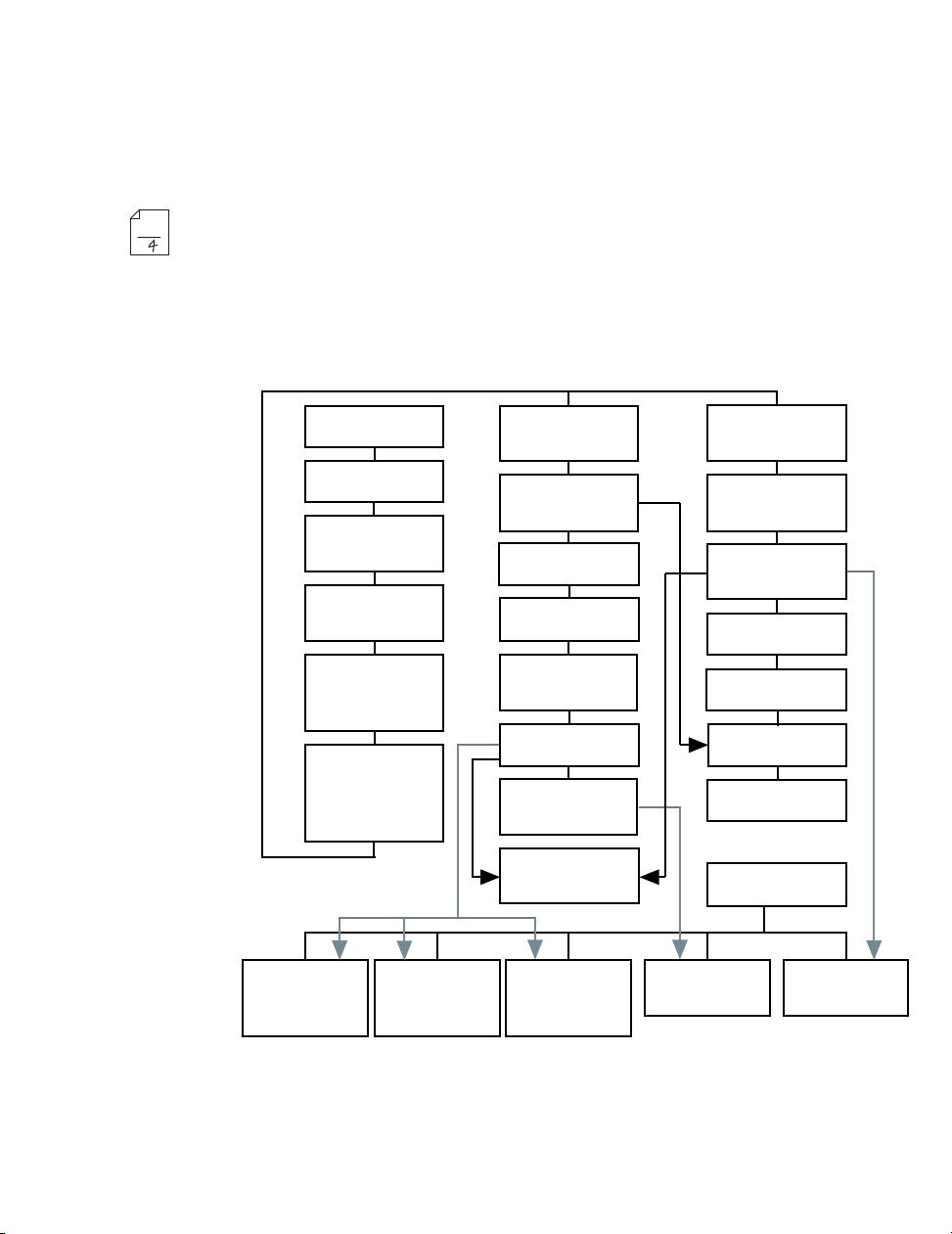

sic neural network architectures. The notation that is introduced in this chapter is used throughout the book. In Chapter 3 we present a simple pattern recognition problem and show how it can be solved using three different types of neural networks. These three networks are representative of the types of networks that are presented in the remainder of the text. In addition, the pattern recognition problem presented here provides a common thread of experience throughout the book.

Much of the focus of this book will be on methods for training neural networks to perform various tasks. In Chapter 4 we introduce learning algorithms and present the first practical algorithm: the perceptron learning rule. The perceptron network has fundamental limitations, but it is important for historical reasons and is also a useful tool for introducing key concepts that will be applied to more powerful networks in later chapters.

One of the main objectives of this book is to explain how neural networks operate. For this reason we will weave together neural network topics with important introductory material. For example, linear algebra, which is the core of the mathematics required for understanding neural networks, is reviewed in Chapters 5 and 6. The concepts discussed in these chapters will be used extensively throughout the remainder of the book.

Chapters 7 and 15–19 describe networks and learning rules that are heavily inspired by biology and psychology. They fall into two categories: associative networks and competitive networks. Chapters 7 and 15 introduce basic concepts, while Chapters 16–19 describe more advanced networks.

Chapters 8–14 and 17 develop a class of learning called performance learning, in which a network is trained to optimize its performance. Chapters 8 and 9 introduce the basic concepts of performance learning. Chapters 10–13 apply these concepts to feedforward neural networks of increasing power and complexity, Chapter 14 applies them to dynamic networks, and Chapter 17 applies them to radial basis networks, which also use concepts from competitive learning.

Chapters 20 and 21 discuss recurrent associative memory networks. These networks, which have feedback connections, are dynamical systems. Chapter 20 investigates the stability of these systems. Chapter 21 presents the Hopfield network, which has been one of the most influential recurrent networks.

Chapters 22–27 are different from the preceding chapters. Previous chapters focus on the fundamentals of each type of network and its learning rules. The focus is on understanding the key concepts. In Chapters 22–27, we discuss some practical issues in applying neural networks to real-world problems. Chapter 22 describes many practical training tips, and Chapters 23–27 present a series of case studies, in which neural networks are applied to practical problems in function approximation, probability estimation, pattern recognition, clustering and prediction.