International Journal of Computer Vision (2020) 128:261–318 271

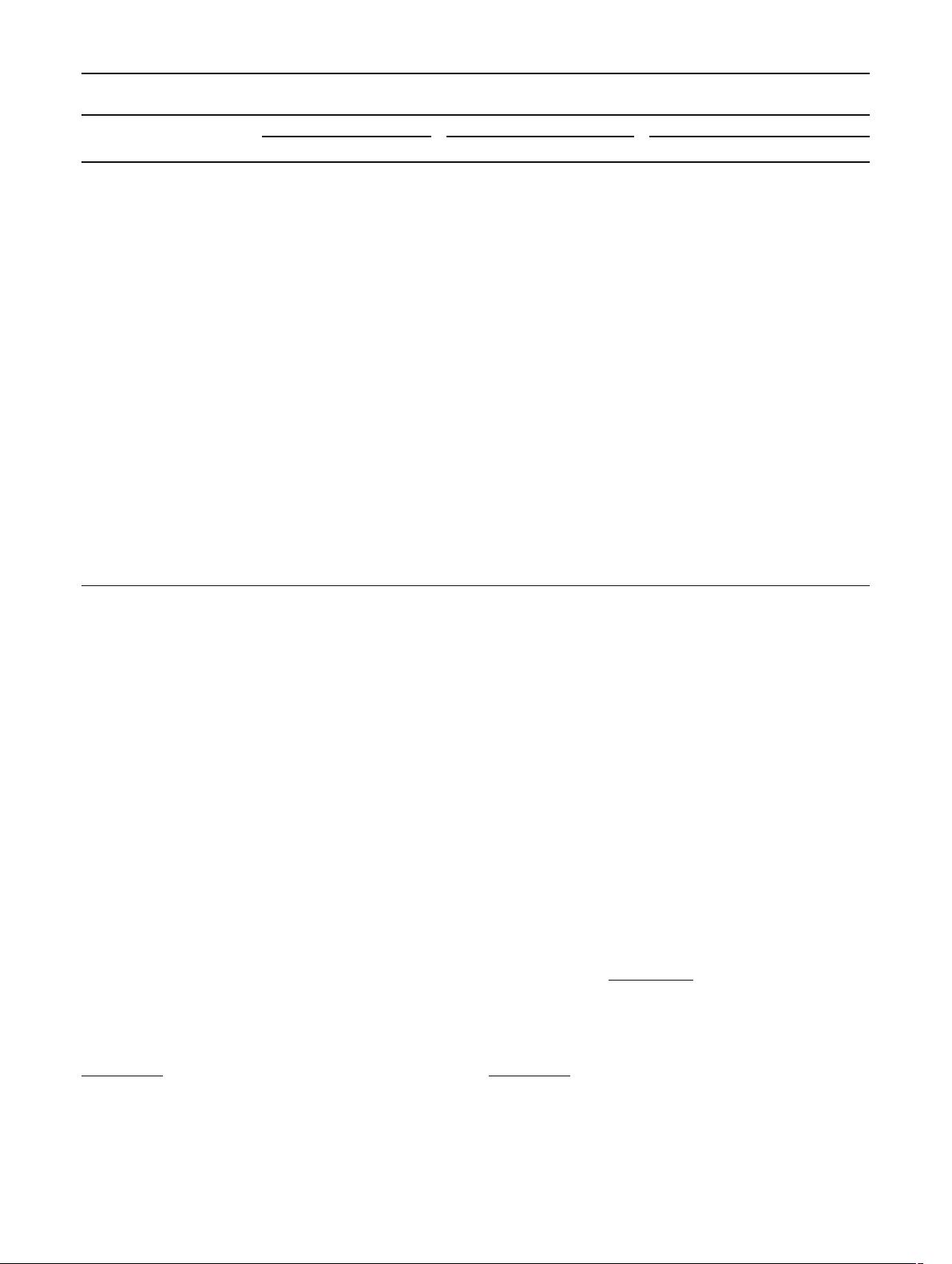

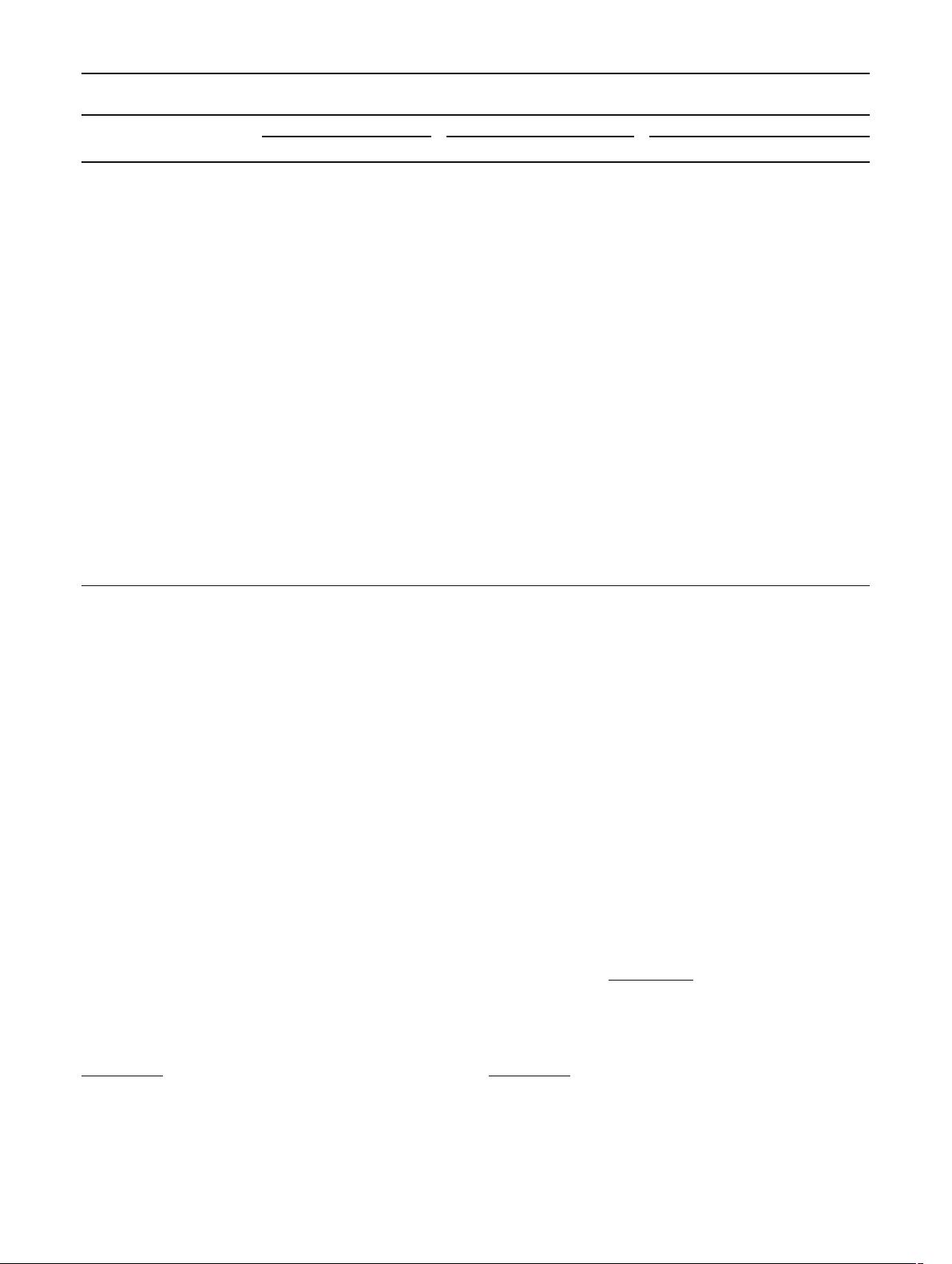

Table 3 Statistics of commonly used object detection datasets

| Challenge | Object classes | Images (Train) | Images (Val) | Images (Test) | Annotated objects (Train) | Annotated objects (Val) | Train+Val images | Train+Val boxes | Boxes/Image |
|---|---|---|---|---|---|---|---|---|---|
| PASCAL VOC object detection challenge | | | | | | | | | |
| VOC07 | 20 | 2501 | 2510 | 4952 | 6301 (7844) | 6307 (7818) | 5011 | 12,608 | 2.5 |
| VOC08 | 20 | 2111 | 2221 | 4133 | 5082 (6337) | 5281 (6347) | 4332 | 10,364 | 2.4 |
| VOC09 | 20 | 3473 | 3581 | 6650 | 8505 (9760) | 8713 (9779) | 7054 | 17,218 | 2.3 |
| VOC10 | 20 | 4998 | 5105 | 9637 | 11,577 (13,339) | 11,797 (13,352) | 10,103 | 23,374 | 2.4 |
| VOC11 | 20 | 5717 | 5823 | 10,994 | 13,609 (15,774) | 13,841 (15,787) | 11,540 | 27,450 | 2.4 |
| VOC12 | 20 | 5717 | 5823 | 10,991 | 13,609 (15,774) | 13,841 (15,787) | 11,540 | 27,450 | 2.4 |
| ILSVRC object detection challenge | | | | | | | | | |
| ILSVRC13 | 200 | 395,909 | 20,121 | 40,152 | 345,854 | 55,502 | 416,030 | 401,356 | 1.0 |
| ILSVRC14 | 200 | 456,567 | 20,121 | 40,152 | 478,807 | 55,502 | 476,668 | 534,309 | 1.1 |
| ILSVRC15 | 200 | 456,567 | 20,121 | 51,294 | 478,807 | 55,502 | 476,668 | 534,309 | 1.1 |
| ILSVRC16 | 200 | 456,567 | 20,121 | 60,000 | 478,807 | 55,502 | 476,668 | 534,309 | 1.1 |
| ILSVRC17 | 200 | 456,567 | 20,121 | 65,500 | 478,807 | 55,502 | 476,668 | 534,309 | 1.1 |
| MS COCO object detection challenge | | | | | | | | | |
| MS COCO15 | 80 | 82,783 | 40,504 | 81,434 | 604,907 | 291,875 | 123,287 | 896,782 | 7.3 |
| MS COCO16 | 80 | 82,783 | 40,504 | 81,434 | 604,907 | 291,875 | 123,287 | 896,782 | 7.3 |
| MS COCO17 | 80 | 118,287 | 5000 | 40,670 | 860,001 | 36,781 | 123,287 | 896,782 | 7.3 |
| MS COCO18 | 80 | 118,287 | 5000 | 40,670 | 860,001 | 36,781 | 123,287 | 896,782 | 7.3 |
| Open Images Challenge Object Detection (OICOD) (based on Open Images V4, Kuznetsova et al. 2018) | | | | | | | | | |
| OICOD18 | 500 | 1,643,042 | 100,000 | 99,999 | 11,498,734 | 696,410 | 1,743,042 | 12,195,144 | 7.0 |

Object statistics for VOC challenges list the non-difficult objects used in the evaluation, with all annotated objects given in parentheses. For the COCO challenge, prior to 2017, the test set had four splits (Dev, Standard, Reserve, and Challenge), each with about 20K images. Starting in 2017, the train and val sets are arranged differently, and the test set keeps only the Dev and Challenge splits (the other two were removed), which are of roughly equal size at about 20,000 images each. Note that the 2017 Test Dev/Challenge splits contain the same images as the 2015 Test Dev/Challenge splits, so results across the years are directly comparable.
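As a quick check on how the Summary (Train+Val) columns of Table 3 appear to be derived, the sketch below recomputes them for two rows. It assumes the summary counts are simply the sums of the Train and Val columns and that Boxes/Image is their ratio rounded to one decimal place; the function name `summarize` is illustrative and does not come from the paper.

```python
def summarize(train_images, val_images, train_boxes, val_boxes):
    """Recompute the Train+Val summary columns for one table row.

    Assumption: Images and Boxes are plain sums of the Train and Val
    columns, and Boxes/Image is their ratio rounded to one decimal.
    """
    images = train_images + val_images
    boxes = train_boxes + val_boxes
    return images, boxes, round(boxes / images, 1)


# VOC07 row: non-difficult object counts for Train and Val.
voc07 = summarize(2501, 2510, 6301, 6307)

# MS COCO15 row.
coco15 = summarize(82_783, 40_504, 604_907, 291_875)

print(voc07)
print(coco15)
```

Under these assumptions both rows reproduce the Images, Boxes, and Boxes/Image values listed in the table.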

classes in the dataset are exhaustively annotated, whereas

for Open Images V4 a classifier was applied to each image

and only those labels with sufficiently high scores were sent

for human verification. Therefore, in OICOD only the object

instances of human-confirmed positive labels are annotated.

4.2 Evaluation Criteria

There are three criteria for evaluating the performance of detection algorithms: detection speed in Frames Per Second (FPS), precision, and recall. The most commonly used metric is Average Precision (AP), derived from precision and recall. AP is usually evaluated in a category-specific manner, i.e., computed for each object category separately. To compare performance over all object categories, the mean AP (mAP) averaged over all object categories is adopted as the final measure of performance³. More details on these metrics can be found in Everingham et al. (2010, 2015), Russakovsky et al. (2015), and Hoiem et al. (2012).

³ In object detection challenges, such as PASCAL VOC and ILSVRC, the winning entry of each object category is that with the highest AP score, and the winner of the challenge is the team that wins on the most object categories. The mAP is also used as the measure of a team’s
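The relationship between precision, recall, and mAP can be illustrated with a minimal Python sketch. It uses the standard definitions of precision and recall in terms of true positives (TP), false positives (FP), and false negatives (FN), and treats mAP as the unweighted mean of per-category AP values. The AP values and category names below are made up for illustration; computing AP itself from a precision-recall curve is not shown here.

```python
def precision(tp, fp):
    """Fraction of predicted detections that are correct."""
    return tp / (tp + fp) if (tp + fp) > 0 else 0.0


def recall(tp, fn):
    """Fraction of ground truth objects that are detected."""
    return tp / (tp + fn) if (tp + fn) > 0 else 0.0


def mean_average_precision(ap_per_category):
    """Unweighted mean of per-category AP values."""
    if not ap_per_category:
        raise ValueError("at least one category is required")
    return sum(ap_per_category.values()) / len(ap_per_category)


# Hypothetical per-category AP values, for illustration only.
example_ap = {"cat": 0.5, "dog": 0.75, "car": 0.25}

p = precision(tp=8, fp=2)
r = recall(tp=8, fn=8)
m = mean_average_precision(example_ap)
```

Because mAP here is an unweighted mean, every category contributes equally regardless of how many instances it has in the test set.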

The standard outputs of a detector applied to a testing image $I$ are the predicted detections $\{(b_j, c_j, p_j)\}_j$, indexed by object $j$, of Bounding Box (BB) $b_j$, predicted category $c_j$, and confidence $p_j$. A predicted detection $(b, c, p)$ is regarded as a True Positive (TP) if

• The predicted category $c$ equals the ground truth label $c_g$.

• The overlap ratio IOU (Intersection Over Union) (Everingham et al. 2010; Russakovsky et al. 2015)

$$\mathrm{IOU}(b, b_g) = \frac{\mathrm{area}(b \cap b_g)}{\mathrm{area}(b \cup b_g)}, \tag{4}$$

between the predicted BB $b$ and the ground truth $b_g$ is not smaller than a predefined threshold $\varepsilon$, where ∩ and

Footnote 3 continued

performance, and is justified since the ranking of teams by mAP was

always the same as the ranking by the number of object categories won

(Russakovsky et al. 2015).
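The True Positive test built on the IOU of Eq. (4) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the evaluation code of any particular challenge: boxes are assumed to be axis-aligned tuples `(x1, y1, x2, y2)` with `x1 <= x2` and `y1 <= y2`, the function names are illustrative, and the default threshold `eps=0.5` is an assumed value for the predefined threshold ε.

```python
def area(box):
    """Area of an axis-aligned box (x1, y1, x2, y2); zero if degenerate."""
    x1, y1, x2, y2 = box
    return max(0.0, x2 - x1) * max(0.0, y2 - y1)


def iou(b, b_g):
    """Intersection over Union of predicted box b and ground truth b_g."""
    # Corners of the intersection rectangle (may be empty).
    ix1, iy1 = max(b[0], b_g[0]), max(b[1], b_g[1])
    ix2, iy2 = min(b[2], b_g[2]), min(b[3], b_g[3])
    inter = area((ix1, iy1, ix2, iy2))
    union = area(b) + area(b_g) - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(b, c, b_g, c_g, eps=0.5):
    """Both conditions: matching category and IOU not smaller than eps.

    eps=0.5 is an assumed default, not a value taken from this section.
    """
    return c == c_g and iou(b, b_g) >= eps


same = iou((0, 0, 2, 2), (0, 0, 2, 2))
disjoint = iou((0, 0, 1, 1), (2, 2, 3, 3))
half = iou((0, 0, 10, 10), (0, 0, 10, 5))
hit = is_true_positive((0, 0, 10, 10), "dog", (0, 0, 10, 5), "dog")
wrong_class = is_true_positive((0, 0, 10, 10), "cat", (0, 0, 10, 5), "dog")
```

The comparison uses `>=` because the condition is that the overlap is not smaller than the threshold, so a detection whose IOU equals the threshold exactly still counts as a match.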
