U. Fayyad, P. Stolorz/Future Generation Computer Systems 13 (1997) 99-115

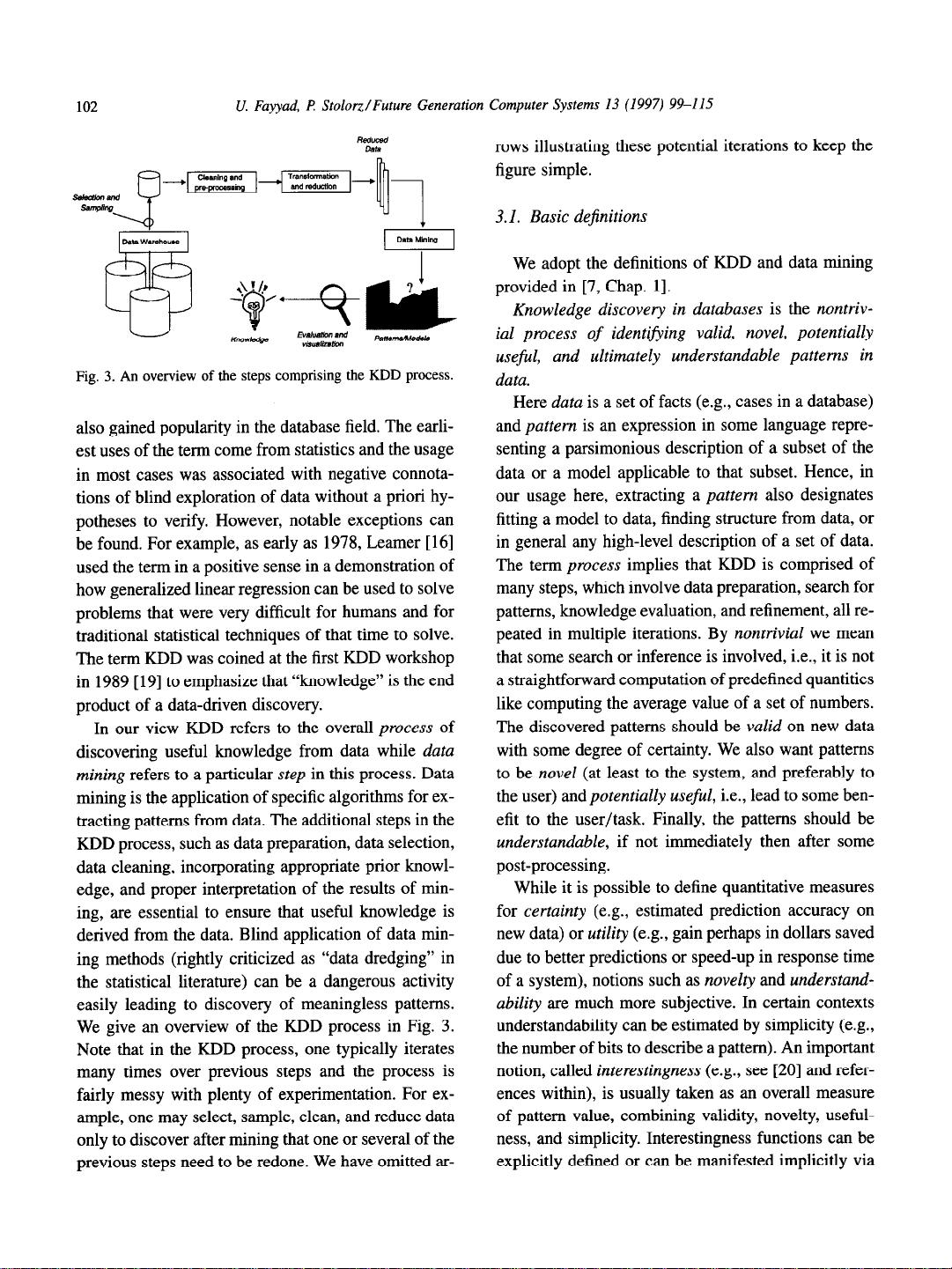

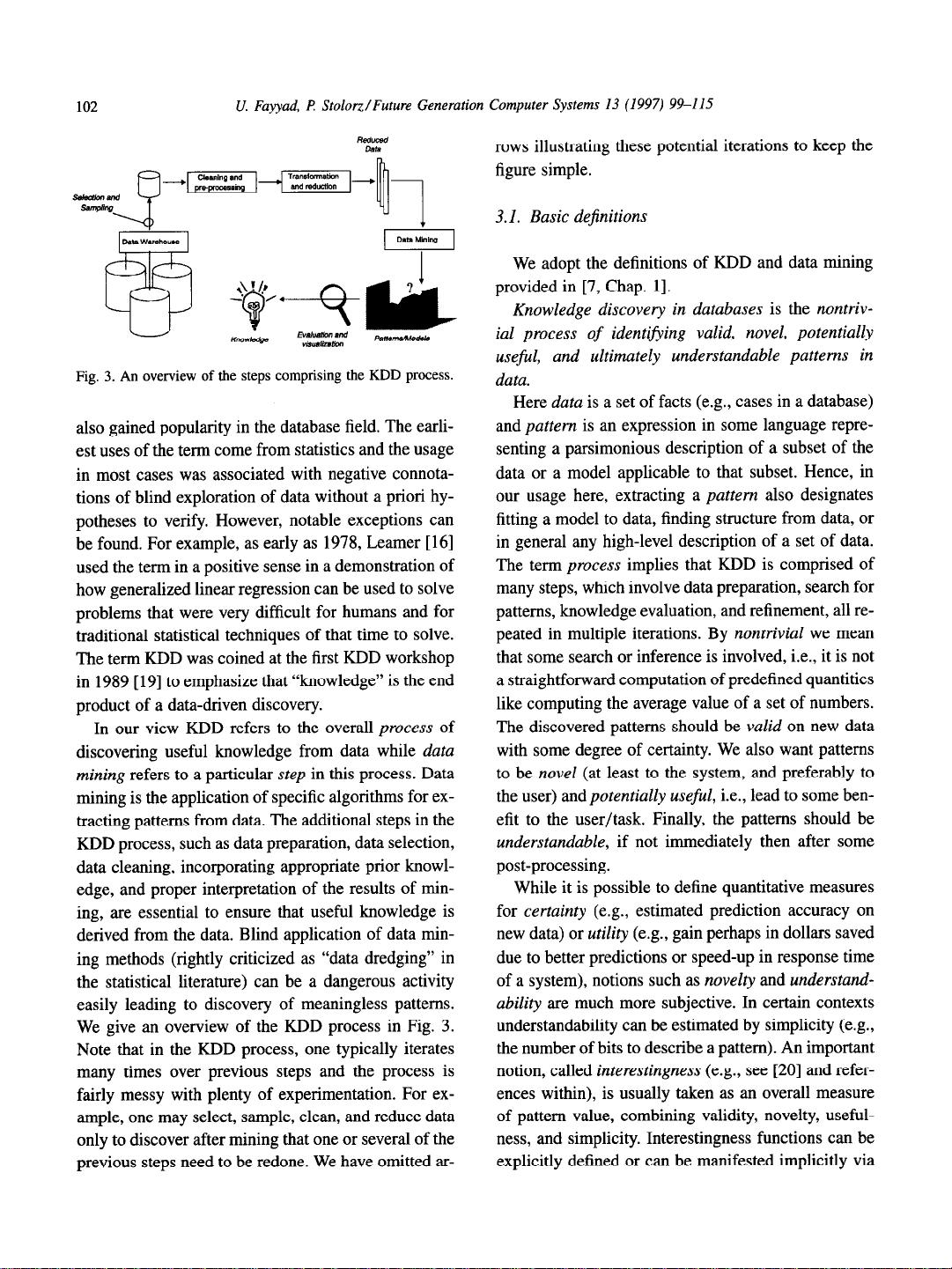

Fig. 3. An overview of the steps comprising the KDD process.

also gained popularity in the database field. The earliest uses of the term come from statistics, and the usage in most cases was associated with negative connotations of blind exploration of data without a priori hypotheses to verify. However, notable exceptions can be found. For example, as early as 1978, Leamer [16] used the term in a positive sense in a demonstration of how generalized linear regression can be used to solve problems that were very difficult for humans and for traditional statistical techniques of that time to solve. The term KDD was coined at the first KDD workshop in 1989 [19] to emphasize that “knowledge” is the end product of a data-driven discovery.

In our view, KDD refers to the overall process of discovering useful knowledge from data, while data mining refers to a particular step in this process. Data mining is the application of specific algorithms for extracting patterns from data. The additional steps in the KDD process, such as data preparation, data selection, data cleaning, incorporating appropriate prior knowledge, and proper interpretation of the results of mining, are essential to ensure that useful knowledge is derived from the data. Blind application of data mining methods (rightly criticized as “data dredging” in the statistical literature) can be a dangerous activity, easily leading to the discovery of meaningless patterns.
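The risk of data dredging can be illustrated with a small simulation. The following is a minimal sketch, not taken from the paper: the function names `pearson` and `dredge`, the sample sizes, and the seed are all assumptions chosen for illustration. It searches many pure-noise features for the one most correlated with a pure-noise target, then checks how a fresh noise draw behaves.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def dredge(n_rows=20, n_features=500, seed=0):
    """Blindly search noise features for the best match to a noise target.

    Returns (best in-sample |r|, |r| of an independent fresh draw).
    All data are synthetic; by construction there is nothing to discover.
    """
    rng = random.Random(seed)

    def noise():
        return [rng.gauss(0.0, 1.0) for _ in range(n_rows)]

    target = noise()
    features = [noise() for _ in range(n_features)]
    in_sample = max(abs(pearson(f, target)) for f in features)

    # The "winning" feature is noise, so new data for it is just more noise.
    fresh = abs(pearson(noise(), noise()))
    return in_sample, fresh


in_sample, fresh = dredge()
print(f"best in-sample |r| = {in_sample:.2f}, fresh-data |r| = {fresh:.2f}")
```

With only 20 rows and hundreds of candidate features, the best in-sample correlation is typically large even though every feature is noise, which is why selection without prior knowledge or validation on new data tends to surface meaningless patterns.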

We give an overview of the KDD process in Fig. 3. Note that in the KDD process, one typically iterates many times over previous steps, and the process is fairly messy, with plenty of experimentation. For example, one may select, sample, clean, and reduce data only to discover after mining that one or several of the previous steps need to be redone. We have omitted arrows illustrating these potential iterations to keep the figure simple.
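The iterative character of the process can be sketched in a few lines of code. The example below is only an illustration under stated assumptions: the step functions (`select`, `clean`, `mine`, `evaluate`), the toy records, the cleaning cutoffs, and the tolerance are hypothetical and do not come from the paper. It shows a mining result that fails evaluation and triggers a return to the cleaning step with a stricter setting.

```python
from math import inf

# Toy records (hypothetical): y is roughly 2 * x, with one gap and one gross error.
RAW = [
    {"id": "a", "x": 1.0, "y": 2.0},
    {"id": "b", "x": 2.0, "y": 4.0},
    {"id": "c", "x": 3.0, "y": None},   # missing measurement
    {"id": "d", "x": 4.0, "y": 8.0},
    {"id": "e", "x": 5.0, "y": 50.0},   # gross recording error
]


def select(records, fields):
    """Selection: keep only the fields relevant to the task."""
    return [{f: r[f] for f in fields} for r in records]


def clean(records, y_max):
    """Cleaning: drop missing values and values above a plausibility cutoff."""
    return [r for r in records if r["y"] is not None and r["y"] <= y_max]


def mine(records):
    """Data mining: least-squares slope of a line through the origin."""
    sxy = sum(r["x"] * r["y"] for r in records)
    sxx = sum(r["x"] ** 2 for r in records)
    return sxy / sxx


def evaluate(records, slope):
    """Evaluation: mean absolute error of the fitted line on the cleaned data."""
    return sum(abs(r["y"] - slope * r["x"]) for r in records) / len(records)


def kdd(records, y_max_candidates=(inf, 20.0), tolerance=0.5):
    """Run the steps, returning to cleaning whenever evaluation fails."""
    for attempt, y_max in enumerate(y_max_candidates, start=1):
        data = clean(select(records, ["x", "y"]), y_max)
        slope = mine(data)
        if evaluate(data, slope) <= tolerance:
            return slope, attempt
    return None, len(y_max_candidates)


slope, attempts = kdd(RAW)
print(slope, attempts)
```

On this toy data the first pass keeps the erroneous record, the fit is poor, and the loop repeats cleaning with a tighter cutoff before the mined slope is accepted. A real application would revisit any of the earlier steps, not only cleaning.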

3.1. Basic definitions

We adopt the definitions of KDD and data mining provided in [7, Chap. 1].

Knowledge discovery in databases is the nontrivial process of identifying valid, novel, potentially useful, and ultimately understandable patterns in data.

Here data is a set of facts (e.g., cases in a database) and pattern is an expression in some language representing a parsimonious description of a subset of the data or a model applicable to that subset. Hence, in our usage here, extracting a pattern also designates fitting a model to data, finding structure from data, or in general any high-level description of a set of data. The term process implies that KDD comprises many steps, which involve data preparation, search for patterns, knowledge evaluation, and refinement, all repeated in multiple iterations. By nontrivial we mean that some search or inference is involved, i.e., it is not a straightforward computation of predefined quantities like computing the average value of a set of numbers. The discovered patterns should be valid on new data with some degree of certainty. We also want patterns to be novel (at least to the system, and preferably to the user) and potentially useful, i.e., lead to some benefit to the user/task. Finally, the patterns should be understandable, if not immediately then after some post-processing.
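To make "nontrivial" and "valid on new data" concrete, here is a small sketch. It is an assumption-laden illustration rather than a method from the paper: the pattern language (rules of the form "x > t implies class 1"), the helper names `accuracy` and `search_threshold`, and both toy data sets are hypothetical. The pattern is found by searching over candidate thresholds, and its validity is then estimated on cases that were not used in the search.

```python
def accuracy(threshold, cases):
    """Fraction of cases where the rule 'x > threshold => class 1' is correct."""
    hits = sum((x > threshold) == bool(label) for x, label in cases)
    return hits / len(cases)


def search_threshold(cases):
    """Search midpoints between sorted distinct x values for the best rule."""
    xs = sorted({x for x, _ in cases})
    candidates = [(a + b) / 2 for a, b in zip(xs, xs[1:])]
    return max(candidates, key=lambda t: accuracy(t, cases))


# Hypothetical (x, class) cases: one set for the search, one set of new data.
TRAIN = [(1.0, 0), (2.0, 0), (3.0, 0), (4.0, 1), (5.0, 1), (6.0, 1)]
NEW = [(0.5, 0), (2.5, 0), (3.2, 1), (4.5, 1), (5.5, 1)]

best = search_threshold(TRAIN)
train_acc = accuracy(best, TRAIN)
new_acc = accuracy(best, NEW)
print(best, train_acc, new_acc)  # 3.5 1.0 0.8
```

The rule fits the searched data perfectly but is right on only four of the five new cases, which is the sense in which a pattern holds on new data "with some degree of certainty" rather than absolutely.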

While it is possible to define quantitative measures for certainty (e.g., estimated prediction accuracy on new data) or utility (e.g., gain perhaps in dollars saved due to better predictions or speed-up in response time of a system), notions such as novelty and understandability are much more subjective. In certain contexts understandability can be estimated by simplicity (e.g., the number of bits to describe a pattern). An important notion, called interestingness (e.g., see [20] and references within), is usually taken as an overall measure of pattern value, combining validity, novelty, usefulness, and simplicity. Interestingness functions can be explicitly defined or can be manifested implicitly via