4 Zhaowei Cai, Quanfu Fan, Rogerio S. Feris, and Nuno Vasconcelos

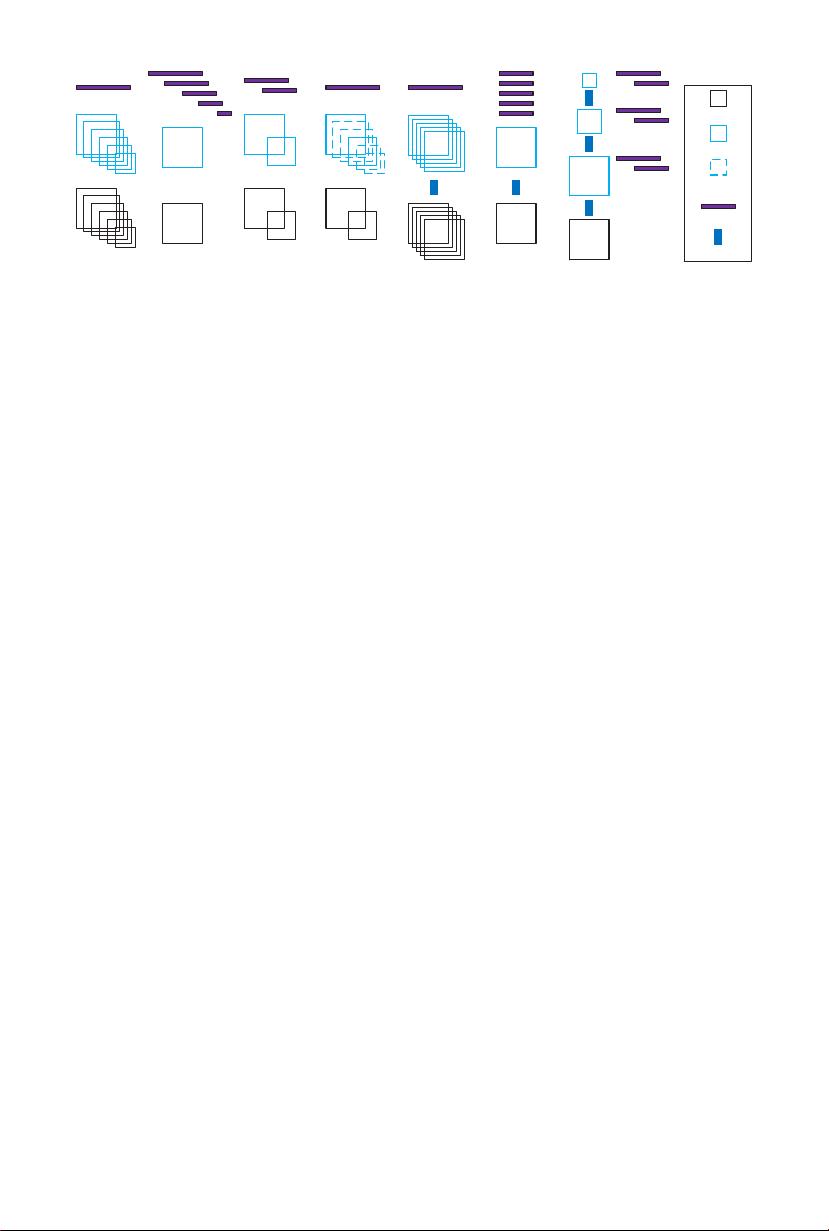

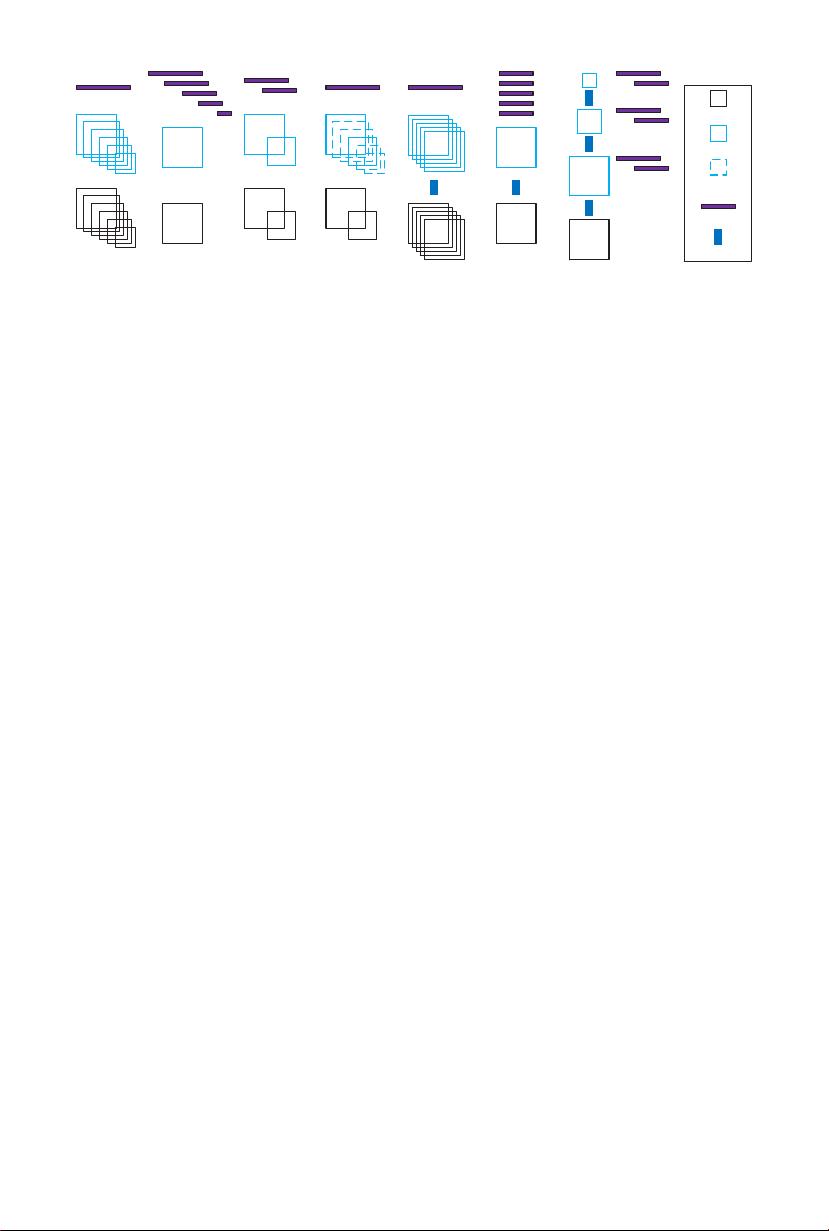

Fig. 2. Different strategies for multi-scale detection, panels (a) to (g). The length of model template represents the template size. Legend: input image, feature map, model template, CNN layers, approximated feature map.

3.1 Multi-scale Detection

The coverage of many object scales is a critical problem for object detection. Since a detector is basically a dot-product between a learned template and an image region, the template has to be matched to the spatial support of the object to recognize. There are two main strategies to achieve this goal. The first is to learn a single classifier and rescale the image multiple times, so that the classifier can match all possible object sizes. As illustrated in Fig. 2 (a), this strategy requires feature computation at multiple image scales. While it usually produces the most accurate detection, it tends to be very costly. An alternative approach is to apply multiple classifiers to a single input image. This strategy, illustrated in Fig. 2 (b), avoids the repeated computation of feature maps and tends to be efficient. However, it requires an individual classifier for each object scale and usually fails to produce good detectors. Several approaches have been proposed to achieve a good trade-off between accuracy and complexity. For example, the strategy of Fig. 2 (c) is to rescale the input a few times and learn a small number of model templates [24]. Another possibility is the feature approximation of [2]. As shown in Fig. 2 (d), this consists of rescaling the input a small number of times and interpolating the missing feature maps. This has been shown to achieve considerable speed-ups for a very modest loss of classification accuracy [2].
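
The contrast between the strategies of Fig. 2 (a) and Fig. 2 (b) can be illustrated with a minimal NumPy sketch. It is not part of the original work: the function names (`resize_nearest`, `score_map`, `single_template_image_pyramid`, `multi_template_single_image`) are hypothetical, raw pixels stand in for features, and nearest-neighbour resampling is assumed purely for brevity. The detector is taken literally as a dot-product between a template and every image region of the same size.

```python
import numpy as np

def resize_nearest(img, scale):
    """Nearest-neighbour rescaling of a 2-D array (stand-in for a real resampler)."""
    h, w = img.shape
    new_h = max(1, int(round(h * scale)))
    new_w = max(1, int(round(w * scale)))
    rows = np.minimum((np.arange(new_h) / scale).astype(int), h - 1)
    cols = np.minimum((np.arange(new_w) / scale).astype(int), w - 1)
    return img[np.ix_(rows, cols)]

def score_map(img, template):
    """Dot-product between the template and every image region of its size."""
    th, tw = template.shape
    if img.shape[0] < th or img.shape[1] < tw:
        return np.empty((0, 0))  # template does not fit at this scale
    windows = np.lib.stride_tricks.sliding_window_view(img, (th, tw))
    return np.einsum("ijkl,kl->ij", windows, template)

def single_template_image_pyramid(img, template, scales):
    """Fig. 2 (a): one template, the image is rescaled once per scale."""
    return {s: score_map(resize_nearest(img, s), template) for s in scales}

def multi_template_single_image(img, templates):
    """Fig. 2 (b): one image, one template per object size."""
    return {t.shape: score_map(img, t) for t in templates}

# Toy usage: a 64x64 "image" and all-ones templates (illustrative values only).
image = np.arange(64 * 64, dtype=float).reshape(64, 64)
scores_a = single_template_image_pyramid(image, np.ones((8, 8)), [1.0, 0.5])
scores_b = multi_template_single_image(image, [np.ones((8, 8)), np.ones((16, 16))])
```

In this sketch, strategy (a) recomputes the input representation once per scale while reusing a single template, whereas strategy (b) touches the input once but needs a separately learned template for each object size, which mirrors the accuracy versus cost trade-off described above.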

The implementation of multi-scale strategies on CNN-based detectors is slightly different from those discussed above, due to the complexity of CNN features. As shown in Fig. 2 (e), the R-CNN of [3] simply warps object proposal patches to the natural scale of the CNN. This is somewhat similar to Fig. 2 (a), but features are computed for patches rather than the entire image. The multi-scale mechanism of the RPN [9], shown in Fig. 2 (f), is similar to that of Fig. 2 (b). However, multiple sets of templates of the same size are applied to all feature maps. This can lead to a severe scale inconsistency for template matching. As shown in Fig. 1, the single scale of the feature maps, dictated by the (228×228) receptive field of the CNN, can be severely mismatched to small (e.g. 32×32) or large (e.g. 640×640) objects. This compromises object detection performance.
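
To make the size of this mismatch concrete, the short sketch below works through the arithmetic for the sizes quoted above. The helper names `side_ratio` and `area_fraction` are hypothetical and introduced here only for illustration; the numbers 228, 32 and 640 are the ones given in the text.

```python
RECEPTIVE_FIELD = 228  # side of the receptive field in pixels, as quoted in the text

def side_ratio(object_side, field_side=RECEPTIVE_FIELD):
    """Receptive-field side divided by object side; 1.0 means a perfect match."""
    return field_side / object_side

def area_fraction(object_side, field_side=RECEPTIVE_FIELD):
    """Fraction of the larger square (field or object) covered by the smaller one."""
    small, large = sorted((object_side, field_side))
    return (small / large) ** 2

for side in (32, 640):
    print(side, side_ratio(side), round(area_fraction(side), 3))
# 32  -> ratio 7.125,   the object fills about 2.0% of the receptive field
# 640 -> ratio 0.35625, the receptive field sees about 12.7% of the object
```

Under these assumptions, a 32×32 object occupies roughly 2% of what the template sees, while for a 640×640 object the template sees only about an eighth of the object, which is one way to read the scale inconsistency discussed above.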

Inspired by previous evidence on the benefits of the strategy of Fig. 2 (c) over that of Fig. 2 (b), we propose a new multi-scale strategy, shown in Fig. 2