International Journal on Recent and Innovation Trends in Computing and Communication ISSN: 2321-8169

Volume: 2 Issue: 3 479 – 482


IJRITCC | March 2014, Available @ http://www.ijritcc.org


A Survey on Compression Algorithms in Hadoop

Sampada Lovalekar

Department of IT

SIES Graduate School of Technology

Nerul, Navi Mumbai, India

sampada.lovalekar@gmail.com

Abstract—Nowadays, big data is a hot term in IT. It involves large volumes of data, which may be structured, unstructured, or semi-structured. Each big data source has different characteristics, such as the frequency, volume, velocity, and veracity of its data. The growth in volume is driven by the use of the internet, smartphones, social networks, GPS devices, and so on. Analyzing big data is a very challenging problem today, and traditional data warehouse systems cannot handle such large amounts of data. Because the data is so large, compression offers a clear benefit for storing it. This paper explains various compression techniques in Hadoop.

Keywords-bzip2, gzip, lzo, lz4, snappy


I. INTRODUCTION

The volume of big data is growing day by day because of the use of smartphones, the internet, sensor devices, and so on. Key characteristics of big data include volume, variety, velocity, and value. Volume describes the large quantity of data generated by the technologies in use today. Variety refers to the different formats in which big data arrives, such as audio, video, and images. Velocity refers to data being generated in real time, with demands for usable information to be served up as needed. Value refers to how important the data is.

Big data is used in many sectors, such as healthcare, banking, and insurance. The amount of data increases day by day, and big data sizes range from a few dozen terabytes to many petabytes.

Big data brings not only new data types and storage mechanisms but also new types of analysis. Data is growing very fast and new data types keep being added, so processing and managing big data is a challenge today. Traditional storage and analysis methods are less efficient for big data, which is why big data analytics differs from the analytics of traditional data.

The challenges that come with big data include data privacy and security, data storage, and creating business value from large amounts of data. The following points illustrate how fast data is growing [1]:

- In 2011 alone, mankind created over 1.2 trillion GB of data.
- Data volumes are expected to grow 50 times by 2020.
- Google receives over 2,000,000 search queries every minute.
- 72 hours of video are added to YouTube every minute.
- There are 217 new mobile Internet users every minute.
- 571 new websites are created every minute of the day.
- According to its own research in early 2012, Twitter sees roughly 175 million tweets every day and has more than 465 million accounts.

As big data keeps growing, compressing it becomes a necessity. The advantages of compression are [2]:

- Compressed data uses less network bandwidth than uncompressed data.
- Compressed data uses less disk space.
- Compression speeds up data transfer across the network and to or from disk.
- Compression reduces cost.
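The space and bandwidth advantages listed above follow directly from storing and transferring fewer bytes. As a minimal local illustration, the sketch below compresses a sample byte string with Python's standard-library `gzip` and `bz2` modules, used here only as stand-ins for the gzip and bzip2 codecs named in the keywords; it does not use Hadoop's own codec classes. The helper names `compressed_sizes` and `round_trips` and the log-like sample data are hypothetical, chosen purely for illustration.

```python
import bz2
import gzip


def compressed_sizes(data: bytes) -> dict[str, int]:
    """Return the size in bytes of `data` before and after gzip and bzip2 compression.

    Hypothetical helper: Python's gzip/bz2 modules stand in for the codecs;
    this is not Hadoop's codec API.
    """
    return {
        "raw": len(data),
        "gzip": len(gzip.compress(data)),
        "bzip2": len(bz2.compress(data)),
    }


def round_trips(data: bytes) -> bool:
    """Check that both codecs are lossless: decompression restores the exact input."""
    return (gzip.decompress(gzip.compress(data)) == data
            and bz2.decompress(bz2.compress(data)) == data)


if __name__ == "__main__":
    # Hypothetical sample: repetitive, log-like records of the kind machines generate.
    sample = b"".join(
        f"2014-03-01 12:00:{i % 60:02d} INFO user={i % 100} action=search\n".encode()
        for i in range(10_000)
    )
    sizes = compressed_sizes(sample)
    for name, size in sizes.items():
        # Print each size and its fraction of the uncompressed size.
        print(f"{name:>6}: {size:>8} bytes ({size / sizes['raw']:.1%} of raw)")
    print("lossless:", round_trips(sample))
```

The achievable ratio depends heavily on the data: repetitive text such as logs usually shrinks substantially, while random bytes or already-compressed formats (many image and video files) may not shrink at all.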

II. BIG DATA TECHNOLOGIES

Hadoop is an open-source framework for storing, processing, and analyzing massive amounts of distributed, unstructured data. Hadoop has two main components. These are:

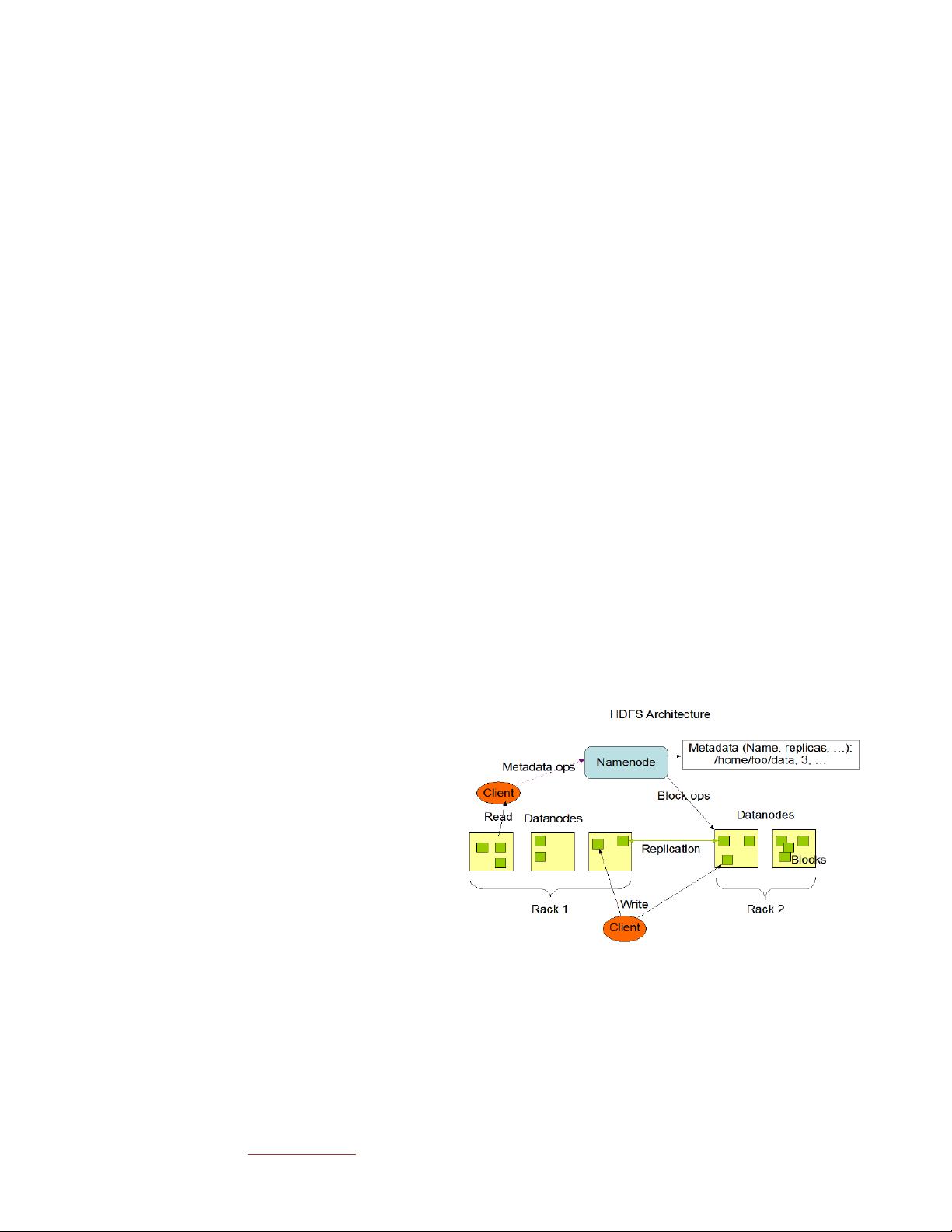

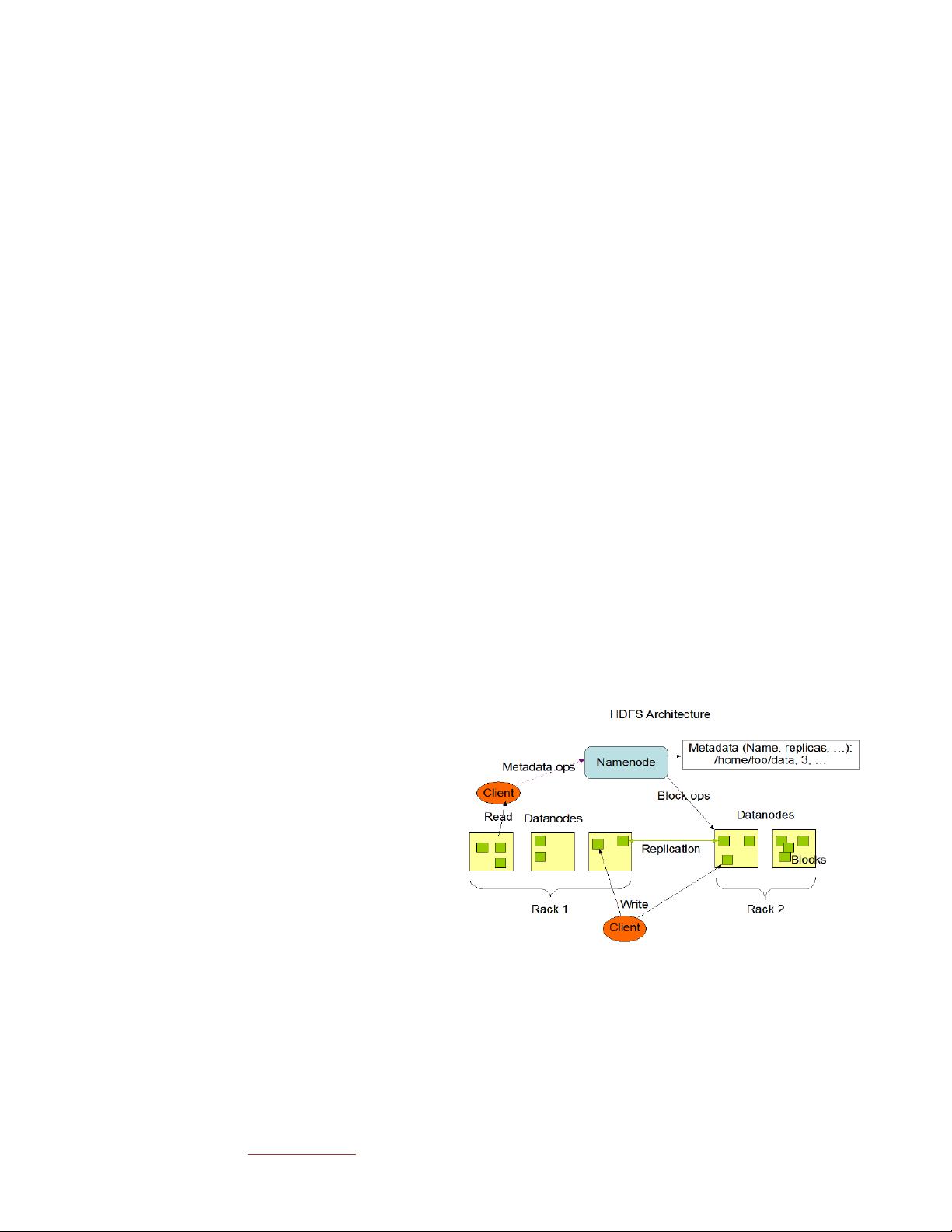

Figure 1. HDFS architecture

A. HDFS: Hadoop Distributed File System

The Hadoop Distributed File System (HDFS) is a distributed file system designed to store very large data sets and to stream those data sets at high bandwidth to user applications.