Introduction

Suppose

that

to

produce

a

certain

product,

four

operations

must

be

performed

on

a

certain

machine.

The

operations

are

denoted

by

A, B,

C,

and

D.

We

assume

that

operation

B

can

be

performed

only

after

operation

A

has

been

performed,

and

operation

D

can

be

performed

only

after

operation

B

has

been

performed.

(Thus

the

sequence

CDAB

is allowable

but

the

sequence

CDBA

is

not.)

The

setup

cost C

mn

for

passing

from

any

operation

'IT/,

to

any

other

operation

n is given.

There

is also

an

initial

startup

cost SA

or

Sc

for

starting

with

operation

A

or

C, respectively.

The

cost

of

a sequence is

the

sum

of

the

setup

costs

associated

with

it; for

example,

the

operation

sequence

ACDB

has

cost

Example

1.1.2

(A

Deterministic

Scheduling

Problem)

Thus

a

discrete-state

system

can

equivalently

be

described in

terms

of a difference

equation

or

in

terms

of

transition

probabilities.

Depend-

ing

on

the

given problem,

it

may

be

notationally

or

mathematically

more

convenient

to

use one

description

over

the

other.

The

following examples

illustrate

discrete-state

problems.

The

first

example involves a

deterministic problem,

that

is, a

problem

where

there

is no

stochastic

uncertainty.

In

such

a

problem,

when

a

control

is chosen

at

a given

state,

the

next

state

is fully

determined;

that

is, for

any

state

i,

control u,

and

time

k,

the

transition

probability

Pij (u, k) is equal

to

1 for a

single

state

j,

and

it

is 0 for all

other

candidate

next

states.

The

other

three

examples involve

stochastic

problems,

where

the

next

state

resulting

from

a given choice of

control

at

a given

state

cannot

be

determined

a priori.

where

wet,

u,"j) is

the

set

Sec.

1.1

Chap. 1

The

Dynamic

Programming

Algorithm

where

gk

are

some functions; in

the

inventory example, we have

(e)

Optimization over (closed-loop) policies,

that

is, rules for choosing

Uk

for each k

and

each possible value of

Xk.

This

type

of

state

transition

can

alternatively

be

described

in

terms

of

the

discrete-time

system

equation

In

the

preceding example,

the

state

Xk

was a continuous

real

variable,

and

it

is

easy

to

think. of multidimensional generalizations

where

the

state

is

an

n-dimensional vector of real variables.

It

is also possible, however,

that

the

state

takes

values from a discrete set, such as

the

integers.

A version of

the

inventory problem where a discrete viewpoint is

more

natural

arises

when

stock

is

measured

in

whole

units

(such as

cars),

each

of which is

a significant fraction of

xk,

Uk,

or

Wk.

It

is

more

appropriate

then

to

take

as

state

space

the

set

of all integers

rather

than

the

set

of

real

numbers.

The

form of

the

system

equation

and

the

cost

per

period

will, of

course,

stay

the

same.

Generally,

there

are

many

situations

where

the

state

is

naturally

dis-

crete

and

there

is no continuous

counterpart

of

the

problem. Such sit-

uations

are

often conveniently specified in

terms

of

the

probabilities of

transition

between

the

states.

What

we need

to

know is Pij

(u,

k), which

is

the

probability

at

time

k

that

the

next

state

will

be

j,

given

that

the

current

state

is 'i,

and

the

control selected is u, Le.,

Discrete-State

and

Finite-State

Problems

where

the

probability

distribution

of

the

random

parameter

Wk

is

Conversely, given a

discrete-state

system

in

the

form

together

with

the

probability

distribution

Pk(Wk

I

Xk,

Uk)

of

Wk,

we

can

provide

an

equivalent

transition

probability

description.

The

corresponding

transition

probabilities

are

given

by

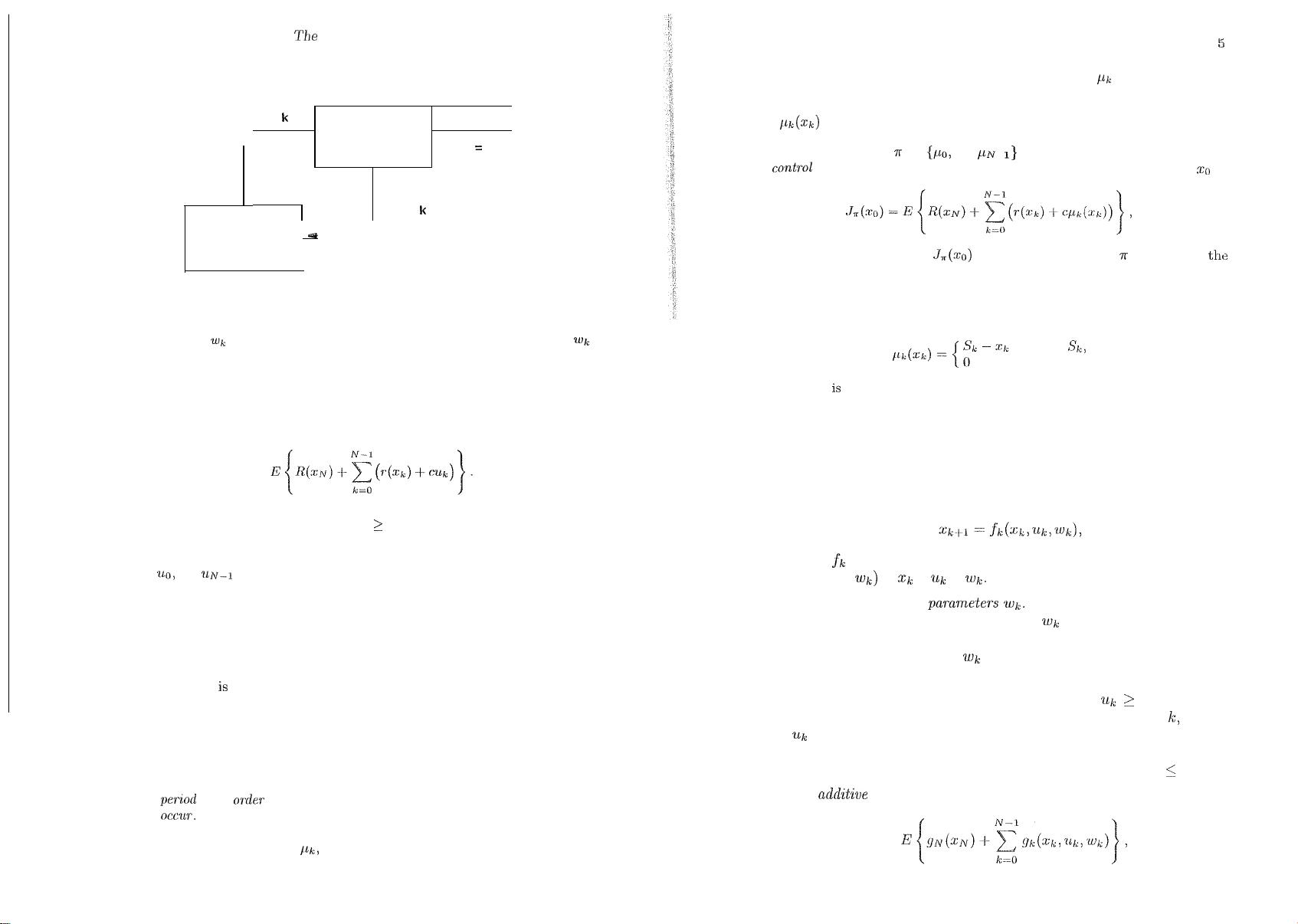

We

can

view

this

problem

as

a sequence

of

three

decisions,

namely

the

choice

of

the

first

three

operations

to

be

performed

(the

last

operation

is

determined

from

the

preceding

three).

It

is

appropriate

to

consider

as

state

the

set

of

operations

already

performed,

the

initial

state

being

an

artificial

state

corresponding

to

the

beginning

of

the

decision process.

The

possible

state

transitions

corresponding

to

the

possible

states

and

decisions for

this

problem

is shown

in

Fig. 1.1.2. Here

the

problem

is

deterministic,

Le.,

at

a given

state,

each

choice

of

control

leads

to

a

uniquely

determined

state.

For

example,

at

state

AC

the

decision

to

perform

operation

D leads

to

state

ACD

with

certainty,

and

has

cost

CCD.

Deterministic

problems

with

a finite

number

of

states

can

be

conveniently

represented

in

terms

of

transition

graphs'

such

as

the

one

of

Fig. 1.1.2.

The

optimal

solution

corresponds

to

the

path

that

starts

at

the

initial

state

and

ends

at

some

state

at

the

terminal

time

and

has

minimum

sum

of

arc

costs

plus

the

terminal

cost. We will

study

systematically

problems

of

this

type

in

Chapter

2.