122 IEEE TRANSACTIONS ON INTELLIGENT VEHICLES, VOL. 9, NO. 1, JANUARY 2024

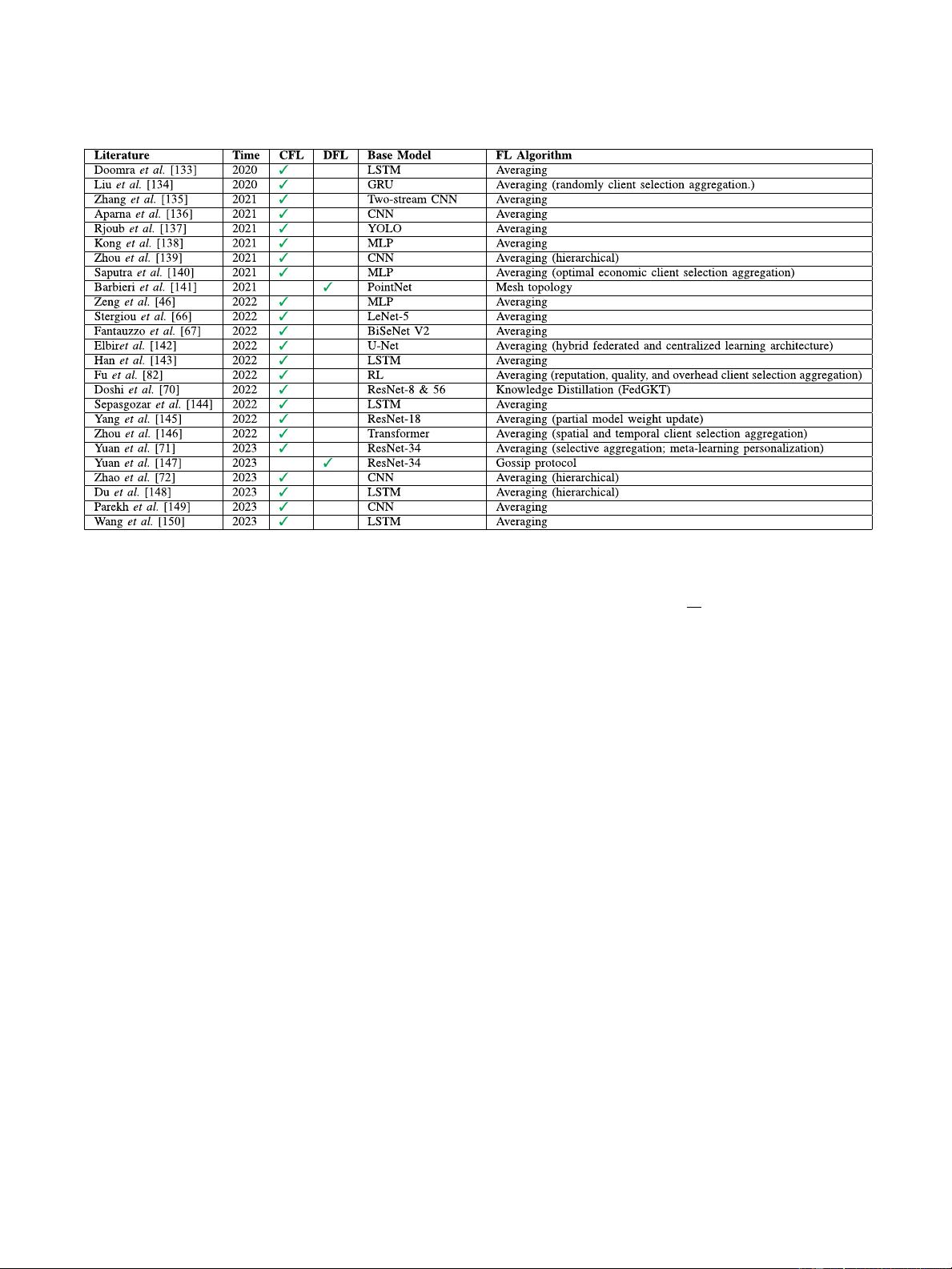

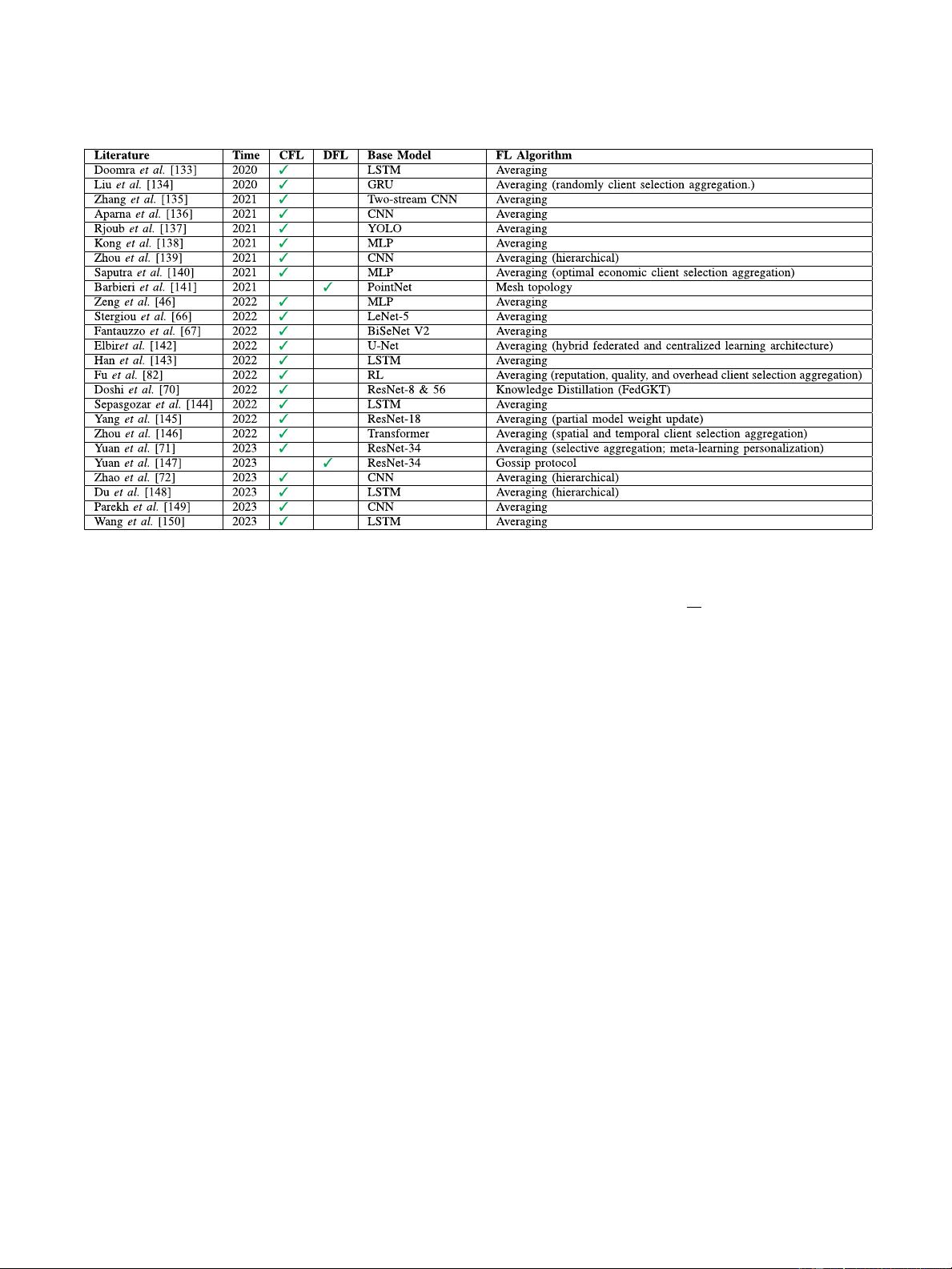

TABLE III

LITERATURE OVERVIEW OF FL FOR CAV ALGORITHMS

This training process typically adopts a simple Stochastic Gradient Descent (SGD) algorithm. The computational infrastructure is usually limited.

3) Local Update Upload: After training the model, each vehicle applies privacy-preserving techniques such as differential privacy (which introduces artificial noise to the parameters) and then uploads the model parameters to the selected central server (Centralized Federated Learning, i.e., CFL) or to other vehicles (Decentralized Federated Learning, i.e., DFL).

4) Aggregation of Vehicle Updates: The server securely aggregates the parameters uploaded from K vehicles to obtain the global model. It also tests the performance of the resulting global model.
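Steps 3 and 4 can be illustrated with a minimal sketch. It assumes a common differential-privacy pattern (clip each parameter vector, then add Gaussian noise) and a plain unweighted average on the server. The function names `clip_update`, `add_gaussian_noise`, and `aggregate`, as well as the values of `max_norm` and `sigma`, are hypothetical and chosen only for illustration. Calibrating the noise to a formal privacy budget and the secure-aggregation protocol itself are outside the scope of this sketch.

```python
import random


def clip_update(params, max_norm):
    """Scale the parameter vector so that its L2 norm is at most max_norm."""
    norm = sum(p * p for p in params) ** 0.5
    if norm <= max_norm or norm == 0.0:
        return list(params)
    scale = max_norm / norm
    return [p * scale for p in params]


def add_gaussian_noise(params, sigma, rng):
    """Perturb each parameter with zero-mean Gaussian noise of std sigma."""
    return [p + rng.gauss(0.0, sigma) for p in params]


def aggregate(uploads):
    """Server side: unweighted average of the uploaded parameter vectors."""
    k = len(uploads)
    dim = len(uploads[0])
    return [sum(u[j] for u in uploads) / k for j in range(dim)]


rng = random.Random(0)

# Toy locally trained models for K = 3 vehicles (illustrative values only).
local_models = [[0.9, -1.2], [1.1, -0.8], [1.0, -1.0]]

# Step 3: each vehicle clips and perturbs its parameters before uploading.
uploads = [
    add_gaussian_noise(clip_update(m, max_norm=5.0), sigma=0.01, rng=rng)
    for m in local_models
]

# Step 4: the server averages the noisy uploads to form the global model.
global_model = aggregate(uploads)
print(global_model)
```

Because the noise is zero-mean, averaging over more vehicles reduces its effect on the global model, which is one reason this perturbation is usually tolerable in practice.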

A. Centralized Federated Learning

In this section, we review two major aggregation methods in the centralized framework, namely averaging and a more recent technique called knowledge distillation.

1) Averaging: Most of the existing literature uses the Federated Averaging (FedAvg) algorithm [25] for the FL aggregation process on the server (see Table III). FedAvg applies SGD optimization on the local vehicles and computes a weighted average of the vehicles' model weights on the central server. Each vehicle performs multiple local gradient updates before sending its parameters to the server, which reduces the number of communication rounds. For FL4CAV, data on each CAV are dynamically updated at each communication round.
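The FedAvg loop described above can be sketched as follows. This is a toy illustration, not the implementation from [25]: it assumes a scalar model y = w * x, a squared-error loss, and aggregation weights proportional to each vehicle's local data-set size. The names `local_sgd` and `fedavg_round` and all numeric settings are hypothetical.

```python
import random


def local_sgd(w, data, lr, local_steps, rng):
    """Run several SGD steps on one vehicle for the toy model y = w * x."""
    for _ in range(local_steps):
        x, y = rng.choice(data)        # one random sample: stochastic gradient
        grad = 2.0 * (w * x - y) * x   # derivative of (w * x - y) ** 2 w.r.t. w
        w -= lr * grad
    return w


def fedavg_round(w_global, vehicle_data, lr, local_steps, rng):
    """One communication round: local training, then weighted averaging."""
    total = sum(len(d) for d in vehicle_data)
    w_new = 0.0
    for data in vehicle_data:
        w_local = local_sgd(w_global, data, lr, local_steps, rng)
        w_new += (len(data) / total) * w_local  # weight by local data size
    return w_new


rng = random.Random(0)
true_w = 3.0  # ground-truth parameter used to generate the toy data

# Three vehicles with local data sets of different sizes.
vehicle_data = [
    [(x, true_w * x) for x in (0.5, 1.0, 1.5)],
    [(x, true_w * x) for x in (0.2, 0.8)],
    [(x, true_w * x) for x in (1.0, 1.2, 1.4, 1.6)],
]

w = 0.0
for _ in range(20):  # 20 communication rounds, 5 local steps each
    w = fedavg_round(w, vehicle_data, lr=0.1, local_steps=5, rng=rng)
print(w)
```

Raising `local_steps` trades extra on-vehicle computation for fewer communication rounds, which is the trade-off the paragraph above refers to.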

A typical FL setup has K vehicles that have their own local data sets and the ability to perform simple local optimization. At the central server, the optimization problem can be represented as

$$\min_{x \in \mathbb{R}^d} f(x) = \frac{1}{K} \sum_{i=1}^{K} f_i(x_i), \tag{1}$$

where $f_i : \mathbb{R}^d \to \mathbb{R}$ for $i \in \{1, \dots, K\}$ is the local objective function of the $i$th vehicle. The local objective function of the $i$th vehicle can have the form

$$f_i(x_i) = \mathbb{E}_{\xi_i \sim D_i}\left[\ell(x_i, \xi_i)\right], \tag{2}$$

where $\xi_i$ represents the data that have been sampled from the local vehicle data $D_i$ for the $i$th vehicle. The expectation operator, $\mathbb{E}$, acts on the loss, $\ell(x_i, \xi_i)$, with respect to a data sample, $\xi_i$, drawn from the vehicle data, $D_i$. The function $\ell(x_i, \xi_i)$ is the loss function evaluated at the parameters, $x_i$, of each vehicle and at the data sample, $\xi_i$. Here, $x_i \in \mathbb{R}^d$ represents the model parameters of vehicle $i$, and $X \in \mathbb{R}^{d \times K}$ is the matrix formed using these parameter vectors. The learning process is performed to find a minimizer of the objective function, $x_i = x^* = \arg\min_{x \in \mathbb{R}^d} f(x)$.
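To make the objective concrete, the following sketch evaluates empirical versions of (1) and (2) for a shared parameter x, replacing the expectation in (2) by a sample mean over each vehicle's local data. The squared-error loss, the scalar model, the toy data, and the grid search for the minimizer are all assumptions made for illustration.

```python
def loss(x, sample):
    """Squared-error loss for the toy scalar model: prediction = x * feature."""
    feature, target = sample
    return (x * feature - target) ** 2


def local_objective(x, local_data):
    """Empirical estimate of f_i(x): mean loss over the vehicle's samples."""
    return sum(loss(x, s) for s in local_data) / len(local_data)


def global_objective(x, all_data):
    """Eq. (1) with one shared x: unweighted mean of the K local objectives."""
    return sum(local_objective(x, d) for d in all_data) / len(all_data)


# K = 2 vehicles; every sample satisfies target = 2 * feature.
all_data = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(1.0, 2.0)],
]

# Coarse grid search for the minimizer x* of the global objective.
candidates = [i / 10 for i in range(41)]  # 0.0, 0.1, ..., 4.0
x_star = min(candidates, key=lambda x: global_objective(x, all_data))

print(global_objective(1.0, all_data))  # (2.5 + 1.0) / 2 = 1.75
print(x_star)                           # 2.0
```

In this toy case every vehicle shares the same minimizer. With heterogeneous local data the local minimizers differ, and the global x* is a compromise among them.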

The data obtained from CAVs are typically non-independent and non-identically distributed (non-IID). FedAvg faces challenges in realistic heterogeneous data settings, as a single global model may not perform well for individual vehicles, and multiple local updates can cause the updates to deviate from the global objective [44]. Several variants of FedAvg have been proposed to address the challenges encountered by FL, such as data heterogeneity, client drift, local vehicle data imbalance, communication latency, and limited computation capabilities. The FedProx algorithm, which is FedAvg with a proximal term, has been proposed to improve convergence and reduce communication cost [45].
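The effect of a proximal term can be sketched as follows. The text above does not spell out its form. This sketch assumes the commonly used penalty (mu / 2) * (w - w_global)^2 added to the local loss, applied to a toy scalar model y = w * x with full-batch gradient steps. The function name `fedprox_local_update` and all numeric values are hypothetical.

```python
def fedprox_local_update(w_global, data, lr, mu, local_steps):
    """Gradient steps on local loss + (mu / 2) * (w - w_global) ** 2."""
    w = w_global
    for _ in range(local_steps):
        # Full-batch gradient of the mean squared error on this vehicle.
        grad_loss = sum(2.0 * (w * x - y) * x for x, y in data) / len(data)
        # Gradient of the proximal term: pulls w back toward the global model.
        grad_prox = mu * (w - w_global)
        w -= lr * (grad_loss + grad_prox)
    return w


data = [(1.0, 3.0)]  # a single local sample whose own optimum is w = 3

# mu = 0 removes the penalty, leaving plain local gradient descent.
w_plain = fedprox_local_update(0.0, data, lr=0.1, mu=0.0, local_steps=10)
# mu > 0 keeps the local model closer to the global model (here w_global = 0).
w_prox = fedprox_local_update(0.0, data, lr=0.1, mu=2.0, local_steps=10)
print(w_plain, w_prox)
```

With `mu=2.0` the local iterate settles near 1.5, halfway between the global model and the local optimum, which illustrates how the penalty limits the drift of local updates.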

The Dynamic Federated Proximal (DFP) algorithm [46] is an extension of FedProx that can effectively deal with non-IID data
