...

...

+

...

...

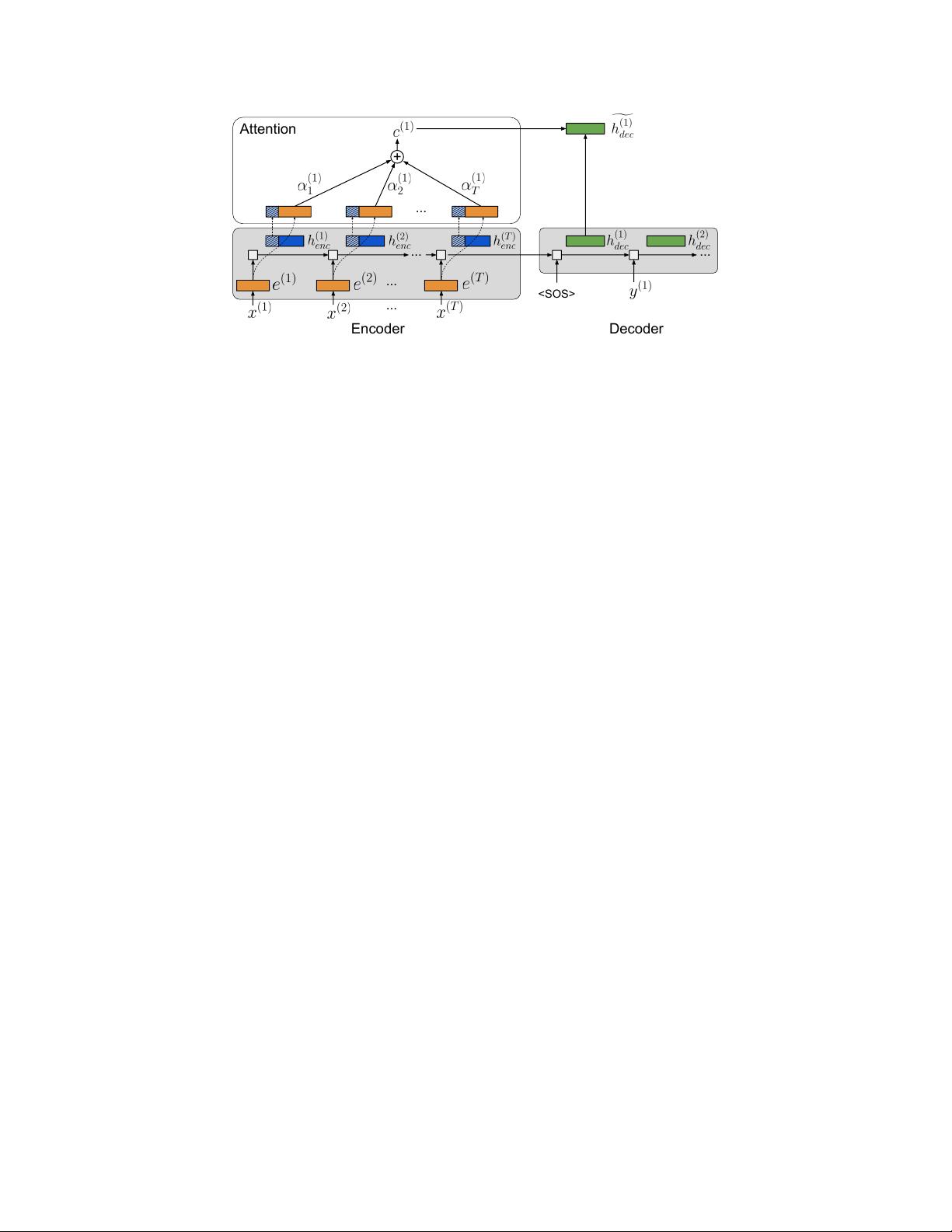

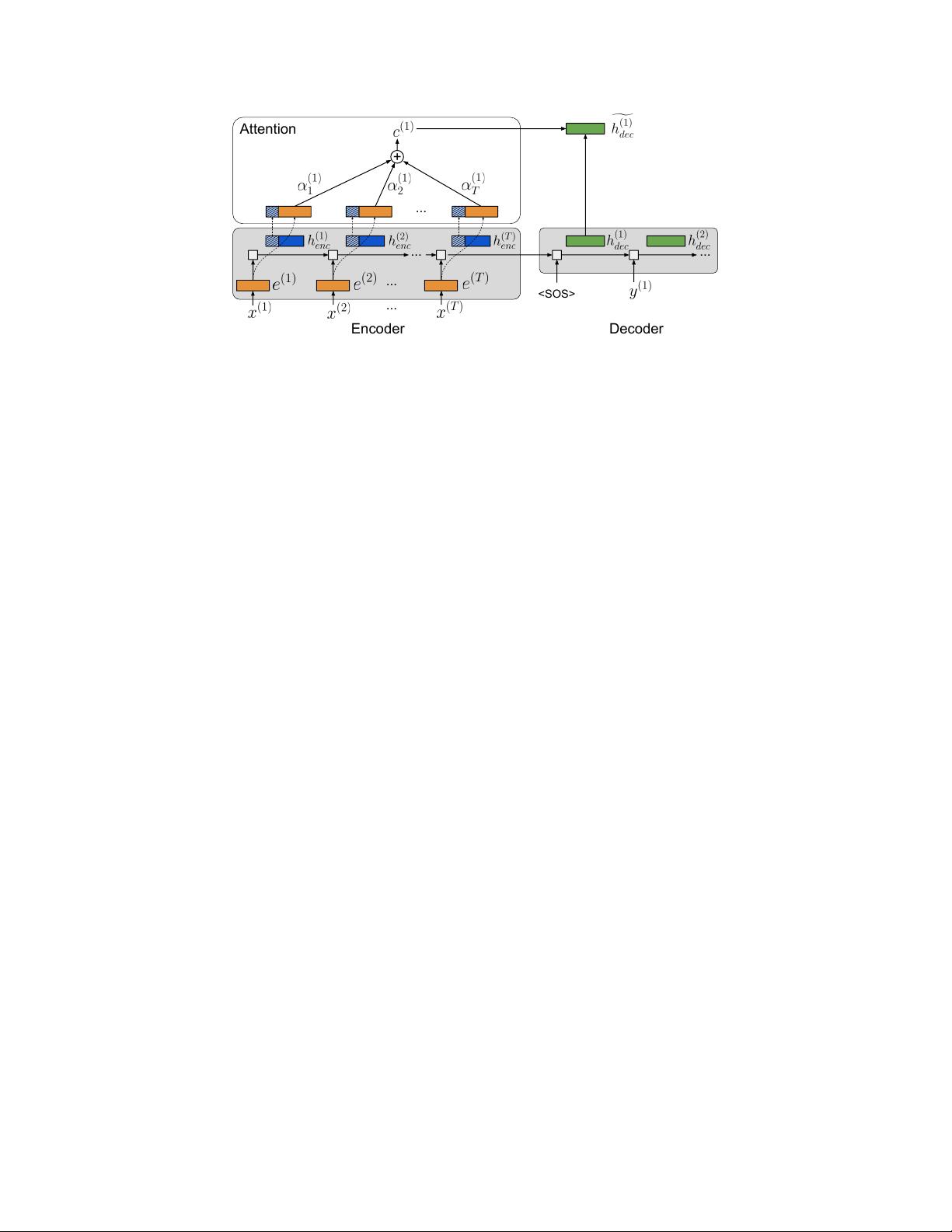

Attention

...

<SOS>

DecoderEncoder

Figure 2:

Attention mechanism for NMT.

The word embeddings, encoder hidden states, and decoder hidden

states are color-coded orange, blue, and green, respectively; the striped regions of the encoder hidden states

represent the slices that are stored in memory for attention. The final vectors used to compute the context vector

are concatenations of the word embeddings and encoder hidden state slices.

5.1 GPU Considerations

For our method to be used as part of a practical training procedure, we must run it on a parallel

architecture such as a GPU. This introduces additional considerations which require modifications to

Algorithm 1: (1) we implement it with ordinary finite-bit integers, hence dealing with overflow, and

(2) for GPU efficiency, we ensure uniform memory access patterns across all hidden units.

Overflow.

Consider the storage required for a single hidden unit. Algorithm 1 assumes unboundedly

large integers, and hence would need to be implemented using dynamically resizing integer types,

as was done by Maclaurin et al.

[13]

. But such data structures would require non-uniform memory

access patterns, limiting their efficiency on GPU architectures. Therefore, we modify the algorithm

to use ordinary finite integers. In particular, instead of a single integer, the buffer is represented

with a sequence of 64-bit integers

(B

0

, . . . , B

D

)

. Whenever the last integer in our buffer is about to

overflow upon multiplication by

2

R

Z

, as required by step 1 of Algorithm 1, we append a new integer

B

D+1

to the sequence. Overflow will occur if B

D

> 2

64−R

Z

.

After appending a new integer

B

D+1

, we apply Algorithm 1 unmodified, using

B

D+1

in place of

B

.

It is possible that up to

R

Z

−1

bits of

B

D

will not be used, incurring an additional penalty on storage

cost. We experimented with several ways of alleviating this penalty but found that none improved

significantly over the storage cost of the initial method.

Vectorization.

Vectorization imposes an additional penalty on storage. For efficient computation,

we cannot maintain different size lists as buffers for each hidden unit in a minibatch. Rather, we must

store the buffer as a three-dimensional tensor, with dimensions corresponding to the minibatch size,

the hidden state size, and the length of the buffer list. This means each list of integers being used as a

buffer for a given hidden unit must be the same size. Whenever a buffer being used for any hidden

unit in the minibatch overflows, an extra integer must be added to the buffer list for every hidden unit

in the minibatch. Otherwise, the steps outlined above can still be followed.

We give the complete, revised algorithm in Appendix C.3. The compromises to address overflow and

vectorization entail additional overhead. We measure the size of this overhead in Section 6.

5.2 Memory Savings with Attention

Most modern architectures for neural machine translation make use of attention mechanisms [

4

,

5

];

in this section, we describe the modifications that must be made to obtain memory savings when

using attention. We denote the source tokens by

x

(1)

, x

(2)

, . . . , x

(T )

, and the corresponding word

embeddings by

e

(1)

, e

(2)

, . . . , e

(T )

. We also use the following notation to denote vector slices: given

a vector

v ∈ R

D

, we let

v[: k] ∈ R

k

denote the vector consisting of the first

k

dimensions of

v

.

Standard attention-based models for NMT perform attention over the encoder hidden states; this is

problematic from the standpoint of memory savings, because we must retain the hidden states in

memory to use them when computing attention. To remedy this, we explore several alternatives to

storing the full hidden state in memory. In particular, we consider performing attention over: 1) the

embeddings

e

(t)

, which capture the semantics of individual words; 2) slices of the encoder hidden

6