This article has been accepted for inclusion in a future issue of this journal. Content is final as presented, with the exception of pagination.

SONG et al.: SPARSE PROXIMAL RL VIA NESTED OPTIMIZATION 3

transition (s, a, r, s

), the sample matrices are defined as

˜

≡

⎡

⎢

⎣

φ

T

1

.

.

.

φ

T

m

⎤

⎥

⎦

,

˜

≡

⎡

⎢

⎣

φ

T

1

.

.

.

φ

T

m

⎤

⎥

⎦

and

˜

R ≡

⎡

⎢

⎣

r

1

.

.

.

r

m

⎤

⎥

⎦

(4)

and we obtain an empirical version of (3)asfollows:

θ = u

∗

= arg min

u∈R

n×1

˜

u −

˜

R + γ

˜

θ

2

. (5)

The derivation from (3)to(5) can be referred to [20] and [22].

It has been proved that with m →∞, the fixed-point of (5)

approaches that of (3) with probability one.

A least squares problem orthogonally projects θ back to F

as a new fixed-point θ

∗

. We can solve fixed-point θ

∗

in the

following nested optimization problem [28], [33], [34]:

⎧

⎪

⎨

⎪

⎩

u

∗

= arg min

u∈R

n×1

˜

u −

˜

R + γ

˜

θ

2

2

θ

∗

= arg min

θ∈R

n×1

˜

θ −

˜

u

∗

2

2

.

(6)

The first step of (6) is to minimize the OPE and the second

is to minimize the FPE. In this paper, we refer to these two

steps as projection step and fixed-point step as in [28].

Given a fixed θ , the closed-form solution to u

∗

in the

projection step of (6) can be found as follows:

u

∗

= arg min

u∈R

n×1

˜

u −

˜

R + γ

˜

θ

2

2

=

˜

T

˜

−1

˜

T

˜

R + γ

˜

θ

. (7)

By substituting (7) into the fixed-point step in (6) and setting

θ = u, the nested optimization problem in (6) is changed to the

following optimization problem to minimize the mean-square

projected Bellman error (MSPBE):

u

∗

= arg min

u∈R

n×1

˜

u −

˜

˜

T

˜

−1

˜

T

˜

R + γ

˜

u

2

2

. (8)

Equation (8) is an empirical version, and it can be derived by

substituting (4) into the original definition of MSPBE in [8]:

MSPBE(θ) = 1/2V

θ

−TV

θ

2

D

, and = (

T

D)

−1

T

D

is the Bellman projection operator.

In [28], two schemes of

1

-regularization problem are

proposed, termed L

1

and L

21

. The difference lies in, where to

place the

1

penalty. L

21

scheme that places

1

penalty in the

fixed-point step forms a solvable Lasso problem by

1

solvers;

whereas, the

2

penalty in the projection step keeps the pro-

jection matrix nonsingular, but the effects on the algorithm

is not entirely clear. For these reasons, we choose the nested

optimization problem scheme that has an

1

penalty in the

fixed-point step. Differently, we use iterative refinement [36]

instead of the

2

penalty to keep the projection matrix nonsin-

gular, and ensure that the result of the projection step converge

to the unbiased solution. The nested optimization problem in

this paper is proposed as

⎧

⎪

⎨

⎪

⎩

u

∗

= arg min

u∈R

n×1

1

2

˜

u −

˜

R + γ

˜

θ

2

2

θ

∗

= arg min

θ∈R

n×1

1

2

˜

θ −

˜

u

∗

2

2

+ β

1

θ

1

(9)

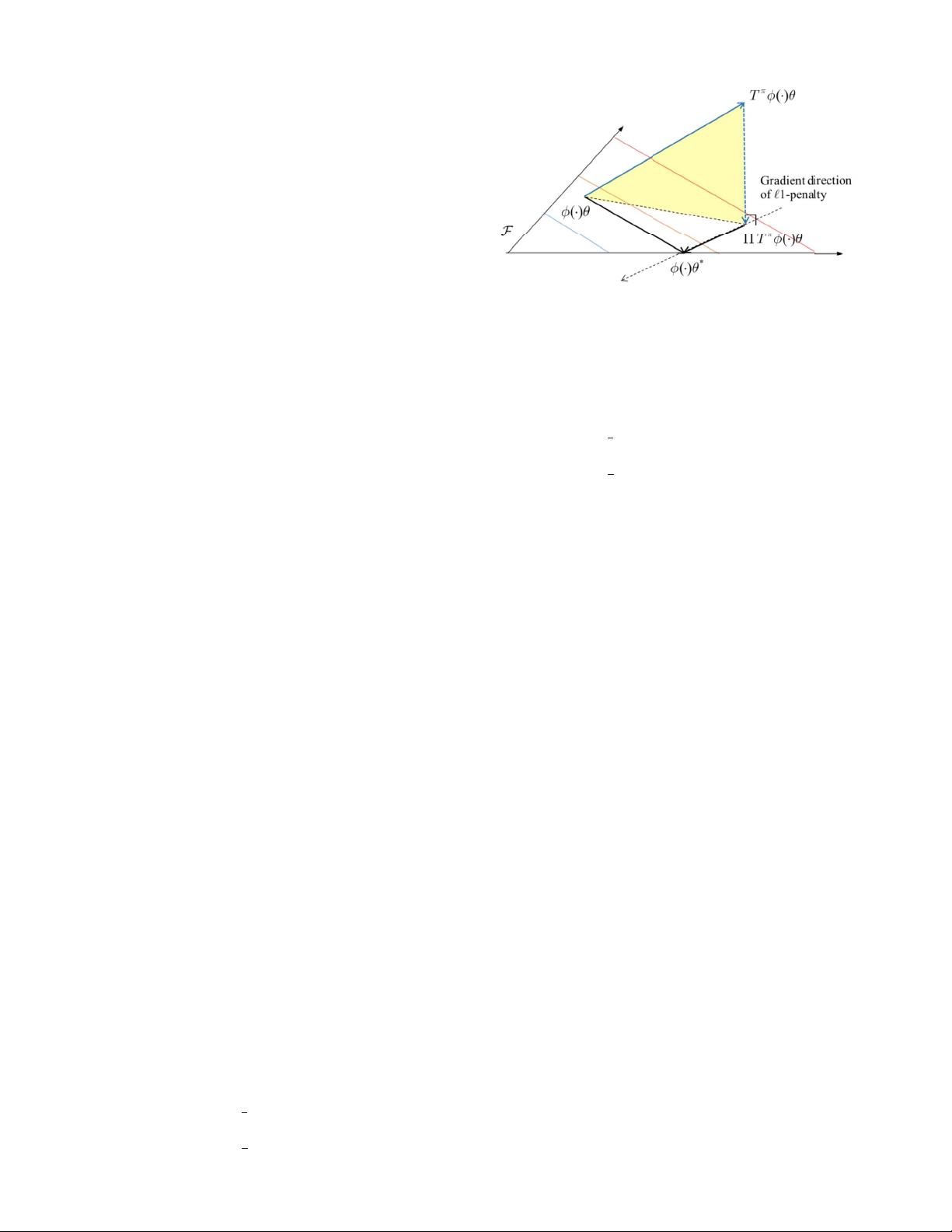

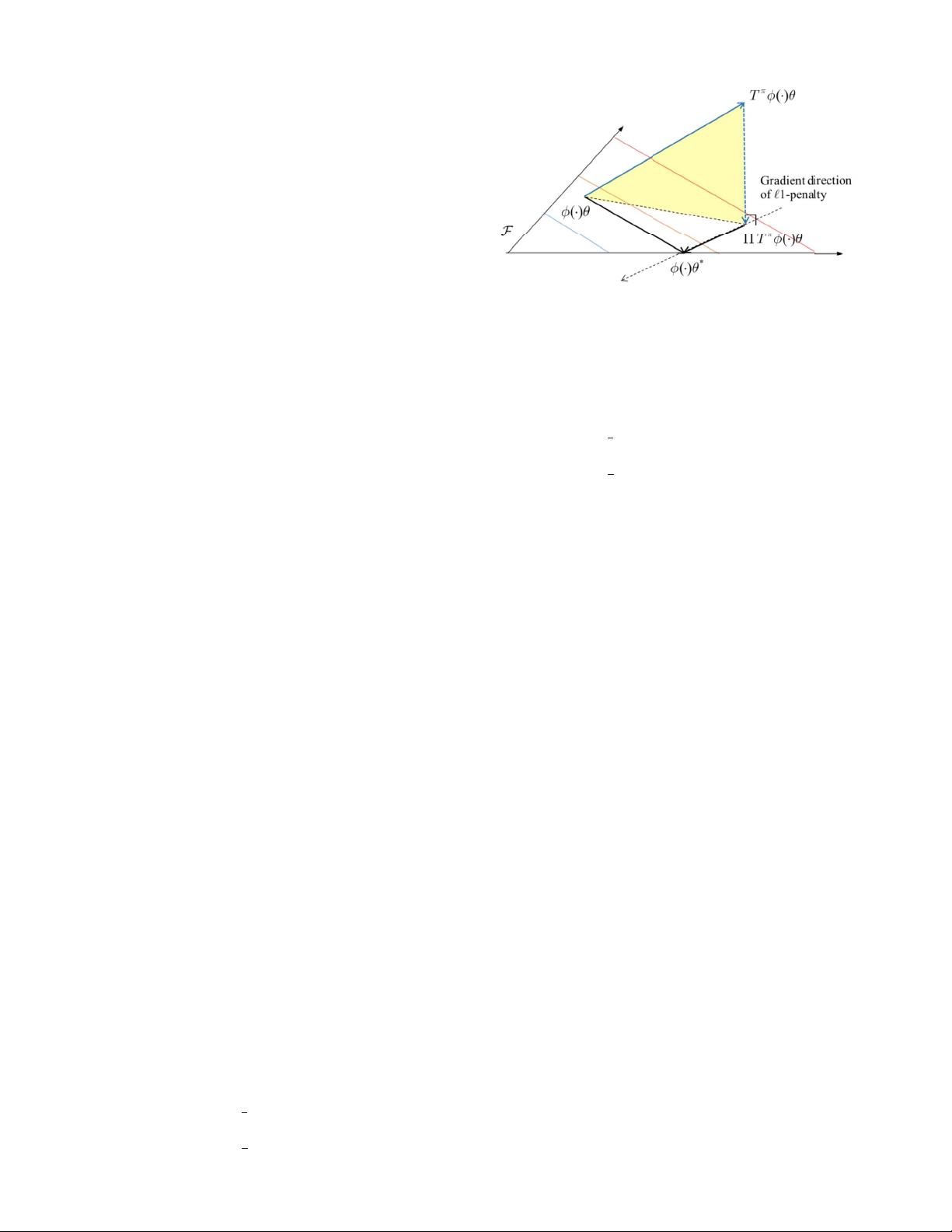

Fig. 1. Graphical illustration of the proposed nested optimization problem.

where β

1

∈ R

+

is an

1

-regularization parameter. An equiv-

alent nested optimization problem of (9) including MSPBE

in (8) can be obtained as follows:

⎧

⎪

⎪

⎨

⎪

⎪

⎩

u

∗

= arg min

u∈R

n×1

1

2

˜

u −

˜

˜

T

˜

−1

˜

T

˜

R + γ

˜

θ

2

2

θ

∗

= arg min

θ∈R

n×1

1

2

˜

θ −

˜

u

∗

2

2

+ β

1

θ

1

.

(10)

We see that the nested optimization in (10) contains a sub-

problem minimizing MSPBE and a subproblem minimizing

a Lasso problem. Both subproblems are relatively easier to

solve than MSPBE with

1

-regularization. The equivalency

of (9) and (10) can be proved as follows. By substituting (7)

into the fixed-point step in (9) and setting θ = u, the fixed

point of (9) can be obtained as

θ

∗

= arg min

θ∈R

n×1

˜

θ −

˜

˜

T

˜

−1

˜

T

˜

R + γ

˜

θ

2

2

+ β

1

θ

1

. (11)

Also we can substitute the closed-form solution to projection

step in (10) into the corresponding fixed-point step as follows:

u

∗

= arg min

u∈R

n×1

˜

u −

˜

˜

T

˜

−1

˜

T

˜

R + γ

˜

θ

2

2

=

˜

T

˜

−1

˜

T

˜

˜

T

˜

−1

˜

T

˜

R + γ

˜

θ

θ

∗

= arg min

θ∈R

n×1

˜

θ −

˜

u

∗

2

2

+ β

1

θ

1

= arg min

θ∈R

n×1

˜

θ −

˜

˜

T

˜

−1

˜

T

˜

˜

T

˜

−1

˜

T

˜

R + γ

˜

θ

2

2

+ β

1

θ

1

= arg min

θ∈R

n×1

˜

θ −

˜

˜

T

˜

−1

˜

T

˜

R + γ

˜

θ

2

2

+ β

1

θ

1

. (12)

We choose the nested optimization problem in (10)asthe

regularization scheme in this paper. The graphical illustration

of this problem is shown in Fig. 1.

With a more generalized meaning, an elastic net

penalty [37] can be used

p

en

(

θ

)

≡ β

1

θ

1

+ β

θ

2

2

= β

1

θ

1

+ μ/β

1

θ

2

2

(13)

where β

2

∈ R

+

is an

2

-regularization parameter, μ ∈ R

+

is tradeoff weight between

2

and

1

penalties. The value of