LI et al.: DISTRIBUTED ESTIMATION OVER AN ADAPTIVE INCREMENTAL NETWORK 153

global estimation at time instant . Consider a Newton’s search

based approach to solving (9) for incremental learning within

a distributed network. The optimal tap weight

is estimated

via [17]

(10)

where

, , denotes a regu-

larization parameter with small positive value,

indicates

an appropriately chosen step-size, which is evaluated in

Section III-E, and the scheme is initialized with an

1

vector

.

For a practical scheme to realize (10), and utilizing the corre-

lation of the input signal at each node, we replace

by the following sample sliding-window estimates:

(11)

(12)

with

equal to the number of recent regressors of each node

whilst

and denote the corresponding input vector and

desired response at instant time

for the th node. Hence, using

the matrix inversion formula, recursion (10) becomes,

(13)

where the local

block data matrix and 1 data vector

are

.

.

.

.

.

.

(14)

and

is employed to avoid the inversion of a rank deficient ma-

trix

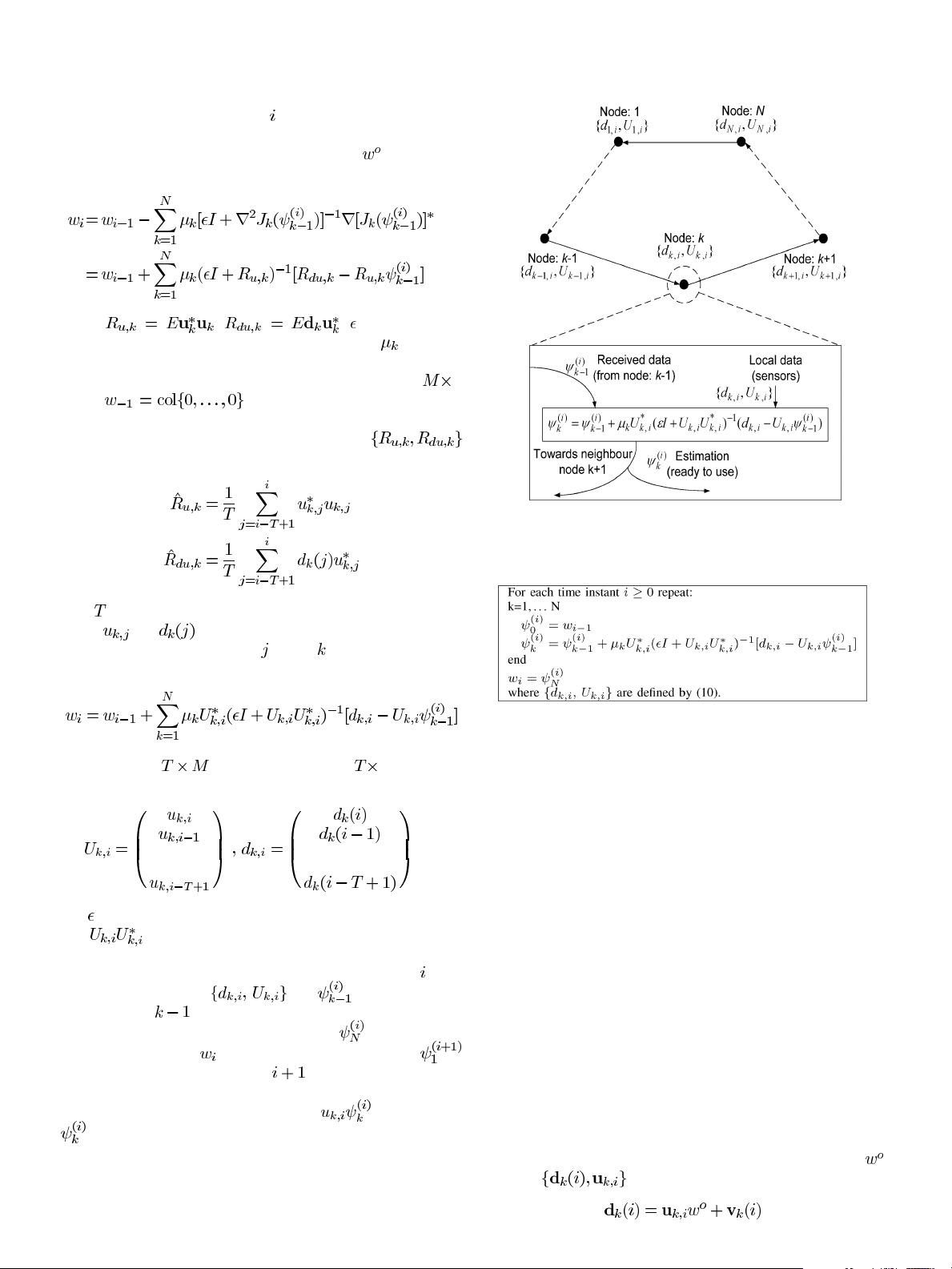

. As such, recursion (13) is the distributed APA

(dAPA) learning algorithm in an incremental network, the op-

eration of which is shown in Fig. 1. At each time instant

, each

node utilizes local data

and received from its

previous node

in the cycle to update the local estimation.

At the end of the cycle, the local estimation

is employed as

the global estimation and the initial local estimation

for the next discrete time instant . The final weight vector

shown at the bottom of Fig. 1 can either be used to generate

a filter output vector term of the form

or the vector

itself can then be used for system identification or equaliza-

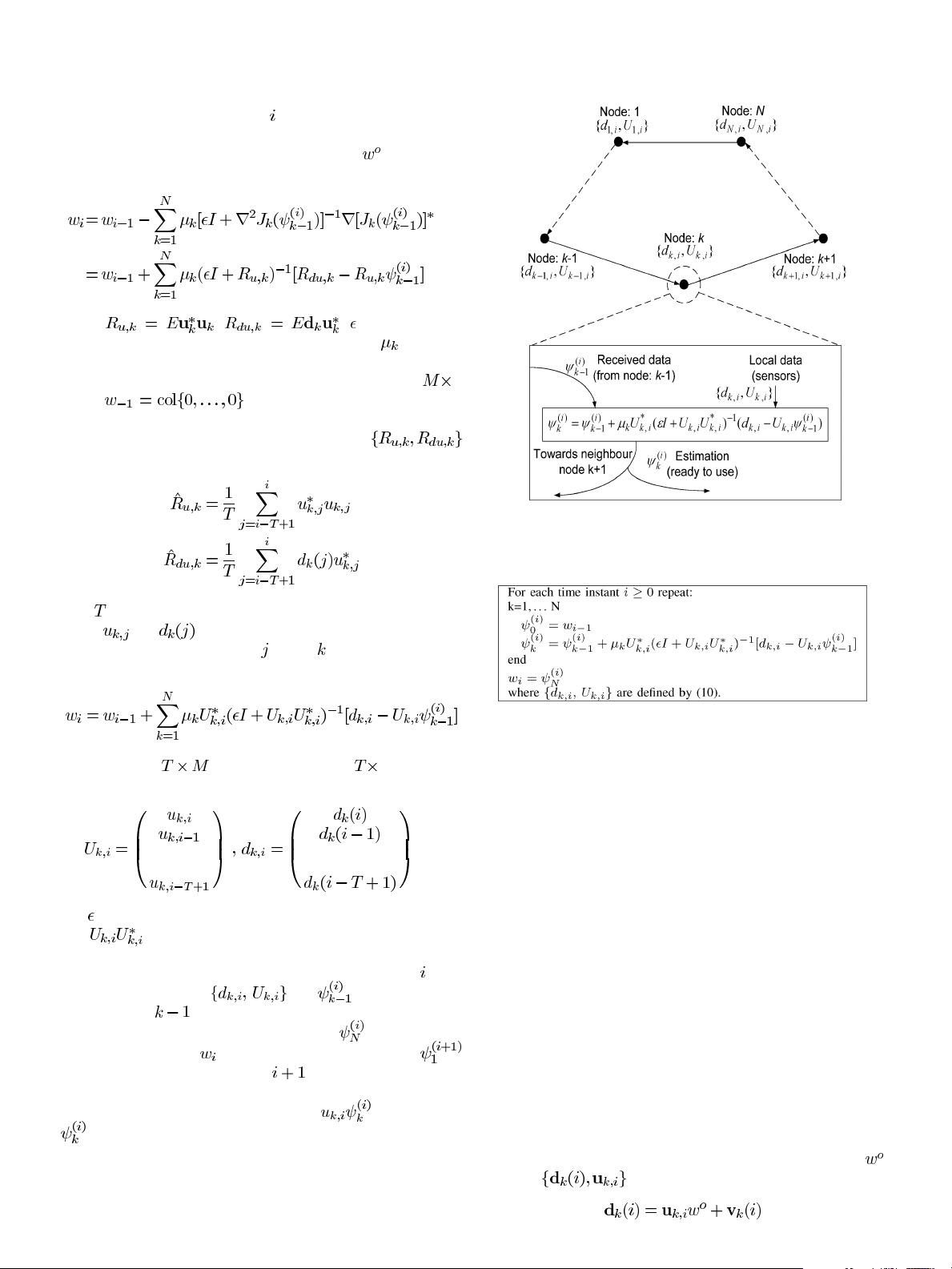

tion. The pseudo-code implementation of dAPA is described in

Table I. In addition, dAPA has intermediate computational and

memory cost between dLMS and dRLS, for certain regressor

length, which is verified in the Appendix.

Fig. 1. Data processing of the dAPA algorithm in an incremental network.

TABLE I

P

SEUDO-CODE IMPLEMENTATION OF D

APA

III. PERFORMANCE ANALYSIS

The convergence behaviors of classical APA-based algo-

rithms are studied in [15]–[19], exploiting arguments based on

a single adaptive filter. In order to study the performance of the

dAPA algorithm, we extend the weighted energy conservation

approach for the APA-based algorithms of [18], [19] to the

case of a distributed incremental network, which involves both

the space dimension and the time dimension. However, due to

the energy flow across the interconnected filter, some of the

simplifications for a single filter case cannot be adopted. A

set of weighting matrices is particularly chosen to decouple a

set of equations and we evaluate the transient and steady-state

performances at each individual node in terms of mean-square

deviation (MSD), excess mean-square-error (EMSE) and

mean-square error (MSE). The closed-form expressions for

the theoretical results are formed under some simplifying

assumptions described below.

A. Data Model and Assumption

As defined earlier, we use boldface letters as the random

quantities and assume the same model as in [9] to carry out the

performance analysis:

A1) The relation between the unknown system vector

and takes the form:

(15)