
filter #175

filter #55

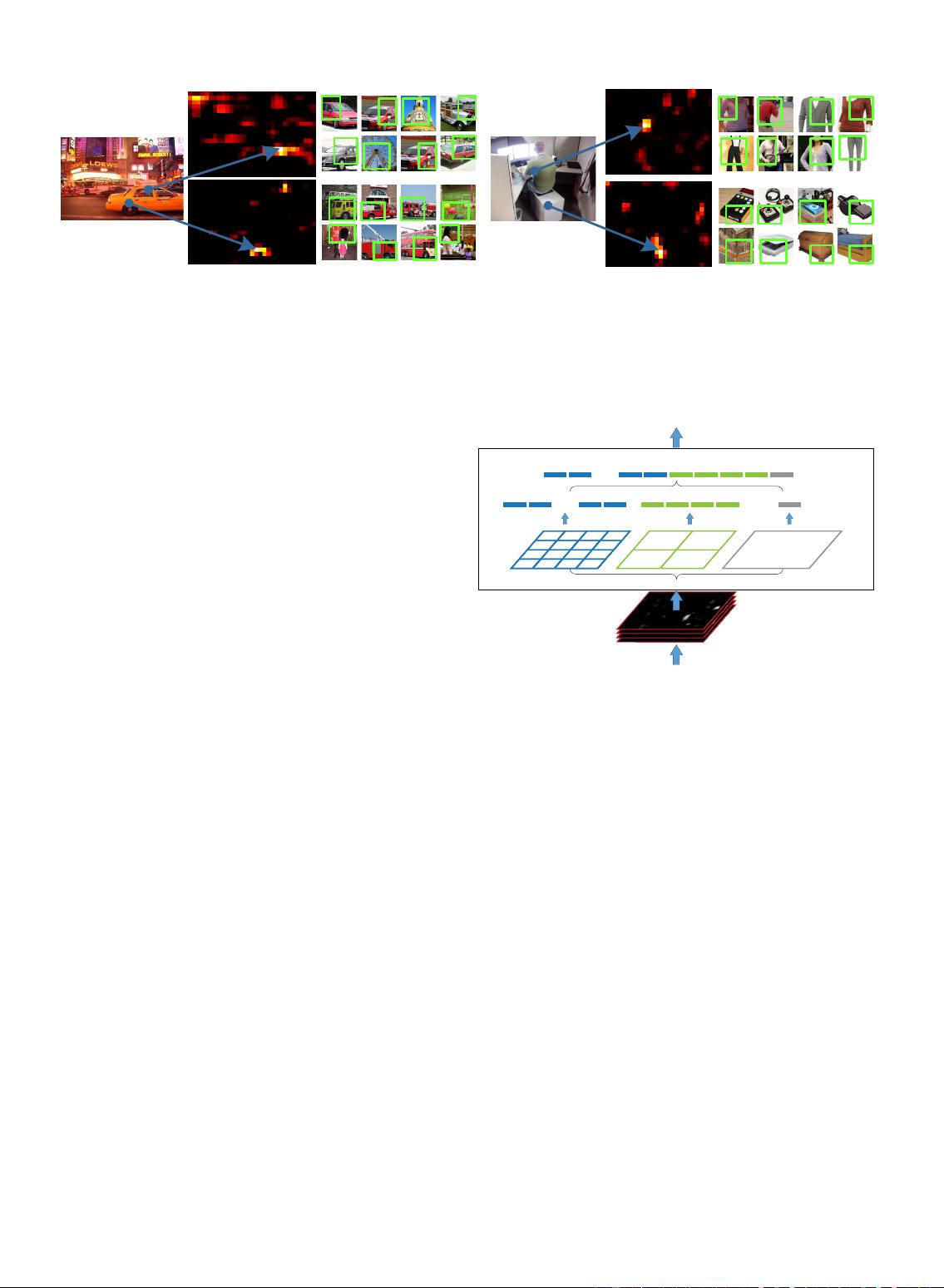

(a) image (b) feature maps (c) strongest activations

filter #66

filter #118

(a) image (b) feature maps (c) strongest activations

Figure 2: Visualization of the feature maps. (a) Two images in Pascal VOC 2007. (b) The feature maps of some

conv

5

filters. The arrows indicate the strongest responses and their corresponding positions in the images.

(c) The ImageNet images that have the strongest responses of the corresponding filters. The green rectangles

mark the receptive fields of the strongest responses.

layers are fully connected, with an N-way softmax as the output, where N is the number of categories.

The deep network described above needs a fixed image size. However, we notice that the fixed-size requirement comes only from the fully-connected layers, which demand fixed-length vectors as inputs. The convolutional layers, on the other hand, accept inputs of arbitrary sizes. They use sliding filters, and their outputs have roughly the same aspect ratio as the inputs. These outputs are known as feature maps [1]: they encode not only the strength of the responses, but also their spatial positions.
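To make the size argument concrete, the sketch below applies the standard output-size formula for a sliding filter, floor((n + 2p - f) / s) + 1, to inputs of different sizes. The function names and the kernel, stride, and padding values are illustrative assumptions, not the configuration of the network described here.

```python
def conv_output_size(n, kernel, stride=1, pad=0):
    """Output length along one dimension for a sliding filter."""
    return (n + 2 * pad - kernel) // stride + 1


def feature_map_shape(height, width, kernel=3, stride=2, pad=1):
    """Spatial shape of one feature map (hypothetical layer settings)."""
    return (conv_output_size(height, kernel, stride, pad),
            conv_output_size(width, kernel, stride, pad))


def flattened_length(height, width, num_filters=256):
    """Length of the vector obtained by flattening all feature maps."""
    fh, fw = feature_map_shape(height, width)
    return num_filters * fh * fw


if __name__ == "__main__":
    for h, w in [(224, 224), (180, 320), (480, 360)]:
        # The map shape follows the input shape, so the flattened
        # length changes from image to image.
        print((h, w), feature_map_shape(h, w), flattened_length(h, w))
```

The convolution itself runs on any input size, but the flattened length differs per image, which is what a fully-connected layer cannot accept.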

In Figure 2, we visualize some feature maps. They are generated by some filters of the conv5 layer. Figure 2(c) shows the ImageNet images that most strongly activate these filters. We see that a filter can be activated by some semantic content. For example, the 55th filter (Figure 2, bottom left) is most activated by a circle shape; the 66th filter (Figure 2, top right) is most activated by a ∧-shape; and the 118th filter (Figure 2, bottom right) is most activated by a ∨-shape. These shapes in the input images (Figure 2(a)) activate the feature maps at the corresponding positions (the arrows in Figure 2).
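As a rough illustration of how a strongest response and its position could be read off a feature map, the following sketch uses NumPy. The helper names are hypothetical, and the proportional mapping back to image coordinates is a simplifying assumption that ignores the exact receptive-field geometry of the network.

```python
import numpy as np


def strongest_response(feature_map):
    """Return (value, row, col) of the largest activation in a 2-D map."""
    row, col = np.unravel_index(np.argmax(feature_map), feature_map.shape)
    return float(feature_map[row, col]), int(row), int(col)


def to_image_position(row, col, map_shape, image_shape):
    """Approximate image coordinates of a map cell by proportional scaling.

    Assumption: the feature map covers the image uniformly, so the centre
    of cell (row, col) scales linearly with the image size.
    """
    scale_y = image_shape[0] / map_shape[0]
    scale_x = image_shape[1] / map_shape[1]
    return (row + 0.5) * scale_y, (col + 0.5) * scale_x


if __name__ == "__main__":
    fmap = np.zeros((13, 20))
    fmap[4, 15] = 3.0  # synthetic peak standing in for a filter response
    value, r, c = strongest_response(fmap)
    print(value, r, c, to_image_position(r, c, fmap.shape, (208, 320)))
```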

It is worth noting that we generate the feature maps in Figure 2 without fixing the input size. These feature maps generated by deep convolutional layers are analogous to the feature maps in traditional methods [27], [28]. In those methods, SIFT vectors [29] or image patches [28] are densely extracted and then encoded, e.g., by vector quantization [16], [15], [30], sparse coding [17], [18], or Fisher kernels [19]. These encoded features constitute the feature maps, and are then pooled by Bag-of-Words (BoW) [16] or spatial pyramids [14], [15]. The deep convolutional features can be pooled in a similar way.

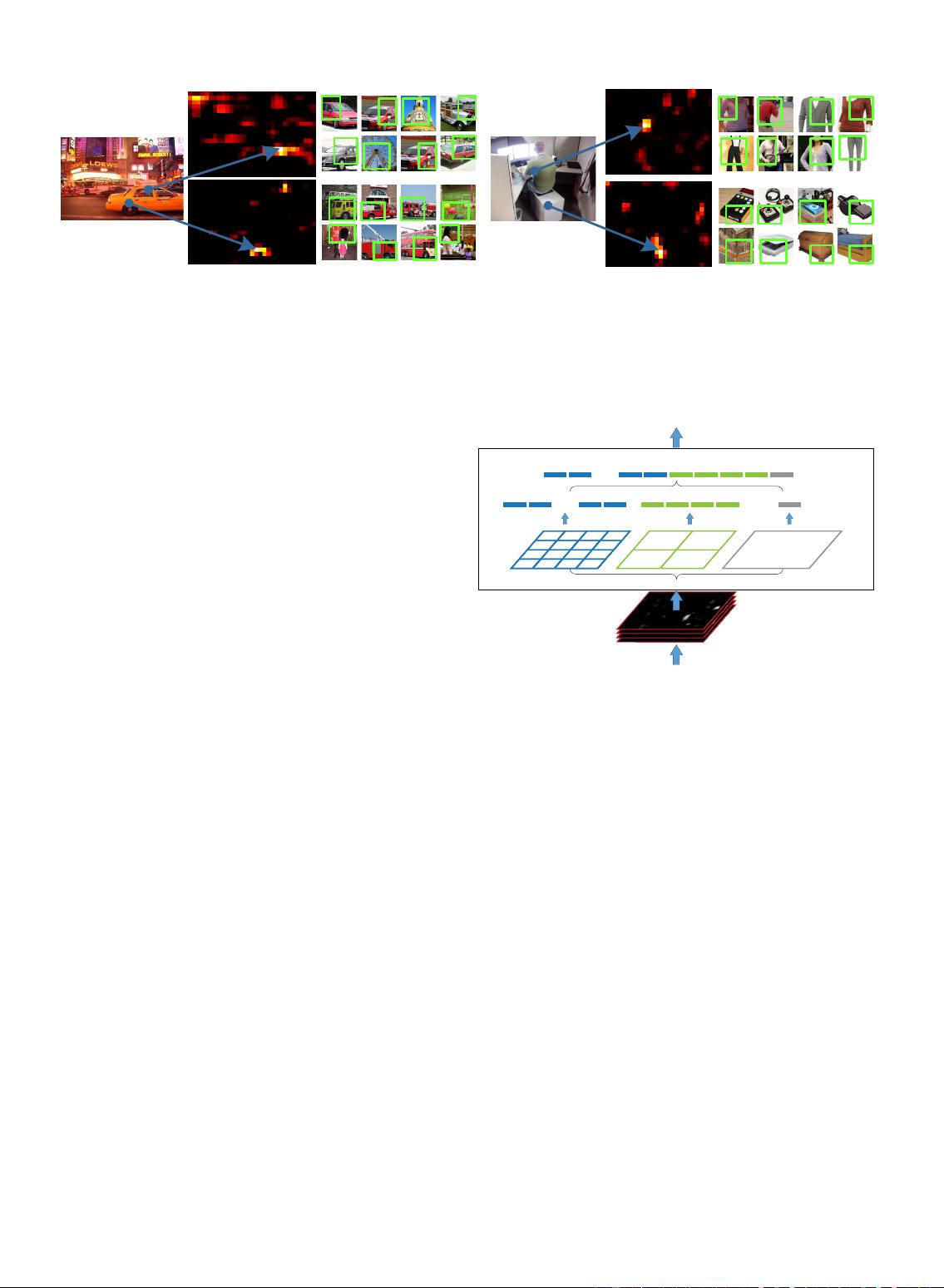

2.2 The Spatial Pyramid Pooling Layer

The convolutional layers accept arbitrary input sizes,

but they produce outputs of variable sizes. The classi-

fiers (SVM/softmax) or fully-connected layers require

convolutional layers

feature maps of conv

5

(arbitrary size)

fixed-length representation

input image

16×256-d 4×256-d 256-d

…...

…...

spatial pyramid pooling layer

fully-connected layers (fc

6

, fc

7

)

Figure 3: A network structure with a spatial pyramid

pooling layer. Here 256 is the filter number of the

conv

5

layer, and conv

5

is the last convolutional layer.

fixed-length vectors. Such vectors can be generated

by the Bag-of-Words (BoW) approach [16] that pools

the features together. Spatial pyramid pooling [14],

[15] improves BoW in that it can maintain spatial

information by pooling in local spatial bins. These

spatial bins have sizes proportional to the image size,

so the number of bins is fixed regardless of the image

size. This is in contrast to the sliding window pooling

of the previous deep networks [3], where the number

of sliding windows depends on the input size.
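The contrast between the two pooling schemes can be checked with a little arithmetic. In the sketch below, the window size, the stride, and the pyramid levels (4×4, 2×2, 1×1, matching the 16, 4, and 1 bins shown in Figure 3) are assumptions chosen for illustration, and the function names are hypothetical.

```python
def num_sliding_windows(height, width, window=3, stride=2):
    """Number of pooling windows when a fixed-size window slides over the map."""
    rows = (height - window) // stride + 1
    cols = (width - window) // stride + 1
    return rows * cols


def num_pyramid_bins(levels=(4, 2, 1)):
    """Number of spatial bins in a pyramid with n x n bins per level."""
    return sum(n * n for n in levels)


if __name__ == "__main__":
    for h, w in [(13, 13), (10, 17)]:
        # The sliding-window count changes with the map size;
        # the pyramid bin count does not depend on it at all.
        print((h, w), num_sliding_windows(h, w), num_pyramid_bins())
```

With these settings a 13×13 map yields 36 sliding windows and a 10×17 map yields 32, while the pyramid always has 21 bins.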

To adapt the deep network to images of arbitrary sizes, we replace the last pooling layer (e.g., pool5, after the last convolutional layer) with a spatial pyramid pooling layer. Figure 3 illustrates our method. In each spatial bin, we pool the responses of each filter (throughout this paper we use max pooling). The outputs of the spatial pyramid pooling are kM-dimensional vectors, where M is the number of bins and k is the number of filters in the last convolutional layer. These fixed-dimensional vectors are the input to the fully-connected layer.
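A minimal NumPy sketch of this pooling step is given below. It is an illustration under stated assumptions rather than the implementation used in the paper: the function name `spatial_pyramid_pool` is hypothetical, the pyramid levels (4×4, 2×2, 1×1) are taken from Figure 3, and the bin boundaries are computed with a floor/ceil rule chosen here so that every bin is non-empty.

```python
import math

import numpy as np


def spatial_pyramid_pool(feature_maps, levels=(4, 2, 1)):
    """Max-pool a (k, H, W) array into a vector of length k * M.

    M is the total number of bins, sum(n * n for n in levels).
    """
    k, height, width = feature_maps.shape
    pooled = []
    for n in levels:
        for i in range(n):
            # Bin edges are proportional to the map size, so the number
            # of bins is the same for every input.
            top = math.floor(i * height / n)
            bottom = math.ceil((i + 1) * height / n)
            for j in range(n):
                left = math.floor(j * width / n)
                right = math.ceil((j + 1) * width / n)
                region = feature_maps[:, top:bottom, left:right]
                # One max response per filter in this bin.
                pooled.append(region.max(axis=(1, 2)))
    return np.concatenate(pooled)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    for shape in [(256, 13, 13), (256, 10, 17)]:
        out = spatial_pyramid_pool(rng.random(shape))
        print(shape, "->", out.shape)
```

With k = 256 filters and M = 16 + 4 + 1 = 21 bins, both inputs produce a vector of length 256 × 21 = 5376, regardless of the spatial size of the feature maps.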

With spatial pyramid pooling, the input image can