0 1000 2000 3000 4000 5000

Iteration

0

2

4

6

8

10

Style Loss (×10

5

)

Batch Norm

Instance Norm

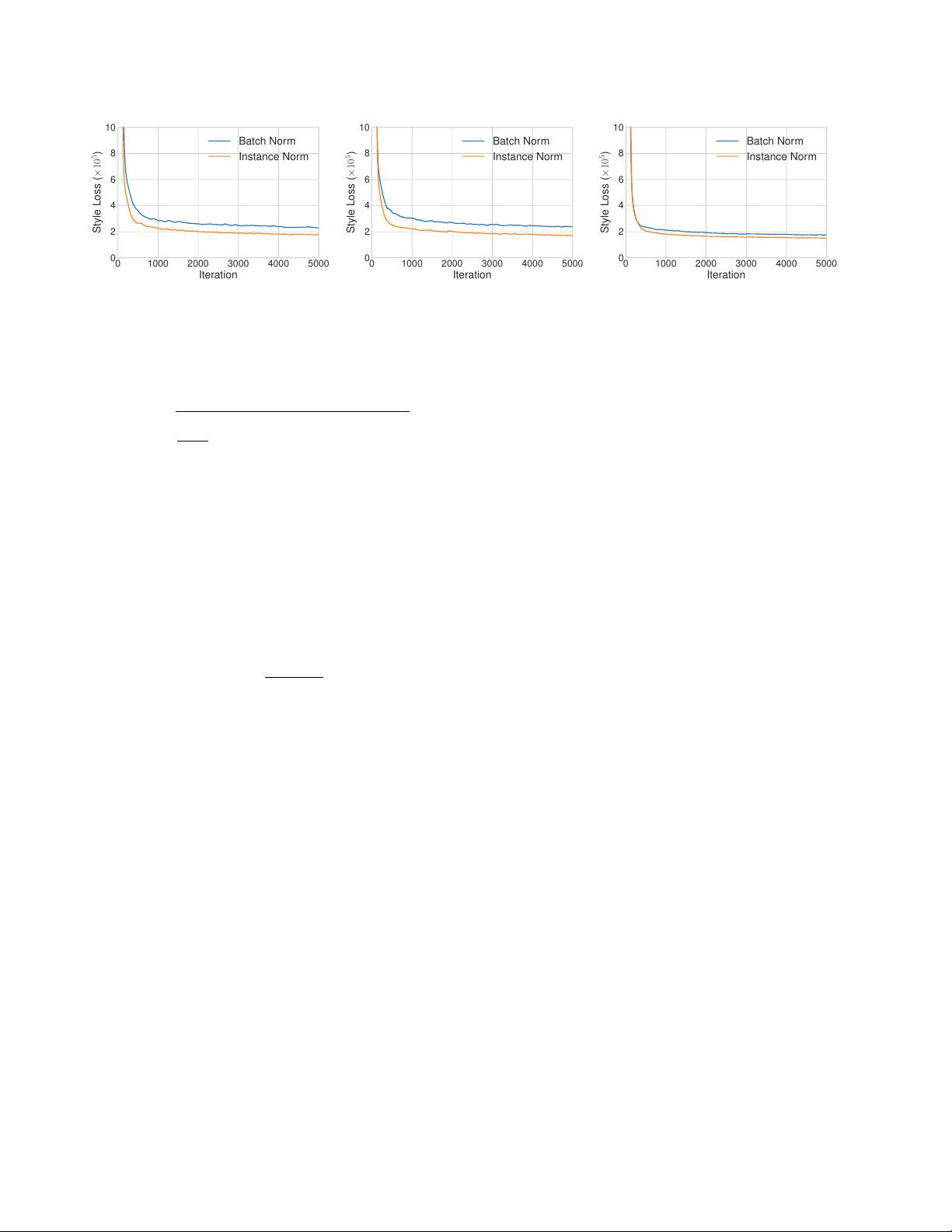

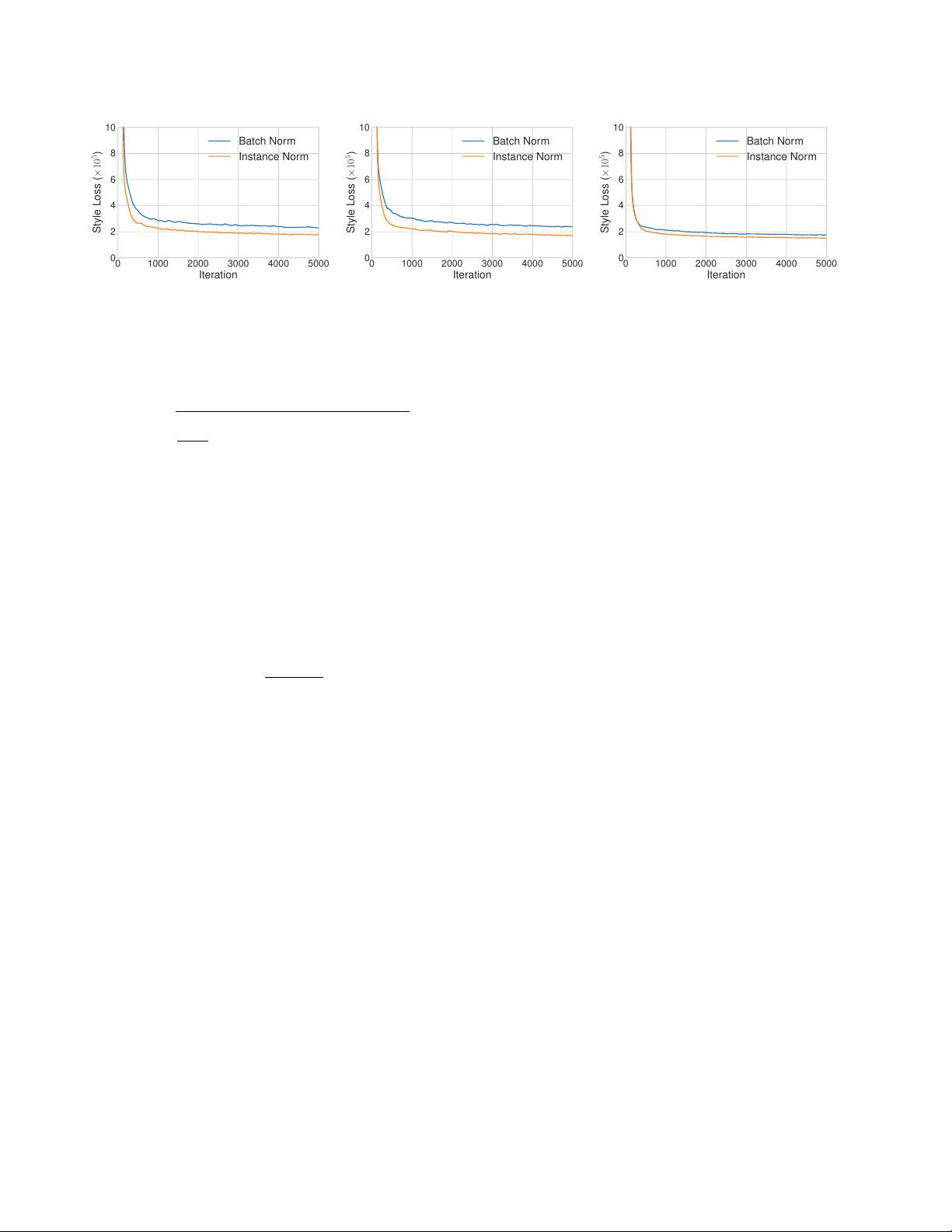

(a) Trained with original images.

0 1000 2000 3000 4000 5000

Iteration

0

2

4

6

8

10

Style Loss (×10

5

)

Batch Norm

Instance Norm

(b) Trained with contrast normalized images.

0 1000 2000 3000 4000 5000

Iteration

0

2

4

6

8

10

Style Loss (×10

5

)

Batch Norm

Instance Norm

(c) Trained with style normalized images.

Figure 1. To understand the reason for IN’s effectiveness in style transfer, we train an IN model and a BN model with (a) original images in MS-COCO [36], (b) contrast normalized images, and (c) style normalized images using a pre-trained style transfer network [24]. The improvement brought by IN remains significant even when all training images are normalized to the same contrast, but is much smaller when all images are (approximately) normalized to the same style. Our results suggest that IN performs a kind of style normalization.

$$\sigma_{nc}(x) = \sqrt{\frac{1}{HW} \sum_{h=1}^{H} \sum_{w=1}^{W} \left(x_{nchw} - \mu_{nc}(x)\right)^2 + \epsilon} \tag{6}$$

Another difference is that IN layers are applied at test time unchanged, whereas BN layers usually replace mini-batch statistics with population statistics.
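As a minimal sketch of the statistics in Eq. (6), the following pure-Python code computes the mean and standard deviation over the spatial positions of each channel of each sample, then normalizes with them. The function names, the nested-list layout `x[n][c][h][w]`, and the default `eps` value are assumptions made for illustration, not the authors' implementation. Because the statistics depend only on the input sample itself, the same function can be used unchanged at training and test time.

```python
import math


def instance_norm_stats(x, eps=1e-5):
    """Per-sample, per-channel (mean, std) for a nested list x[n][c][h][w].

    eps is a small stabilizing constant (its value here is an assumption).
    """
    stats = []
    for sample in x:
        sample_stats = []
        for channel in sample:
            values = [v for row in channel for v in row]
            mean = sum(values) / len(values)
            var = sum((v - mean) ** 2 for v in values) / len(values)
            sample_stats.append((mean, math.sqrt(var + eps)))
        stats.append(sample_stats)
    return stats


def instance_norm(x, eps=1e-5):
    """Normalize each channel of each sample with its own spatial statistics."""
    stats = instance_norm_stats(x, eps)
    return [
        [
            [[(v - mean) / std for v in row] for row in channel]
            for channel, (mean, std) in zip(sample, sample_stats)
        ]
        for sample, sample_stats in zip(x, stats)
    ]
```

Two inputs that differ only by a per-sample scale, for example one sample and a copy multiplied by 10, map to nearly identical outputs (up to the effect of `eps`), which illustrates the invariance to contrast mentioned in the text.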

3.3. Conditional Instance Normalization

Instead of learning a single set of affine parameters γ and β, Dumoulin et al. [11] proposed a conditional instance normalization (CIN) layer that learns a different set of parameters $\gamma^s$ and $\beta^s$ for each style s:

$$\mathrm{CIN}(x; s) = \gamma^s \left(\frac{x - \mu(x)}{\sigma(x)}\right) + \beta^s \tag{7}$$

During training, a style image together with its index s is randomly chosen from a fixed set of styles s ∈ {1, 2, ..., S} (S = 32 in their experiments). The content image is then processed by a style transfer network in which the corresponding $\gamma^s$ and $\beta^s$ are used in the CIN layers. Surprisingly, the network can generate images in completely different styles by using the same convolutional parameters but different affine parameters in IN layers.
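The lookup in Eq. (7) can be sketched as follows: the normalization is shared across styles, and only the affine parameters are selected by the style index. This is an illustrative sketch under assumptions, not the implementation of [11]: the function names, the single-sample layout `x[c][h][w]`, the tables `gammas[s][c]` and `betas[s][c]`, the `eps` value, and the zero-based style index (the text uses s ∈ {1, ..., S}) are all hypothetical choices.

```python
import math


def normalize_channel(channel, eps=1e-5):
    """Normalize one feature map (a list of rows) by its spatial mean and std."""
    values = [v for row in channel for v in row]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    std = math.sqrt(var + eps)
    return [[(v - mean) / std for v in row] for row in channel]


def conditional_instance_norm(x, s, gammas, betas, eps=1e-5):
    """Apply Eq. (7) to one sample x[c][h][w] for style index s (zero-based here).

    gammas[s][c] and betas[s][c] hold one scale and one shift per style and channel.
    """
    gamma_s, beta_s = gammas[s], betas[s]
    out = []
    for c, channel in enumerate(x):
        normed = normalize_channel(channel, eps)
        out.append([[gamma_s[c] * v + beta_s[c] for v in row] for row in normed])
    return out
```

Calling the function twice on the same `x` with different values of `s` reuses the identical normalized features and changes only the per-channel scale and shift, which mirrors the observation that different styles share convolutional parameters.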

Compared with a network without normalization layers, a network with CIN layers requires 2FS additional parameters, where F is the total number of feature maps in the network [11]. Since the number of additional parameters scales linearly with the number of styles, it is challenging to extend their method to model a large number of styles (e.g., tens of thousands). Also, their approach cannot adapt to arbitrary new styles without re-training the network.
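To make the linear scaling concrete, the count 2FS can be evaluated directly. The helper name and the value of F below are hypothetical and chosen only for illustration; S = 32 is the number of styles reported for [11], and the larger value of S stands in for "tens of thousands" of styles.

```python
def cin_extra_params(num_feature_maps, num_styles):
    """Extra affine parameters for CIN: one gamma and one beta per feature map per style."""
    return 2 * num_feature_maps * num_styles


# F = 1000 is a made-up network size used only to show the growth with S.
for num_styles in (32, 10_000):
    print(num_styles, cin_extra_params(1000, num_styles))
```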

4. Interpreting Instance Normalization

Despite the great success of (conditional) instance normalization, the reason it works particularly well for style transfer remains elusive. Ulyanov et al. [52] attribute the success of IN to its invariance to the contrast of the content image. However, IN takes place in the feature space, so it should have a more profound impact than a simple contrast normalization in the pixel space. Perhaps even more surprising is the fact that the affine parameters in IN can completely change the style of the output image.

It is known that the convolutional feature statistics of a DNN can capture the style of an image [16, 30, 33]. While Gatys et al. [16] use the second-order statistics as their optimization objective, Li et al. [33] recently showed that matching many other statistics, including channel-wise mean and variance, is also effective for style transfer. Motivated by these observations, we argue that instance normalization performs a form of style normalization by normalizing feature statistics, namely the mean and variance. Although a DNN serves as an image descriptor in [16, 33], we believe that the feature statistics of a generator network can also control the style of the generated image.
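A short derivation makes the link between the affine parameters and the channel-wise statistics explicit. It assumes an IN layer of the same form as Eq. (7) with per-channel parameters, written here as $\gamma_c$ and $\beta_c$ (this subscript notation is introduced only for the sketch), where $\mu_{nc}(x)$ is the spatial mean of channel $c$ of sample $n$ and $\sigma_{nc}(x)$ is defined as in Eq. (6) with a small stabilizing constant $\epsilon$ under the square root.

```latex
% Output of the layer for sample n, channel c, position (h, w).
y_{nchw} = \gamma_c \, \frac{x_{nchw} - \mu_{nc}(x)}{\sigma_{nc}(x)} + \beta_c

% Spatial mean of the output: the centered term sums to zero.
\mu_{nc}(y)
  = \frac{\gamma_c}{\sigma_{nc}(x)} \cdot \frac{1}{HW}
    \sum_{h=1}^{H} \sum_{w=1}^{W} \bigl( x_{nchw} - \mu_{nc}(x) \bigr) + \beta_c
  = \beta_c

% Spatial variance of the output, using
% \sigma_{nc}(x)^2 = \frac{1}{HW} \sum_{h,w} ( x_{nchw} - \mu_{nc}(x) )^2 + \epsilon.
\frac{1}{HW} \sum_{h=1}^{H} \sum_{w=1}^{W} \bigl( y_{nchw} - \beta_c \bigr)^2
  = \gamma_c^2 \, \frac{\sigma_{nc}(x)^2 - \epsilon}{\sigma_{nc}(x)^2}
  \approx \gamma_c^2
```

Under these assumptions, every output channel has mean $\beta_c$ and standard deviation close to $|\gamma_c|$ regardless of the input, so the input's own channel-wise mean and variance are discarded and replaced by values set through the affine parameters.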

We run the code of improved texture networks [52] to perform single-style transfer, with IN or BN layers. As expected, the model with IN converges faster than the BN model (Fig. 1 (a)). To test the explanation in [52], we then normalize all the training images to the same contrast by performing histogram equalization on the luminance channel. As shown in Fig. 1 (b), IN remains effective, suggesting that the explanation in [52] is incomplete. To verify our hypothesis, we normalize all the training images to the same style (different from the target style) using a pre-trained style transfer network provided by [24]. As shown in Fig. 1 (c), the improvement brought by IN becomes much smaller when images are already style normalized. The remaining gap can be explained by the fact that the style normalization with [24] is not perfect. Also, models with BN trained on style normalized images can converge as fast as models with IN trained on the original images. Our results indicate that IN does perform a kind of style normalization.
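The contrast normalization step used for Fig. 1 (b) can be illustrated with a standard histogram equalization routine. The text does not specify how the luminance channel is extracted or which implementation is used, so this is only a sketch under assumptions: the function name is hypothetical, and the input is taken to be a flat list of precomputed integer luminance values in the range 0 to 255.

```python
def equalize_luminance(lum, levels=256):
    """Histogram-equalize a flat list of integer luminance values in [0, levels - 1]."""
    # Count how often each intensity level occurs.
    hist = [0] * levels
    for v in lum:
        hist[v] += 1

    # Cumulative distribution of intensity levels.
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    total = len(lum)
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:
        # A constant image has no contrast to redistribute.
        return list(lum)

    # Map each level so that the cumulative distribution becomes roughly linear.
    scale = (levels - 1) / (total - cdf_min)
    return [round((cdf[v] - cdf_min) * scale) for v in lum]
```

After equalization, a low-contrast input such as values clustered around 100 is stretched over the full intensity range, so all training images end up with a comparable contrast while their content is preserved.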

Since BN normalizes the feature statistics of a batch of