IEEE TRANSACTIONS ON LATEX CLASS FILES, VOL. 14, NO. 8, AUGUST 2016

Residual Networks of Residual Networks:

Multilevel Residual Networks

Ke Zhang, Member, IEEE, Miao Sun, Student Member, IEEE, Tony X. Han, Member, IEEE,

Xingfang Yuan, Student Member, IEEE, Liru Guo, and Tao Liu

Abstract—The residual-network family, with hundreds or even thousands of layers, dominates major image recognition tasks, but building a network by simply stacking residual blocks inevitably limits its optimization ability. This paper proposes a novel residual-network architecture, Residual networks of Residual networks (RoR), to further exploit the optimization ability of residual networks. Instead of optimizing the original residual mapping, RoR optimizes a residual mapping of residual mappings: it adds level-wise shortcut connections on top of the original residual networks to improve their learning capability. More importantly, RoR can be applied to various kinds of residual networks (Pre-ResNets and WRN) and significantly boosts their performance. Our experiments demonstrate the effectiveness and versatility of RoR, which achieves the best performance among all residual-network-like structures. Our RoR-3-WRN58-4 models achieve new state-of-the-art results on CIFAR-10, CIFAR-100 and SVHN, with test errors of 3.77%, 19.73% and 1.59%, respectively. These results outperform 1001-layer Pre-ResNets by 18.4% on CIFAR-10 and 13.1% on CIFAR-100.
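If the 18.4% and 13.1% gains are read as relative reductions in test error (our interpretation; the abstract does not say whether they are relative or absolute), the 1001-layer Pre-ResNet errors they imply can be back-computed from the abstract's own numbers alone. The helper names `relative_reduction` and `implied_baseline` are hypothetical:

```python
def relative_reduction(baseline_err, new_err):
    # Fraction of the baseline error that is removed: (baseline - new) / baseline.
    return (baseline_err - new_err) / baseline_err


def implied_baseline(new_err, reduction):
    # Inverse of relative_reduction: the baseline error consistent with
    # new_err and the stated relative reduction (assumed interpretation).
    return new_err / (1.0 - reduction)


# RoR-3-WRN58-4 test errors (%) and the stated gains over 1001-layer Pre-ResNets.
cifar10_baseline = implied_baseline(3.77, 0.184)
cifar100_baseline = implied_baseline(19.73, 0.131)

print(f"CIFAR-10:  implied baseline {cifar10_baseline:.2f}%")
print(f"CIFAR-100: implied baseline {cifar100_baseline:.2f}%")
```

This prints implied baselines of 4.62% and 22.70%. These are only what the stated percentages imply under the relative reading, not figures reported in this section.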

Index Terms—Image classification, Residual networks, Residual networks of Residual networks, Shortcut, Stochastic Depth.

I. INTRODUCTION

Convolutional Neural Networks (CNNs) have had a profound impact on the computer vision community [1] and have steadily improved state-of-the-art results in many computer vision applications. Since AlexNet's [2] groundbreaking victory at the ImageNet Large Scale Visual Recognition Challenge 2012 (ILSVRC 2012) [4], deeper and deeper CNNs [2], [3], [5], [6], [7], [8], [9], [10], [11], [12] have been

This work is supported by the National Natural Science Foundation of China (Grants No. 61302163 and No. 61302105), the Hebei Province Natural Science Foundation (Grant No. F2015502062) and the Fundamental Research Funds for the Central Universities.

K. Zhang is with the Department of Electronic and Communication Engineering, North China Electric Power University, Baoding, Hebei, 071000, China (e-mail: zhangkeit@ncepu.edu.cn).

M. Sun is with the Department of Electrical and Computer Engineering, University of Missouri, Columbia, MO, 65211, USA (e-mail: msqz6@mail.missouri.edu).

T. X. Han is with the Department of Electrical and Computer Engineering, University of Missouri, Columbia, MO, 65211, USA (e-mail: HanTX@missouri.edu).

X. Yuan is with the Department of Electrical and Computer Engineering, University of Missouri, Columbia, MO, 65211, USA (e-mail: xyuan@mail.missouri.edu).

L. Guo is with the Department of Electronic and Communication Engineering, North China Electric Power University, Baoding, Hebei, 071000, China (e-mail: glr9292@126.com).

T. Liu is with the Department of Electronic and Communication Engineering, North China Electric Power University, Baoding, Hebei, 071000, China (e-mail: taoliu@ncepu.edu.cn).

Manuscript received , 2016; revised , 2016.

[Fig. 1 panels: "Residual Networks" and "RoR" (image, conv, residual blocks with 16, 32 and 64 output maps, avg pool, fc; RoR adds level-wise shortcuts), and "Residual Block" (two conv layers with an identity mapping x; output f(F(x) + x)).]

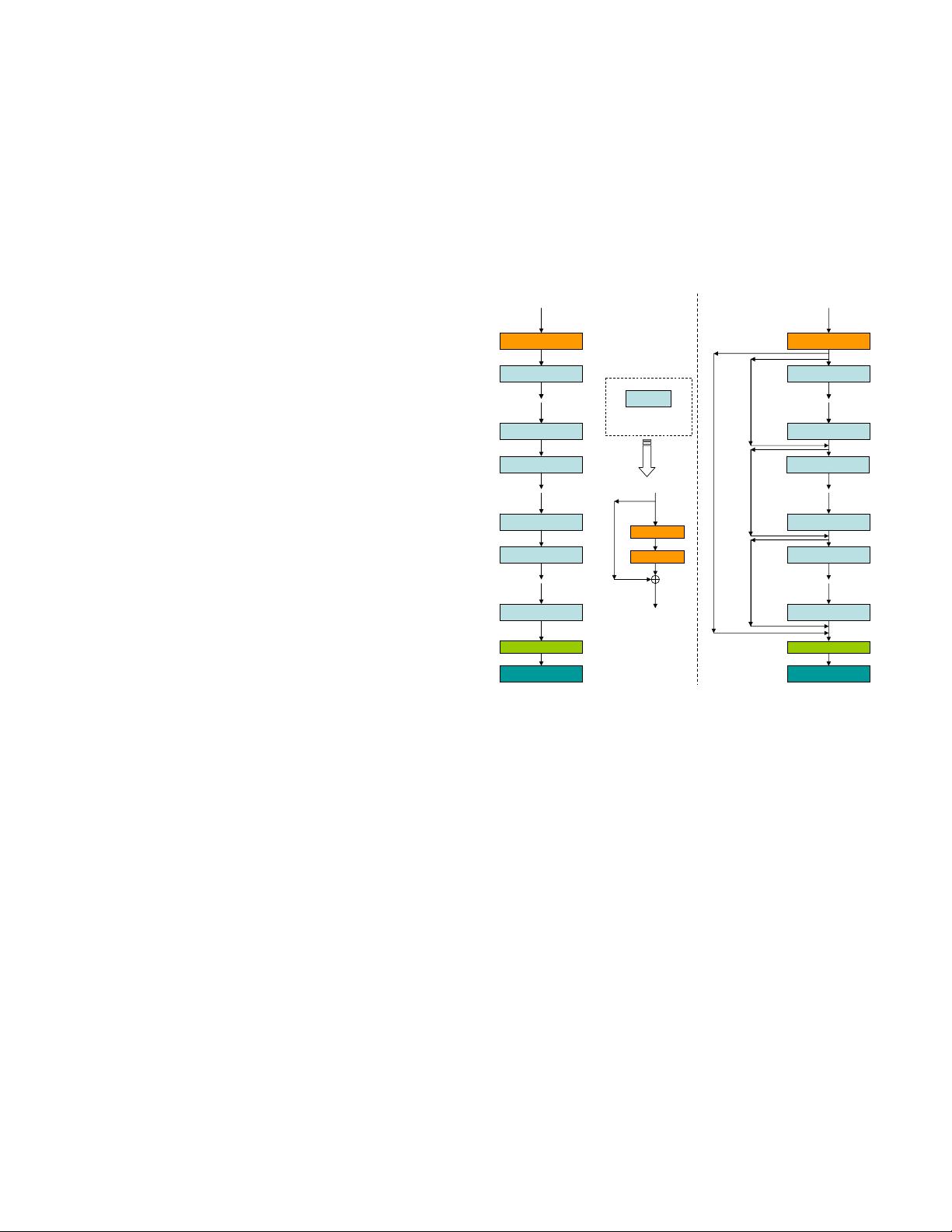

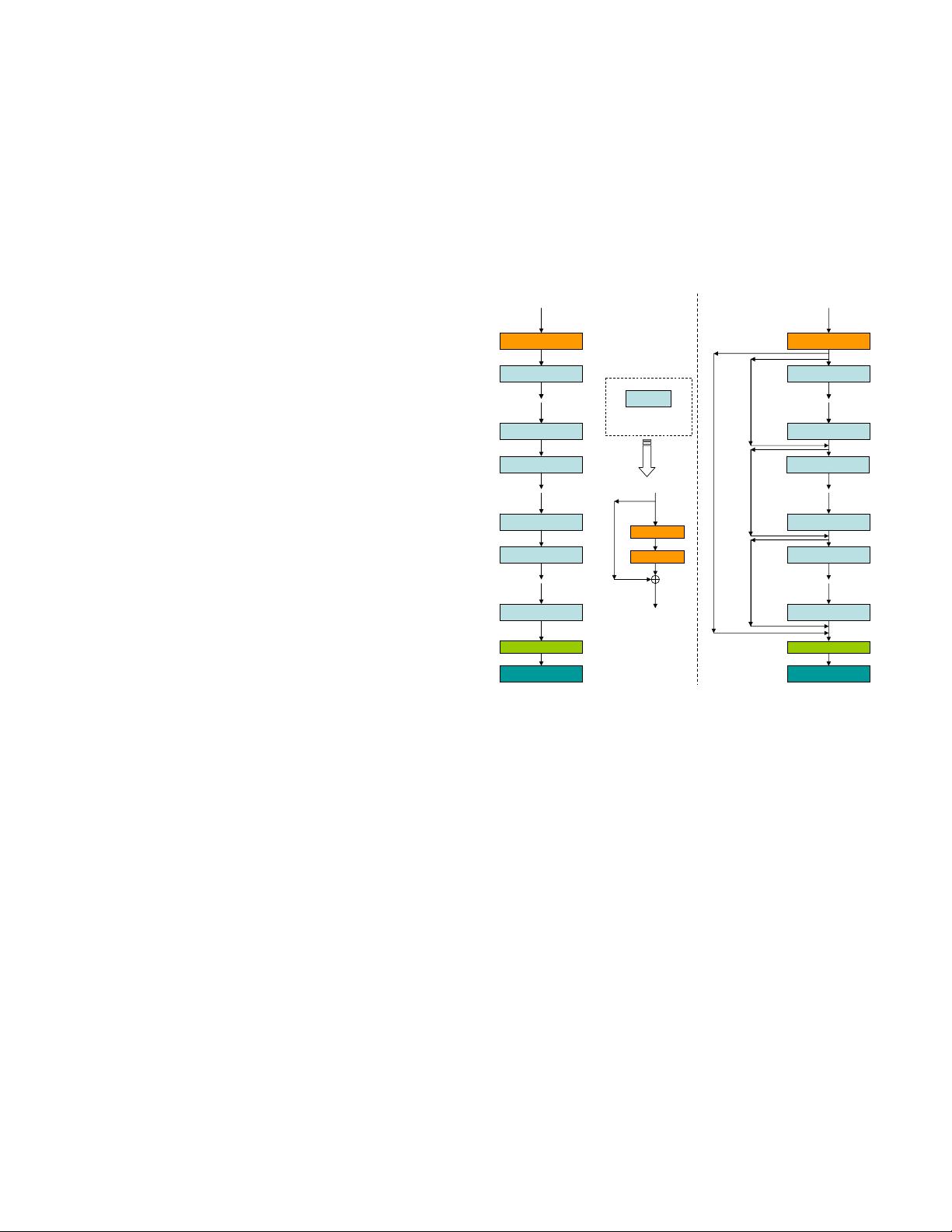

Fig. 1. Left (dashed box): an original residual network, which contains a series of residual blocks, each with one shortcut connection. The number (16, 32, or 64) on each residual block is its number of output feature maps. F(x) is the residual mapping and x is the identity mapping; the original mapping is represented as F(x) + x. Right (dashed box): our new Residual Networks of Residual Networks (RoR) architecture with three levels. RoR is constructed by adding identity shortcuts level by level on top of the original residual network.
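The construction in Fig. 1 can be sketched in a few lines. This is a minimal NumPy sketch under stated assumptions, not the authors' implementation: dense matrices stand in for the convolutions, batch normalization is omitted, f is taken to be ReLU, and the feature width is held constant so that every shortcut can be an identity (the real network changes width between its 16-, 32- and 64-map stages, which this sketch ignores). The function names are hypothetical, and adding the level-1 and level-2 shortcuts after a group's final block, outside the activation, is our own simplification.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def residual_mapping(x, w1, w2):
    # Toy F(x): two dense layers stand in for the block's two conv layers.
    return w2 @ relu(w1 @ x)


def residual_block(x, w1, w2):
    # Original residual block from Fig. 1: f(F(x) + x), with f = ReLU here.
    return relu(residual_mapping(x, w1, w2) + x)


def plain_forward(x, groups):
    # Original residual network: residual blocks simply stacked, group by group.
    for blocks in groups:
        for w1, w2 in blocks:
            x = residual_block(x, w1, w2)
    return x


def ror3_forward(x, groups):
    # Three-level RoR sketch (assumed placement of the extra shortcuts):
    #   level 3: the per-block shortcuts inside residual_block
    #   level 2: one identity shortcut spanning each group of blocks
    #   level 1: one identity shortcut spanning all groups
    root_input = x
    for blocks in groups:
        group_input = x
        for w1, w2 in blocks:
            x = residual_block(x, w1, w2)
        x = x + group_input  # level-2 shortcut
    return x + root_input  # level-1 shortcut


# Usage: 3 groups of 2 blocks each on a 4-dimensional toy feature vector.
rng = np.random.default_rng(0)
dim = 4
groups = [
    [(0.1 * rng.standard_normal((dim, dim)), 0.1 * rng.standard_normal((dim, dim)))
     for _ in range(2)]
    for _ in range(3)
]
x = np.abs(rng.standard_normal(dim))
y_plain = plain_forward(x, groups)
y_ror = ror3_forward(x, groups)
```

With all weights set to zero, F(x) = 0 and each block reduces to f(x) = x for non-negative inputs, so the plain network returns x unchanged while this RoR sketch returns 9x: each of the three group shortcuts doubles the signal and the root shortcut adds one more copy of the input, which makes the extra identity paths easy to see.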

proposed, achieving better performance on ImageNet and other benchmark datasets. These results revealed the importance of network depth, since deeper networks led to superior results.

With a dramatic increase in depth, residual networks (ResNets) [12] achieved state-of-the-art performance in the ILSVRC 2015 classification, localization and detection tasks and in the COCO detection and segmentation tasks. However, very deep models suffer from vanishing gradients and over-fitting, so thousand-layer ResNets perform worse than hundred-layer ResNets. Identity Mapping ResNets (Pre-ResNets) [13] then simplified the training of residual networks by adopting a BN-ReLU-conv (pre-activation) order. Pre-ResNets alleviate the vanishing-gradient problem, so the performance of thousand-layer Pre-ResNets can be further improved. The

arXiv:1608.02908v1 [cs.CV] 9 Aug 2016