The Pragmatics Information Extraction Based on BP Neural Network

Liu Ding, Jiang Minghu

School of Humanities and Social Sciences, Lab. of Computational Linguistics, Tsinghua University, Beijing, China

liuding10@mails.tsinghua.edu.cn, jiang.mh@tsinghua.edu.cn

Abstract—This article describes a method that uses a BP neural network to extract pragmatic information from a conversational corpus. The texts are then clustered based on the pragmatic information matrix generated by the BP neural network. Compared with the original term-document matrix, the pragmatic information matrix clearly improves the clustering results.

Key words: BP neural network, pragmatics, conversational corpus, text clustering

I. INTRODUCTION

In recent studies on natural language processing, researchers have used lexical, syntactic and semantic information rather than pragmatic information. In some research areas, however, especially text classification and text clustering, researchers have to deal with high-dimensional, sparse matrices. Such a matrix not only contains a great deal of redundant information but also consumes more computing resources to process. In fact, the huge, high-dimensional and sparse matrix known as the term-document matrix is not needed to cluster or classify texts: a few special keywords are enough to identify the features of a sample text, such as the time words, scene words and role words in conversational texts. Because this kind of information relates to the participants in a conversation, it can be viewed as pragmatic information. This article describes a method that extracts it.
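To make the contrast between the two representations concrete, here is a minimal Python sketch under stated assumptions: the four snippets and the keyword lists in the hypothetical `PRAGMATIC_CLASSES` table are invented for illustration, and simple keyword counting stands in for the BP-network extraction described in Section II. It is not the authors' method. It builds a term-document matrix and a three-dimensional pragmatic vector (time, scene, role) for the same texts.

```python
from collections import Counter

# Toy conversational snippets (hypothetical data, for illustration only).
texts = [
    "good morning doctor my head hurts since yesterday",
    "waiter could we see the menu tonight please",
    "doctor will the test results be ready tomorrow morning",
    "the waiter brought our dinner to the table",
]


def term_document_matrix(docs):
    """Return (vocabulary, matrix) where matrix[t][d] counts term t in doc d."""
    vocab = sorted({w for doc in docs for w in doc.split()})
    counts = [Counter(doc.split()) for doc in docs]
    matrix = [[c[term] for c in counts] for term in vocab]
    return vocab, matrix


vocab, tdm = term_document_matrix(texts)
cells = len(vocab) * len(texts)
zeros = sum(row.count(0) for row in tdm)
print(f"term-document matrix: {len(vocab)} x {len(texts)}, {zeros / cells:.0%} zeros")

# Hypothetical keyword lists for the three kinds of pragmatic information.
PRAGMATIC_CLASSES = {
    "time": {"morning", "yesterday", "tonight", "tomorrow"},
    "scene": {"menu", "table", "dinner", "test"},
    "role": {"doctor", "waiter"},
}


def pragmatic_vector(doc):
    """Count keywords per pragmatic class, giving a 3-dimensional feature vector."""
    words = doc.split()
    return [sum(w in kws for w in words) for kws in PRAGMATIC_CLASSES.values()]


for doc in texts:
    print(pragmatic_vector(doc))
```

Even on these four short texts the term-document matrix is 27 × 4 and mostly zeros, while each pragmatic vector has only three entries. On a real corpus the vocabulary, and with it the sparsity, grows much larger.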

II. PRAGMATIC INFORMATION EXTRACTION

The direct method that extracts the time, scene and role

information from conversational texts is the name-entity

recognition technology. But it does not run well because of the

complexity of the oral context in conversational texts. And, the

pragmatics information is often implied by the context, rather

than showed directly by the words. At this time, the

name-entity recognition technology fails. Therefore, we use

the supervised machine learning model -- Back Propagation

Neural Network (BP network) to extract the pragmatics

information from conversational text. The BP network is a

kind of classical learning model, and it is often applied in

pattern recognition. Backpropagation is the generalization of

the Widrow-Hoff learning rule to multiple-layer networks and

nonlinear differentiable transfer functions. Input vectors and

the corresponding target vectors are used to train a network

until it can approximate a function, associate input vectors

with specific output vectors, or classify input vectors in an

appropriate way as defined by user. Standard backpropagation

is a gradient descent algorithm, as is the Widrow-Hoff learning

rule, in which the network weights are moved along the

negative of the gradient of the performance function[12]. The

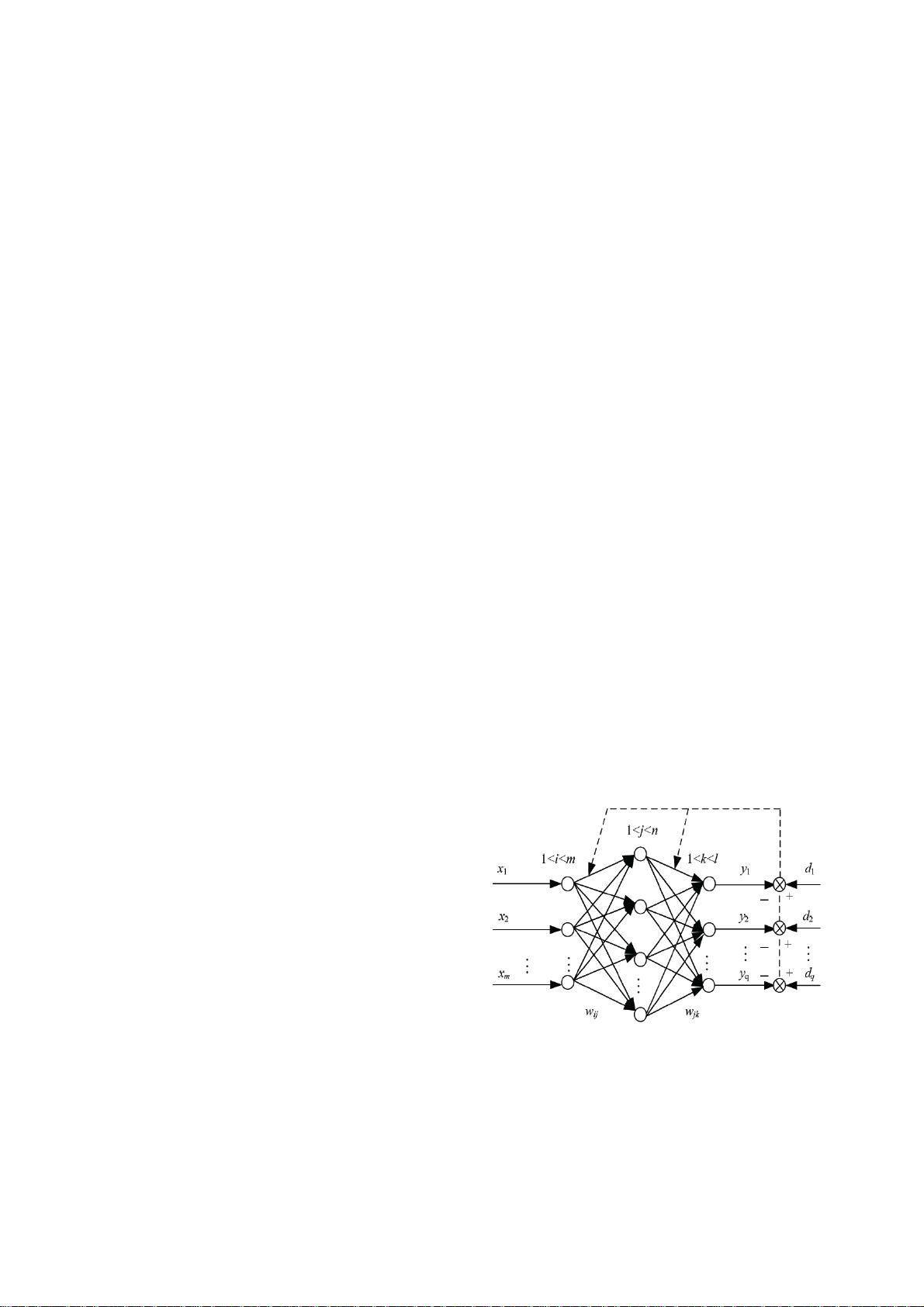

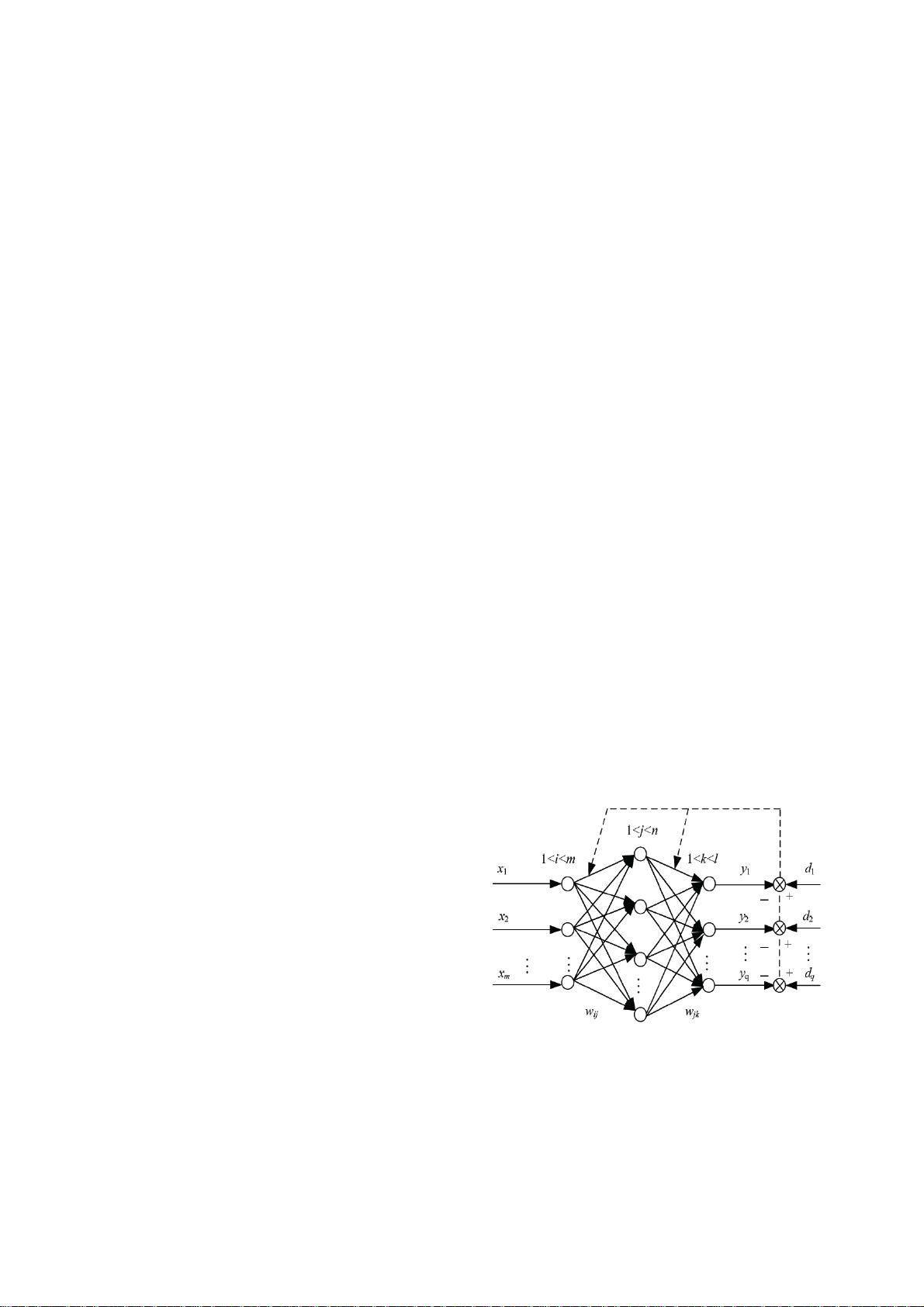

basic structure of BP neural network is shown as follows:

Figure 1. BP Neural Network (input layer, hidden layer, output layer)
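The gradient descent training described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: one hidden layer, the log-sigmoid (logsig) transfer function in both layers, the performance function E = ½ Σ (t − y)², and online updates with a fixed learning rate `lr`. The class name `BPNetwork`, the layer sizes, the learning rate and the toy data (binary keyword-indicator inputs mapped to two hypothetical scene labels) are all illustrative choices.

```python
import math
import random


def logsig(n):
    """Log-sigmoid transfer function: 1 / (1 + e^-n)."""
    return 1.0 / (1.0 + math.exp(-n))


class BPNetwork:
    """Hypothetical one-hidden-layer network trained by gradient descent backprop."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)

        def init():
            return rng.uniform(-0.5, 0.5)

        # w1[j][i]: input i -> hidden j; w2[k][j]: hidden j -> output k
        self.w1 = [[init() for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [init() for _ in range(n_hidden)]
        self.w2 = [[init() for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [init() for _ in range(n_out)]

    def forward(self, x):
        h = [logsig(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = [logsig(sum(w * hj for w, hj in zip(row, h)) + b)
             for row, b in zip(self.w2, self.b2)]
        return h, y

    def train_step(self, x, t, lr):
        """One backprop update on a single (input, target) pair; returns its error."""
        h, y = self.forward(x)
        # Output deltas: dE/dnet for E = 0.5 * sum((t - y)^2), with logsig' = y(1 - y)
        d_out = [(yk - tk) * yk * (1 - yk) for yk, tk in zip(y, t)]
        # Hidden deltas: propagate output deltas back through the current w2
        d_hid = [hj * (1 - hj) * sum(d_out[k] * self.w2[k][j] for k in range(len(d_out)))
                 for j, hj in enumerate(h)]
        # Move every weight and bias along the negative gradient
        for k, dk in enumerate(d_out):
            for j, hj in enumerate(h):
                self.w2[k][j] -= lr * dk * hj
            self.b2[k] -= lr * dk
        for j, dj in enumerate(d_hid):
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * dj * xi
            self.b1[j] -= lr * dj
        return 0.5 * sum((tk - yk) ** 2 for tk, yk in zip(t, y))

    def error(self, data):
        """Total performance function E over a data set."""
        return sum(0.5 * sum((tk - yk) ** 2 for tk, yk in zip(t, self.forward(x)[1]))
                   for x, t in data)


# Hypothetical keyword-indicator inputs with one-hot targets for two scenes.
data = [
    ([1, 0, 0, 1], [1, 0]),
    ([1, 1, 0, 0], [1, 0]),
    ([0, 0, 1, 1], [0, 1]),
    ([0, 1, 1, 0], [0, 1]),
]

net = BPNetwork(n_in=4, n_hidden=3, n_out=2, seed=1)
before = net.error(data)
for epoch in range(2000):
    for x, t in data:
        net.train_step(x, t, lr=0.5)
after = net.error(data)
print(f"error before {before:.3f}, after {after:.4f}")
```

Each call to `train_step` computes the output deltas, propagates them back to the hidden layer through the output weights as they were before the update, and only then moves every weight by `-lr` times its gradient. That final step is the gradient descent update shared by standard backpropagation and the Widrow-Hoff rule.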

Multilayer networks often use the log-sigmoid function (logsig) and the tan-sigmoid function (tansig) as transfer functions.
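For reference, the two transfer functions have the standard closed forms below, matching the definitions of MATLAB's `logsig` and `tansig` functions. Their derivatives can be written in terms of the function values themselves, which is what makes them cheap to evaluate during backpropagation. logsig maps any input into (0, 1) and tansig into (−1, 1).

```latex
\operatorname{logsig}(n) = \frac{1}{1 + e^{-n}}, \qquad
\operatorname{logsig}'(n) = \operatorname{logsig}(n)\,\bigl(1 - \operatorname{logsig}(n)\bigr)

\operatorname{tansig}(n) = \frac{2}{1 + e^{-2n}} - 1 = \tanh(n), \qquad
\operatorname{tansig}'(n) = 1 - \operatorname{tansig}^{2}(n)
```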

The diagrams of these two transfer functions are

___________________________________

978-1-4673-2197-6/12/$31.00 ©2012 IEEE