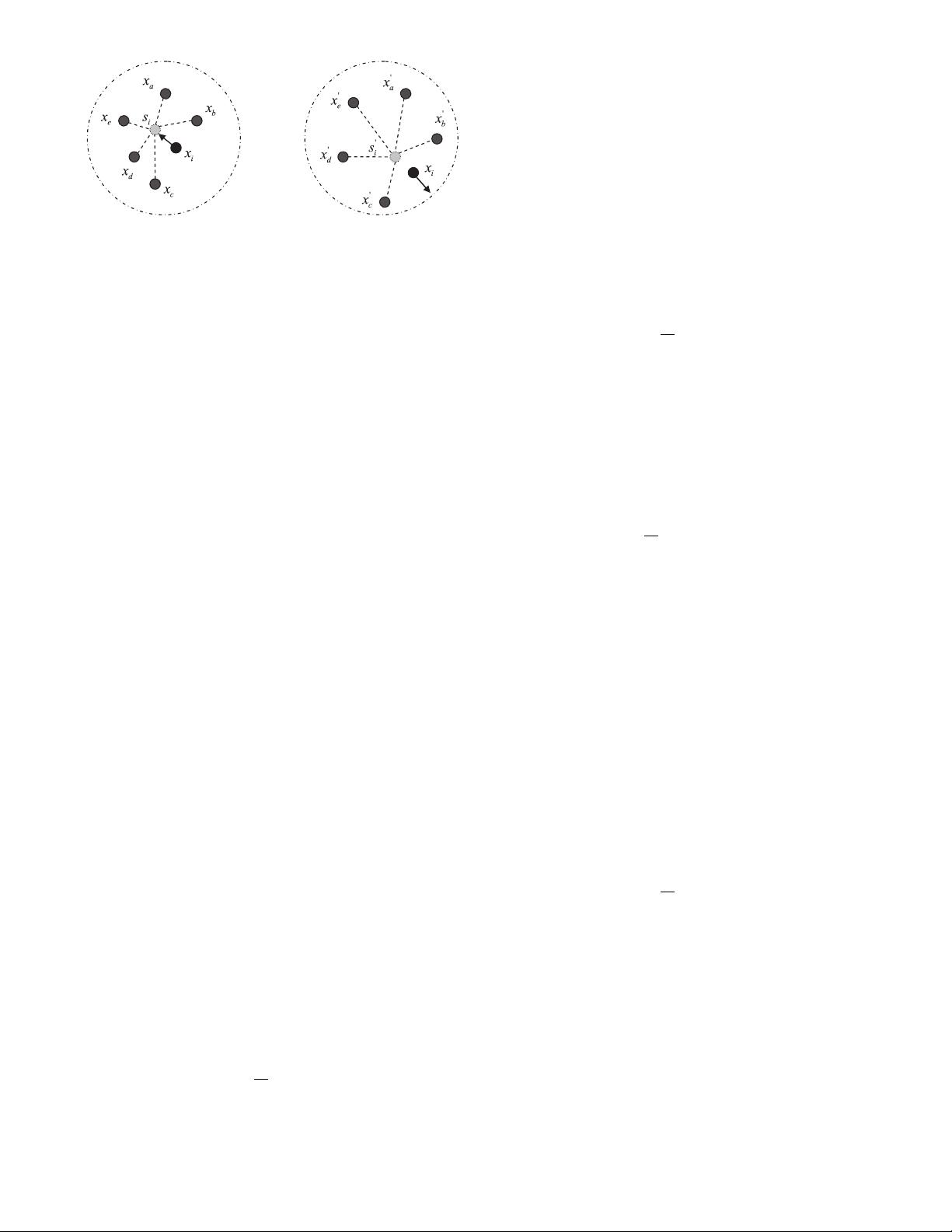

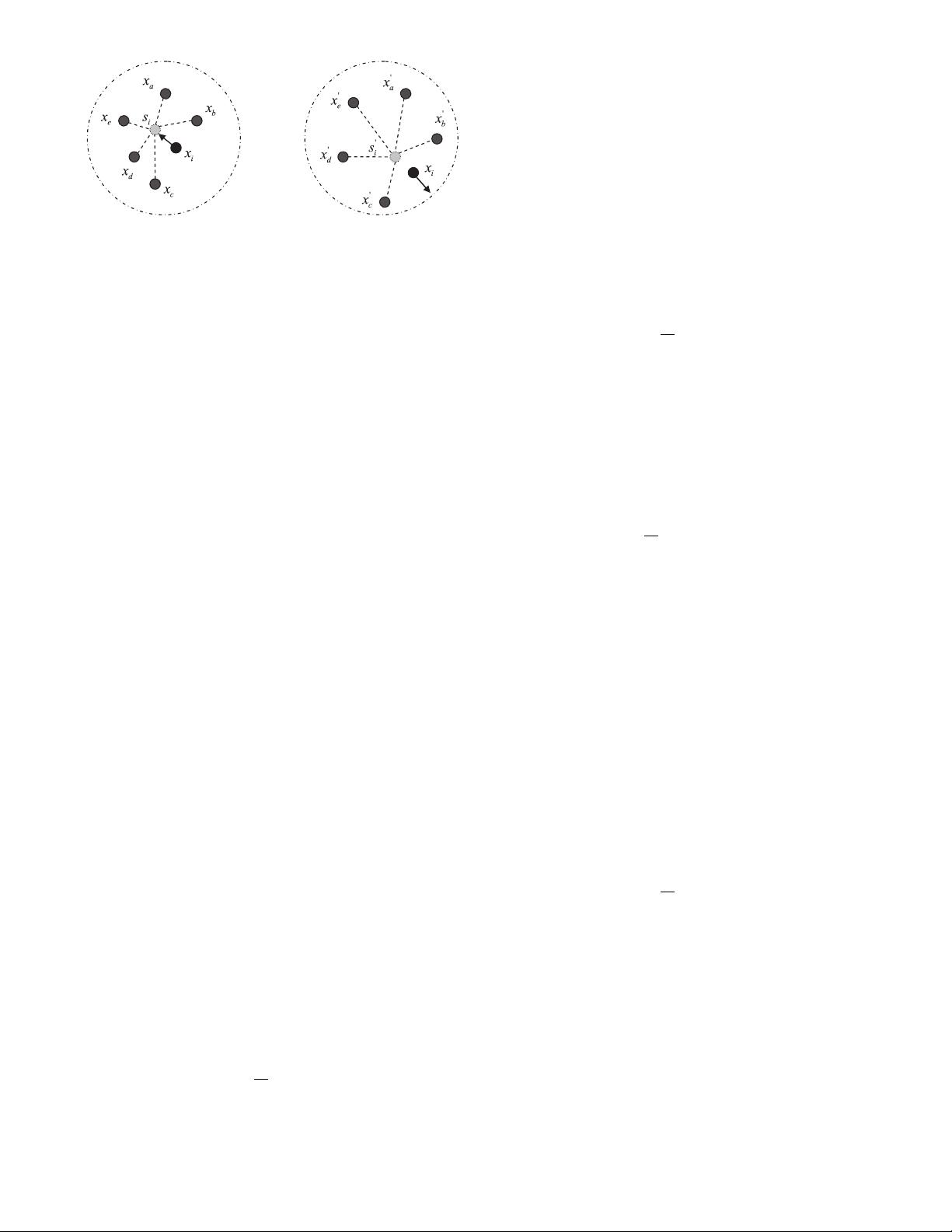

Fig. 1. Sample’s clustering direction in its (a) within-class neighborhood and (b) between-class neighborhood for NGCSE.

III. NEIGHBORHOOD GEOMETRIC CENTER SCALING EMBEDDING

To endow the samples with clear clustering directions and enhance the algorithm’s stability, we introduce geometric center scaling into the neighborhoods. First, we compute the geometric center of the within-class neighborhood and the geometric center of the between-class neighborhood for each sample. After that, we establish the spatial relationship between each sample $x_i$ and its within-class neighborhood geometric center $s_i$, as well as the one between each sample $x_i$ and its between-class neighborhood geometric center $s_i$. Then an embedding can be obtained by solving an optimization problem. When embedded into a low-dimensional subspace, each sample gets close to its within-class neighborhood geometric center yet is kept as far as possible from its between-class neighborhood geometric center, as shown in Fig. 1. In this way, the purpose of classification is achieved.

Let $M$ be a manifold embedded in $\mathbb{R}^n$, and let the training dataset be $\{x_i \in \mathbb{R}^n,\ i = 1, 2, \ldots, N\} \subset M$. The corresponding class labels are $\{y_i \in \{1, 2, \ldots, c\},\ i = 1, 2, \ldots, N\}$, where $N$ denotes the number of training samples and $c$ denotes the number of classes in the training dataset. Any subset of data points that belong to the same class is assumed to lie on a submanifold of $M$. In NGCSE, an embedding based on linear projection is constructed: $V \in \mathbb{R}^{n \times l}: x_i \in \mathbb{R}^n \rightarrow z_i = V^T x_i \in \mathbb{R}^l$ $(l \ll n)$. Via this embedding, each sample in the low-dimensional space moves toward its within-class neighborhood geometric center but keeps away from its between-class neighborhood geometric center.
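The linear map $z_i = V^T x_i$ can be illustrated with a minimal NumPy sketch. It assumes the samples are stored as rows of a hypothetical array `X`, so that `X @ V` stacks the projected vectors as rows. The random `V` is only a placeholder for illustration; in NGCSE, `V` is obtained by solving the optimization problem.

```python
import numpy as np

def project(X, V):
    """Map each row x_i of X (N x n) to z_i = V^T x_i, returned as rows (N x l)."""
    return np.asarray(X, dtype=float) @ np.asarray(V, dtype=float)

rng = np.random.default_rng(0)
X = rng.normal(size=(6, 4))   # N = 6 samples in R^4 (toy data)
V = rng.normal(size=(4, 2))   # placeholder projection onto R^2, not a learned one
Z = project(X, V)             # row i equals V.T @ X[i]
```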

We calculate the within-class neighborhood $N^{+}_{k_1}(i)$ and the between-class neighborhood $N^{-}_{k_2}(i)$ for each sample $x_i$. Here $N^{+}_{k_1}(i)$ indicates the set of the $k_1$ nearest neighbors of the sample $x_i$ in the same class, and $N^{-}_{k_2}(i)$ indicates the set of the $k_2$ nearest neighbors of the sample $x_i$ from different classes.
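One way the two neighborhoods could be computed is sketched below. This is an illustration under stated assumptions, not the authors' implementation: it uses Euclidean distance, excludes each sample from its own within-class neighborhood, and assumes every class has more than $k_1$ members. The function name `class_neighborhoods` is hypothetical.

```python
import numpy as np

def class_neighborhoods(X, y, k1, k2):
    """Return indices of the k1 same-class and k2 different-class nearest neighbors.

    X: (N, n) samples as rows; y: (N,) class labels.
    Output shapes: (N, k1) and (N, k2), each row indexing into X.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    # Squared Euclidean distance between every pair of samples.
    diff = X[:, None, :] - X[None, :, :]
    d2 = np.sum(diff ** 2, axis=-1)
    same = y[:, None] == y[None, :]

    # Within-class candidates: same label, excluding the sample itself (assumption).
    d_within = np.where(same, d2, np.inf)
    np.fill_diagonal(d_within, np.inf)
    # Between-class candidates: any sample with a different label.
    d_between = np.where(same, np.inf, d2)

    within = np.argsort(d_within, axis=1)[:, :k1]
    between = np.argsort(d_between, axis=1)[:, :k2]
    return within, between

X = np.array([[0, 0], [1, 0], [0, 1], [5, 5], [6, 5], [5, 6]], dtype=float)
y = np.array([0, 0, 0, 1, 1, 1])
within, between = class_neighborhoods(X, y, k1=2, k2=2)
```

The all-pairs distance matrix keeps the sketch short but costs $O(N^2)$ memory; a tree-based neighbor search would be a natural substitute for large $N$.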

The within-class neighborhood geometric center $s_i$ for each sample can be computed as

$$ s_i = \frac{1}{k_1} \sum_{m_1=1}^{k_1} x_{m_1} \tag{5} $$

where $x_{m_1} \in N^{+}_{k_1}(i)$.
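The averaging in (5) can be sketched in a few lines of NumPy. The sketch assumes the neighborhood of every sample is already available as a row of integer indices; the array `neighbors` below is a hand-written, hypothetical example rather than the output of a neighbor search.

```python
import numpy as np

def neighborhood_centers(X, neighbors):
    """Average the listed neighbors of each sample, as in Eq. (5).

    X: (N, n) samples as rows; neighbors: (N, k) integer indices into X.
    Returns S of shape (N, n); row i is the geometric center for sample i.
    """
    X = np.asarray(X, dtype=float)
    # X[neighbors] has shape (N, k, n); the mean over axis 1 averages the k neighbors.
    return X[np.asarray(neighbors)].mean(axis=1)

X = np.array([[0.0, 0.0], [2.0, 0.0], [0.0, 2.0], [2.0, 2.0]])
neighbors = np.array([[1, 2], [0, 3], [0, 3], [1, 2]])  # hypothetical neighborhoods, k1 = 2
S = neighborhood_centers(X, neighbors)                   # every row is [1.0, 1.0] here
```

The same function applies unchanged to a between-class neighborhood when it is given the corresponding index array.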

The within-class objective function is defined here as

$$ J_w(V) = \sum_{i,j} \| z_i - c_j \|^2 w_{ij} = \sum_{i,j} \| V^T x_i - V^T s_j \|^2 w_{ij} \tag{6} $$

where $W = [w_{ij}] \in \mathbb{R}^{N \times N}$ is the affinity weight matrix, which is defined in this instance as

$$ w_{ij} = \begin{cases} \exp\{-\| x_i - s_j \|^2\}, & \text{if } i = j \\ 0, & \text{otherwise.} \end{cases} \tag{7} $$

In addition,

$$ c_j = V^T s_j = \frac{1}{k_1} \sum_{m_1=1}^{k_1} V^T x_{m_1}. \tag{8} $$

$J_w(V)$ characterizes the spatial relationships between the samples and their respective within-class neighborhood geometric centers: the smaller the value of $J_w(V)$, the closer the samples are to their within-class neighborhood geometric centers.
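As a rough numerical illustration of (6), the sketch below evaluates the objective for a given projection. It takes the weight definition in (7) literally, so only the terms with $i = j$ contribute, and it assumes the centers have already been computed and stored as rows of a hypothetical array `S`. The function name `within_objective` is also an assumption.

```python
import numpy as np

def within_objective(X, S, V):
    """Evaluate sum_i w_ii * ||V^T x_i - V^T s_i||^2 with w_ii = exp(-||x_i - s_i||^2).

    X, S: (N, n) samples and their within-class neighborhood centers as rows.
    V: (n, l) projection matrix.
    """
    X, S, V = (np.asarray(a, dtype=float) for a in (X, S, V))
    gap = X - S
    w = np.exp(-np.sum(gap ** 2, axis=1))   # weights from (7), measured in the input space
    proj_gap = gap @ V                       # rows are z_i - c_i, using c_i = V^T s_i from (8)
    return float(np.sum(w * np.sum(proj_gap ** 2, axis=1)))

X = np.array([[1.0, 0.0], [0.0, 1.0]])
S = np.zeros((2, 2))                         # toy centers at the origin
V = np.array([[1.0], [0.0]])                 # project onto the first coordinate
J_w = within_objective(X, S, V)              # equals exp(-1) for this toy input
```

A projection that sends every sample onto its center gives a value of zero, which is the lower bound of this sum of weighted squared norms.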

The between-class neighborhood geometric center $s_i$ for each sample can be computed as

$$ s_i = \frac{1}{k_2} \sum_{m_2=1}^{k_2} x_{m_2} \tag{9} $$

where $x_{m_2} \in N^{-}_{k_2}(i)$.

The between-class objective function is defined in this instance as

$$ J_b(V) = \sum_{i,j} \| z_i - c_j \|^2 w_{ij} = \sum_{i,j} \| V^T x_i - V^T s_j \|^2 w_{ij} \tag{10} $$

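where $W = [w_{ij}] \in \mathbb{R}^{N \times N}$ is the penalty weight matrix, which is defined here as

$$ w_{ij} = \begin{cases} \exp\left(-\| x_i - s_j \|^2\right), & \text{if } i = j \\ 0, & \text{otherwise.} \end{cases} \tag{11} $$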

In addition,

$$ c_j = V^T s_j = \frac{1}{k_2} \sum_{m_2=1}^{k_2} V^T x_{m_2}. \tag{12} $$

$J_b(V)$ characterizes the spatial relationships between the samples and their respective between-class neighborhood geometric centers: the larger the value of $J_b(V)$, the farther the samples are from their between-class neighborhood geometric centers.
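To make the "larger is farther" reading concrete, the following toy sketch compares two candidate projection directions on a two-class example. It is only an illustration under assumptions: the data, the choice $k_2 = 2$ (so each between-class neighborhood is the entire other class), and the names `between_objective`, `V_across`, and `V_along` are all hypothetical, and the weights follow (11) as printed.

```python
import numpy as np

def between_objective(X, S_between, V):
    """Evaluate sum_i w_ii * ||V^T x_i - V^T s_i||^2 with w_ii = exp(-||x_i - s_i||^2)."""
    X, S_between, V = (np.asarray(a, dtype=float) for a in (X, S_between, V))
    gap = X - S_between
    w = np.exp(-np.sum(gap ** 2, axis=1))    # penalty weights from (11)
    proj_gap = gap @ V                        # rows are z_i - c_i, using (12)
    return float(np.sum(w * np.sum(proj_gap ** 2, axis=1)))

# Two classes separated along the first coordinate.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
y = np.array([0, 0, 1, 1])
# With k2 = 2 and two samples per class, each between-class center is the other class mean.
S_between = np.stack([X[y != label].mean(axis=0) for label in y])

V_across = np.array([[1.0], [0.0]])   # direction that separates the classes
V_along = np.array([[0.0], [1.0]])    # direction that mixes the classes
J_across = between_objective(X, S_between, V_across)
J_along = between_objective(X, S_between, V_along)
```

In this example `J_across` exceeds `J_along`, matching the intuition that a direction which keeps samples away from their between-class centers yields a larger $J_b(V)$.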

Referring to (6), we can infer that

$$ J_w(V) = \sum_{i,j} \| z_i - c_j \|^2 w_{ij} = \sum_{i,j} \| V^T x_i - V^T s_j \|^2 w_{ij} $$

182 IEEE TRANSACTIONS ON AEROSPACE AND ELECTRONIC SYSTEMS VOL. 50, NO. 1 JANUARY 2014