Extrinsic Calibration of a 2D Laser-Rangefinder and a Camera based on Scene Corners

Ruben Gomez-Ojeda, Jesus Briales, Eduardo Fernandez-Moral and Javier Gonzalez-Jimenez

Abstract: Robots are often equipped with 2D laser-rangefinders (LRFs) and cameras since the two sensors complement each other well. In order to correctly combine measurements from both sensors, it is necessary to know their relative pose, that is, to solve their extrinsic calibration. In this paper we present a new approach to this problem which relies on observations of orthogonal trihedrons, which are abundant as corners in human-made environments. Thus, the method does not require any specific pattern, which makes the calibration process faster and simpler to perform. The estimated relative pose has also proven to be very precise since it uses two different types of constraints, line-to-plane and point-to-plane, as a result of a richer configuration than previous proposals that rely on planar or V-shaped patterns. Our approach is validated with synthetic and real experiments, showing better performance than state-of-the-art methods.

I. INTRODUCTION

The combination of a laser-rangefinder (LRF) and a camera is common practice in mobile robotics. Some examples are the acquisition of urban models [1] [2], the detection of pedestrians [3], or the construction of semantic maps [4]. In order to effectively exploit measurements from both types of sensors, a precise estimation of their relative pose, that is, their extrinsic calibration, is required. This paper presents a method for such extrinsic calibration which relies on the observation of three perpendicular planes (an orthogonal trihedron), which can be found in any structured scene, for instance, in buildings. The idea of calibrating the sensors from elements of the environment was inspired by our previous work for RGB-D cameras [5] and LRFs [6]. In a nutshell, the calibration process is performed by first extracting the three plane normals from the projected junctions of the trihedron, and then imposing co-planarity between the scanned points and those planes.
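As a rough illustration of the co-planarity condition mentioned above, the following minimal sketch evaluates a point-to-plane residual. It assumes a plane written as n·x = d in the camera frame and a pose (R, t) that maps LRF coordinates to camera coordinates; the function name, the variable names and the numeric values are hypothetical and are not taken from the formulation of this paper.

```python
import numpy as np

def point_to_plane_residuals(R, t, points_lrf, normal, offset):
    """Signed distances from LRF points to a plane expressed in the camera frame.

    R (3x3), t (3,): assumed pose mapping LRF coordinates to camera coordinates.
    points_lrf (N, 3): scanned points expressed in the LRF frame.
    normal (3,), offset: plane n.x = d in the camera frame, with unit normal n.
    """
    points_cam = points_lrf @ R.T + t   # transform every point to the camera frame
    return points_cam @ normal - offset  # zero when a point lies on the plane

# Hypothetical example: a plane x = 2 m seen from the camera,
# and an LRF displaced 0.1 m along x with no rotation.
R = np.eye(3)
t = np.array([0.1, 0.0, 0.0])
normal = np.array([1.0, 0.0, 0.0])
offset = 2.0
points = np.array([[1.9, -0.5, 0.0],
                   [1.9, 0.5, 0.0]])

residuals = point_to_plane_residuals(R, t, points, normal, offset)
```

With the correct pose the residuals vanish (up to numerical precision); a calibration method would search for the (R, t) that drives such residuals towards zero over many observations.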

A. Related Work

The most precise and effective strategy to perform the extrinsic calibration between a camera and an LRF is to establish some kind of data association between the sensor measurements. For that, the intuitive approach is to detect the laser spot in the image, but this is rarely feasible since most LRFs employ invisible light beams. Hence, the common

The authors are with the Mapir Group of the Department of System Engineering and Automation, University of Málaga, Spain. E-mail: {rubengooj|jesusbriales}@gmail.com

This work has been supported by two projects: "GiraffPlus", funded by the EU under contract FP7-ICT-#288173, and "TAROTH: New developments toward a robot at home", funded by the Spanish Government and the "European Regional Development Fund ERDF" under contract DPI2011-25483.

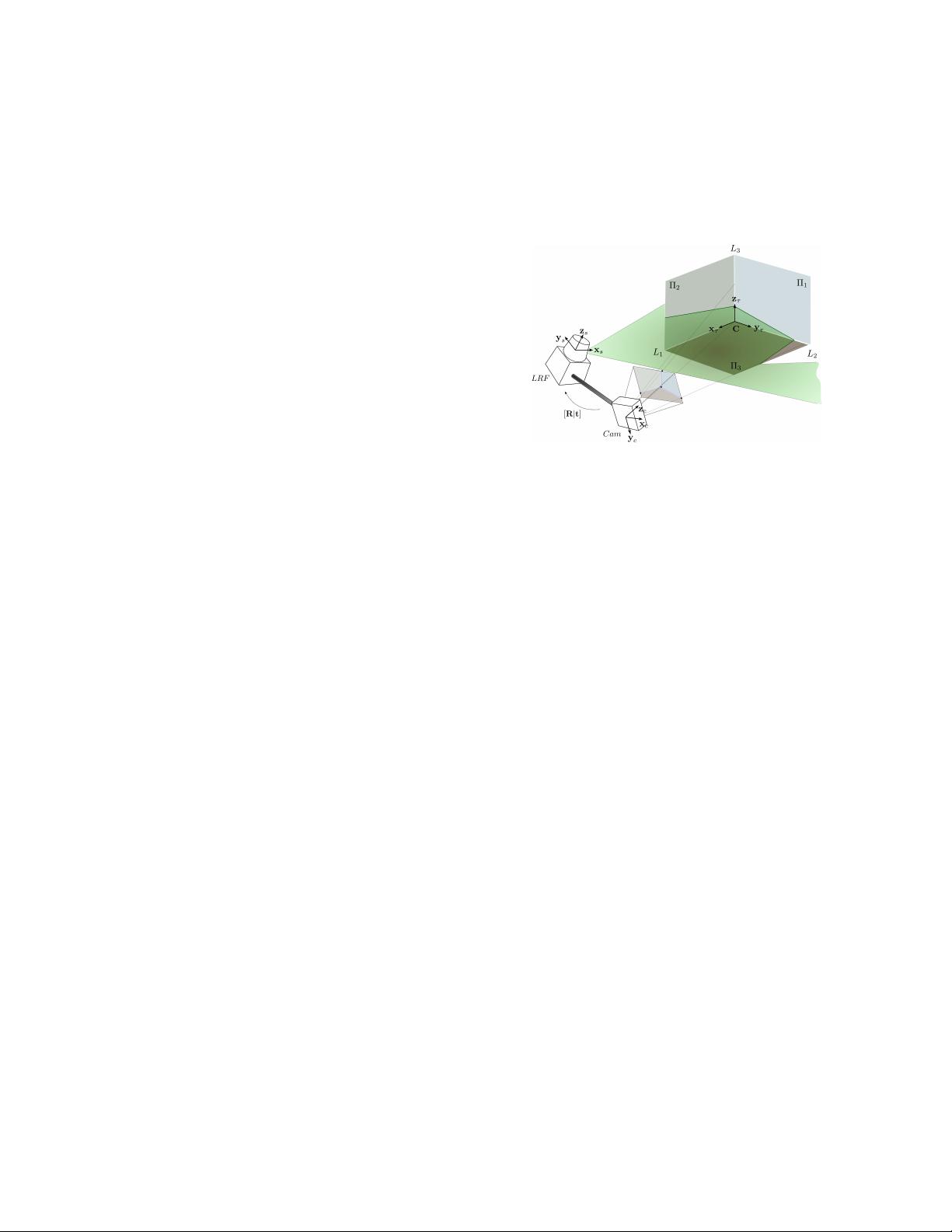

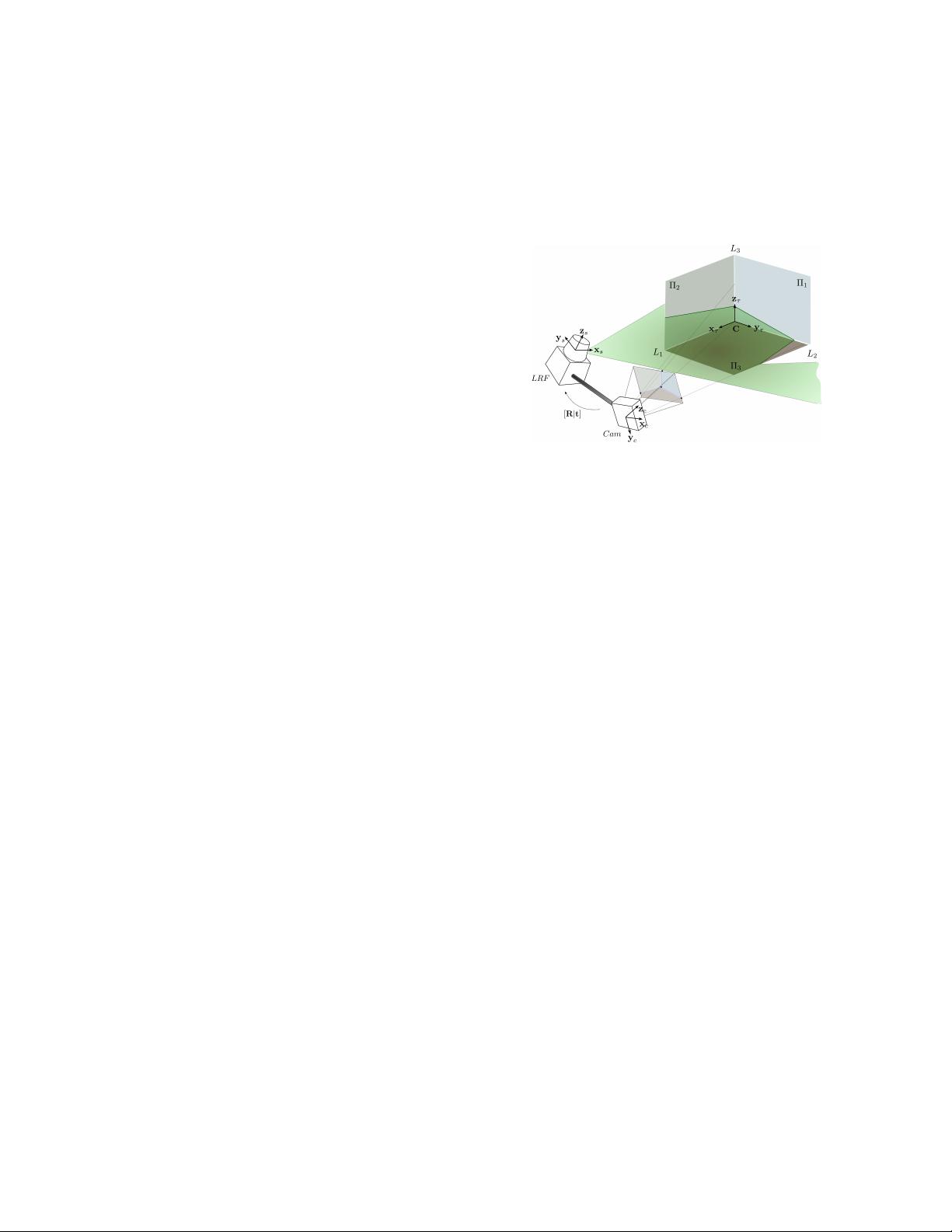

Fig. 1. Observation of a trihedron structure, which is defined by three orthogonal planes {Π₁, Π₂, Π₃} intersecting at three orthogonal lines {L₁, L₂, L₃}, by a rig formed by a 2D LRF and a camera.

practice is to establish geometric constraints from the association of different 3D features (e.g. points, lines and planes) observed simultaneously by both sensors. Depending on the nature of the detected features, two methodologies have been considered in the literature. The first one employs point-to-line constraints, establishing correspondences between some identifiable scanned points and line segments in the image. A typical calibration target for this technique is the V-shaped pattern proposed by Wasielewski and Strauss [7]. Their approach computes the intersection point of the LRF scan plane with the V-shaped target, and then minimizes the distance from the projected point to the line segment detected in the image. In general, this procedure requires a large number of observations to have a well-determined problem and to reduce the error introduced by the mismatch between the scan point and the observed line. To overcome this limitation, in [8] the number of point-to-line correspondences in each observation is increased to three by introducing virtual end-points, and the effect of outliers is reduced with a Huber weighting function.
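The point-to-line idea described above can be sketched generically as follows. This is only an illustration under stated assumptions, not the exact formulation of [7] or [8]: it assumes a pinhole camera with intrinsic matrix K, an image line given in homogeneous form (a, b, c), and the standard Huber weight used in iteratively reweighted least squares. All function names, the threshold `delta` and the numeric values are hypothetical.

```python
import numpy as np

def project(K, R, t, p_lrf):
    """Project a 3D point given in the LRF frame into the image (pinhole model)."""
    p_cam = R @ p_lrf + t   # LRF frame -> camera frame (assumed pose R, t)
    uvw = K @ p_cam         # homogeneous pixel coordinates
    return uvw[:2] / uvw[2]

def point_line_distance(line, pixel):
    """Signed distance in pixels from a pixel to the line a*u + b*v + c = 0."""
    a, b, c = line
    return (a * pixel[0] + b * pixel[1] + c) / np.hypot(a, b)

def huber_weight(residual, delta):
    """Standard Huber weight: 1 inside the threshold, delta/|r| outside."""
    r = abs(residual)
    return 1.0 if r <= delta else delta / r

# Hypothetical example values.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
R, t = np.eye(3), np.zeros(3)
p = np.array([0.2, 0.0, 2.0])     # point already expressed so that depth is 2 m
line = np.array([1.0, 0.0, -365.0])  # vertical image line u = 365

pixel = project(K, R, t, p)
dist = point_line_distance(line, pixel)
weight = huber_weight(dist, delta=2.0)
```

Residuals larger than `delta` receive a weight below one, so a mismatched correspondence contributes less to the cost than it would under plain least squares.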

A different strategy is the one proposed by Zhang and Pless [9], which makes use of a planar checkerboard pattern. As in the camera calibration method in [10], they first estimate the relative pose between the camera and the pattern in each observation. Then, they impose point-to-plane constraints between the laser points and the pattern plane to obtain a linear solution, which is employed as the initial value in a final non-linear optimization process. This approach has two problems: the initial value may not be a valid pose, since there is no guarantee that the rotation R ∈ SO(3), and it is often a poor guess which leads to a local minimum. These disadvantages are addressed in [11], where the authors reformulate the estimation as a linear Perspective

2015 IEEE International Conference on Robotics and Automation (ICRA)

Washington State Convention Center

Seattle, Washington, May 26-30, 2015

978-1-4799-6922-7/15/$31.00 ©2015 IEEE