TABLE I

MEANINGS OF THE SYMBOLS

S

data

Data size of disk I/O in a compaction

S

sst

Size of an SSTable

S

dt

Size of a DTable

S

metadata

Metadata size in a compaction

RA/WA R/W amplification from LSM-tree or LWC-tree

ARA/AWA Auxiliary R/W amplification from SMR drives

MRA/MWA Overall R/W amplification, MWA=MA × AWA

L

0

L

1

L

n

L

2

L

0

L

1

L

n

………

L

2

…

read

①

………

…

③

(a)before compaction

(b)after compaction

Memory

Disk

②

sort

write

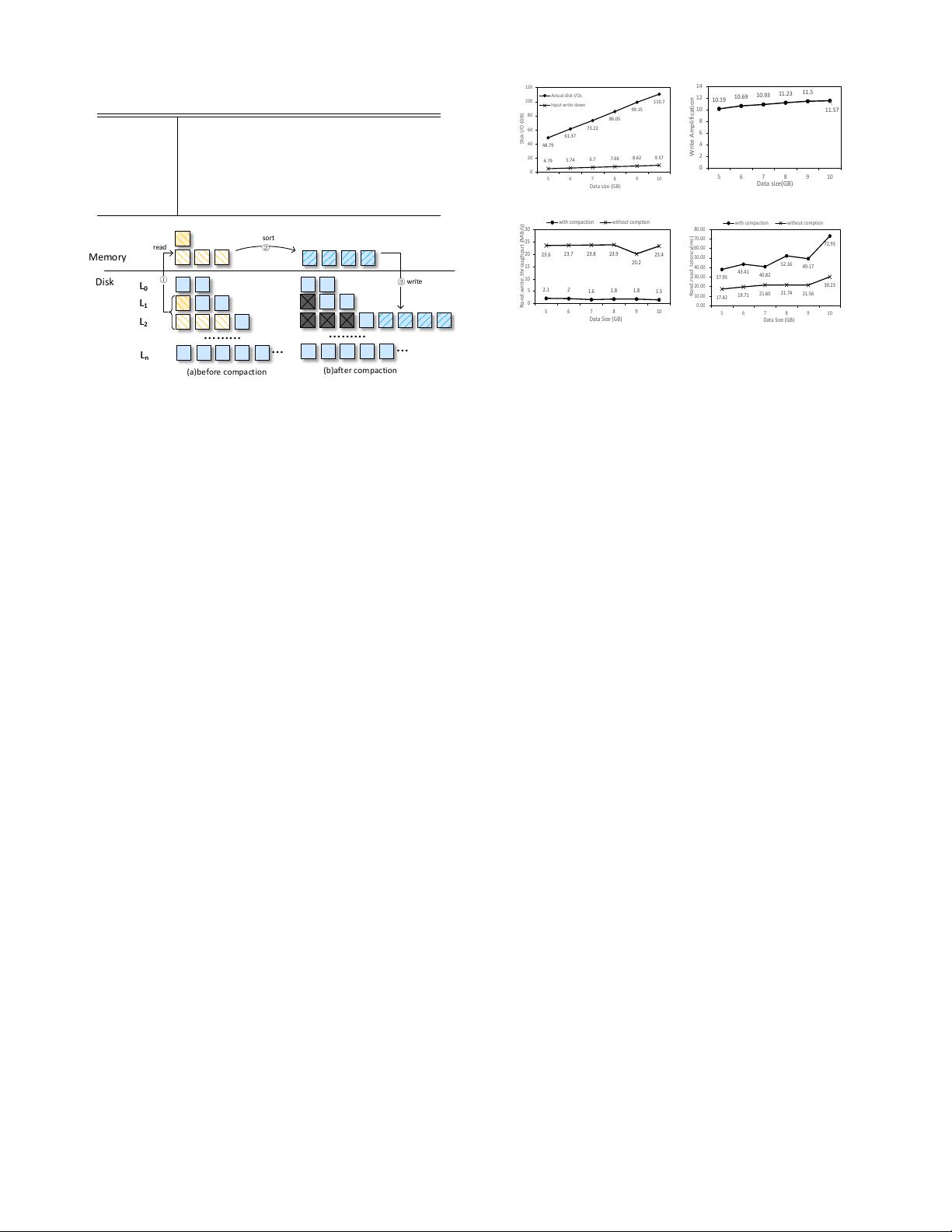

Fig. 1. The compaction procedure of LSM-trees. The compaction involves

read, sort and rewrite multiple SSTables, causing serious I/O amplification.

KV items, which introduces excessive writes and represents a

performance bottleneck of LSM-tree based key-value stores.

More specifically, a single compaction has three steps. For

the convenience of exposition, let us call the SSTable selected

to compact in level L

i

as a victim SSTable and the SSTables

whose key ranges fall in the key range of the victim SSTable

in the next level L

i+1

as overlapped SSTables. To start a

compaction, LevelDB first selects victim SSTables and the

overlapped SSTables according to the score of each level,

and then it decides whether more victim SSTables in L

i

can be added into the compaction by searching the SSTables

whose key ranges fall in the ranges of overlapped SSTables.

Figure 1 pictorially shows the three steps of a compaction

procedure of LSM-trees. As it is shown, during the compaction

procedure, LevelDB first reads the victim SSTable in level L

i

and overlapped SSTables in level L

i+1

. After that, LevelDB

merges and sorts SSTables that have been fetched into memory

by the first step. Finally, LevelDB writes the newly generated

SSTables to disk. According to the size limit of each level

in LevelDB, the size of L

i+1

is 10 times that of L

i

and this

size factor is called amplification factor (AF). Due to this size

relationship, on average a victim SSTable in level L

i

has AF

overlapped SSTables in level L

i+1

and thus the total data size

involved in a compaction is given by Equation 1, where S

sst

represents the size of an SSTable and S

data

represents the

data size of disk I/O in a compaction. The 2× multiplication

indicates both read and write the total data. With a large

dataset, the ultimate amplification could be over 50 (10 for

each gap between L

1

to L

6

), as it is possible for any newly

generated SSTable to migrate from L

0

to L

6

through a series

of compaction steps [1].

S

data

= (AF + 1) × S

sst

× 2 (1)

To quantitatively measure the degree of amplification and

performance degradation due to compaction in practice with

LevelDB, we carry out the following experiments with the

48.79

61.37

73.22

86.05

99.15

110.7

4.79

5.74

6.7

7.66

8.62

9.57

0

20

40

60

80

100

120

5 6 7 8 9 10

Disk I/O (GB)

Data size (GB)

Actual disk I/Os

Input write down

(a) I/O comparison

10.19

10.69

10.93

11.23

11.5

11.57

0

2

4

6

8

10

12

14

5 6 7 8 9 10

Write Amplification

Data size(GB)

(b) write amplification

2.1

2

1.6

1.8 1.8

1.5

23.6

23.7

23.8

23.9

20.2

23.4

0

5

10

15

20

25

30

5 6 7 8 9 10

Rand.write.throughput (MB/s)

Data Size (GB)

with compaction without comption

(c) random write

37.95

43.41

40.82

52.16

49.17

72.93

17.42

19.71

21.60

21.74

21.56

30.23

0.00

10.00

20.00

30.00

40.00

50.00

60.00

70.00

80.00

5 6 7 8 9 10

Rand.read latency(ms)

Data Size (GB)

with compaction without comption

(d) random read

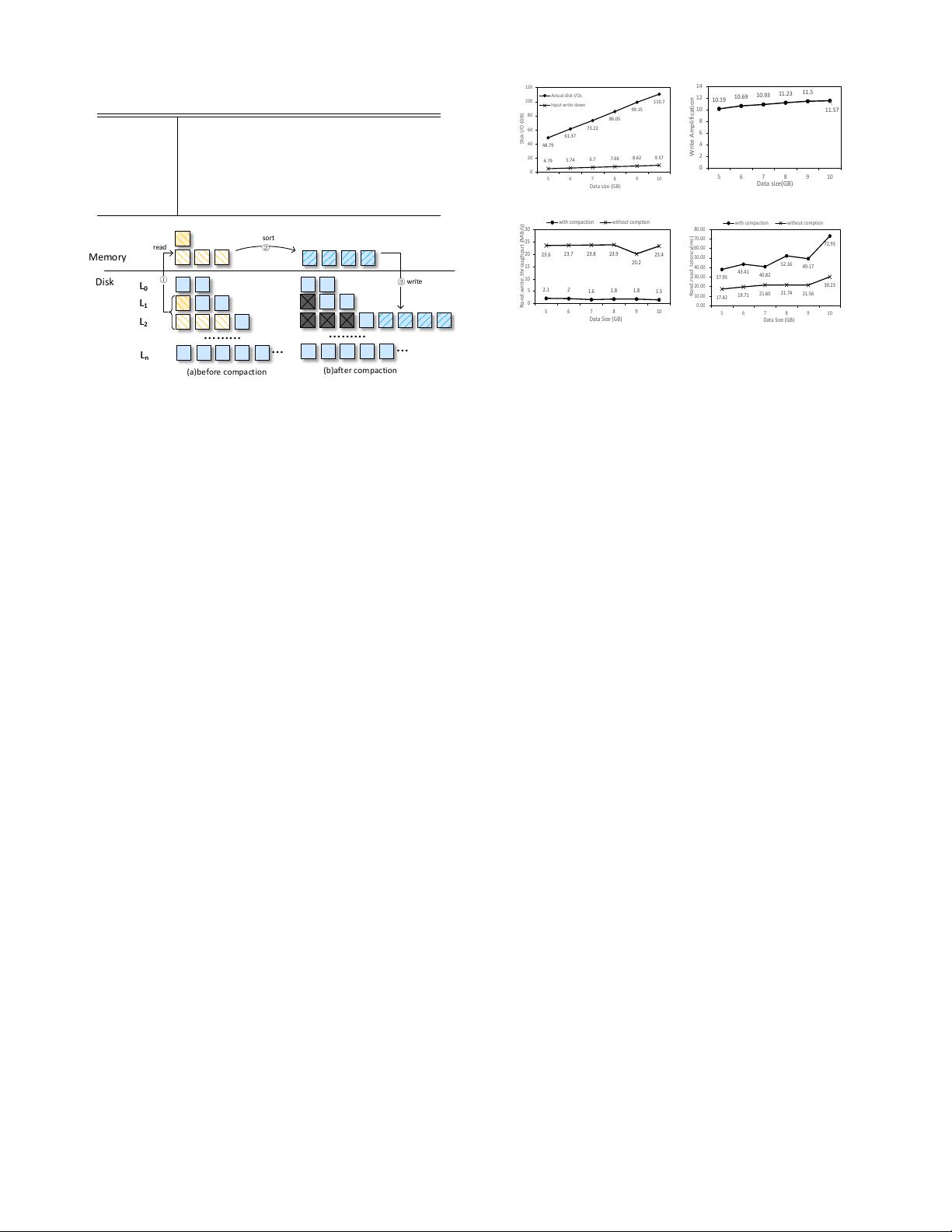

Fig. 2. Write amplification and performance degradation due to

compactions. 2(a): the comparison between write data size and actual disk

I/O size; 2(b): the write amplification of different write data sizes; 2(c): the

random write performance with and without compaction; 2(d):the random

read performance with and without compaction.

same configuration of LevelDB on HDDs in section V. First,

we randomly load databases of size 5GB, 6GB, 7GB, 8GB,

9GB and 10GB, respectively. The value size is 4KB by default.

Figure 2(a) shows the relationship between input data size and

actual disk I/O data size. As can be seen, in all cases, LevelDB

incurs significant write amplification. For example, writing

10GB input data results in 110.7GB actual disk write and the

corresponding write amplification ratio is 11.57 as shown in

Figure 2(b). Based on Figure 2(a), Figure 2(b) calculates all

the write amplification factors and a minimum value of 10.19

is observed. Second, to evaluate the random I/O performance

with compaction or without compaction, we use 6 different

database sizes for the initial random loads as well and random-

ly query 1 million keys following a uniform distribution. For

the I/O performance without compaction, we trigger a manual

compaction immediately after finishing loading the database

and before starting performing I/Os to eliminate concurrent

compaction and mitigate the compaction interferences. Figure

2(c) and Figure2(d) show the performance degradation caused

by compaction to random write and random read, respectively.

The write and read throughputs without compaction on average

are 13.01× and 2.23× the throughputs with compaction,

respectively. We design the LWC-tree mainly to eliminate the

amplification caused by compactions in LSM-trees.

III. LWC-TREE DESIGN

As demonstrated in the previous section, the conventional

LSM-tree incurs excessive I/O amplification when used as the

key-value store index. We design the LWC-tree to alleviate the

I/O amplification caused by compactions and aim to achieve

high write throughput without sacrificing read performance.

As a variant of LSM-tree, LWC-tree is also composed of

one memory-resident component and multiple disk-resident

components. Key-value items are written to the memory

component first, then dumped to disk, and finally compacted

to lower levels. We keep the sorted tables and the multi-level