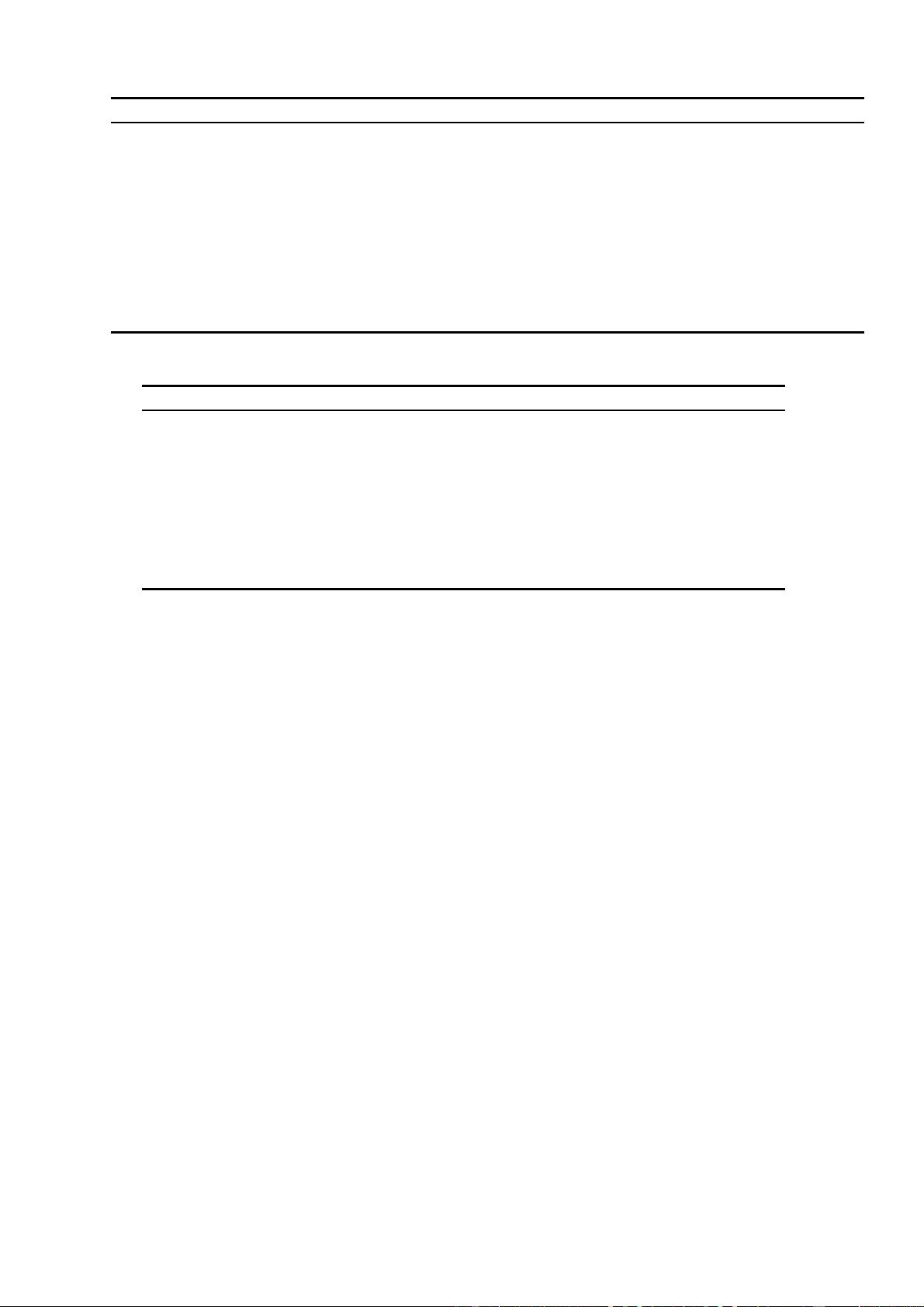

| Method | Architecture | Encoder | Decoder | Objective | Dataset |
|---|---|---|---|---|---|
| ELMo | LSTM | ✗ | ✓ | LM | 1B Word Benchmark |
| GPT | Transformer | ✗ | ✓ | LM | BookCorpus |
| GPT2 | Transformer | ✗ | ✓ | LM | Web pages starting from Reddit |
| BERT | Transformer | ✓ | ✗ | MLM & NSP | BookCorpus & Wiki |
| RoBERTa | Transformer | ✓ | ✗ | MLM | BookCorpus, Wiki, CC-News, OpenWebText, Stories |
| ALBERT | Transformer | ✓ | ✗ | MLM & SOP | Same as RoBERTa and XLNet |
| UniLM | Transformer | ✓ | ✗ | LM, MLM, seq2seq LM | Same as BERT |
| ELECTRA | Transformer | ✓ | ✗ | Discriminator (o/r) | Same as XLNet |
| XLNet | Transformer | ✗ | ✓ | PLM | BookCorpus, Wiki, Giga5, ClueWeb, Common Crawl |
| XLM | Transformer | ✓ | ✓ | CLM, MLM, TLM | Wiki, parallel corpora (e.g. MultiUN) |
| MASS | Transformer | ✓ | ✓ | Span Mask | WMT News Crawl |
| T5 | Transformer | ✓ | ✓ | Text Infilling | Colossal Clean Crawled Corpus |
| BART | Transformer | ✓ | ✓ | Text Infilling & Sent Shuffling | Same as RoBERTa |

Table 1: A comparison of popular pre-trained models.

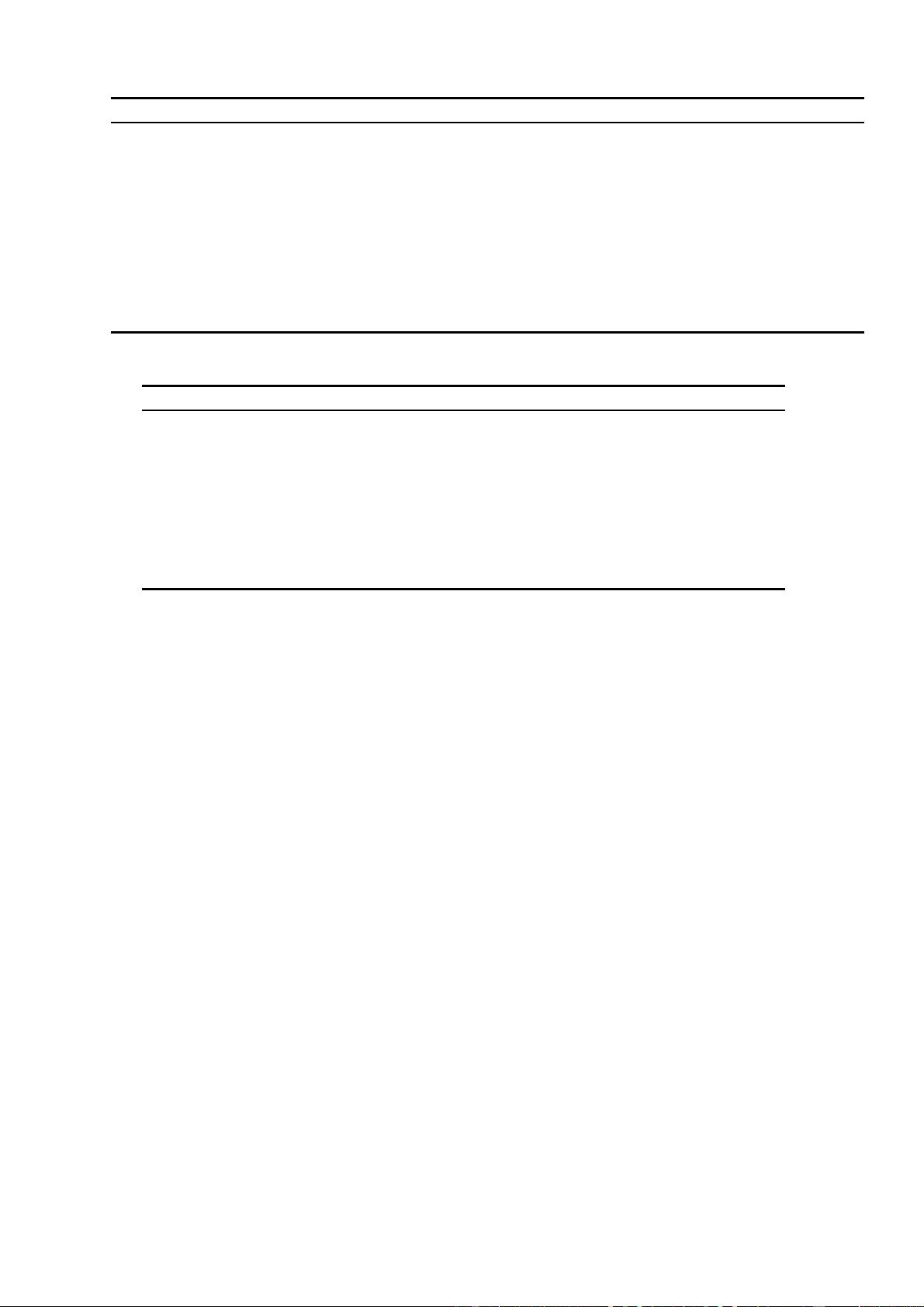

| Objective | Inputs | Targets |
|---|---|---|
| LM | [START] | I am happy to join with you today |
| MLM | I am [MASK] to join with you [MASK] | happy today |
| NSP | Sent1 [SEP] Next Sent or Sent1 [SEP] Random Sent | Next Sent/Random Sent |
| SOP | Sent1 [SEP] Sent2 or Sent2 [SEP] Sent1 | in order/reversed |
| Discriminator (o/r) | I am thrilled to study with you today | o o r o r o o o |
| PLM | happy join with | today am I to you |
| seq2seq LM | I am happy to | join with you today |
| Span Mask | I am [MASK] [MASK] [MASK] with you today | happy to join |
| Text Infilling | I am [MASK] with you today | happy to join |
| Sent Shuffling | today you am I join with happy to | I am happy to join with you today |
| TLM | How [MASK] you [SEP] [MASK] vas-tu | are Comment |

Table 2: Pre-training objectives and their input-output formats.
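The sketch below makes these input-output formats concrete by rebuilding several rows of Table 2 from the example sentence. It is a toy illustration that rests on three assumptions. First, tokens are whitespace-split words rather than subword units. Second, the masked positions and spans are fixed by hand to match the table; actual pre-training samples them at random, and BERT, for instance, selects about 15% of tokens. Third, all function names are hypothetical.

```python
from typing import List, Set, Tuple

MASK = "[MASK]"
Pair = Tuple[List[str], List[str]]  # (inputs, targets)

# Example sentence from Table 2, split on whitespace (not subword tokens).
SENTENCE = "I am happy to join with you today".split()


def lm_pair(tokens: List[str]) -> Pair:
    # LM: the model starts from [START] and predicts every token left to right.
    return ["[START]"], list(tokens)


def mlm_pair(tokens: List[str], positions: Set[int]) -> Pair:
    # MLM: each chosen position becomes [MASK]; targets are the original tokens.
    inputs = [MASK if i in positions else tok for i, tok in enumerate(tokens)]
    targets = [tokens[i] for i in sorted(positions)]
    return inputs, targets


def span_mask_pair(tokens: List[str], start: int, end: int) -> Pair:
    # Span Mask: one [MASK] per token of the contiguous span tokens[start:end].
    inputs = tokens[:start] + [MASK] * (end - start) + tokens[end:]
    return inputs, tokens[start:end]


def text_infilling_pair(tokens: List[str], start: int, end: int) -> Pair:
    # Text Infilling: the whole span is replaced by a single [MASK],
    # so the model must also infer how many tokens are missing.
    inputs = tokens[:start] + [MASK] + tokens[end:]
    return inputs, tokens[start:end]


def discriminator_labels(original: List[str], corrupted: List[str]) -> List[str]:
    # Discriminator (o/r): "o" where a token is original, "r" where it was replaced.
    return ["o" if a == b else "r" for a, b in zip(original, corrupted)]
```

The following calls reproduce specific rows of Table 2:

- `mlm_pair(SENTENCE, {2, 7})` gives the MLM row (`I am [MASK] to join with you [MASK]` with targets `happy today`).
- `span_mask_pair(SENTENCE, 2, 5)` gives the Span Mask row.
- `text_infilling_pair(SENTENCE, 2, 5)` gives the Text Infilling row.
- Comparing the original sentence with `I am thrilled to study with you today` gives `o o r o r o o o`.

The only difference between Span Mask and Text Infilling is whether the number of [MASK] tokens reveals the span length.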

$[\mathbf{x}_k; \mathbf{ELMo}_k^{task}]$, before feeding them to higher layers.
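The concatenation $[\mathbf{x}_k; \mathbf{ELMo}_k^{task}]$ can be sketched in a few lines. The sketch assumes the definition from the ELMo paper (Peters et al., 2018), which is not restated in this excerpt: $\mathbf{ELMo}_k^{task} = \gamma^{task} \sum_{j} s_j^{task} \mathbf{h}_{k,j}^{LM}$. In this formula:

- $\mathbf{h}_{k,j}^{LM}$ is the biLM representation of token $k$ at layer $j$;
- the weights $s^{task}$ are softmax-normalized;
- $\gamma^{task}$ is a learned scalar.

Plain Python lists stand in for vectors, and the function names and toy values are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(logits: List[float]) -> List[float]:
    # Numerically stable softmax used to normalize the layer weights s_j.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def elmo_task_vector(layer_states: List[Vector], s_logits: List[float], gamma: float) -> Vector:
    # ELMo_k^task = gamma * sum_j softmax(s)_j * h_{k,j} for a single token k.
    # layer_states[j] is the biLM representation h_{k,j} of token k at layer j.
    weights = softmax(s_logits)
    dim = len(layer_states[0])
    return [gamma * sum(w * h[d] for w, h in zip(weights, layer_states)) for d in range(dim)]


def enhanced_input(x_k: Vector, elmo_k: Vector) -> Vector:
    # [x_k; ELMo_k^task]: concatenate the task model's own token vector
    # with the ELMo vector before feeding the result to higher layers.
    return x_k + elmo_k
```

For example, take three layers `[[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]`, equal logits `[0.0, 0.0, 0.0]` and `gamma=2.0`. Each layer receives weight 1/3, so the ELMo vector is approximately `[2.0, 2.0]`. Concatenating it with a one-dimensional `x_k = [0.5]` then yields a three-dimensional input.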

The effectiveness of ELMo is evaluated on six NLP problems, including question answering, textual entailment and sentiment analysis.

GPT, GPT2, and Grover. GPT (Radford et al., 2018) adopts a two-stage learning paradigm: (a) unsupervised pre-training with a language modelling objective and (b) supervised fine-tuning. The goal is to learn universal representations that transfer to a wide range of downstream tasks. To this end, GPT trains its language model on the BookCorpus dataset (Zhu et al., 2015), which contains more than 7,000 books from various genres. The language model is implemented with the Transformer architecture (Vaswani et al., 2017). Compared with alternatives such as recurrent networks, the Transformer has been shown to capture global dependencies in the input better. It also performs strongly on a range of sequence learning tasks, such as machine translation (Vaswani et al., 2017) and document generation (Liu et al., 2018). To apply GPT to inputs consisting of multiple sequences during fine-tuning, GPT uses task-specific input adaptations motivated by traversal-style approaches (Rocktäschel et al., 2015). These approaches pre-process each text input into a single contiguous sequence of tokens using special tokens: [START] (the start of a sequence), [DELIM] (delimiting two sequences from the text input) and [EXTRACT] (the end of a sequence). With a pre-trained Transformer, GPT outperforms task-specific architectures on 9 of the 12 tasks studied.
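The traversal-style input adaptation can be sketched as follows. Here the special tokens are plain strings, whereas in GPT they are added tokens with their own embeddings; the helper names are hypothetical. The layouts follow the input transformations described by Radford et al. (2018):

- an entailment pair keeps its premise/hypothesis order;
- a similarity pair is encoded in both orders, since it has no inherent ordering;
- for multiple choice, the context is paired with each candidate answer.

```python
from typing import List

START, DELIM, EXTRACT = "[START]", "[DELIM]", "[EXTRACT]"


def pack(first: List[str], second: List[str]) -> List[str]:
    # Turn a structured two-part input into one contiguous token sequence.
    return [START] + first + [DELIM] + second + [EXTRACT]


def entailment_input(premise: List[str], hypothesis: List[str]) -> List[str]:
    # Entailment: premise and hypothesis in a fixed order.
    return pack(premise, hypothesis)


def similarity_inputs(text_a: List[str], text_b: List[str]) -> List[List[str]]:
    # Similarity has no inherent order, so both orderings are built;
    # each sequence is then processed independently by the model.
    return [pack(text_a, text_b), pack(text_b, text_a)]


def multiple_choice_inputs(context: List[str], answers: List[List[str]]) -> List[List[str]]:
    # Multiple choice: one packed sequence per candidate answer.
    return [pack(context, answer) for answer in answers]
```

For instance, `entailment_input(["it", "rains"], ["it", "is", "wet"])` returns `['[START]', 'it', 'rains', '[DELIM]', 'it', 'is', 'wet', '[EXTRACT]']`. Because every task is reduced to token sequences in this way, the pre-trained Transformer can be fine-tuned with only minimal changes to its architecture.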

GPT2 (Radford et al., 2019) mainly follows the architecture of GPT and trains a language model on a dataset as large and diverse as possible to learn from varied domains and contexts. To do so, Radford et al. (2019) create a new dataset of millions of web pages, named WebText, by scraping outbound links from Reddit. The authors argue that a language model trained on large-scale unlabelled corpora begins to learn some common supervised NLP tasks, such as question answering, machine translation and summarization, without any explicit supervision signal. To validate this, GPT2 is tested on ten datasets (e.g. Children's Book Test (Hill et al., 2015), LAMBADA (Paperno et al., 2016) and CoQA (Reddy et al.,