An Application of Panoramic Mosaic in UAV Aerial Image

Jinwen Hu¹, Yihui Zhou¹, Chunhui Zhao¹, Quan Pan¹, Kun Zhang², Zhao Xu²

Abstract— Panoramic mosaic plays an important role in computer vision,
robot navigation and virtual reality. This paper summarizes the process of
panoramic stitching and proposes a coordinate-transformation stitching
method based on down-sampling. To reduce processing time, images are
compressed by down-sampling before processing, and the splicing is then
carried out at the corresponding points of the original images. In
particular, the middle image is chosen as the reference image and the
others are spliced directly onto it using the transformation matrix. To
account for the illumination of UAV aerial images, only one overlapping
region of the adjacent images is retained during fusion. In the
experiments, images with different resolutions are tested. The results show
good efficiency and low time consumption.

I. INTRODUCTION

In recent years, the demand for panoramic images has become increasingly
urgent with the development of computer technology. As an emerging
technology, panoramic mosaic has attracted the attention of many
researchers, and it has been applied in many areas such as geological
examination, military surveillance, minimally invasive surgery and video
conferencing.

Panoramic stitching [1] is the process of seamlessly aligning and
combining multiple images with overlapping fields of view to form a
high-resolution output image. In much of the literature [2]–[4], image
mosaic is treated as a multi-image matching problem in which invariant
local features are used to find matches between all of the images, which
incurs high computational complexity. In most real applications with a
single camera, however, images are taken in order and every two adjacent
images share an overlapping area.

The rest of this paper is organized as follows. Section II summarizes the
process and general problems of panoramic mosaic. In Section III, a
panoramic mosaic algorithm is proposed for UAV aerial images. The
experimental results are shown and analyzed in Section IV. Finally,
Section V concludes the paper and outlines future work.

II. OVERVIEW OF PANORAMIC MOSAIC

A. Process of panoramic mosaic

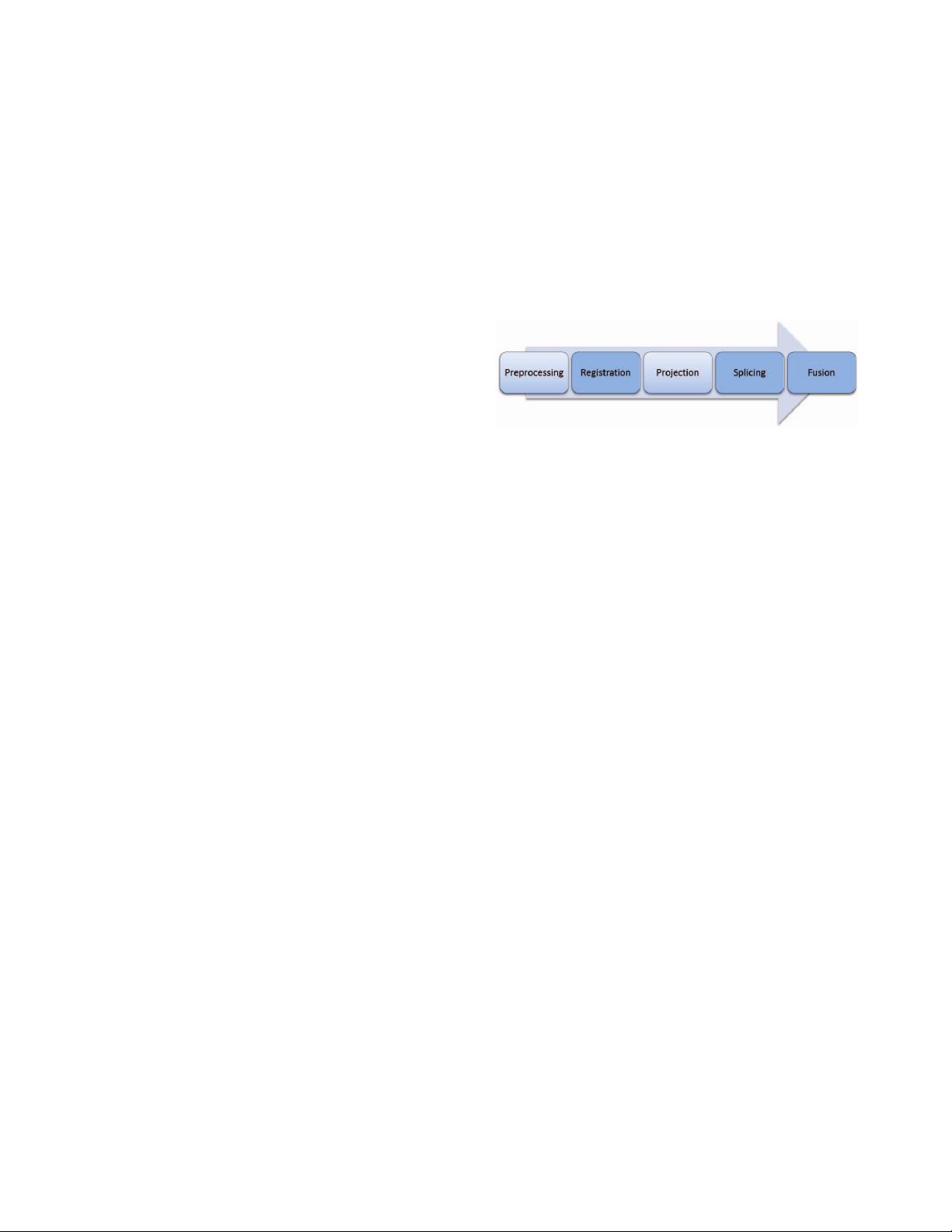

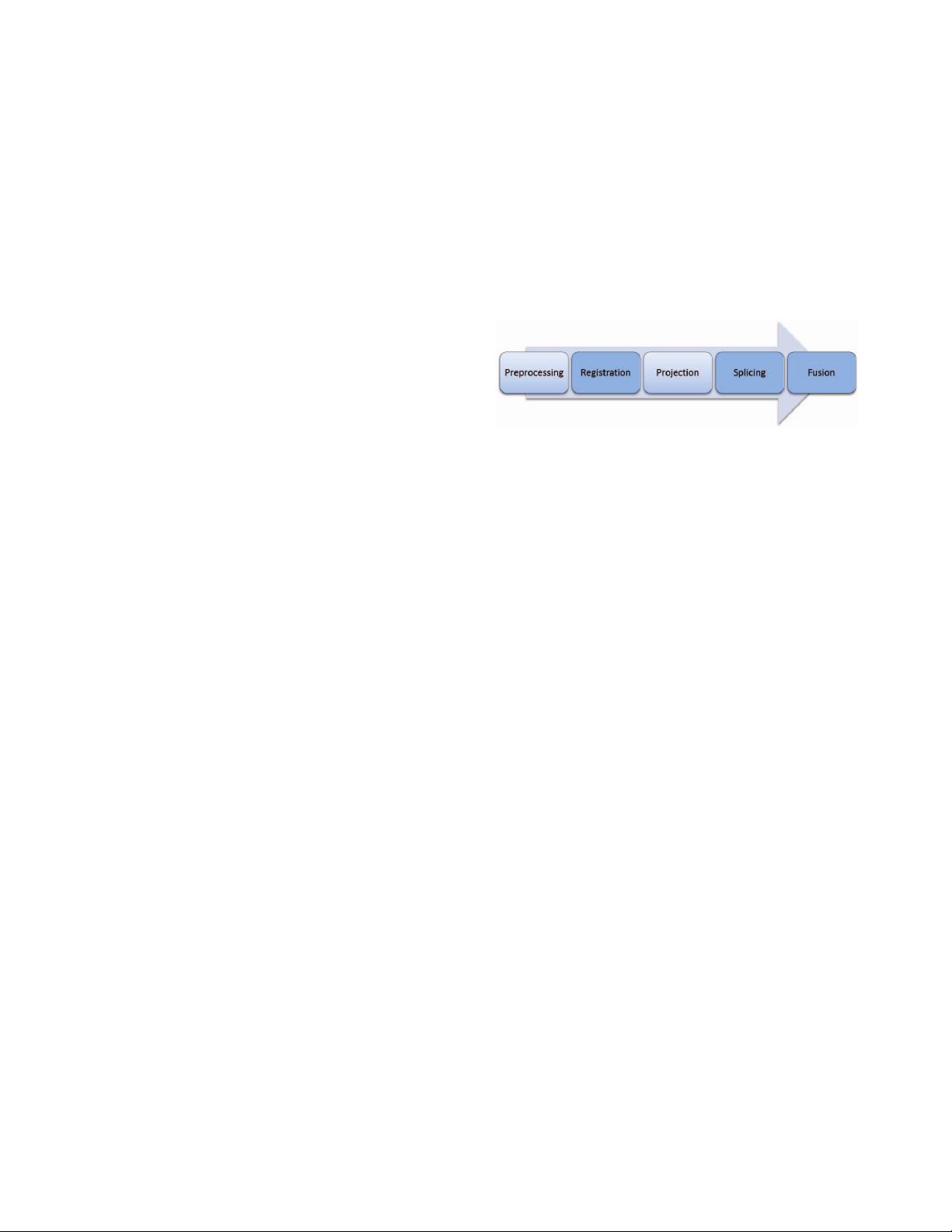

Many image stitching methods have been proposed. Although they differ in
algorithmic details, they share the same overall framework. As shown in
Fig. 1, panoramic stitching basically consists of five steps: image
preprocessing, image registration, panoramic image projection, image
splicing and image fusion. Image registration, splicing and fusion are the
key steps that determine the performance of the stitching method.

¹ Jinwen Hu, Yihui Zhou, Chunhui Zhao and Quan Pan are with the School of
Automation, Northwestern Polytechnical University, Xi’an, Shaanxi, China.

² Kun Zhang and Zhao Xu are with the School of Electronics and
Information, Northwestern Polytechnical University, Xi’an, Shaanxi, China.

Corresponding author: Chunhui Zhao (Email: zhaochunhui@nwpu.edu.cn)

Fig. 1. Flowchart of panoramic stitching.

Image preprocessing eliminates possible adverse effects before further
processing. Its main purpose is to ensure the accuracy of the subsequent
image registration, and it typically involves operations such as filtering
and enhancement. Commonly used filtering algorithms include the median
filter and the Gaussian filter. Image registration consists of two parts:
feature extraction and matching.
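As an illustration of the filtering step, the following is a minimal sketch of a median filter on a small grayscale image stored as a list of rows. The function name `median_filter`, the `radius` parameter and the border-clamping rule are assumptions made for this example; the paper does not specify its filter implementation.

```python
from statistics import median


def median_filter(image, radius=1):
    """Return a median-filtered copy of a 2-D grayscale image (list of rows)."""
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for y in range(height):
        for x in range(width):
            # Gather the (2*radius+1)^2 neighbourhood; coordinates outside the
            # image are clamped to the nearest border pixel (an assumption).
            window = [
                image[min(max(y + dy, 0), height - 1)][min(max(x + dx, 0), width - 1)]
                for dy in range(-radius, radius + 1)
                for dx in range(-radius, radius + 1)
            ]
            out[y][x] = median(window)
    return out


# A single bright outlier (salt noise) in a flat region is removed.
noisy = [[10, 10, 10], [10, 255, 10], [10, 10, 10]]
cleaned = median_filter(noisy)
```

A median filter is shown rather than a Gaussian one because it suppresses isolated outliers without averaging them into their neighbours, which tends to help later feature extraction.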

The first step of stitching is to extract characteristic information from
two adjacent images to provide the basis for image matching. There are two
main types of methods for detecting and matching features in images [5]:
area-based methods and feature-based methods. Area-based methods correlate
the intensity pattern around a pixel with the intensity pattern around the
corresponding pixel in another image, which makes them sensitive to changes
in viewing position, absolute intensity, contrast and illumination.
Feature-based methods rely on features extracted from the images rather
than on the image intensities themselves. Two kinds of feature detectors
are commonly used: edge detectors and interest point detectors. Interest
points, such as SIFT [6], SURF [7] and ORB [8] features, are robust to
changes in lighting, rotation, viewpoint, translation and scale.
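The area-based idea can be sketched as a brute-force template search that compares raw intensities with a sum of squared differences (SSD). This is only an illustrative example: the helper names `ssd`, `crop` and `match_template` are hypothetical, and SSD is one of several possible similarity measures, not necessarily the one used in the cited work.

```python
def ssd(patch_a, patch_b):
    """Sum of squared intensity differences between two equally sized patches."""
    return sum(
        (a - b) ** 2
        for row_a, row_b in zip(patch_a, patch_b)
        for a, b in zip(row_a, row_b)
    )


def crop(image, top, left, height, width):
    """Extract a height x width patch whose top-left corner is (top, left)."""
    return [row[left:left + width] for row in image[top:top + height]]


def match_template(image, template):
    """Return the (top, left) position in image with the smallest SSD."""
    t_height, t_width = len(template), len(template[0])
    best_score, best_pos = None, None
    for top in range(len(image) - t_height + 1):
        for left in range(len(image[0]) - t_width + 1):
            score = ssd(crop(image, top, left, t_height, t_width), template)
            if best_score is None or score < best_score:
                best_score, best_pos = score, (top, left)
    return best_pos


# Toy image with distinct intensities; the template is cut out at (1, 2).
image = [[row * 5 + col for col in range(5)] for row in range(4)]
template = crop(image, 1, 2, 2, 2)
position = match_template(image, template)  # (1, 2)
```

Because the score is computed directly on intensities, a uniform brightness change in one image changes every SSD value, which illustrates the sensitivity to absolute intensity and illumination noted above.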

Since the images are captured by the camera at different angles, they do
not lie in the same projection plane. If the overlapping regions are
spliced directly, the visual consistency of the actual scene will be
destroyed. The images therefore need to be projected onto a common surface
before splicing. Several projection models are available, such as planar
projection, cylindrical projection, cube projection and spherical
projection.
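To make one of these models concrete, the sketch below maps a pixel from the image plane onto a cylinder using the standard forward cylindrical mapping. It assumes the focal length is known in pixels and that the principal point is at `(center_x, center_y)`; these parameter names and the function `cylindrical_project` are chosen for illustration and do not come from the paper.

```python
import math


def cylindrical_project(x, y, focal_length, center_x, center_y):
    """Map an image-plane pixel (x, y) to cylindrical image coordinates."""
    dx = x - center_x
    dy = y - center_y
    # Angle around the cylinder axis for this pixel column.
    theta = math.atan2(dx, focal_length)
    # Height on the unit cylinder: the ray is rescaled so that its
    # horizontal distance from the axis equals 1.
    height = dy / math.hypot(dx, focal_length)
    return (focal_length * theta + center_x, focal_length * height + center_y)


# The principal point is a fixed point of the mapping.
center = cylindrical_project(50, 40, 100.0, 50, 40)
```

Pixels on the vertical line through the principal point are left unchanged, while columns farther from the centre are compressed horizontally.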

If pattern matching is used, the images can be spliced directly using the
previously calculated translation parameters. If point features are used,
the homography between the two images must first be calculated from the
matching points, and the image coordinates are then converted into the new
coordinate system.
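The coordinate conversion can be sketched as follows, assuming the 3x3 homography has already been estimated and is given as nested lists in row-major order. The functions `apply_homography` and `warped_bounds` are hypothetical helpers written for this example; the pure-translation case used in pattern matching appears as a special homography.

```python
def apply_homography(h, x, y):
    """Map pixel (x, y) through the 3x3 homography h and dehomogenize."""
    u = h[0][0] * x + h[0][1] * y + h[0][2]
    v = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if w == 0:
        raise ValueError("point maps to infinity under this homography")
    return (u / w, v / w)


def warped_bounds(h, width, height):
    """Bounding box (min_x, min_y, max_x, max_y) of the warped image corners."""
    corners = [(0, 0), (width - 1, 0), (0, height - 1), (width - 1, height - 1)]
    mapped = [apply_homography(h, x, y) for x, y in corners]
    xs = [p[0] for p in mapped]
    ys = [p[1] for p in mapped]
    return (min(xs), min(ys), max(xs), max(ys))


# A pure translation by (30, -5) written as a homography.
shift = [[1, 0, 30], [0, 1, -5], [0, 0, 1]]
point = apply_homography(shift, 10, 20)   # (40.0, 15.0)
bounds = warped_bounds(shift, 100, 50)    # (30.0, -5.0, 129.0, 44.0)
```

The bounding box of the warped corners is one simple way to decide how large the output canvas must be before the images are spliced.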

After all the images are processed, however, the result is in most cases
not a perfect panorama, and some seams at the border of

2017 13th IEEE International Conference on Control & Automation (ICCA)

July 3-6, 2017. Ohrid, Macedonia

978-1-5386-2679-5/17/$31.00 ©2017 IEEE 1049