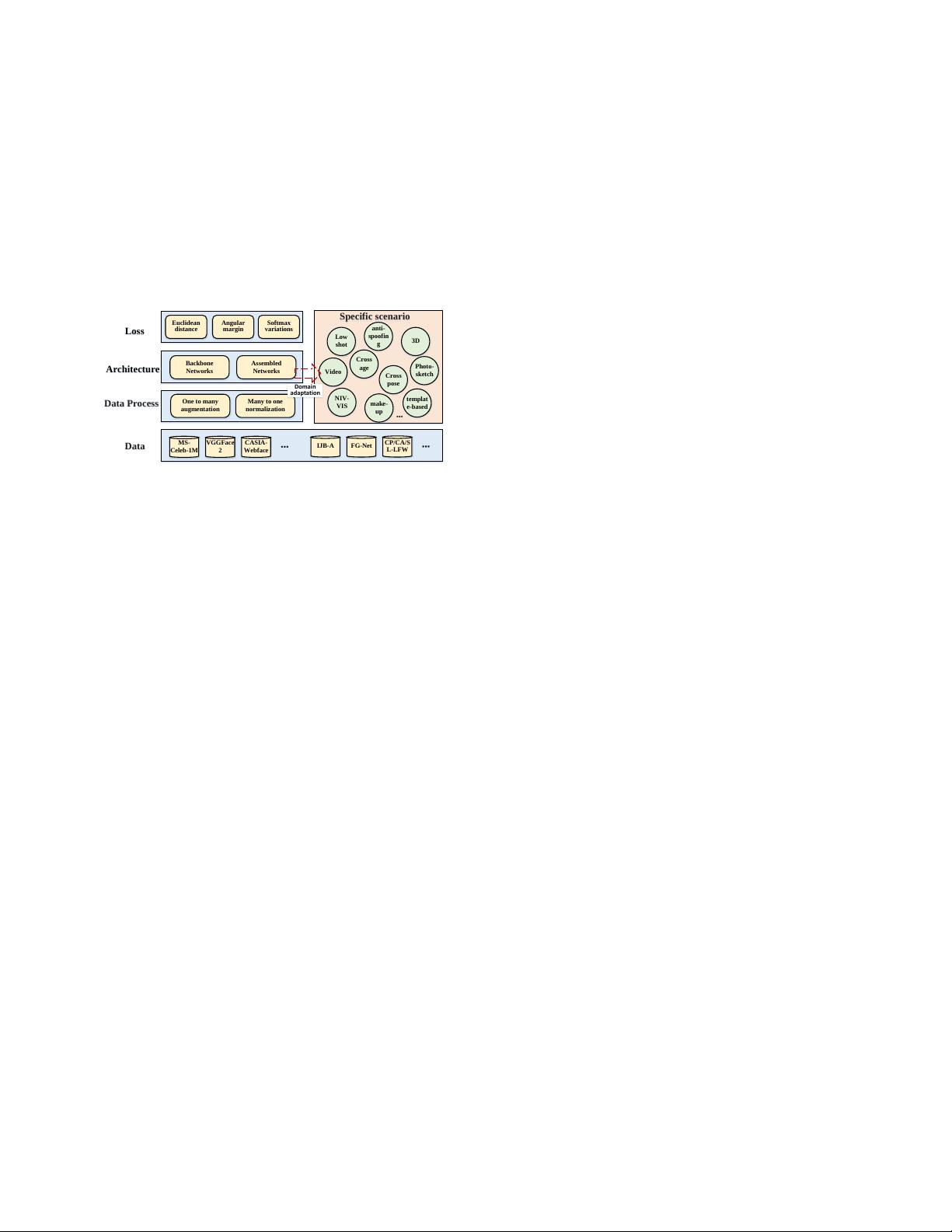

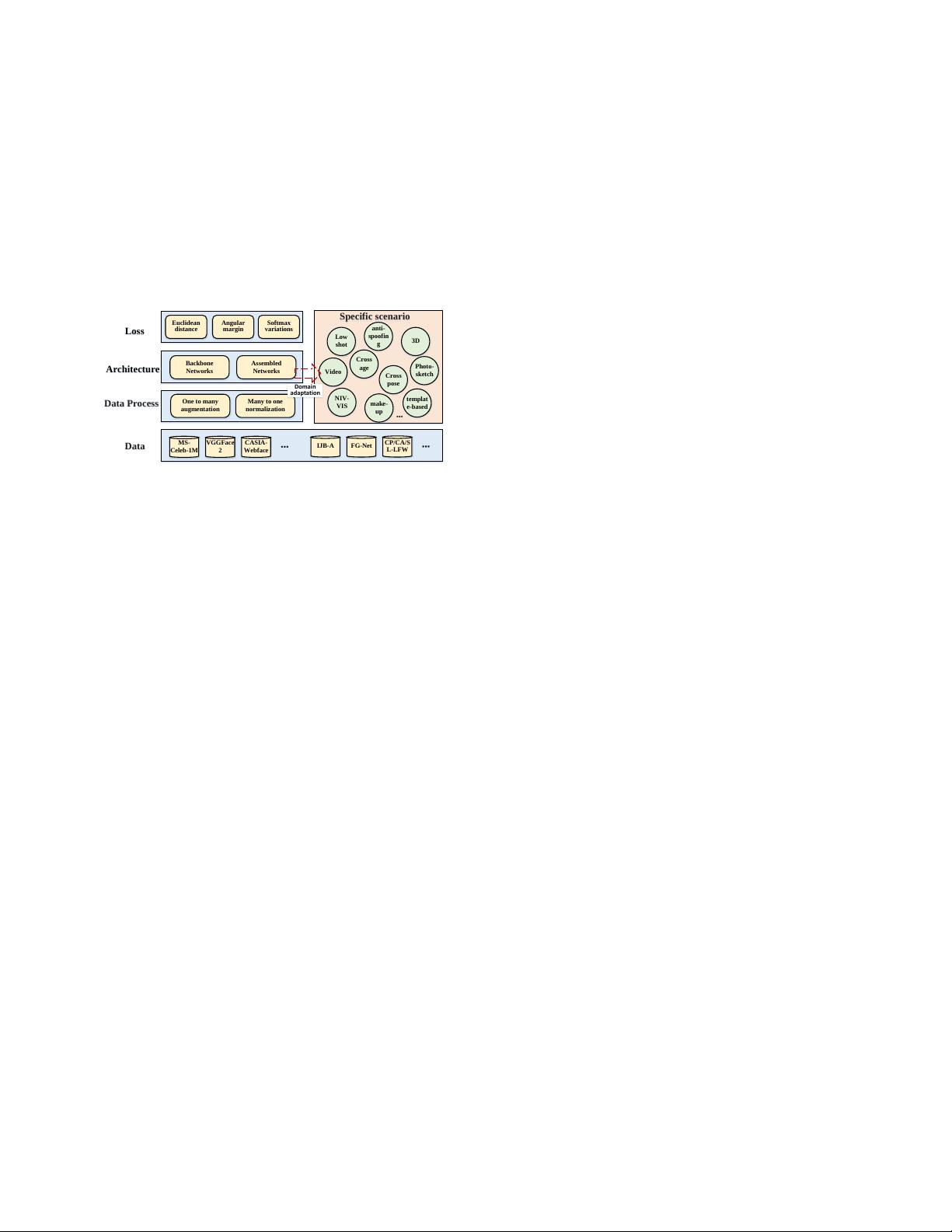

tions inherit from object classification and develop according to the unique characteristics of FR; face processing methods are also designed to handle pose, expression and occlusion variations. As FR in the general scenario has matured, difficulty levels have gradually increased, and different solutions have been developed for specific scenarios that are closer to reality, such as cross-pose FR, cross-age FR and video FR. For these specific scenarios, more difficult and realistic datasets are constructed to simulate real scenes; face processing methods, network architectures and loss functions are also modified from those of the general solutions.

[Figure 4 diagram. Component labels: Data; Data Process (one-to-many augmentation, many-to-one normalization); Architecture (backbone networks, assembled networks); Loss (Euclidean distance, angular margin, softmax variations). Datasets shown: MS-Celeb-1M, VGGFace2, CASIA-Webface, …, IJB-A, FG-Net, CP/CA/SL-LFW, …. Specific scenario labels: cross-pose, cross-age, template-based, NIR-VIS, video, 3D, low-shot, photo-sketch, anti-spoofing, make-up, domain adaptation, ….]

Fig. 4. FR studies began with the general scenario, then gradually increased in difficulty and produced different solutions for specific scenarios that are closer to reality, such as cross-pose FR, cross-age FR and video FR. In specific scenarios, targeted training and testing databases are constructed, and the algorithms, e.g. face processing, architectures and loss functions, are modified from those of the general solutions.

III. NETWORK ARCHITECTURE AND TRAINING LOSS

As there are billions of human faces on Earth, real-world FR can be regarded as an extremely fine-grained object classification task. For most applications, it is difficult to include the candidate faces during the training stage, which makes FR a “zero-shot” learning task. Fortunately, since all human faces share a similar shape and texture, the representation learned from a small proportion of faces can generalize well to the rest. A straightforward approach is to include as many IDs as possible in the training set. For example, Internet giants such as Facebook and Google have reported deep FR systems trained on $10^6$ to $10^7$ IDs [176], [195]. Unfortunately, these private datasets, as well as the prerequisite GPU clusters for distributed model training, are not accessible to the academic community. Currently, publicly available training databases for academic research consist of only $10^3$ to $10^5$ IDs.

Instead, the academic community has made efforts to design effective loss functions and adopt deeper architectures to make deep features more discriminative using relatively small training datasets. For instance, the accuracy on the most popular LFW benchmark has been boosted from 97% to above 99.8% in the past four years, as enumerated in Table IV. In this section, we survey the research efforts on different loss functions and network architectures that have significantly improved deep FR methods.

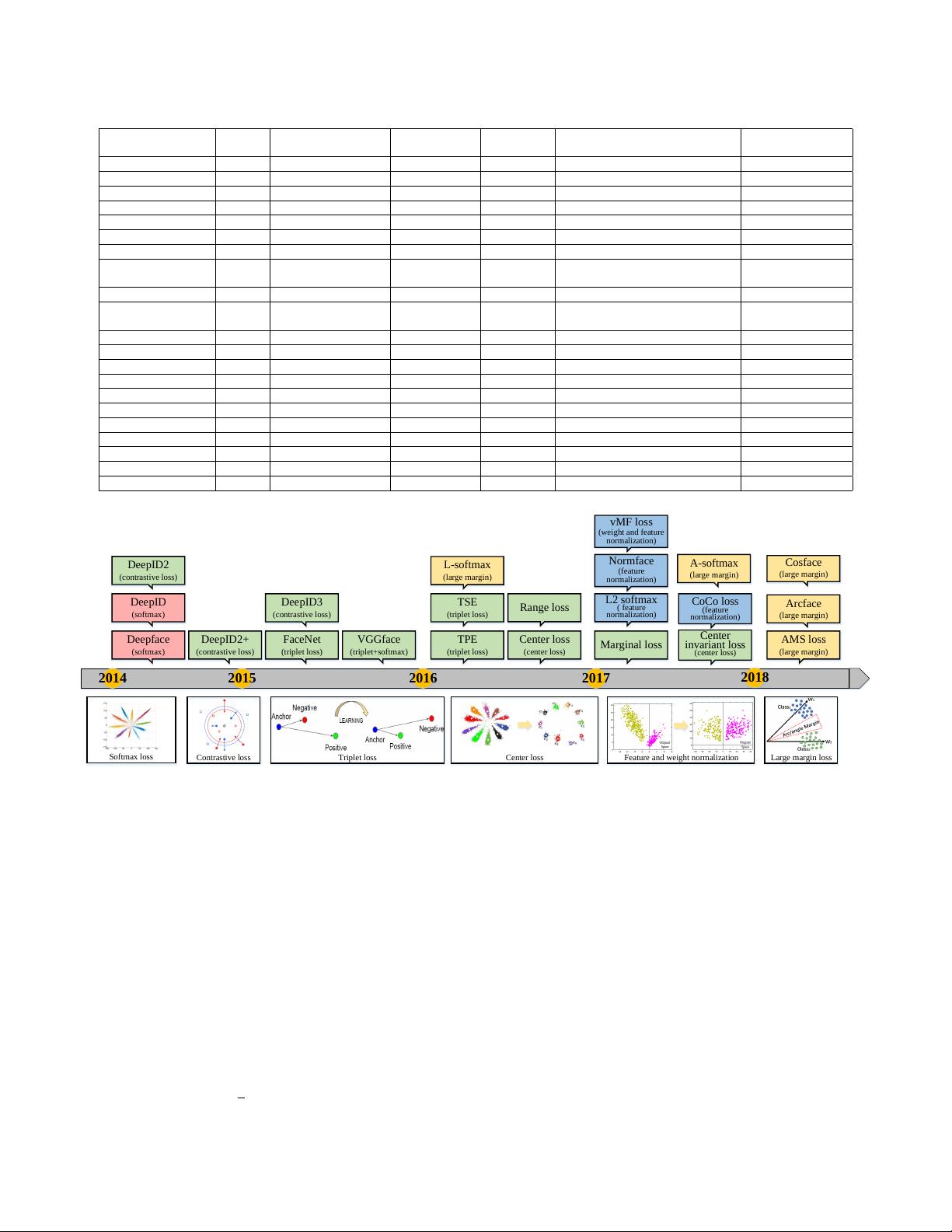

A. Evolution of Discriminative Loss Functions

Inheriting from object classification networks such as AlexNet, the initial DeepFace [195] and DeepID [191] adopted cross-entropy-based softmax loss for feature learning. Later, researchers realized that the softmax loss alone is not sufficient to learn features with a large margin, and more of them began to explore discriminative loss functions for enhanced generalization ability. This became the hottest research topic in deep FR, as illustrated in Fig. 5. Before 2017, Euclidean-distance-based loss played an important role; in 2017, angular/cosine-margin-based loss as well as feature and weight normalization became popular. It should be noted that, although some loss functions share a similar basic idea, the newer one is usually designed to facilitate the training procedure through easier parameter or sample selection.

1) Euclidean-distance-based Loss: Euclidean-distance-based loss is a metric learning method [230], [216] that embeds images into Euclidean space, reducing intra-class variance and enlarging inter-class variance. The contrastive loss and the triplet loss are the commonly used loss functions. The contrastive loss [222], [187], [188], [192], [243] requires face image pairs and then pulls together positive pairs and pushes apart negative pairs.

$$\mathcal{L} = y_{ij} \max\left(0, \|f(x_i) - f(x_j)\|_2 - \epsilon^{+}\right) + (1 - y_{ij}) \max\left(0, \epsilon^{-} - \|f(x_i) - f(x_j)\|_2\right) \tag{2}$$

where $y_{ij} = 1$ means $x_i$ and $x_j$ are matching samples and $y_{ij} = 0$ means non-matching samples. $f(\cdot)$ is the feature embedding, and $\epsilon^{+}$ and $\epsilon^{-}$ control the margins of the matching and non-matching pairs, respectively. DeepID2 [222] combined the face identification (softmax) and verification (contrastive loss) supervisory signals to learn a discriminative representation, and joint Bayesian (JB) was applied to obtain a robust embedding space. Extending DeepID2 [222], DeepID2+ [187] increased the dimension of hidden representations and added supervision to early convolutional layers, while DeepID3 [188] further introduced VGGNet and GoogLeNet into their work. However, the main problem with the contrastive loss is that the margin parameters are often difficult to choose.
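To make the roles of the two margins concrete, the following is a minimal sketch of the contrastive loss of Eq. 2 for a single pair of embeddings. It assumes the pair label is encoded as 1 for a matching pair and 0 for a non-matching pair, so that the $(1 - y_{ij})$ factor switches the second term on. The function names, the toy 2-D embeddings and the margin values are illustrative assumptions, not taken from any of the cited systems.

```python
import math


def l2_distance(u, v):
    """Euclidean (L2) distance between two equal-length feature vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def contrastive_loss(f_i, f_j, y_ij, eps_pos, eps_neg):
    """Contrastive loss for one pair of embeddings f_i, f_j.

    y_ij: 1 for a matching pair, 0 for a non-matching pair (assumed encoding).
    eps_pos, eps_neg: margins for matching and non-matching pairs.
    """
    d = l2_distance(f_i, f_j)
    pull = max(0.0, d - eps_pos)  # nonzero when a matching pair is farther apart than eps_pos
    push = max(0.0, eps_neg - d)  # nonzero when a non-matching pair is closer than eps_neg
    return y_ij * pull + (1 - y_ij) * push


# Toy embeddings and margins, made up for illustration only.
same_far = contrastive_loss([0.0, 0.0], [3.0, 4.0], 1, eps_pos=1.0, eps_neg=6.0)
diff_near = contrastive_loss([0.0, 0.0], [3.0, 4.0], 0, eps_pos=1.0, eps_neg=6.0)
diff_far = contrastive_loss([0.0, 0.0], [6.0, 8.0], 0, eps_pos=1.0, eps_neg=6.0)
```

In this toy setting a matching pair at distance 5 is penalized because it exceeds the matching margin, a non-matching pair at distance 5 is penalized because it falls inside the non-matching margin, and a non-matching pair at distance 10 contributes nothing. Both margins act on absolute distances, which is one way to see why they are hard to choose.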

In contrast to the contrastive loss, which considers the absolute distances of the matching pairs and non-matching pairs, the triplet loss considers the relative difference of the distances between them. The triplet loss [176], [149], [171], [172], [124], [51] was introduced into FR along with FaceNet [176], proposed by Google. It requires face triplets, and it minimizes the distance between an anchor and a positive sample of the same identity and maximizes the distance between the anchor and a negative sample of a different identity. FaceNet enforced $\|f(x_i^a) - f(x_i^p)\|_2^2 + \alpha < \|f(x_i^a) - f(x_i^n)\|_2^2$ using hard triplet face samples, where $x_i^a$, $x_i^p$ and $x_i^n$ are the anchor, positive and negative samples, respectively; $\alpha$ is a margin; and $f(\cdot)$ represents a nonlinear transformation embedding an image into a feature space. Inspired by FaceNet [176], TPE [171] and TSE [172] learned a linear projection $W$ to construct the triplet loss, where the former satisfied Eq. 3 and the latter followed Eq. 4. Other methods combine triplet loss with softmax loss [276], [124], [51], [40]. They first train networks with the softmax loss and then fine-tune them with the triplet loss.
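The triplet constraint, that the squared anchor-positive distance plus the margin α should stay below the squared anchor-negative distance, is usually optimized as a hinge penalty on its violation. The sketch below illustrates that idea on toy vectors. The hinge form, the function names and the "closest negative" selection rule are simplifying assumptions for illustration; the text above only states that hard triplets were used, not how they were mined.

```python
def squared_l2(u, v):
    """Squared Euclidean distance between two equal-length feature vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(f_a, f_p, f_n, alpha):
    """Hinge penalty on the triplet constraint.

    Returns 0 once ||f_a - f_p||^2 + alpha <= ||f_a - f_n||^2,
    and the size of the violation otherwise.
    """
    return max(0.0, squared_l2(f_a, f_p) + alpha - squared_l2(f_a, f_n))


def hardest_negative(f_a, negatives):
    """Pick the negative embedding closest to the anchor.

    This is one simple notion of a 'hard' negative, assumed here for
    illustration; it is not claimed to be the mining rule of any cited method.
    """
    return min(negatives, key=lambda f_n: squared_l2(f_a, f_n))


# Toy embeddings, made up for illustration only.
anchor = [0.0, 0.0]
positive = [1.0, 1.0]
negatives = [[3.0, 0.0], [1.0, 0.5], [0.0, 5.0]]

hard = hardest_negative(anchor, negatives)
loss_hard = triplet_loss(anchor, positive, hard, alpha=0.2)
loss_easy = triplet_loss(anchor, positive, negatives[2], alpha=0.2)
```

Here the distant negative already satisfies the constraint and yields zero loss, while the closest negative violates it and produces a positive loss. This is why the choice of triplets matters: triplets that already satisfy the margin contribute no training signal.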

$$(x_i^a)^T W^T W x_i^p + \alpha < (x_i^a)^T W^T W x_i^n \tag{3}$$