
2019 IEEE International Solid-State Circuits Conference Special Issue: Low-Power Motion-Triggered IoT CMOS Image Sensor and Energy Efficiency


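"This document is the November 2019 issue of the IEEE Journal of Solid-State Circuits, which focuses on the transistor-level design of integrated circuits; it is a special issue on the 2019 International Solid-State Circuits Conference (ISSCC). Articles are peer reviewed and checked for plagiarism before acceptance. The special issue includes papers on innovative solid-state circuit techniques such as energy-efficient image sensing, data-compressive readout, and a reconfigurable 3-D-stacked SPAD imager."

Integrated circuit (IC) design is a core topic in solid-state circuits: many electronic components are integrated on a single silicon die to obtain smaller and more efficient functions. The papers in this issue reflect recent progress and open technical challenges in the field. For example, "Energy-Efficient Motion-Triggered IoT CMOS Image Sensor With Capacitor Array-Assisted Charge-Injection SAR ADC" describes an energy-efficient CMOS image sensor for the Internet of Things (IoT). It uses a capacitor array-assisted charge-injection SAR ADC (successive-approximation-register analog-to-digital converter) to achieve low-power, motion-triggered operation, which matters for battery-powered IoT devices.

Another paper, "A Data-Compressive 1.5/2.75-bit Log-Gradient QVGA Image Sensor With Multi-Scale Readout for Always-On Object Detection," presents a data-compressive QVGA image sensor that digitizes logarithmic gradients at 1.5 or 2.75 bits and provides multi-scale readout for always-on object detection. The sensor reduces the data handed to the detector while keeping power low, which suits continuous monitoring and object recognition.

"Reconfigurable 3-D-Stacked SPAD Imager With In-Pixel Histogramming for Flash LIDAR or High-Speed Time-of-Flight Imaging" proposes a reconfigurable 3-D-stacked SPAD (single-photon avalanche diode) imager with in-pixel histogramming, intended for flash LIDAR or high-speed time-of-flight imaging. The design targets faster and more precise 3-D sensing, which is relevant to autonomous vehicles and other systems that need accurate distance measurement.

Finally, "A 512-Pixel, 51-kHz-Frame-Rate, Dual-Shank, Lens-Less, Filter-Less Single-Photon Avalanche Diode CMOS Neural Imaging Probe" presents a 512-pixel, 51-kHz-frame-rate, dual-shank, lens-less, filter-less SPAD CMOS neural imaging probe. The probe is aimed at high-resolution neuroscience applications and non-destructive recording of neural activity, which is valuable for understanding brain function and for developing new neural interfaces.

Together, these papers show advances in energy efficiency, data processing, sensor design, and biomedical applications of solid-state circuits. Peer-reviewed publications of this kind let researchers and engineers share their latest results and advance the field.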

2930 IEEE JOURNAL OF SOLID-STATE CIRCUITS, VOL. 54, NO. 11, NOVEMBER 2019

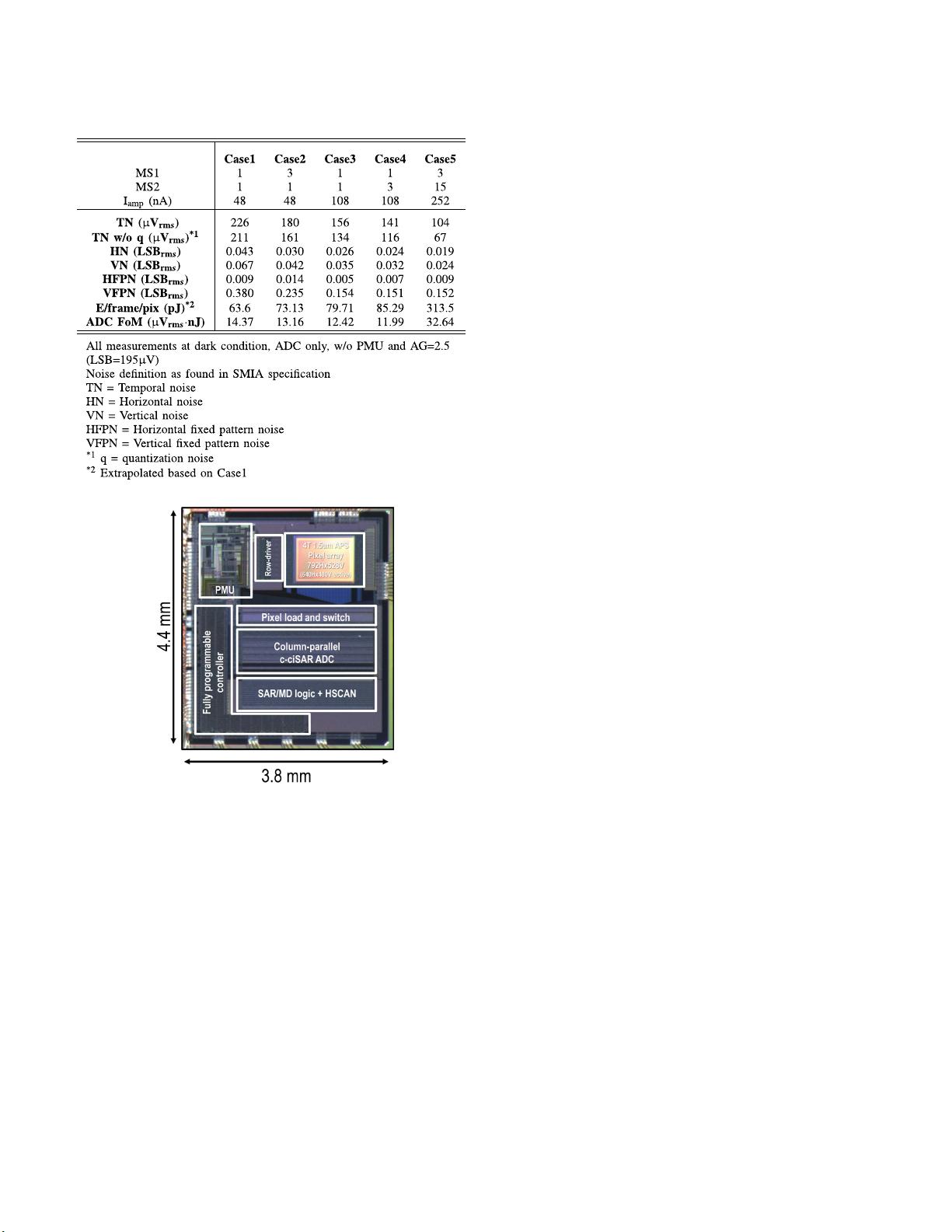

TABLE III

IMAGE CHARACTERISTICS

Fig. 16. Chip photograph.

pitch pixel array is selected for future integration of a small-form lens. The sensor is designed with thick-oxide devices to reduce leakage current, except for the column SAR logic. The ADC pitch and size can be further reduced by using high-Vt thin-oxide devices, if available. Also, the sensor can operate with its on-chip PMU, which generates 13 internal voltages from the external 2.5-V battery, enabling integration into an IoT sensor node. The total die area is 16.7 mm$^2$.

VI. CONCLUSION

In this article, an IoT image sensor is proposed that is designed with energy-efficient c-ciSAR ADCs in column-parallel form. The c-ciSAR achieves 10b performance while consuming only 63.6 pJ/frame/pix, which yields a state-of-the-art energy efficiency of 14.4-μV$_{\text{rms}}$·nJ ADC FoM. Also, the sensor contains a motion-triggering function implemented with the near-pixel placement of previous-frame data. This is done while reusing the ADC hardware already in place for the c-ciSAR, which leads to a more hardware- and energy-efficient solution than other sensors while maintaining high image quality (64.1 dB in dynamic range).

ACKNOWLEDGMENT

The authors would like to thank Synopsys for their tools.

REFERENCES

[1] G. Kim et al., “A 467 nW CMOS visual motion sensor with temporal averaging and pixel aggregation,” in IEEE Int. Solid-State Circuits Conf. (ISSCC) Dig. Tech. Papers, Feb. 2013, pp. 480–481.

[2] O. Kumagai et al., “A 1/4-inch 3.9 Mpixel low-power event-driven back-illuminated stacked CMOS image sensor,” in IEEE Int. Solid-State Circuits Conf. (ISSCC) Dig. Tech. Papers, Feb. 2018, pp. 86–88.

[3] K. D. Choo, J. Bell, and M. P. Flynn, “Area-efficient 1 GS/s 6b SAR ADC with charge-injection-cell-based DAC,” in IEEE Int. Solid-State Circuits Conf. (ISSCC) Dig. Tech. Papers, Jan./Feb. 2016, pp. 460–461.

[4] S. Kawahito, “Column-parallel ADCs for CMOS image sensors and their FoM-based evaluations,” IEICE Trans. Electron., vol. E101.C, no. 7, pp. 444–456, Jul. 2018.

[5] P. Harpe, E. Cantatore, and A. V. Roermund, “A 2.2/2.7 fJ/conversion-step 10/12b 40 kS/s SAR ADC with data-driven noise reduction,” in IEEE Int. Solid-State Circuits Conf. (ISSCC) Dig. Tech. Papers, Feb. 2013, pp. 270–271.

[6] C. Liu, S. Chang, G. Huang, and Y. Lin, “A 0.92 mW 10-bit 50-MS/s SAR ADC in 0.13 μm CMOS process,” in Proc. Symp. VLSI Circuits, Jun. 2009, pp. 236–237.

[7] L. Kull et al., “A 90 GS/s 8b 667 mW 64× interleaved SAR ADC in 32 nm digital SOI CMOS,” in IEEE Int. Solid-State Circuits Conf. (ISSCC) Dig. Tech. Papers, Feb. 2014, pp. 378–379.

[8] R. Funatsu et al., “133 Mpixel 60 fps CMOS image sensor with 32-column shared high-speed column-parallel SAR ADCs,” in IEEE Int. Solid-State Circuits Conf. (ISSCC) Dig. Tech. Papers, Feb. 2015, pp. 1–3.

[9] H. Kim et al., “A delta-readout scheme for low-power CMOS image sensors with multi-column-parallel SAR ADCs,” IEEE J. Solid-State Circuits, vol. 51, no. 10, pp. 2262–2273, Oct. 2016.

[10] J. P. Keane et al., “An 8 GS/s time-interleaved SAR ADC with unresolved decision detection achieving -58 dBFS noise and 4 GHz bandwidth in 28 nm CMOS,” in IEEE Int. Solid-State Circuits Conf. (ISSCC) Dig. Tech. Papers, Feb. 2017, pp. 284–285.

[11] P. Harpe, “A 0.0013 mm$^2$ 10b 10 MS/s SAR ADC with a 0.0048 mm$^2$ 42 dB-rejection passive FIR filter,” in IEEE Int. Solid-State Circuits Conf. (ISSCC) Dig. Tech. Papers, Apr. 2018, pp. 1–4.

[12] S. Ji, J. Pu, B. C. Lim, and M. Horowitz, “A 220 pJ/pixel/frame CMOS image sensor with partial settling readout architecture,” in Proc. IEEE Symp. VLSI Circuits (VLSI-Circuits), Jun. 2016, pp. 1–2.

[13] L. Chen, X. Tang, A. Sanyal, Y. Yoon, J. Cong, and N. Sun, “A 0.7-V 0.6-μW 100-kS/s low-power SAR ADC with statistical estimation-based noise reduction,” IEEE J. Solid-State Circuits, vol. 52, no. 5, pp. 1388–1398, May 2017.

[14] S.-E. Hsieh and C.-C. Hsieh, “A 0.4 V 13b 270 kS/S SAR-ISDM ADC with an opamp-less time-domain integrator,” in IEEE Int. Solid-State Circuits Conf. (ISSCC) Dig. Tech. Papers, Feb. 2018, pp. 240–242.

[15] L. Kull et al., “A 10b 1.5 GS/s pipelined-SAR ADC with background second-stage common-mode regulation and offset calibration in 14 nm CMOS FinFET,” in IEEE Int. Solid-State Circuits Conf. (ISSCC) Dig. Tech. Papers, Feb. 2017, pp. 474–475.

[16] T. Takahashi et al., “A stacked CMOS image sensor with array-parallel ADC architecture,” IEEE J. Solid-State Circuits, vol. 53, no. 4, pp. 1061–1070, Apr. 2018.

[17] T. Haruta et al., “A 1/2.3 inch 20 Mpixel 3-layer stacked CMOS image sensor with DRAM,” in IEEE Int. Solid-State Circuits Conf. (ISSCC) Dig. Tech. Papers, Feb. 2017, pp. 76–77.

[18] S. Sukegawa et al., “A 1/4-inch 8 Mpixel back-illuminated stacked CMOS image sensor,” in IEEE Int. Solid-State Circuits Conf. (ISSCC) Dig. Tech. Papers, Feb. 2013, pp. 484–485.

[19] A. Suzuki et al., “A 1/1.7-inch 20 Mpixel back-illuminated stacked CMOS image sensor for new imaging applications,” in IEEE Int. Solid-State Circuits Conf. (ISSCC) Dig. Tech. Papers, Feb. 2015, pp. 1–3.

CHOO et al.: ENERGY-EFFICIENT MOTION-TRIGGERED IOT CMOS IMAGE SENSOR 2931

[20] T. Arai et al., “A 1.1-μm 33-Mpixel 240-fps 3-D-stacked CMOS image sensor with three-stage cyclic-cyclic-SAR analog-to-digital converters,” IEEE Trans. Electron Devices, vol. 64, no. 12, pp. 4992–5000, Dec. 2017.

[21] K. D. Choo et al., “Energy-efficient low-noise CMOS image sensor with capacitor array-assisted charge-injection SAR ADC for motion-triggered low-power IoT applications,” in IEEE Int. Solid-State Circuits Conf. (ISSCC) Dig. Tech. Papers, Feb. 2019, pp. 96–98.

Kyojin David Choo (M’14) received the B.S. and M.S. degrees in electrical engineering from Seoul National University, Seoul, South Korea, in 2007 and 2009, respectively, and the Ph.D. degree from the University of Michigan, Ann Arbor, MI, USA, in 2018.

From 2009 to 2013, he was with the Image Sensor Development Team, Samsung Electronics, Yongin, South Korea, where he designed signal readout chains for mobile/digital single-lens reflex (DSLR) image sensors. During his doctoral research, he interned with Apple, Cupertino, CA, USA, and consulted for Sony Electronics, San Jose, CA, USA. He is currently a Research Fellow with the University of Michigan. He holds 15 U.S. patents. His research interests include charge-domain circuits, sensor interfaces, energy converters, high-speed links/timing generators, and millimeter-scale integrated systems.

Li Xu (S’15) received the B.Eng. degree in automation from Tongji University, Shanghai, China, in 2009, and the M.S. degree in electrical and computer engineering from Northeastern University, Boston, MA, USA, in 2016. He is currently pursuing the Ph.D. degree with the University of Michigan, Ann Arbor, MI, USA.

From 2009 to 2011, he was an IC Design Engineer with Ricoh Electronic Devices Shanghai Company, Ltd., Shanghai, where he worked on low-dropout (LDO) regulator and dc/dc converter projects. In 2015, he was a Design Intern with Linear Technology Corporation, Colorado Springs, CO, USA. His current research interests include energy-efficient mixed-signal circuit design.

Yejoong Kim (S’08–M’15) received the bachelor’s degree in electrical engineering from Yonsei University, Seoul, South Korea, in 2008, and the master’s and Ph.D. degrees in electrical engineering from the University of Michigan, Ann Arbor, MI, USA, in 2012 and 2015, respectively.

He is currently a Research Fellow with the University of Michigan and the Vice President of Research and Development with CubeWorks, Inc., Ann Arbor. His research interests include subthreshold circuit designs, ultralow-power SRAM, and the design of millimeter-scale computing systems and sensor platforms.

Ji-Hwan Seol (M’15) received the B.S. degree in electrical engineering from Yonsei University, Seoul, South Korea, in 2009, and the M.S. degree in electrical engineering from KAIST, Daejeon, South Korea, in 2012. He is currently pursuing the Ph.D. degree with the University of Michigan, Ann Arbor, MI, USA, supported by the Samsung Fellowship Program.

In 2012, he joined the DRAM Design Team, Samsung Electronics, Yongin, South Korea, where he contributed to the development of mobile DRAMs including LPDDR2, LPDDR3, LPDDR4, and LPDDR5. His research interests include clock generation, memory systems, and ultralow-power system design.

Xiao Wu (S’14–M’15) received the B.S. degree in electrical engineering jointly from the University of Michigan, Ann Arbor, MI, USA, and Shanghai Jiaotong University, Shanghai, China, in 2014. She is currently pursuing the Ph.D. degree with the University of Michigan.

She has experience in energy harvesters, power management units (PMUs), and high-performance hardware accelerators. Her current research interests include small sensor system integration and hardware accelerator design.

Dennis Sylvester (S’95–M’00–SM’04–F’11) received the Ph.D. degree in electrical engineering from the University of California at Berkeley, Berkeley, CA, USA, in 1999, where his dissertation was recognized with the David J. Sakrison Memorial Prize as the most outstanding research in the UC-Berkeley EECS Department.

He was the Founding Director of the Michigan Integrated Circuits Laboratory (MICL), Electrical and Computer Engineering Department, University of Michigan, Ann Arbor, MI, USA, a group of 10 faculty and 70+ graduate students. He has held research staff positions at the Advanced Technology Group of Synopsys, Mountain View, CA, USA, Hewlett-Packard Laboratories, Palo Alto, CA, USA, and visiting professorships at the National University of Singapore, Singapore, and Nanyang Technological University, Singapore. He co-founded Ambiq Micro, Austin, TX, USA, a fabless semiconductor company developing ultralow-power mixed-signal solutions for compact wireless devices. He is currently a Professor of electrical engineering and computer science with the University of Michigan. He has authored or coauthored more than 500 articles along with one book and several book chapters. He holds 45 U.S. patents. His research interests include the design of millimeter-scale computing systems and energy-efficient near-threshold computing.

Dr. Sylvester currently serves on the Technical Program Committee for the IEEE International Solid-State Circuits Conference and on the Administrative Committee for the IEEE Solid-State Circuits Society. He was a recipient of the NSF CAREER Award, the Beatrice Winner Award at ISSCC, an IBM Faculty Award, an SRC Inventor Recognition Award, the University of Michigan Henry Russel Award for distinguished scholarship, and ten best paper awards and nominations. He was named one of the Top Contributing Authors at ISSCC and most prolific author at IEEE Symposium on VLSI Circuits. He serves/has served as Associate Editor for the IEEE JOURNAL OF SOLID-STATE CIRCUITS, the IEEE TRANSACTIONS ON CAD, and the IEEE TRANSACTIONS ON VLSI SYSTEMS. He was an IEEE Solid-State Circuits Society Distinguished Lecturer.

David Blaauw (M’94–SM’07–F’12) received the B.S. degree in physics and computer science from Duke University, Durham, NC, USA, in 1986, and the Ph.D. degree in computer science from the University of Illinois at Urbana–Champaign, Champaign, IL, USA, in 1991.

Until August 2001, he worked for Motorola, Inc., Austin, TX, USA, where he was the Manager of the High-Performance Design Technology Group and won the Motorola Innovation Award. Since August 2001, he has been on the faculty of the University of Michigan, Ann Arbor, MI, USA, where he is the Kensall D. Wise Collegiate Professor of EECS. For high-end servers, his research group introduced so-called near-threshold computing, which has become a common concept in semiconductor design. Most recently, he has pursued research in cognitive computing using analog, in-memory neural networks for edge devices and genomics acceleration. He has authored or coauthored more than 600 articles. He holds 65 patents. He has conducted extensive research in ultralow-power computing using subthreshold computing and analog circuits for millimeter sensor systems, which was selected by the MIT Technology Review as one of the year’s most significant innovations.

Dr. Blaauw currently serves on the Technical Program Committee of the IEEE International Solid-State Circuits Conference. He was a recipient of the 2016 SIA-SRC Faculty Award for lifetime research contributions to the U.S. semiconductor industry and numerous best paper awards and nominations. He was the General Chair of the IEEE International Symposium on Low Power and the Technical Program Chair of the ACM/IEEE Design Automation Conference. He is currently the Director of the Michigan Integrated Circuits Lab.


A Data-Compressive 1.5/2.75-bit Log-Gradient QVGA Image Sensor With Multi-Scale Readout for Always-On Object Detection

Christopher Young, Student Member, IEEE, Alex Omid-Zohoor, Member, IEEE, Pedram Lajevardi, Member, IEEE, and Boris Murmann, Fellow, IEEE

Abstract—This article presents an application-optimized QVGA image sensor for low-power, always-on object detection using histograms of oriented gradients (HOG). In contrast to conventional CMOS imagers that feature linear and high-resolution ADCs, our readout scheme extracts logarithmic intensity gradients at 1.5 or 2.75 bits of resolution. This eliminates unnecessary illumination-related data and allows the HOG feature descriptors to be compressed by up to 25× relative to a conventional 8-bit readout. As a result, the digital backend detector, which typically limits system efficiency, incurs less data movement and computation, leading to an estimated 3.3× energy reduction. The imager employs a column-parallel readout with analog cyclic-row buffers that also perform arbitrary-sized pixel-binning for multi-scale object detection. The log-digitization of pixel gradients is computed using a ratio-to-digital converter (RDC), which performs successive capacitive divisions on its input voltages. The prototype IC was fabricated in a 0.13-µm CIS process with standard 4-T 5-µm pixels and consumes 99 pJ/pixel. Experiments using a deformable parts model (DPM) detector for three object classes (persons, bicycles, and cars) indicate detection accuracies that are on par with conventional systems.

Index Terms—CMOS image sensor, deformable parts model (DPM), feature extraction, histogram of oriented gradient (HOG), logarithmic gradients, object detection, pixel-binning, wake-up.

Manuscript received May 11, 2019; revised July 25, 2019; accepted August 12, 2019. Date of publication September 19, 2019; date of current version October 23, 2019. This article was approved by Guest Editor Pedram Mohseni. This work was supported in part by Robert Bosch LLC, Sunnyvale, CA, and in part by Systems on Nanoscale Information fabriCs (SONIC), one of six STARnet Centers through MARCO and DARPA. (Corresponding author: Christopher Young.)

C. Young and B. Murmann are with the Department of Electrical Engineering, Stanford University, Stanford, CA 94305 USA (e-mail: cjyoung@stanford.edu).

A. Omid-Zohoor was with the Department of Electrical Engineering, Stanford University, Stanford, CA 94305 USA. He is now with K-Motion Interactive, Inc., Scottsdale, AZ 85260 USA (e-mail: alexomid@gmail.com).

P. Lajevardi is with the Research and Technology Center, Robert Bosch LLC, Sunnyvale, CA 94085 USA (e-mail: pdrm@ieee.org).

Color versions of one or more of the figures in this article are available online at http://ieeexplore.ieee.org.

Digital Object Identifier 10.1109/JSSC.2019.2937437

I. INTRODUCTION

Object detection is an important vision task in embedded systems like smartphones, real-time monitoring devices, and augmented reality. Deep neural networks (DNNs) are currently the best-performing algorithms for such applications [1]–[3], and there has been extensive work on improving the efficiency of algorithms [4], [5] and hardware [6], [7]. Despite these efforts, customized DNN application-specific integrated circuits (ASICs) are relatively energy-hungry due to their large computational footprint. For example, the moderate-size DNN processor in [7] consumes 37.1 nJ/pixel, while even the small-size network described in [6] requires 3.7 nJ/pixel. Therefore, to achieve efficient object detection in an embedded device, it is attractive to employ a cascaded topology [8], [9] in which an always-on wakeup detector duty cycles a more powerful DNN.

For a wake-up detector, energy efficiency is the primary specification and accuracy can be traded off to some extent. Consequently, it can be beneficial to consider prior-art machine learning algorithms with low-complexity hand-crafted features that help minimize the computational complexity. One suitable option is to employ histograms of oriented gradients (HOGs), as these features provide a good tradeoff between complexity and attainable detection accuracy [18]. A custom 8-bit CMOS imager that computes HOG features on-chip consumes only 52 pJ/pixel [13]. However, the complete system also requires a backend detection algorithm, which still consumes 940 pJ/pixel in an optimized implementation [10]. As shown in our system study in [12], this imbalance mostly stems from a large amount of data seen by the detector. To remedy this issue, this article uses a feature-extraction approach that aggressively quantizes and compresses the data, thereby alleviating the memory requirements and processing load for the backend algorithm.

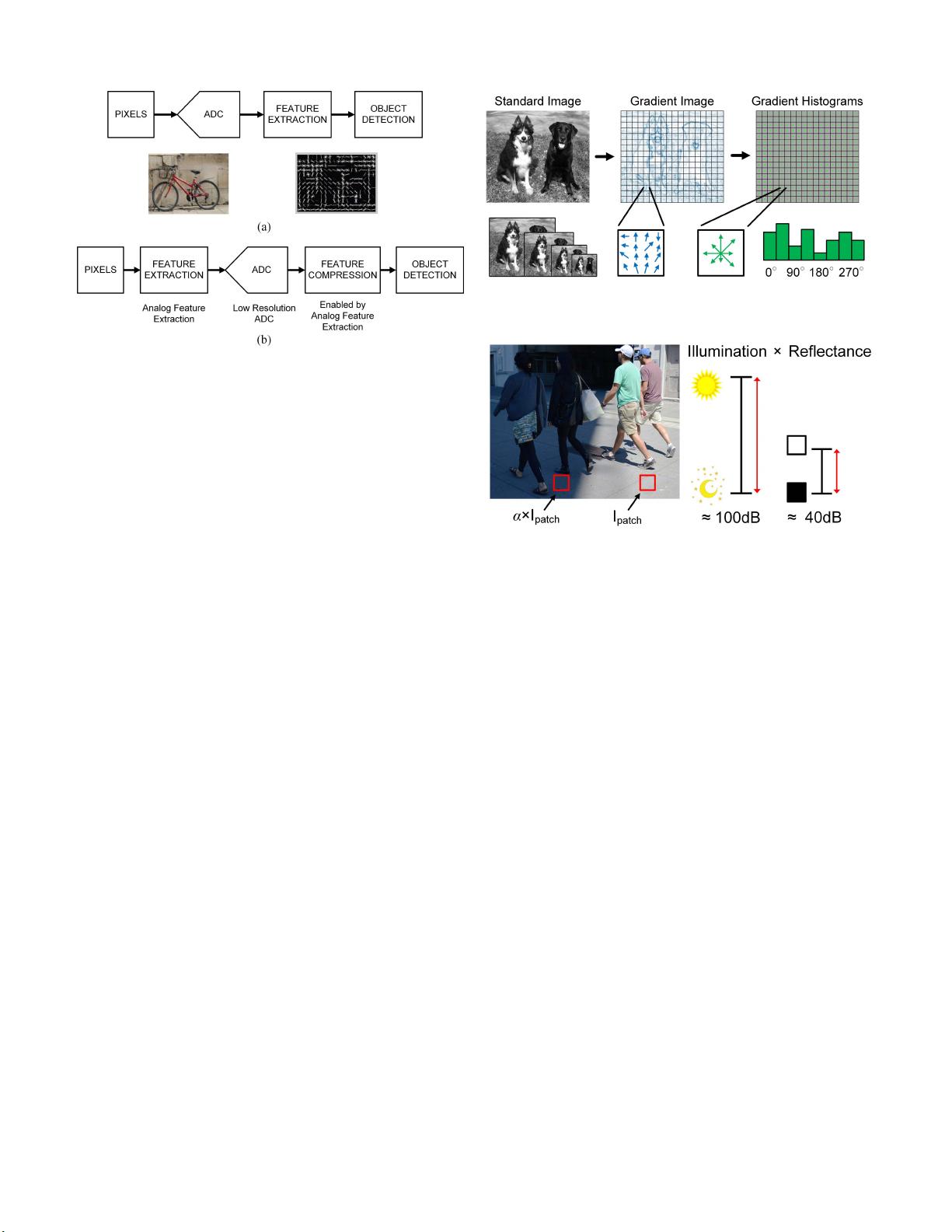

Fig. 1 contrasts a conventional pipeline with our approach. Instead of faithfully digitizing each pixel value, we first perform gradient feature extraction in the analog domain before A/D conversion. As we will explain in the remainder of this article, extracting these analog features in the log domain eliminates unnecessary illumination-related bits and enables low-resolution digitization and aggressive feature compression. As shown in [12], the HOG feature compression ratio can be as large as 25×, leading to an estimated 3.3× reduction in backend energy for a deformable parts model (DPM)-based detector.

Several prior studies have considered analog feature computation within the pixels or within the imager’s readout circuitry [13]–[15]. In-pixel computation can be attractive but reduces the pixel fill factor and adversely affects the sensing quality. While this issue can be alleviated with 3-D stacked technologies [16], low-cost applications tend to require a single-die implementation. For these reasons, our scheme performs the feature extraction in the column readout circuitry and uses a standard 4-T pixel array found in more conventional imagers [11], [17]. Prior studies also perform feature extraction in or near the column readout [13], [14], but rely on a direct and linear mapping of the feature extraction into analog/mixed-signal circuitry. The distinct advantage of our approach is that it enables aggressive feature compression via logarithmic processing.

0018-9200 © 2019 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission.

See http://www.ieee.org/publications_standards/publications/rights/index.html for more information.

YOUNG et al.: DATA-COMPRESSIVE 1.5/2.75-BIT LOG-GRADIENT QVGA IMAGE SENSOR 2933

Fig. 1. (a) Object detection pipeline producing high-resolution image and extracting low-dimensional features. (b) Our approach with analog feature extraction, enabling low-resolution features to be digitized and compressed.

The following sections expand on our conference contribution [19] and provide updated chip measurements after fine-tuning for energy consumption. We also show the results from a more detailed object detection experiment. Section II motivates the use of log-gradients, while Section III discusses the major components of our log-gradient CIS as well as the overall detection system. Section IV details the circuit-level innovations required to digitize low-resolution log-gradients as well as our methodology for deriving the circuit specifications. Section V presents the measurements on these circuits as well as results from an end-to-end detection system experiment. We summarize our results and conclude in Section VI.

II. LOGARITHMIC GRADIENTS

The input to a DPM is generally the HOG features, which are a global image descriptor originally developed for pedestrian detection, but generally suitable for detecting rigid objects [20], [21]. Various ASICs that compute HOGs have been reported in [10], [22], and [23], and Choi et al. [13] demonstrated HOG feature extraction using mixed-signal circuitry within a CMOS image sensor.

Fig. 2 shows how HOG features are generated. First, the image gradients are computed at every pixel to produce a vector with an angle and magnitude. The gradient image is then divided into cells, usually of 8 × 8 pixels [21]. In each cell, the magnitudes of the gradients are accumulated into bins of a local histogram, where the bins are assigned according to the quantized angles of the gradients. The angles are often computed modulo 180° such that their range is between 0° and 180°. Further, using linear interpolation, a gradient’s magnitude is sometimes divided across multiple cell histograms as a function of the gradient’s proximity to each cell in a process called spatial soft-binning. Our study in [12] shows that this is not necessarily required in the context of a very low-power system where we seek to quantize the gradients aggressively. Finally, it is important to compute the HOG features at different spatial resolutions, to create what is known as an image pyramid, to detect objects of multiple sizes.

Fig. 2. HOG feature computation. Gradients are accumulated into local cell histogram bins. This is performed at multiple image resolutions.

Fig. 3. Scene illumination versus reflectance. Variations in illumination can cause a scene to have very large DR. On the other hand, the reflectance of objects and surfaces has significantly smaller DR.
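The per-cell accumulation described above can be sketched in a few lines of Python. This is a minimal illustration, not the circuit or software used in the article: the function name `hog_cell_histogram`, the choice of nine orientation bins, and the hard (non-interpolated) bin assignment are all assumptions made for the example, and spatial soft-binning and the image pyramid are left out.

```python
import math

def hog_cell_histogram(cell_gh, cell_gv, n_bins=9):
    """Accumulate gradient magnitudes of one cell into orientation bins.

    cell_gh / cell_gv: 2-D lists holding the horizontal and vertical
    gradient components of every pixel in the cell.
    n_bins: number of orientation bins over [0, 180) degrees
            (9 is a hypothetical choice for this sketch).
    """
    hist = [0.0] * n_bins
    bin_width = 180.0 / n_bins
    for gh_row, gv_row in zip(cell_gh, cell_gv):
        for gh, gv in zip(gh_row, gv_row):
            magnitude = math.hypot(gh, gv)
            # Fold the angle modulo 180 degrees (unsigned orientation).
            angle = math.degrees(math.atan2(gv, gh)) % 180.0
            # The final modulo guards against a rounding result of 180.0.
            hist[int(angle // bin_width) % n_bins] += magnitude
    return hist

# A 2 x 2 cell whose gradients all point horizontally lands in bin 0.
print(hog_cell_histogram([[1, 1], [1, 1]], [[0, 0], [0, 0]]))
```

A detector would call this once per cell (for example per 8 × 8 block) and repeat the whole procedure at each scale of the image pyramid.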

Now, to understand why we are motivated to directly compute the log-gradients on the image sensor itself, we first give an overview of the relationship between image gradients and light transduction at the pixel level.

A. Linear Images and Illumination

To first order, the incident light on the pixel array is composed of two multiplicative components: 1) illumination and 2) reflectance [24]. Illumination is proportional to the overall light levels while reflectance is proportional to the light reflected from objects. It is the reflectance that gives objects their perceived shape, color, and other discriminating characteristics. The key point is that the dynamic range (DR) of the illumination is significantly larger than the DR of the reflectance. Fig. 3 shows that the contrast between a moonlit night and a sunny day can be 100 000:1 (100 dB) while the difference in reflectance of a diffuse white surface and a diffuse black surface is closer to 100:1 (40 dB).
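The decibel figures quoted for the two contrast ratios are consistent with the 20·log10 convention. The short sketch below reproduces them and also prints the base-2 logarithm of each ratio as a rough, noise-free indication of how many linear bits are needed to span that range; the function names are hypothetical and the bit estimate is an illustration only, not a figure from the article.

```python
import math

def contrast_ratio_to_db(ratio):
    """Convert a contrast ratio (e.g. 100000 for 100 000:1) to decibels."""
    return 20.0 * math.log10(ratio)

def linear_bits_to_span(ratio):
    """Rough number of linear bits needed to cover the ratio (ignores noise)."""
    return math.log2(ratio)

for label, ratio in (("illumination", 100_000), ("reflectance", 100)):
    print(label,
          round(contrast_ratio_to_db(ratio), 1), "dB,",
          round(linear_bits_to_span(ratio), 1), "bits")
```

The gap between the two results is the motivation for removing the illumination component before digitizing.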

The image in Fig. 3 shows patches of a sidewalk under two different illuminations. Assuming the reflectance of the patches is the same, the light intensity incident on a pixel viewing the patch in the shadow is simply a scaled value of the light intensity in the brighter portion of the image. The scaling factor is the proportional change in light intensity. More generally, for a given image, $I_{\text{base}}$, taken at a base illumination level (or equivalently, base image exposure), a change in overall illumination (or change in image exposure) is captured by a scaling factor, $\alpha$

$$I^{*} = \alpha I_{\text{base}}. \tag{1}$$

Further, the photon-to-electron-to-voltage conversion for a pixel is approximately linear so that the voltage read from the pixels is also proportional to $\alpha$.

Linear images require a large bit-depth to capture the DR of illumination within a natural scene. When producing an image for human viewing, the linear proportionality to light intensity is generally lost due to gamma compression, tone-mapping, and other nonlinear image processing. After the nonlinear compression and processing, this bit-depth can be reduced, mostly due to humans’ logarithmic perception of light [24]. The important point is that feature descriptors and detection algorithms are typically designed and specified on these nonlinearly compressed images. If we desire to map any of the feature extraction directly onto an image sensor, we must account for the larger DR of the linear pixel values.

B. Illumination and Image Gradients

Image gradients are composed of two sub-gradient components, a horizontal gradient $G_H$ and a vertical gradient $G_V$. For a pixel $P_{j,k}$ at row $j$, column $k$, the gradient components are expressed as the difference of pixels in their respective directions

$$G_{H_{j,k}} = P_{j,k-1} - P_{j,k+1} \tag{2}$$

$$G_{V_{j,k}} = P_{j-1,k} - P_{j+1,k}. \tag{3}$$

Equivalently, each component can be computed by convolving the filters $[-1\ 0\ 1]$ and $[-1\ 0\ 1]^{T}$ with the image. Note that these are centered gradients, which were empirically shown to yield higher accuracy than simply taking differences of adjacent pixels [20].
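As a concrete illustration of the centered differences in (2) and (3), the following sketch computes both components for the interior pixels of a small image stored as a list of rows. The function name `centered_gradients` and the decision to leave border pixels at zero are assumptions made for this example; the article does not specify border handling here.

```python
def centered_gradients(image):
    """Return (g_h, g_v) using the centered differences of Eqs. (2) and (3).

    image: 2-D list indexed as image[j][k] (row j, column k).
    Border pixels are left at 0, an assumption made for this sketch.
    """
    rows, cols = len(image), len(image[0])
    g_h = [[0] * cols for _ in range(rows)]
    g_v = [[0] * cols for _ in range(rows)]
    for j in range(1, rows - 1):
        for k in range(1, cols - 1):
            g_h[j][k] = image[j][k - 1] - image[j][k + 1]  # Eq. (2)
            g_v[j][k] = image[j - 1][k] - image[j + 1][k]  # Eq. (3)
    return g_h, g_v

image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
g_h, g_v = centered_gradients(image)
print(g_h[1][1], g_v[1][1])  # 4 - 6 and 2 - 8
```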

Gradients calculated from linear images have the same proportionality to light intensity as the linear images themselves, where the illumination (or image exposure) also scales the gradient by the same factor $\alpha$

$$\alpha G_{H_{j,k}} = \alpha P_{j,k-1} - \alpha P_{j,k+1}. \tag{4}$$

Thus, the gradients are directly proportional to the illumination and the gradient components must have the same bit-depth (plus a sign bit) as the linear image itself.

Our system study in [12] recommends that instead of taking the differences of linear pixels, one should take the differences in the log of the pixels. This is equivalent to the log of the ratio. Computationally, when quantizing the log-gradients, we can drop the log for the ratio alone

$$G_{H_{j,k}} = \log\frac{P_{j,k-1}}{P_{j,k+1}} \rightarrow \frac{P_{j,k-1}}{P_{j,k+1}} \tag{5}$$

$$G_{V_{j,k}} = \log\frac{P_{j-1,k}}{P_{j+1,k}} \rightarrow \frac{P_{j-1,k}}{P_{j+1,k}}. \tag{6}$$
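The reason the logarithm can be dropped before quantization can be spelled out in two lines. The decision thresholds $t_i$ below are hypothetical symbols introduced for this note, and a natural logarithm is assumed; for another base $b$, replace $e^{t_i}$ with $b^{t_i}$.

```latex
\begin{align}
\log P_{j,k-1} - \log P_{j,k+1} &= \log\frac{P_{j,k-1}}{P_{j,k+1}}, \\
\log\frac{P_{j,k-1}}{P_{j,k+1}} > t_i
  \;&\Longleftrightarrow\;
  \frac{P_{j,k-1}}{P_{j,k+1}} > e^{t_i}.
\end{align}
```

Because the logarithm is monotonic, comparing the log-ratio against a set of thresholds gives the same decisions as comparing the ratio itself against the exponentiated thresholds, so a quantizer only needs to operate on the ratio.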

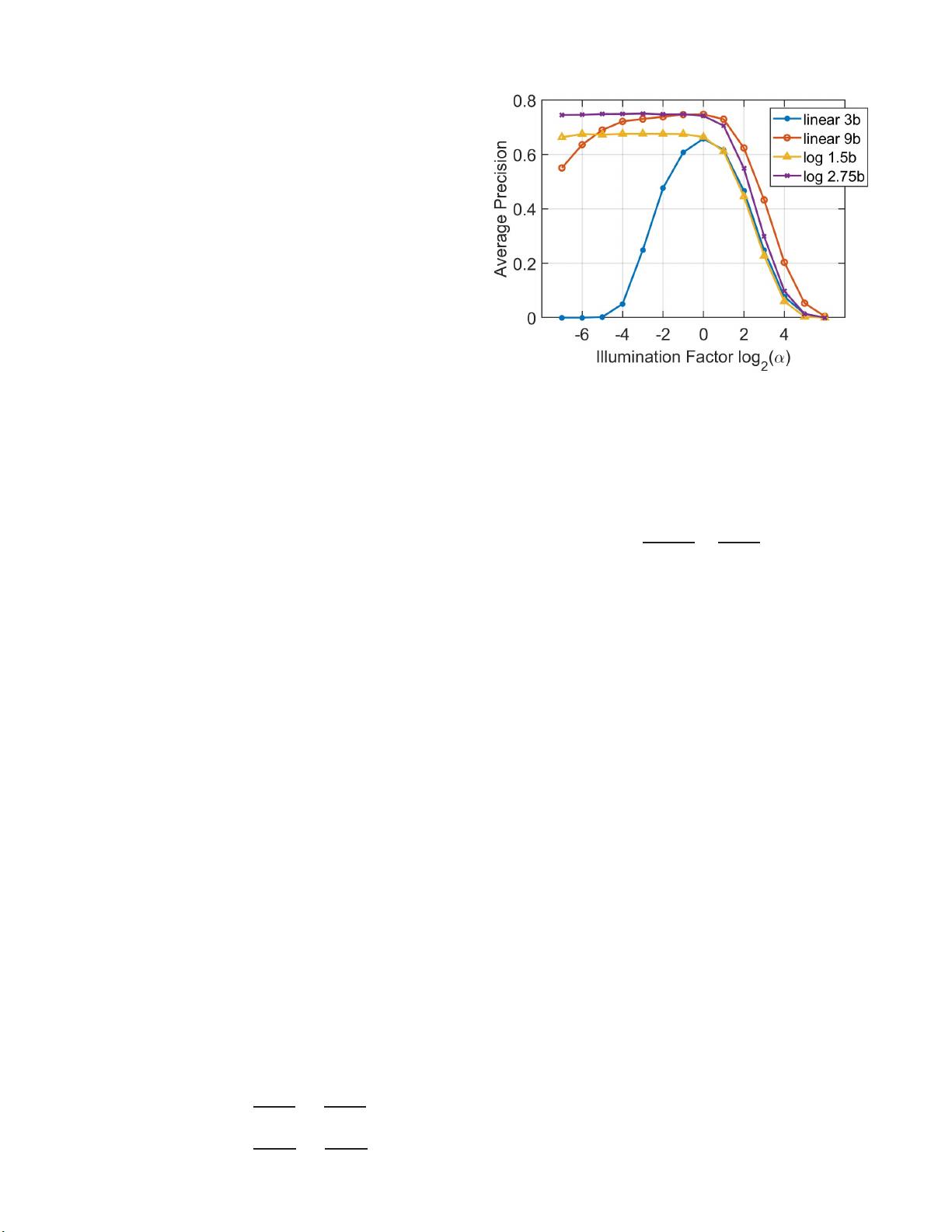

Fig. 4. AP of detection task versus illumination for quantized linear and log-gradients. Heavily quantized log-gradients are far more robust to illumination than linear gradients.

If there is a change in illumination (or image exposure), the scaling factor $\alpha$ cancels in the ratio, meaning that the gradient components are now illumination invariant

$$G_{H_{j,k}} = \frac{\alpha P_{j,k-1}}{\alpha P_{j,k+1}} = \frac{P_{j,k-1}}{P_{j,k+1}}. \tag{7}$$
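A small numerical check makes the contrast between the linear difference and the ratio explicit. The pixel values and the exposure factor below are arbitrary numbers chosen for illustration, and the helper names are hypothetical.

```python
import math

def linear_gradient(p_left, p_right):
    """Centered linear difference of two pixel values."""
    return p_left - p_right

def ratio_gradient(p_left, p_right):
    """Ratio of two pixel values, i.e. the log-gradient with the log dropped."""
    return p_left / p_right

p_left, p_right = 120.0, 80.0   # arbitrary example pixel values
alpha = 0.25                    # arbitrary exposure scaling factor

# The linear gradient scales with alpha ...
print(linear_gradient(p_left, p_right),
      linear_gradient(alpha * p_left, alpha * p_right))
# ... while the ratio is unchanged because alpha cancels.
print(ratio_gradient(p_left, p_right),
      ratio_gradient(alpha * p_left, alpha * p_right))
```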

Because the log-gradients are robust to illumination, Omid-Zohoor et al. [12] showed that they can be aggressively quantized, to as few as 1.5 bits (two decision levels) or 2.75 bits (six decision levels). Fig. 4 shows the results of an object detection task using a custom RAW, linear image database [25] and an ideal, noiseless system. Average precision (AP) on the y-axis is a measure of detector accuracy and captures the balance between the detector’s precision and recall. The x-axis is a sweep across global image exposure of the database (i.e., $\alpha$). The base image exposure at which the models were trained (zero on the x-axis) is the exposure empirically determined to yield the highest detection accuracy. At this single “good” image exposure, 3-bit linear gradients (calculated from a 2-bit image) yield a detection accuracy that might be sufficient for a wake-up detection task. However, a decrease from the ideal image exposure results in AP dropping rapidly. In an always-on system, we cannot expect to have an expensive auto-exposure algorithm, so a higher DR is required. Linear 9-bit gradients maintain accuracy across a wider range of image exposure, but the accuracy eventually falls off. Under noiseless conditions, the accuracy of both 1.5- and 2.75-bit log-gradients is constant across lower image exposures. This means that the DR from an AP point of view is decoupled from bit-depth. At higher image exposures, AP for all cases falls off as the pixels in the image begin to saturate. The AP of the low-resolution log-gradients falls faster as the pixels saturate due to the extreme quantization, whereas for 9-bit linear gradients, there is still some information captured in the gradient calculation despite pixels clipping. Note that we must use a RAW image database as opposed to a standard database like PASCAL-VOC [3], since the latter is filled with highly processed and compressed images.
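To make the "decision levels" wording concrete, the sketch below quantizes a pixel ratio with two thresholds, which yields three output codes. The threshold values (0.8 and 1.25, chosen to be symmetric in the log domain) and the function names are hypothetical and are not taken from the article. The helper `nominal_bits` simply evaluates log2 of the number of codes; two and six decision levels give roughly 1.58 and 2.81, which presumably correspond to the nominal 1.5-bit and 2.75-bit labels used in the text.

```python
import bisect
import math

# Hypothetical decision thresholds on the pixel ratio (not from the article).
THRESHOLDS_TWO_LEVELS = (0.8, 1.25)

def quantize_ratio(p_a, p_b, thresholds=THRESHOLDS_TWO_LEVELS):
    """Return the index of the interval that the ratio p_a / p_b falls into.

    With n sorted thresholds there are n + 1 possible codes (0 .. n).
    """
    return bisect.bisect_right(thresholds, p_a / p_b)

def nominal_bits(num_decision_levels):
    """log2 of the number of output codes for a given number of thresholds."""
    return math.log2(num_decision_levels + 1)

print(quantize_ratio(50, 100), quantize_ratio(100, 100), quantize_ratio(200, 100))
# Scaling both pixels by the same exposure factor leaves the code unchanged.
print(quantize_ratio(0.25 * 200, 0.25 * 100))
print(round(nominal_bits(2), 2), round(nominal_bits(6), 2))
```

With the two example thresholds, code 0 can be read as a negative gradient, code 1 as no significant gradient, and code 2 as a positive gradient.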
