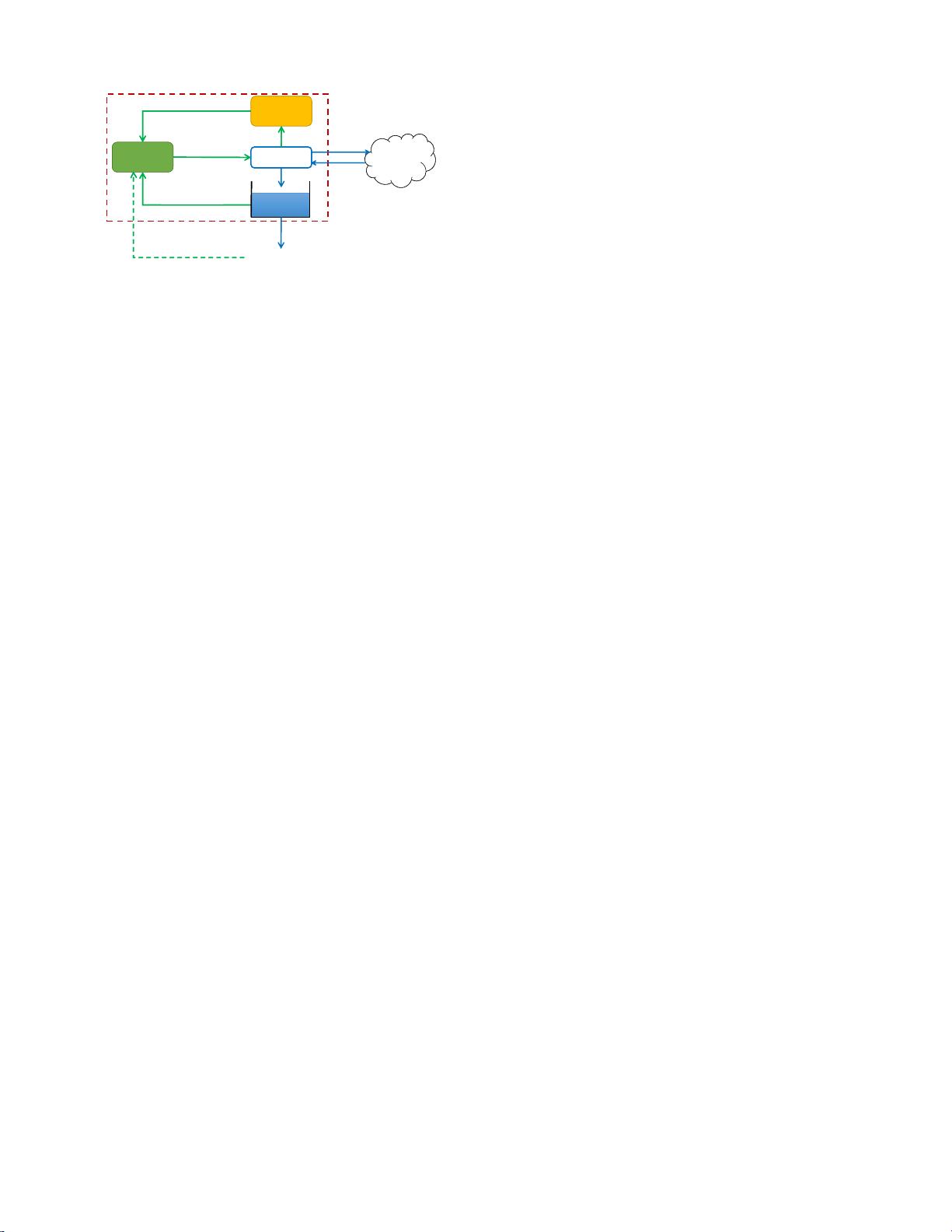

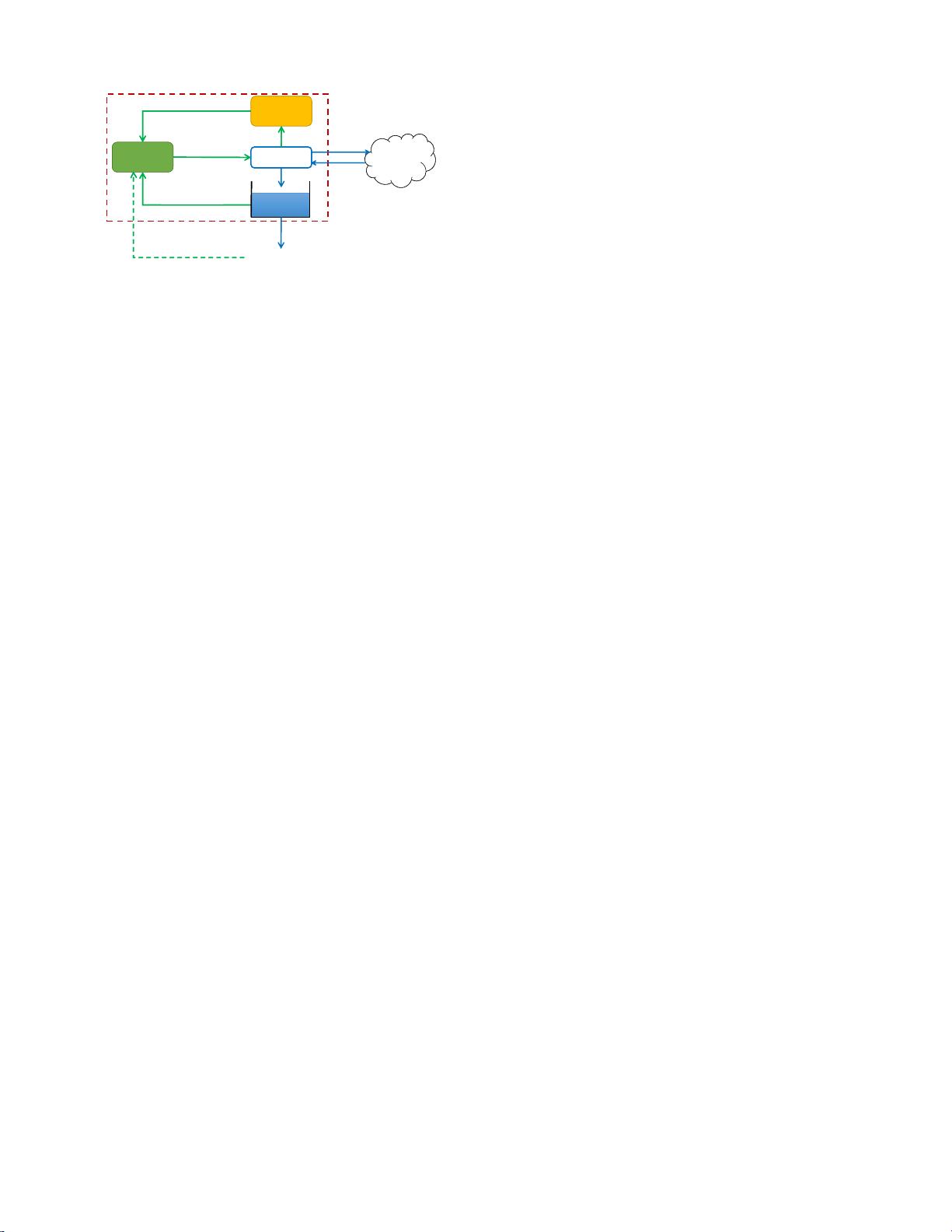

QoE

InternetInternet

Throughput

Predictor

Throughput

Predictor

Bitrate

Controller

Bitrate

Controller

HTTPHTTP

GET

Chunk

Buffer

Occupancy

Video Player

BufferBufferBuffer

End User

Bitrate

Throughput

Prediction

Figure 1: Abstract model of DASH players

is empty and cannot render the video; (2) delivering as high

a playback bitrate as possible within the throughput con-

straints; (3) minimizing startup delay so that the user does

not quit while waiting for the video to load; and (4) keeping

the playback as smooth as possible by avoiding frequent or

large bitrate jumps [24, 35].

To see why these objectives are conflicting, let us con-

sider two extreme solutions. A trivial solution to minimize

rebuffering and the startup delay would be to always pick

the lowest bitrate, but it conflicts with the goal of deliver-

ing high bitrate. Conversely, picking the highest available

bitrate may lead to many rebuffering events. Similarly, the

goal of maintaining a smooth playback may also conflict if

the optimal choice to simultaneously minimize rebuffering

and maximizing average bitrate is to switch bitrates for ev-

ery chunk.

The focus of this paper is on client-side adaptation solu-

tions. Other complementary work includes the use of server-

side bitrate switching (e.g., [37, 18]), TCP changes to avoid

bursts (e.g., [27]), and in-network throughput management

and caching (e.g., [31, 45, 42]). We focus on the client-side

problem for two key reasons. First, client-side solutions of-

fer the most immediately deployable alternative in contrast

to solutions that require in-network support (e.g., [31, 45,

42]), server-side software changes (e.g., [37, 18]), or modi-

fications to lower-layer transport protocols (e.g., [27, 28, 39,

29, 36]). Second, the client is often in the best position to

quickly detect performance issues and respond to dynam-

ics. That said, we believe that the formal foundations and

algorithms we develop can be equally applied to these other

deployment scenarios.

Many measurement studies have shown the poor perfor-

mance of state-of-art video players with respect to these QoE

measures (e.g., [46, 34, 32]). These studies show that most

problems are not artifacts of specific players but manifest

across all state-of-art players such as SmoothStreaming [13],

Netflix [11], Adobe OSMF [3], and Akamai HD [4]. For

brevity we do not reproduce these results here but refer in-

terested readers to prior work (e.g., [46, 34, 32]).

To alleviate these problems, there have been several recent

proposals in the research literature (e.g., [47, 34, 18, 33, 38]).

At a high level, these solutions can be roughly divided into

two categories: (1) rate-based algorithms and (2) buffer-

based algorithms. Video players with rate-based methods

essentially pick the highest possible bitrate based on the es-

timated available throughput. However, as shown in prior

work throughput estimation on top of HTTP suffers from

significant biases [32], which leads to problems with tradi-

tional rate-based approaches. Some solutions try to work

around these biases by either smoothing out throughput esti-

mates [47] or choosing better scheduling strategies [34]. On

the other hand, recent work makes a case for buffer-based al-

gorithms [33]. Rather than using throughput estimates, this

class of algorithms uses buffer occupancy as the feedback

signal, and designs mechanisms to keep the buffer occu-

pancy at a desired level, essentially discarding any available

throughput information.

Despite the broad interest in this topic, what is critically

lacking today is a principled understanding of bitrate adapta-

tion algorithms. Each aforementioned solution offers point

heuristics that work under specific (and implicit) environ-

mental assumptions. While each approach seen in isolation

has been shown to outperform commercial players, there is

little effort to systematically compare how different classes

of algorithms stack up against each other or which of these

technical components are critical, or how robust these algo-

rithms are across different operating regimes (e.g., through-

put stability, buffer size, number of bitrate levels). Further-

more, many of these algorithms even fail to formally state

what objective they seek to optimize making it harder to con-

duct a meaningful comparison.

Our first-order goal in this work is to bring some clarity

to this space. Rather than design yet another point solution,

we start by developing a first-principles approach via con-

trol theory to develop a general framework to reason about

classes of algorithms. In the next section, we use this control-

theoretic “lens” to formally define the stochastic optimiza-

tion that video bitrate adaptation algorithms try to solve.

3 Control-Theoretic Model

In this section, we develop a mathematical model of the

HTTP video streaming process and formally define the bi-

trate adaptation problem. This model gives us a framework

to compare and evaluate existing algorithms and serves as

the foundation for potential improvements.

3.1 Video Streaming Model

We model a video as a set of consecutive video segments or

chunks, V = {1, 2, · · · , K}, each of which contains L sec-

onds of video. Each chunk is encoded at different bitrates.

Let R be the set of all available bitrate levels. The video

player can choose to download chunk k at bitrate R

k

∈ R.

Let d

k

(R

k

) be the size of chunk k encoded at bitrate R

k

. In

constant bitrate (CBR) case, d

k

(R

k

) = L × R

k

, while in

variable bitrate (VBR) case the d

k

∼ R

k

relationship can

differ across chunks.

The higher bitrate is selected, the higher video quality

is perceived by the user. Let q(·) : R → R

+

be a non-

decreasing function which maps selected bitrate R

k

to video

quality perceived by user q(R

k

). Note that q(·) may depend

on the video-playing device as well as the content of the

video. For example, while on HDTV 3Mbps and 1Mbps

may lead to significant difference in user experience, the