2.2. PARALLEL PROGRAMMING GOALS 7

0.1

1

10

100

1000

10000

1975

1980

1985

1990

1995

2000

2005

2010

2015

CPU Clock Frequency / MIPS

Year

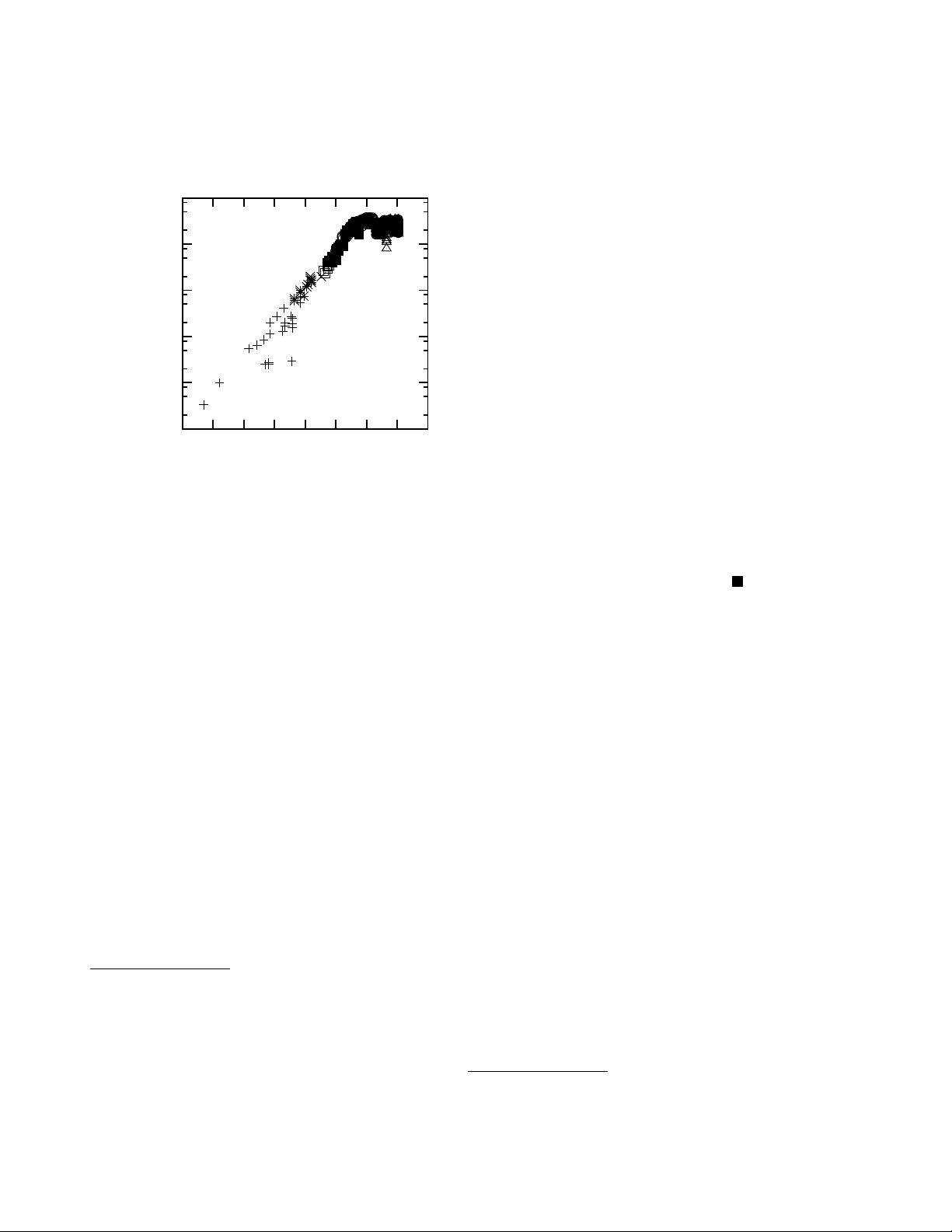

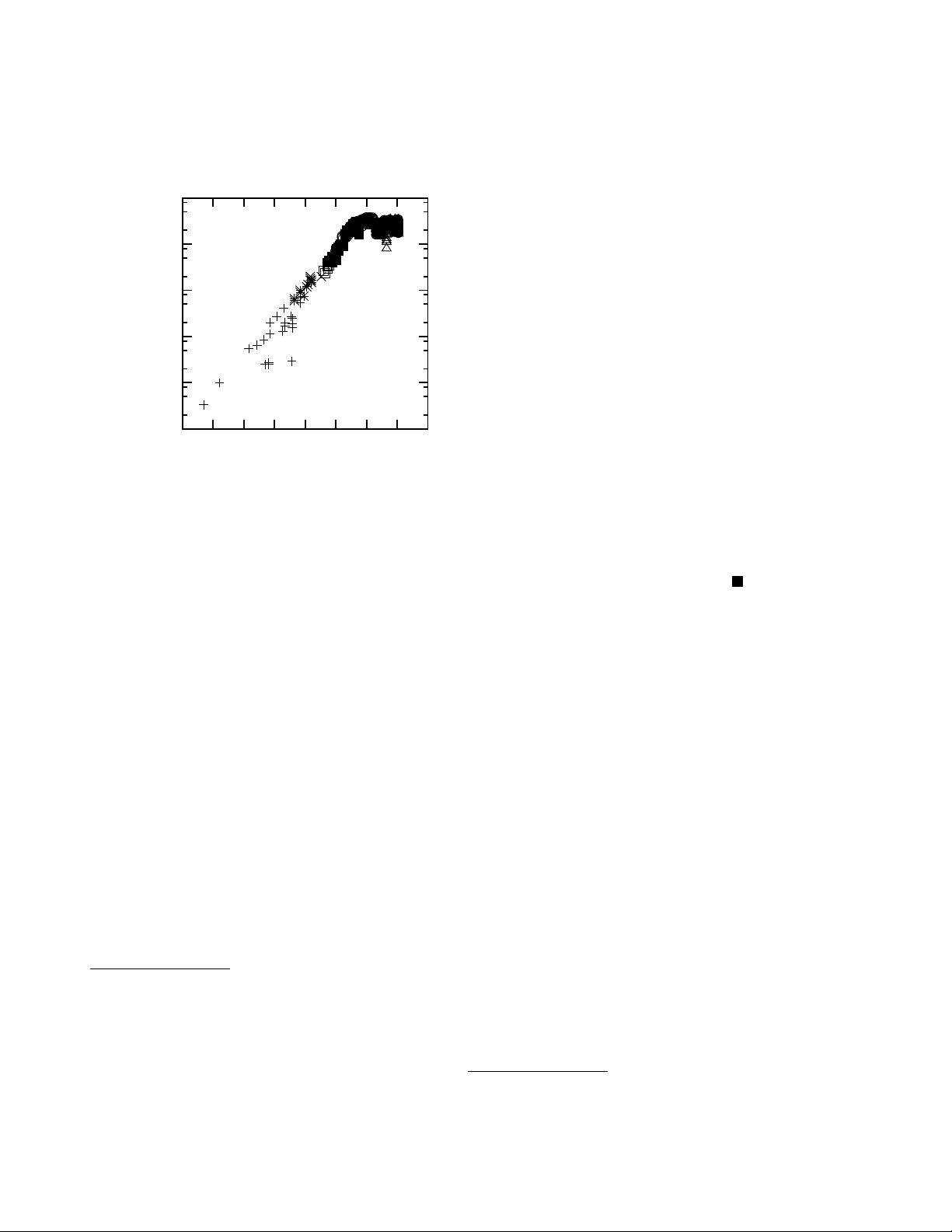

Figure 2.1: MIPS/Clock-Frequency Trend for Intel CPUs

to the fact that, although Moore’s Law continues to deliver

increases in transistor density, it has ceased to provide the

traditional single-threaded performance increases. This

can be seen in Figure 2.1.

1

, which shows that writing

single-threaded code and simply waiting a year or two for

the CPUs to catch up may no longer be an option. Given

the recent trends on the part of all major manufacturers

towards multicore/multithreaded systems, parallelism is

the way to go for those wanting the avail themselves of

the full performance of their systems.

Even so, the first goal is performance rather than scal-

ability, especially given that the easiest way to attain

linear scalability is to reduce the performance of each

CPU [

Tor01

]. Given a four-CPU system, which would

you prefer? A program that provides 100 transactions per

second on a single CPU, but does not scale at all? Or a

program that provides 10 transactions per second on a

single CPU, but scales perfectly? The first program seems

like a better bet, though the answer might change if you

happened to have a 32-CPU system.

1

This plot shows clock frequencies for newer CPUs theoretically ca-

pable of retiring one or more instructions per clock, and MIPS (millions

of instructions per second, usually from the old Dhrystone benchmark)

for older CPUs requiring multiple clocks to execute even the simplest in-

struction. The reason for shifting between these two measures is that the

newer CPUs’ ability to retire multiple instructions per clock is typically

limited by memory-system performance. Furthermore, the benchmarks

commonly used on the older CPUs are obsolete, and it is difficult to

run the newer benchmarks on systems containing the old CPUs, in part

because it is hard to find working instances of the old CPUs.

That said, just because you have multiple CPUs is not

necessarily in and of itself a reason to use them all, espe-

cially given the recent decreases in price of multi-CPU

systems. The key point to understand is that parallel pro-

gramming is primarily a performance optimization, and,

as such, it is one potential optimization of many. If your

program is fast enough as currently written, there is no rea-

son to optimize, either by parallelizing it or by applying

any of a number of potential sequential optimizations.

2

By the same token, if you are looking to apply parallelism

as an optimization to a sequential program, then you will

need to compare parallel algorithms to the best sequential

algorithms. This may require some care, as far too many

publications ignore the sequential case when analyzing

the performance of parallel algorithms.

2.2.2 Productivity

Quick Quiz 2.8:

Why all this prattling on about non-

technical issues??? And not just any non-technical issue,

but productivity of all things? Who cares?

Productivity has been becoming increasingly important

in recent decades. To see this, consider that the price of

early computers was tens of millions of dollars at a time

when engineering salaries were but a few thousand dollars

a year. If dedicating a team of ten engineers to such a

machine would improve its performance, even by only

10%, then their salaries would be repaid many times over.

One such machine was the CSIRAC, the oldest still-

intact stored-program computer, which was put into op-

eration in 1949 [

Mus04

,

Mel06

]. Because this machine

was built before the transistor era, it was constructed of

2,000 vacuum tubes, ran with a clock frequency of 1kHz,

consumed 30kW of power, and weighed more than three

metric tons. Given that this machine had but 768 words

of RAM, it is safe to say that it did not suffer from the

productivity issues that often plague today’s large-scale

software projects.

Today, it would be quite difficult to purchase a machine

with so little computing power. Perhaps the closest equiv-

alents are 8-bit embedded microprocessors exemplified

by the venerable Z80 [

Wik08

], but even the old Z80 had

a CPU clock frequency more than 1,000 times faster than

the CSIRAC. The Z80 CPU had 8,500 transistors, and

could be purchased in 2008 for less than $2 US per unit

in 1,000-unit quantities. In stark contrast to the CSIRAC,

2

Of course, if you are a hobbyist whose primary interest is writing

parallel software, that is more than enough reason to parallelize whatever

software you are interested in.