Closed-loop Matters: Dual Regression Networks for Single Image Super-Resolution

Yong Guo∗, Jian Chen∗, Jingdong Wang∗, Qi Chen, Jiezhang Cao, Zeshuai Deng, Yanwu Xu†, Mingkui Tan†

South China University of Technology, Guangzhou Laboratory, Microsoft Research Asia, Baidu Inc.

{guo.yong, sechenqi, secaojiezhang, sedengzeshuai}@mail.scut.edu.cn,

{mingkuitan, ellachen}@scut.edu.cn, jingdw@microsoft.com, ywxu@ieee.org

Abstract

Deep neural networks have exhibited promising performance in image super-resolution (SR) by learning a nonlinear mapping function from low-resolution (LR) images to high-resolution (HR) images. However, existing SR methods have two underlying limitations. First, learning the mapping function from LR to HR images is typically an ill-posed problem, because there exist infinitely many HR images that can be downsampled to the same LR image. As a result, the space of possible functions can be extremely large, which makes it hard to find a good solution. Second, paired LR-HR data may be unavailable in real-world applications, and the underlying degradation method is often unknown. In this more general case, existing SR models often suffer from an adaptation problem and yield poor performance. To address these issues, we propose a dual regression scheme that introduces an additional constraint on LR data to reduce the space of possible functions. Specifically, besides the mapping from LR to HR images, we learn an additional dual regression mapping that estimates the down-sampling kernel and reconstructs LR images, which forms a closed loop to provide additional supervision. More critically, since the dual regression process does not depend on HR images, we can learn directly from LR images. In this sense, we can easily adapt SR models to real-world data, e.g., raw video frames from YouTube. Extensive experiments with paired training data and unpaired real-world data demonstrate the superiority of our method over existing methods.
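To make the closed loop concrete, the following is a minimal sketch in pure Python under toy assumptions. Images are 2-D lists; the hypothetical primal model `upsample_nearest` stands in for the learned LR-to-HR network, the hypothetical dual model `downsample_avg` stands in for the learned HR-to-LR mapping (which, in the method described above, estimates the down-sampling kernel rather than using a fixed one), and the weight `lam=0.1` is an illustrative assumption. The loss adds a dual term comparing the re-downsampled output with the LR input; because that term needs no HR target, it alone can supervise unpaired LR data.

```python
def upsample_nearest(img, s=2):
    """Hypothetical primal model P (LR -> HR): nearest-neighbour upsampling by s."""
    return [[img[i // s][j // s] for j in range(len(img[0]) * s)]
            for i in range(len(img) * s)]


def downsample_avg(img, s=2):
    """Hypothetical dual model D (HR -> LR): fixed s x s average pooling."""
    h, w = len(img) // s, len(img[0]) // s
    return [[sum(img[i * s + a][j * s + b] for a in range(s) for b in range(s)) / (s * s)
             for j in range(w)] for i in range(h)]


def l1(a, b):
    """Mean absolute error between two equally sized 2-D lists."""
    diffs = [abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb)]
    return sum(diffs) / len(diffs)


def dual_regression_loss(x, y=None, primal=upsample_nearest, dual=downsample_avg, lam=0.1):
    """Primal loss on (P(x), y) plus lam times the dual loss on (D(P(x)), x).

    If y is None (unpaired LR data), only the dual term is used, since it
    does not require an HR target. The weighting is an assumed example.
    """
    sr = primal(x)                    # super-resolved estimate
    dual_term = l1(dual(sr), x)       # closed loop: map back to LR and compare
    if y is None:
        return lam * dual_term
    return l1(sr, y) + lam * dual_term


x = [[1, 2], [3, 4]]
print(dual_regression_loss(x))  # unpaired LR-only loss; 0.0 here, since D(P(x)) reproduces x
```

In a real model both mappings would be trainable networks, so the dual term penalizes any SR output that cannot be mapped back to its own LR input.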

1. Introduction

Deep neural networks (DNNs) have been the workhorse

of many real-world applications, including image classifi-

cation [

18, 14, 9, 15, 27, 13], video understanding [46, 45,

∗

Authors contributed equally.

†

Corresponding author.

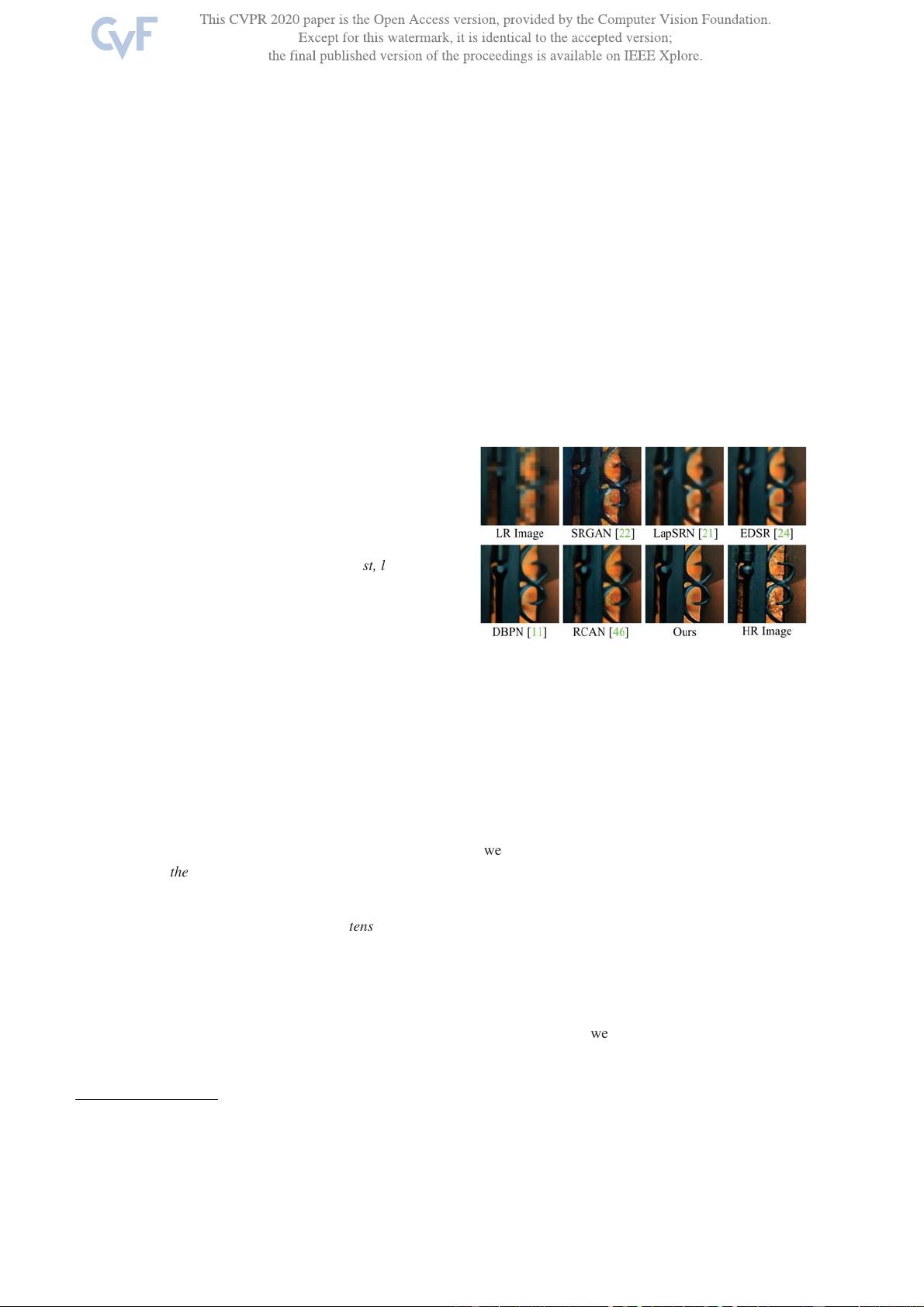

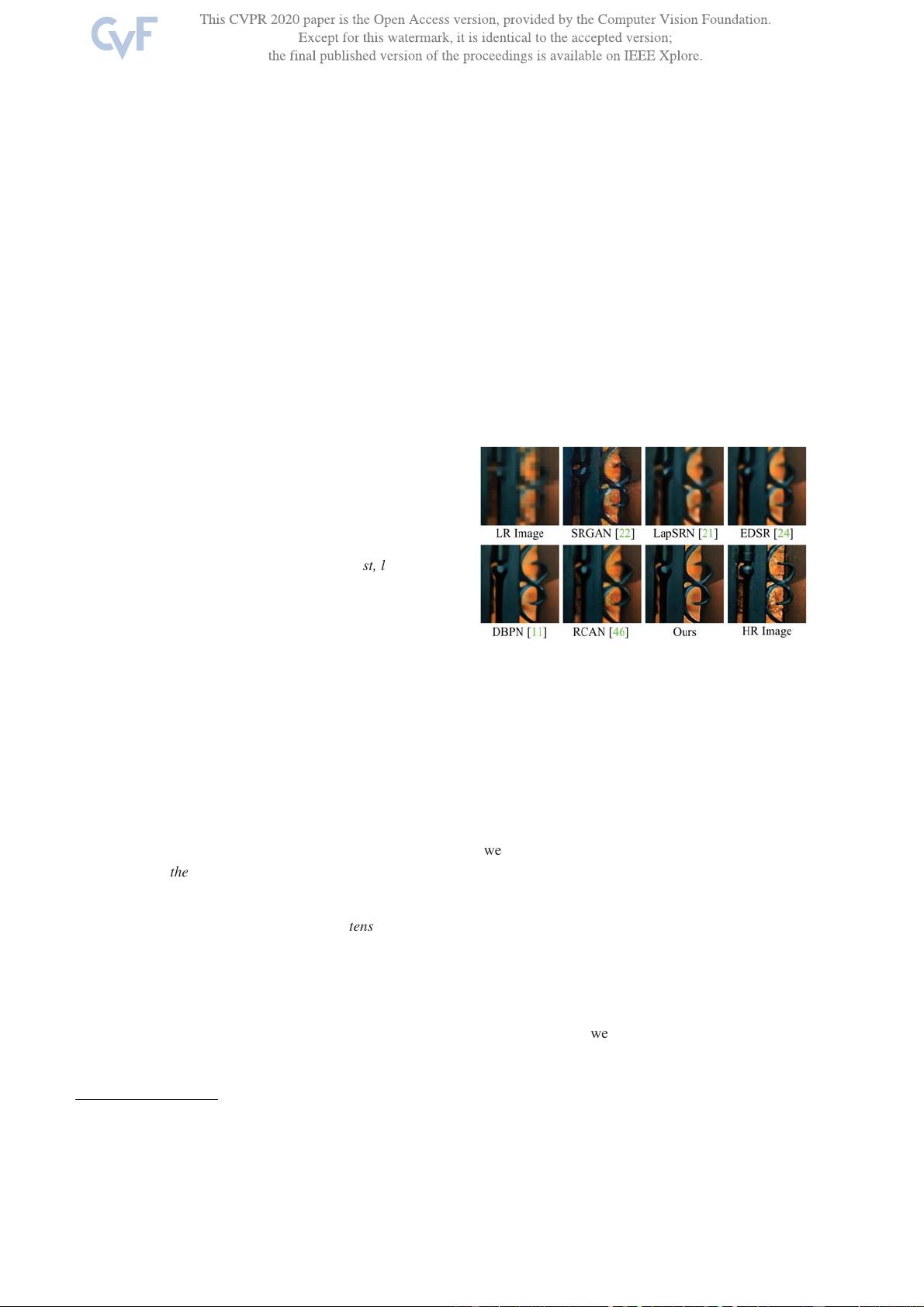

Figure 1. Performance comparison of the images produced by the

state-of-the-art methods for 8× SR. Our dual regression scheme is

able to produce sharper images than the baseline methods.

44, 6] and many other applications [7, 50, 52, 11, 20]. Re-

cently, image super-resolution (SR) has become an impor-

tant task that aims at learning a nonlinear mapping to re-

construct high-resolution (HR) images from low-resolution

(LR) images. Based on DNNs, many methods have been

proposed to improve SR performance [

51, 26, 10, 12, 49].

However, these methods may suffer from two limitations. First, learning the mapping from LR to HR images is typically an ill-posed problem, since there exist infinitely many HR images that can be downscaled to obtain the same LR image [36]. Thus, the space of possible functions that map LR to HR images becomes extremely large. As a result, the learning performance can be limited, since finding a good solution in such a large space is very hard. To improve SR performance, one can design effective models by increasing the model capacity, e.g., EDSR [26], DBPN [16], and RCAN [51]. However, these methods still suffer from the large space of possible mapping functions, resulting in limited performance and a failure to produce sharp textures [24] (see Figure 1). Thus, reducing the space of possible mapping functions to improve the training of SR models becomes an important problem.
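The ill-posedness can be checked on a toy example. The sketch below assumes 2×2 average pooling as a simplified stand-in for the degradation (the actual degradation, e.g., bicubic downsampling, may differ) and uses a hypothetical helper `hr_candidate(t)` that adds `t` times a checkerboard pattern, whose entries sum to zero within every 2×2 block, to a base HR image. Every value of `t` yields a different HR image that downsamples to the same LR image, so a single LR input is consistent with infinitely many HR images.

```python
def downsample_avg(img, s=2):
    """Average-pool a 2-D list by factor s (assumed stand-in for the real degradation)."""
    h, w = len(img) // s, len(img[0]) // s
    return [[sum(img[i * s + a][j * s + b] for a in range(s) for b in range(s)) / (s * s)
             for j in range(w)] for i in range(h)]


def hr_candidate(t):
    """Hypothetical HR image: a base image plus t times a zero-mean-per-block checkerboard."""
    base = [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]
    checker = [[(-1) ** (i + j) for j in range(4)] for i in range(4)]
    return [[base[i][j] + t * checker[i][j] for j in range(4)] for i in range(4)]


lr = downsample_avg(hr_candidate(0.0))
print(lr)  # [[1.0, 2.0], [3.0, 4.0]]
for t in (0.5, 1.0, 3.0):
    # Distinct HR images, identical LR image.
    assert hr_candidate(t) != hr_candidate(0.0)
    assert downsample_avg(hr_candidate(t)) == lr
```

Because the checkerboard is invisible after pooling, no amount of LR evidence alone can decide `t`; an SR model must pick one candidate from this unbounded family.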
