IEEE COMMUNICATIONS SURVEYS AND TUTORIALS 6

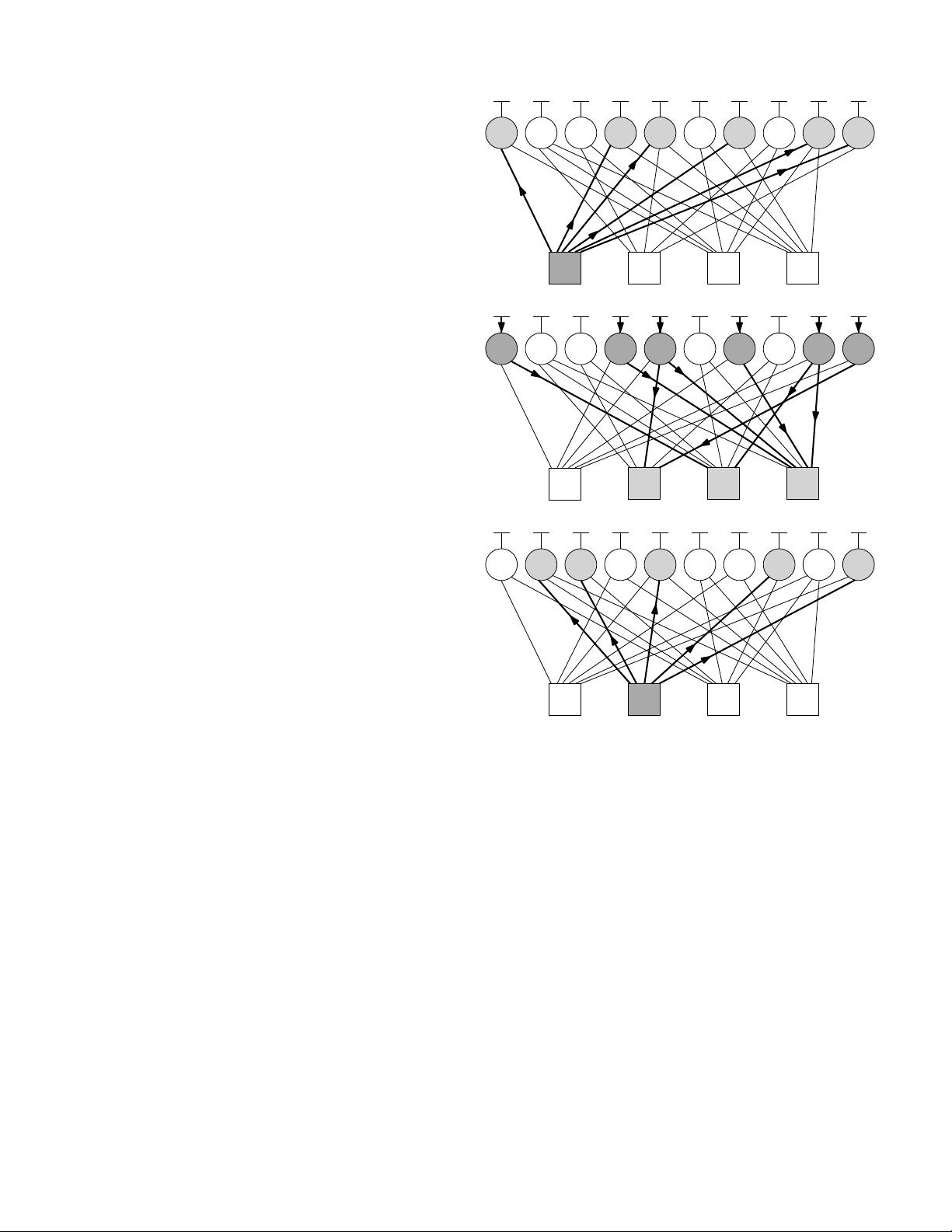

sent along every edge (in bold) to every receiving VN, shown

in light grey. In the second half-iteration, the VNs are shown

in dark grey to indicate that they are performing calculations,

while the CNs are only receiving messages, so they are shown

in light grey.

While Layered Belief Propagation also operates in an

iterative manner, it processes the nodes more sequentially

within each iteration, activating only one or a specific subset

of nodes at a time [21]. LBP is commonly operated in a CN-

centric manner, processing each CN in turn. Once a CN has

been activated, all of its connected VNs are activated before

moving on to the next CN. Once every CN has been processed,

the iteration is complete. Using Fig. 6 as an example, LBP

may commence each decoding iteration by activating CN c

1

first, sending messages to each of its connected VNs: v

1

, v

4

,

v

5

, v

7

, v

9

and v

10

. Each of these VNs may then be activated,

sending new messages to each of their connected CNs, except

c

1

. Following this, c

2

may be activated, allowing it to make

use of the new information received from v

5

and v

10

alongside

the information previously received from its other connected

VNs. This process continues until every CN has been activated,

which then marks the end of one decoding iteration.

LBP has the advantage that the information obtained during

an iteration is available to aid the remainder of the iteration.

Owing to this however, it does not have the same high level

of parallelism as the flooding schedule, possibly resulting in a

lower processing throughput and a higher processing latency.

It can also be seen that M CN activations and D

c

× M VN

activations occur per iteration, resulting in a higher computa-

tional complexity per iteration, when compared to the flooding

schedule. However, it will also be shown in Section II-C2

that CN activations can be significantly more computationally

expensive than the VN activations, hence the increased cost

is manageable. Additionally, LBP tends to converge to the

correct codeword using fewer iterations and therefore with

lower computational complexity than flooding [17], resulting

in lower complexity overall.

Informed Dynamic Scheduling inspects the messages that

are passed between the various nodes, selecting to activate

whichever node is expected to offer the greatest improvement

in belief [22]. This requires IDS to perform additional calcula-

tions in order to determine which node to activate at each stage

of the decoding process. However, IDS facilitates convergence

using fewer node activations than in either flooding or LBP,

which may lead to a lower complexity overall.

During IDS, the difference between the previous message

sent over an edge and the message that is obtained using

recently-updated information [23] is calculated. This differ-

ence is termed the residual, and represents the improvement

in belief that is achieved by the new message. Like the LBP

schedule, IDS is commonly centred on the CNs. At the start

of the iterative decoding process, the residual for each output

of each CN is calculated as the magnitude of the message to

be sent over that edge. The message with the greatest residual

is identified, and the receiving VN is then activated, sending

updated messages to each of its connected CNs. These CNs

then calculate new residuals for each of their edges as the

difference between its new message and its previous message.

˜

P

1

v

1

˜

P

2

v

2

˜

P

3

v

3

˜

P

4

v

4

˜

P

5

v

5

˜

P

6

v

6

˜

P

7

v

7

˜

P

8

v

8

˜

P

9

v

9

˜

P

10

v

10

c

1

c

2

c

3

c

4

˜

P

1

v

1

˜

P

2

v

2

˜

P

3

v

3

˜

P

4

v

4

˜

P

5

v

5

˜

P

6

v

6

˜

P

7

v

7

˜

P

8

v

8

˜

P

9

v

9

˜

P

10

v

10

c

1

c

2

c

3

c

4

˜

P

1

v

1

˜

P

2

v

2

˜

P

3

v

3

˜

P

4

v

4

˜

P

5

v

5

˜

P

6

v

6

˜

P

7

v

7

˜

P

8

v

8

˜

P

9

v

9

˜

P

10

v

10

c

1

c

2

c

3

c

4

Fig. 6. An example of the layered belief propagation schedule

All of the residuals in the graph are then compared for the

sake of identifying the new maximum, before the process is

repeated.

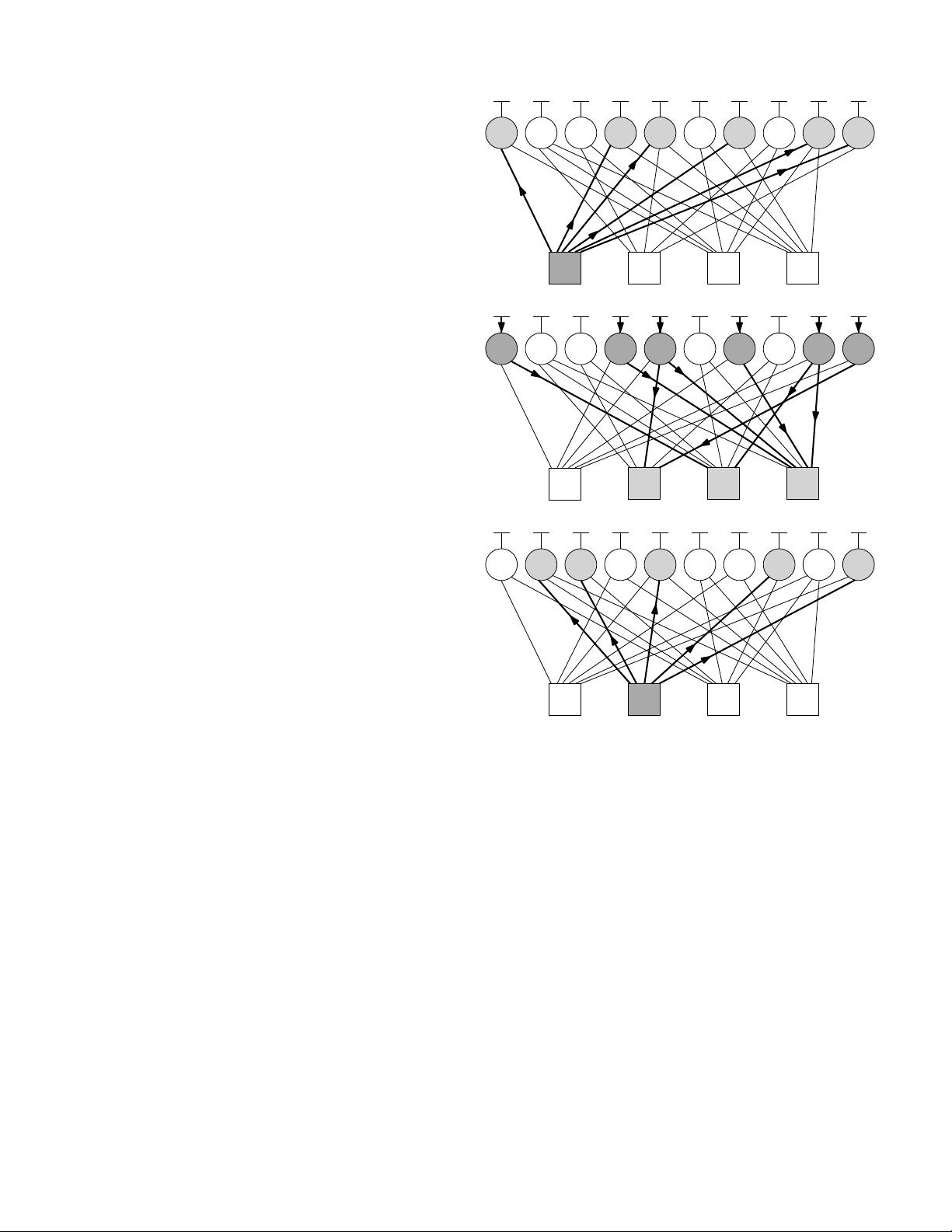

Using Fig. 7 as before, suppose that at the start of the

iterative decoding process, the message ˜r

3−8

from CN c

3

is

identified as having the highest magnitude of all the check-to-

variable messages in the graph. Owing to this, ˜r

3−8

is passed

to the VN v

8

, which is then activated, in order to obtain

the message ˜q

8−2

which is then passed to c

2

. The CN c

2

can then be activated to calculate new residuals for its other

four edges, as the difference between their previous messages

and their new messages that have been obtained using the

updated information from v

8

. These new residuals are then

compared with the others from the previous step, allowing a

new global maximum to be identified, to inform the next step

of the decoding process. Note that the next highest residual

within the factor graph does not necessarily have to originate

from the most recently updated CN c

2

. In the example seen in

Fig. 7, it can be seen that c

2

is activated to calculate residuals

but it is ˜r

1−4

from CN c

1

to VN v

4

that is sent. This implies