Phrase Type Sensitive Tensor Indexing Model for Semantic Composition

Yu Zhao¹, Zhiyuan Liu¹∗, Maosong Sun¹,²

¹ Department of Computer Science and Technology, State Key Lab on Intelligent Technology and Systems, National Lab for Information Science and Technology, Tsinghua University, Beijing 100084, China

² Jiangsu Collaborative Innovation Center for Language Competence, Jiangsu 221009, China

zhaoyu.thu@gmail.com, {liuzy, sms}@tsinghua.edu.cn

Abstract

Compositional semantics aims at constructing the meaning of phrases or sentences according to the compositionality of word meanings. In this paper, we propose to synchronously learn the representations of individual words and of extracted high-frequency phrases. The representations of extracted phrases are treated as the gold standard for constructing more general operations that compose the representations of unseen phrases. We propose a grammatical-type-specific model that improves composition flexibility by adopting vector-tensor-vector operations. Our model embodies the compositional characteristics of the traditional additive and multiplicative models. Empirical results show that our model outperforms state-of-the-art composition methods on the task of computing phrase similarities.

Introduction

Compositional semantics aims at constructing the meaning of phrases or sentences according to the compositionality of word meanings. Recently, continuous word representations have been widely used to represent the semantic meaning of words (Turney and Pantel 2010), and they have achieved great success in various NLP tasks such as semantic role labeling (Collobert and Weston 2008), paraphrase detection (Socher et al. 2011a), sentiment analysis (Maas et al. 2011) and syntactic parsing (Socher et al. 2013a). Beyond word representations, it is also essential to find appropriate representations for phrases and longer utterances. Hence, compositional distributional semantic models (Marelli et al. 2014; Baroni and Zamparelli 2010; Grefenstette and Sadrzadeh 2011) have been proposed to construct the representations of phrases or sentences from the representations of the words they contain.

Most existing compositional distributional semantic models can be divided into the following two main types:

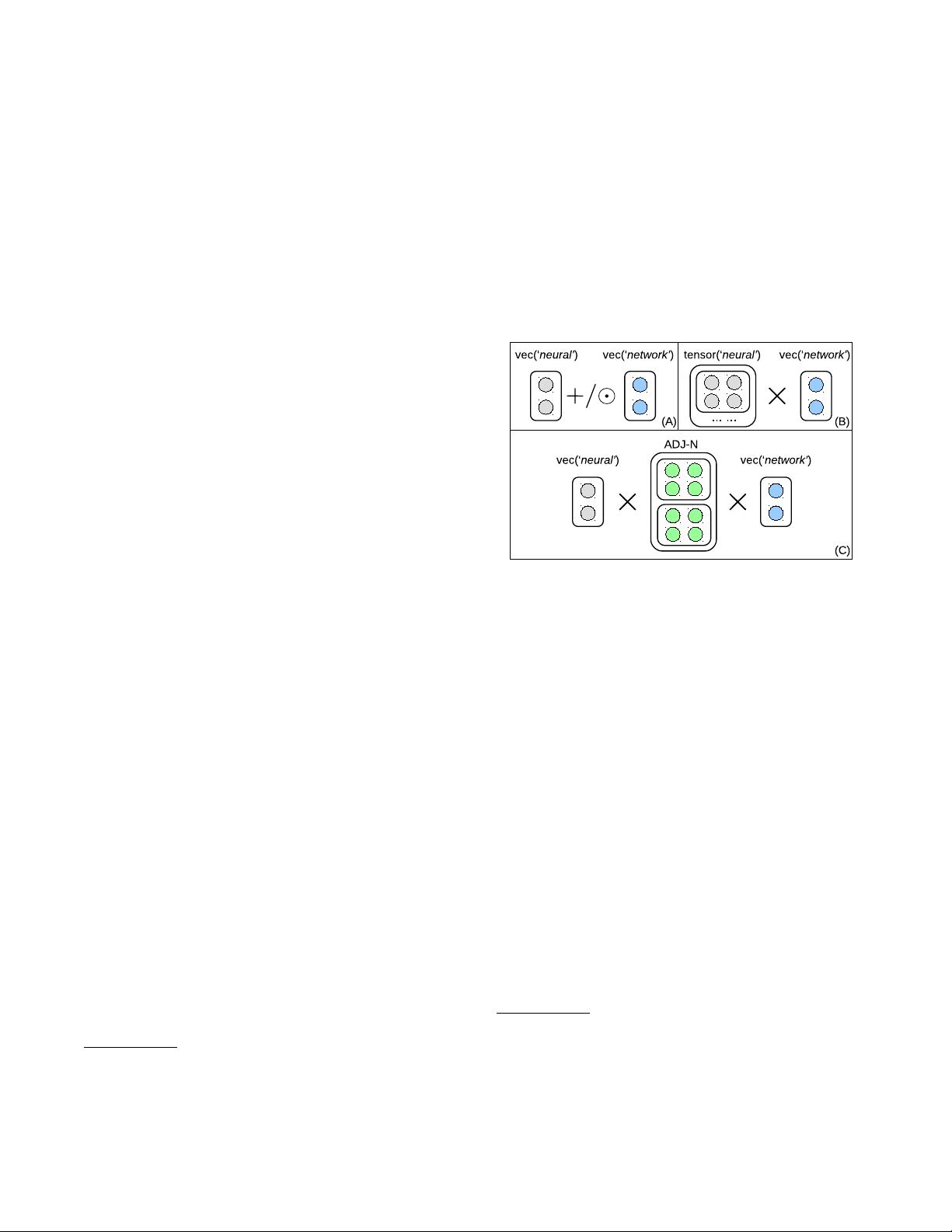

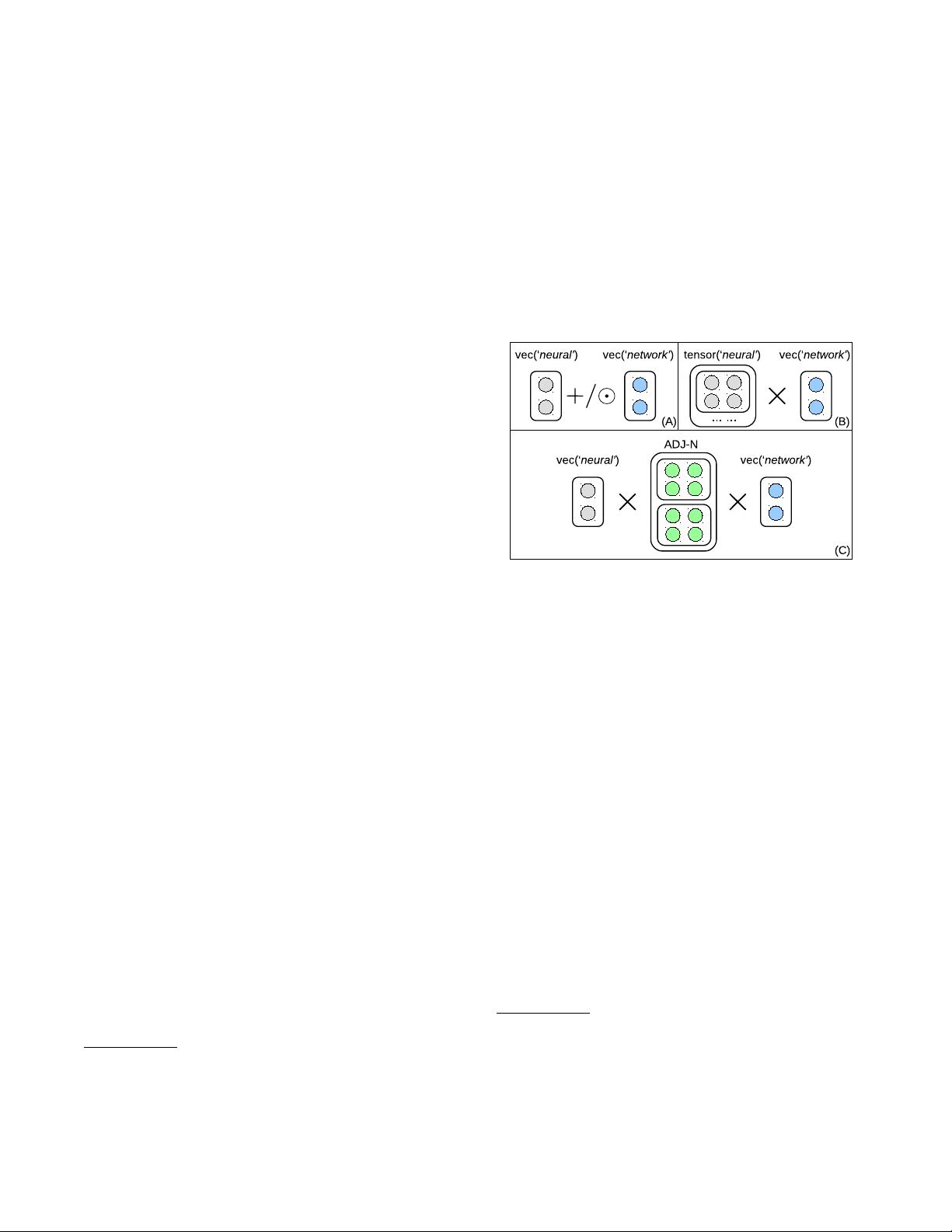

Vector-Vector Composition. These models use element-

wise composition operations to compose word vectors in-

to phrase vectors, as shown in Figure 1(A). For example,

Mitchell and Lapata (2010) propose to use additive model

∗

Corresponding author: Zhiyuan Liu (liuzy@tsinghua.edu.cn).

Copyright

c

2015, Association for the Advancement of Artificial

Intelligence (www.aaai.org). All rights reserved.

Figure 1: Comparison of different semantic composition

models, including (a) vector-vector composition, (b) tensor-

vector composition and (c) vector-tensor-vector composi-

tion.

(z = x + y) and multiplicative model (z = x y). How-

ever, both of the operations are commutative, which may

be unreasonable for semantic composition since the order

of word sequences in a phrase may influence its meaning.

For instance, machine learning and learning machine have

different meanings, while the commutative functions will re-

turn the same representation for them.
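To make the commutativity problem concrete, the following is a minimal sketch of the two element-wise operations in plain Python. The word vectors for machine and learning are made-up toy values (an assumption for illustration, not learned embeddings), and the function names `additive` and `multiplicative` are hypothetical.

```python
# Hypothetical toy word vectors; real models would use learned embeddings.
machine = [0.2, 0.7, -0.1]
learning = [0.5, -0.3, 0.4]


def additive(x, y):
    """Additive composition z = x + y (element-wise sum)."""
    return [a + b for a, b in zip(x, y)]


def multiplicative(x, y):
    """Multiplicative composition z = x ⊙ y (element-wise product)."""
    return [a * b for a, b in zip(x, y)]


# Both operations are commutative, so swapping the word order
# ("machine learning" vs. "learning machine") yields the same phrase vector.
print(additive(machine, learning) == additive(learning, machine))
print(multiplicative(machine, learning) == multiplicative(learning, machine))
```

Both comparisons print True: element-wise sums and products do not depend on argument order, so neither operation can distinguish the two phrases, which is exactly the limitation described above.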

Tensor-Vector Composition. To improve compositional capability, more complex word representations have been proposed to replace simple vector-space models, including matrices, tensors, or a combination of vectors and matrices (Erk and Padó 2008; Baroni and Zamparelli 2010; Yessenalina and Cardie 2011; Coecke, Sadrzadeh, and Clark 2010; Grefenstette et al. 2013). In this way, semantic composition is conducted via operations such as the tensor-vector product, as demonstrated in Figure 1(b).¹ Despite their powerful capability, these methods have several disadvantages that reduce their scalability: (1) They have to learn matrices or tensors for each word, which is time-consuming. Moreover,

¹ In accordance with grammatical structure, words with k arguments are represented by rank-(k + 1) tensors. Hence, the word neural is represented by a second-order tensor, i.e., a matrix. An example of a word represented by a third-order tensor is the verb loves in the clause John loves Mary.