D-20 Classification: Theoretical Background

The second problem of the Bayes classifier is how to obtain the a priori probability P (x|w_i). In prin-

ciple, a histogram over all feature vectors of the training set can be used. The apparent solution is to

subdivide each dimension of the feature space into a number of bins. But as the number of bins grows

exponentially with the dimension of the feature space, you face the so-called ’curse of dimensionality’.

That is, to get a good approximation for P (x|w_i), you need more memory than can be handled properly.

With another solution, instead of keeping the size of a bin constant and varying the number of samples in

the bin, the number of samples k for a class w_i is kept constant while varying the volume of the region

in space around the feature vector x that contains the k samples (v(x, w_i)). The volume depends on the

k nearest neighbors of the class w_i, so the solution is called k nearest-neighbor density estimation. It

has the disadvantage that all training samples have to be stored with the classifier and the search for the k

nearest neighbors is rather time-consuming. Because of that, it is seldom used in practice. A solution that

can be used in practice assumes that P (x|w_i) follows a certain distribution, e.g., a normal distribution.

Then, you only have to estimate the two parameters of the normal distribution, i.e., the mean vector µ_i

and the covariance matrix Σ_i. This can be achieved, e.g., by a maximum likelihood estimator.

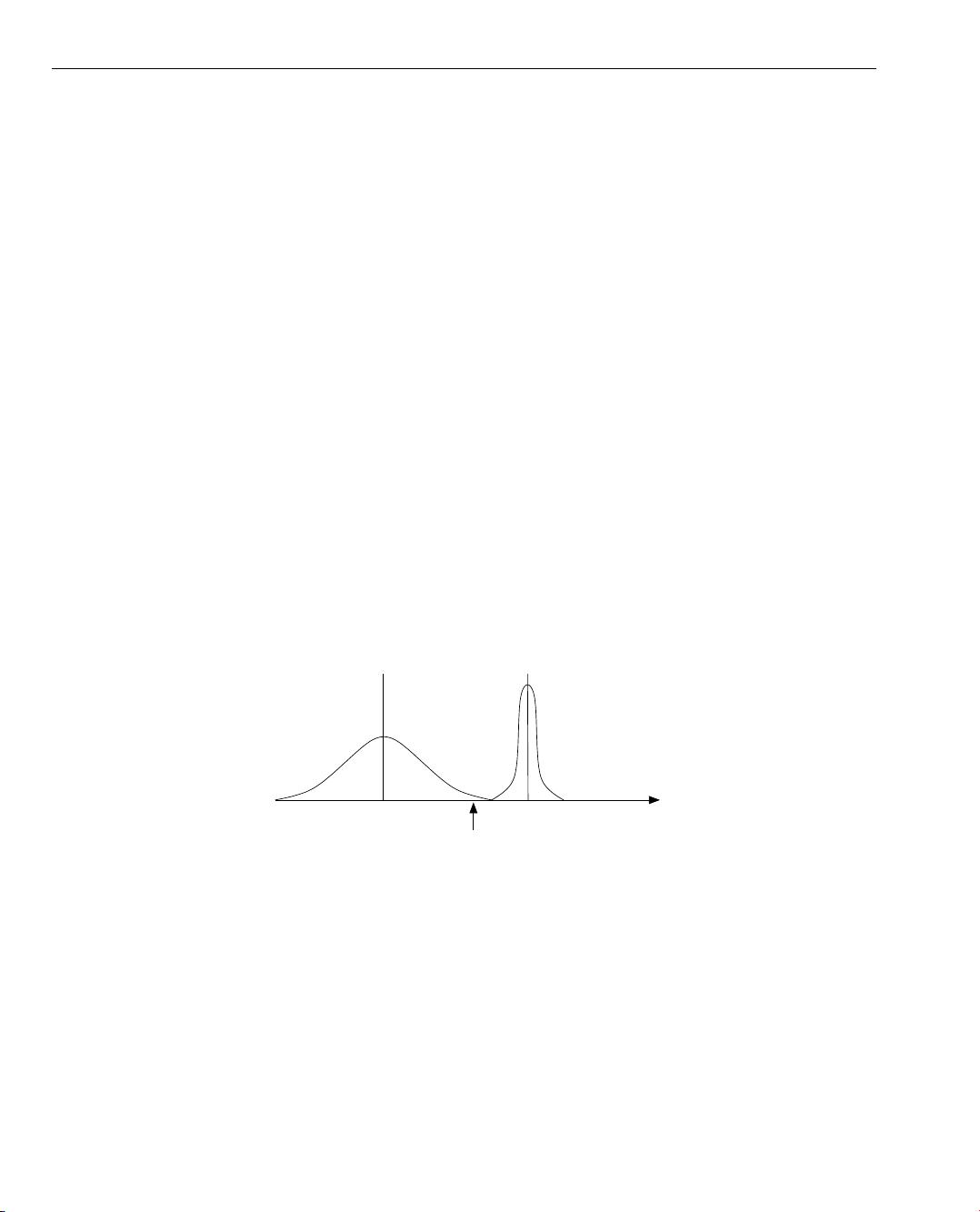

In some cases, a single normal distribution is not sufficient, as there are large variations inside a class.

The character ’a’, e.g., can be represented by ’a’ or ’a’, which have significantly different shapes. Nev-

ertheless, both belong to the same character, i.e., to the same class. Inside a class with large variations, a

mixture of l_i different densities exists. If these are again assumed to be normal distributed, we have a

Gaussian mixture model. Classifying with a Gaussian mixture model means to estimate to which specific

mixture density a sample belongs. This is done by the so-called expectation minimization algorithm.

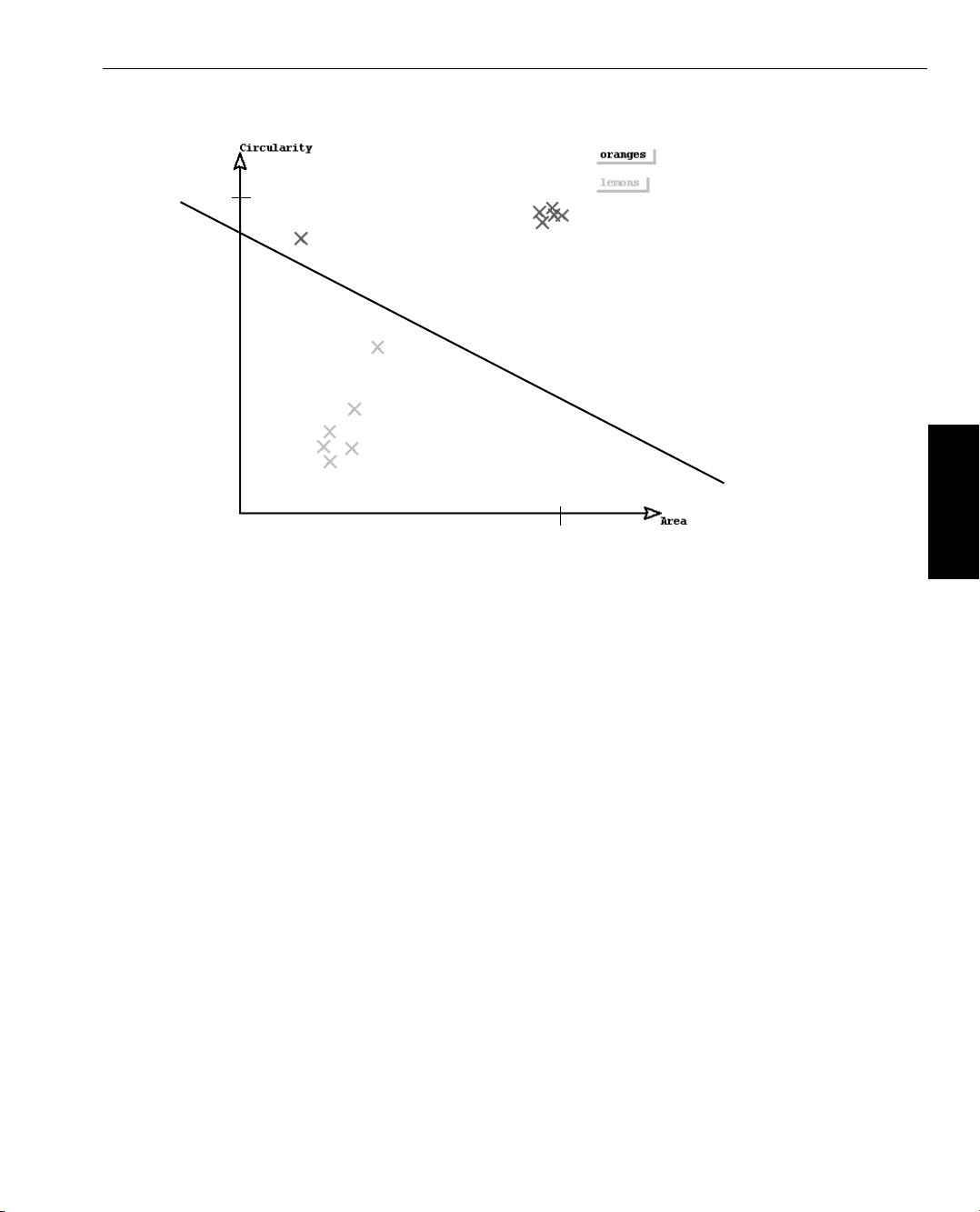

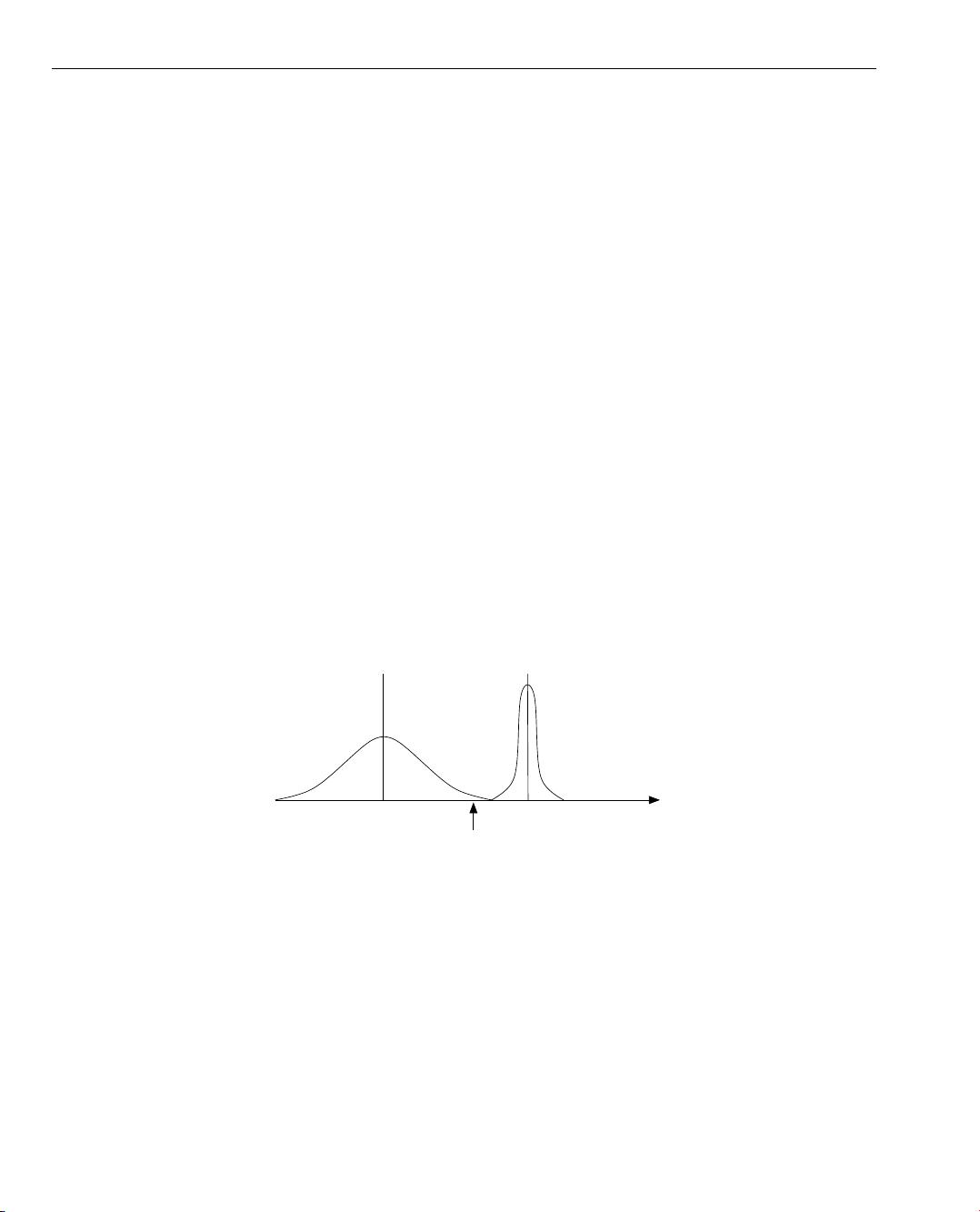

Coarsely spoken, the GMM classifier uses probability density functions of the individual classes and

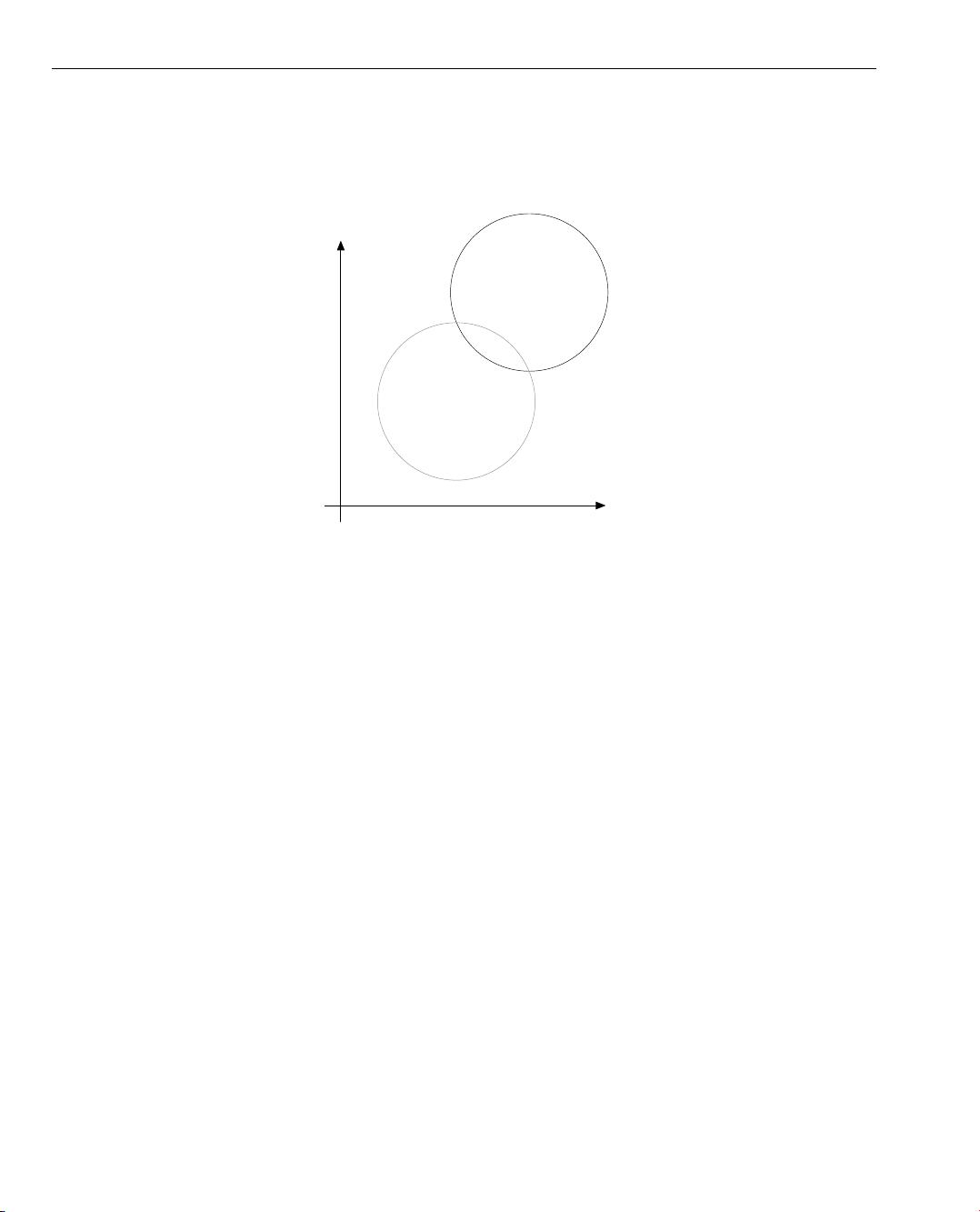

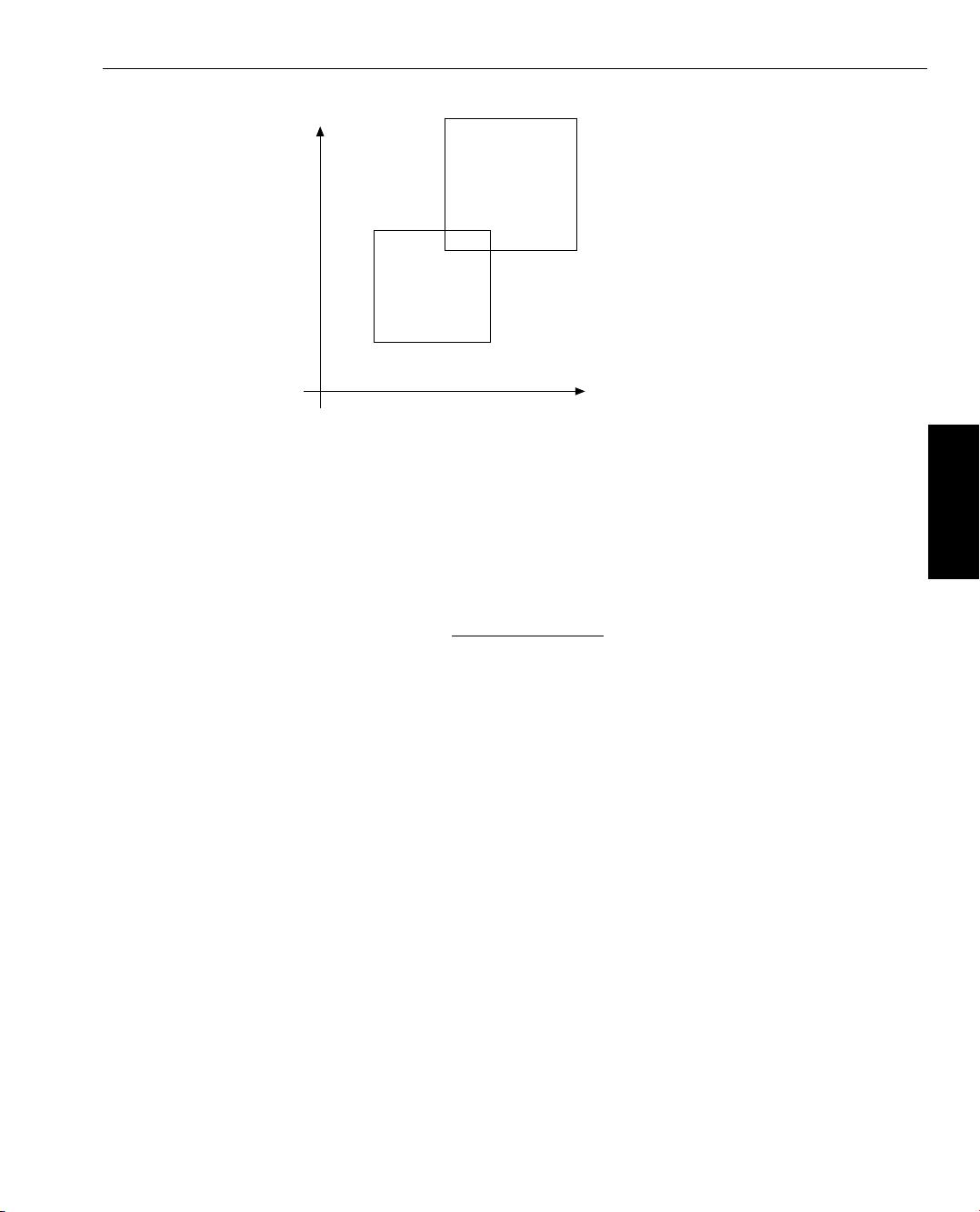

expresses them as linear combinations of Gaussian distributions (see figure 3.5). Comparing the approach

to the simple classification approaches described in section 3.2 on page 17, you can imagine the GMM

to construct n-dimensional error (covariance) ellipsoids around the cluster centers (see

figure 3.6).

Feature Vector X

Feature Vectors

Class 1 Class 2

Figure 3.5: The variance of class 1 is significantly larger than that of class 2. In such a case, the distance

to the Gauss error distribution curve is a better criteria for the class membership than the

distance to the cluster center.

GMM are reliable only for low dimensional feature vectors (approximately up to 15 features), so HAL-

CON provides GMM only for the classification of general features and image segmentation, but not for

OCR. Typical Applications are image segmentation and novelty detection. Novelty detection is specific

for GMM and means that feature vectors that do not belong to one of the trained classes can be rejected.

Note that novelty detection can also be applied with SVM, but then a specific parameter has to be set and

only two-class problems can be handled, i.e., a single class can be trained and the feature vectors that do

not belong to that single class are rejected.

There are two general approaches for the construction of a classifier. First, you can estimate the a pos-