RESEARCH ARTICLES


COGNITIVE SCIENCE

Human-level concept learning through probabilistic program induction

Brenden M. Lake,¹* Ruslan Salakhutdinov,² Joshua B. Tenenbaum³

People learning new concepts can often generalize successfully from just a single example,

yet machine learning algorithms typically require tens or hundreds of examples to

perform with similar accuracy. People can also use learned concepts in richer ways than

conventional algorithms—for action, imagination, and explanation. We present a

computational model that captures these human learning abilities for a large class of

simple visual concepts: handwritten characters from the world’s alphabets. The model

represents concepts as simple programs that best explain observed examples under a

Bayesian criterion. On a challenging one-shot classification task, the model achieves

human-level performance while outperforming recent deep learning approaches. We also

present several “visual Turing tests” probing the model’s creative generalization abilities,

which in many cases are indistinguishable from human behavior.

Despite remarkable advances in artificial

intelligence and machine learning, two

aspects of human conceptual knowledge

have eluded machine systems. First, for

most interesting kinds of natural and man-made

categories, people can learn a new concept

from just one or a handful of examples, whereas

standard algorithms in machine learning require

tens or hundreds of examples to perform simi-

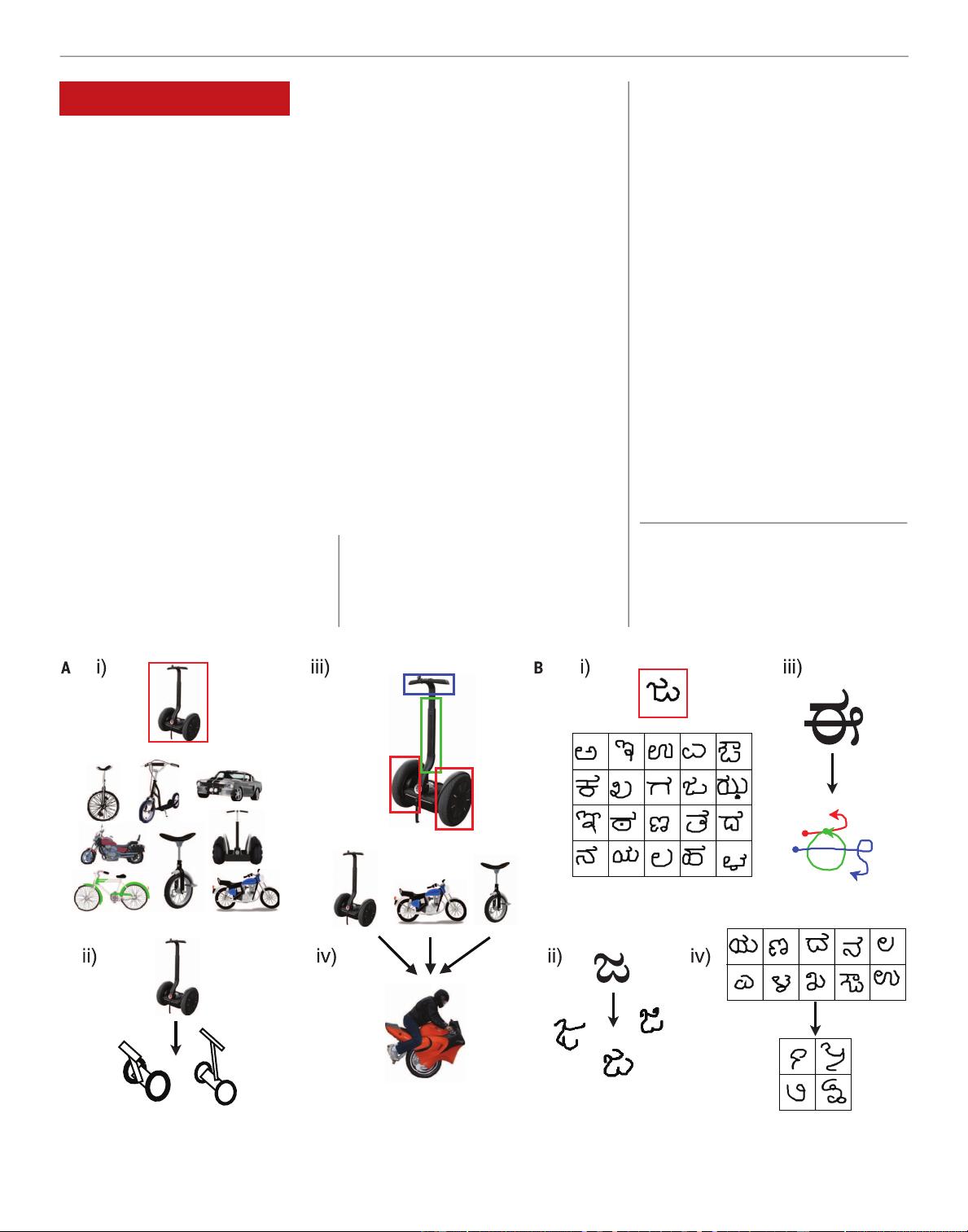

larly. For instance, people may only need to see

one example of a novel two-wheeled vehicle

(Fig. 1A) in order to grasp the boundaries of the

new concept, and even children can make meaningful

generalizations via “one-shot learning”

(1–3). In contrast, many of the leading approaches

in machine learning are also the most data-hungry,

especially “deep learning” models that have

achieved new levels of performance on object

and speech recognition benchmarks (4–9). Second,

people learn richer representations than

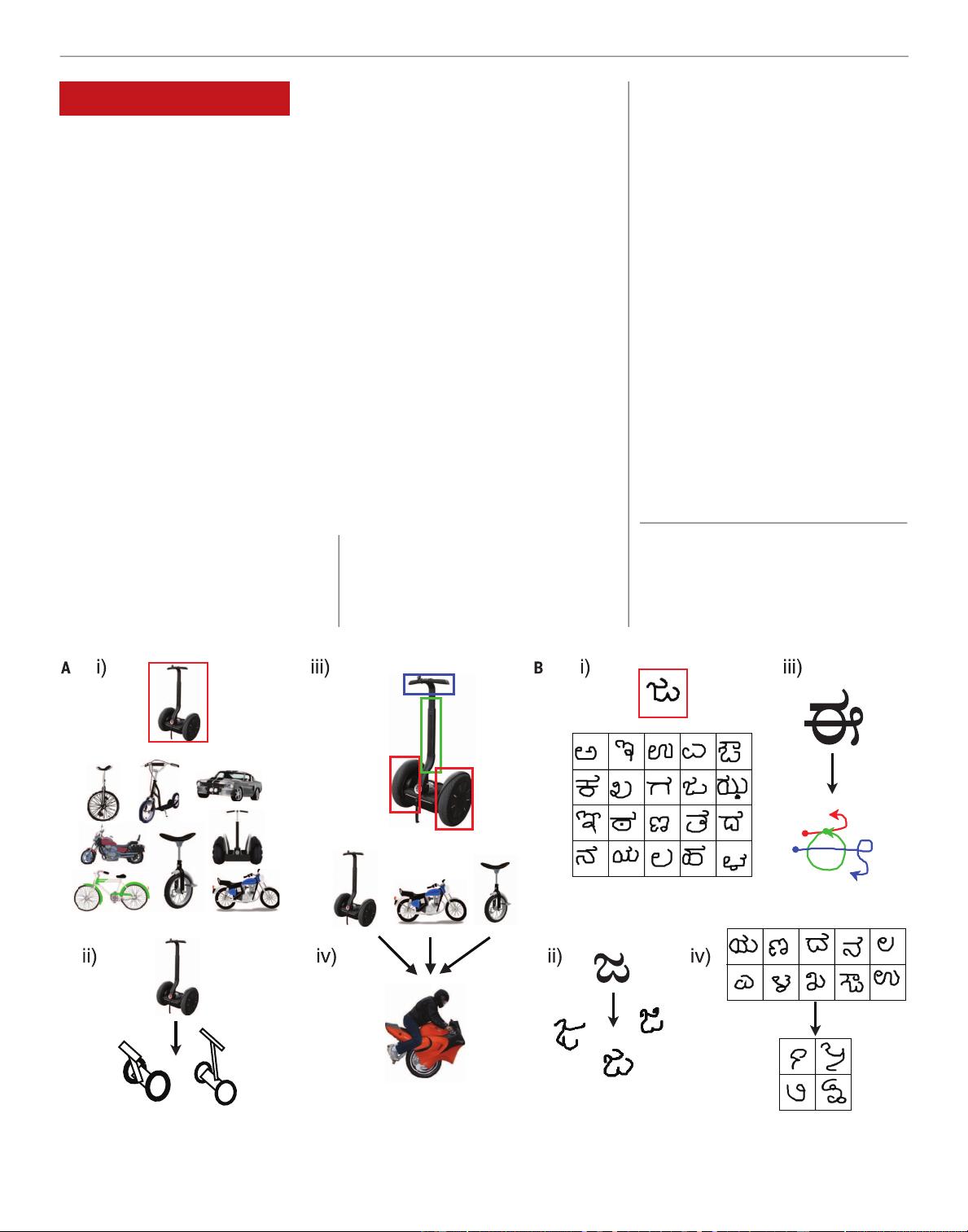

machines do, even for simple concepts (Fig. 1B),

using them for a wider range of functions, including

(Fig. 1, ii) creating new exemplars (10),

(Fig. 1, iii) parsing objects into parts and relations

(11), and (Fig. 1, iv) creating new abstract

categories of objects based on existing categories

(12, 13). In contrast, the best machine classifiers

do not perform these additional functions, which

are rarely studied and usually require specialized

algorithms. A central challenge is to explain

these two aspects of human-level concept

learning: How do people learn new concepts

from just one or a few examples? And how do

people learn such abstract, rich, and flexible representations?

An even greater challenge arises

when putting them together: How can learning

succeed from such sparse data yet also produce

such rich representations? For any theory of


1332 11 DECEMBER 2015 • VOL 350 ISSUE 6266 sciencemag.org SCIENCE

¹Center for Data Science, New York University, 726 Broadway, New York, NY 10003, USA.

²Department of Computer Science and Department of Statistics, University of Toronto, 6 King’s College Road, Toronto, ON M5S 3G4, Canada.

³Department of Brain and Cognitive Sciences, Massachusetts Institute of Technology, 77 Massachusetts Avenue, Cambridge, MA 02139, USA.

*Corresponding author. E-mail: brenden@nyu.edu

Fig. 1. People can learn rich concepts from limited data. (A and B) A single example of a new concept (red boxes) can be enough information to support

the (i) classification of new examples, (ii) generation of new examples, (iii) parsing an object into parts and relations (parts segmented by color), and (iv)

generation of new concepts from related concepts. [Image credit for (A), iv, bottom: With permission from Glenn Roberts and Motorcycle Mojo Magazine]
