Fine-Tuning Language Models from Human Preferences

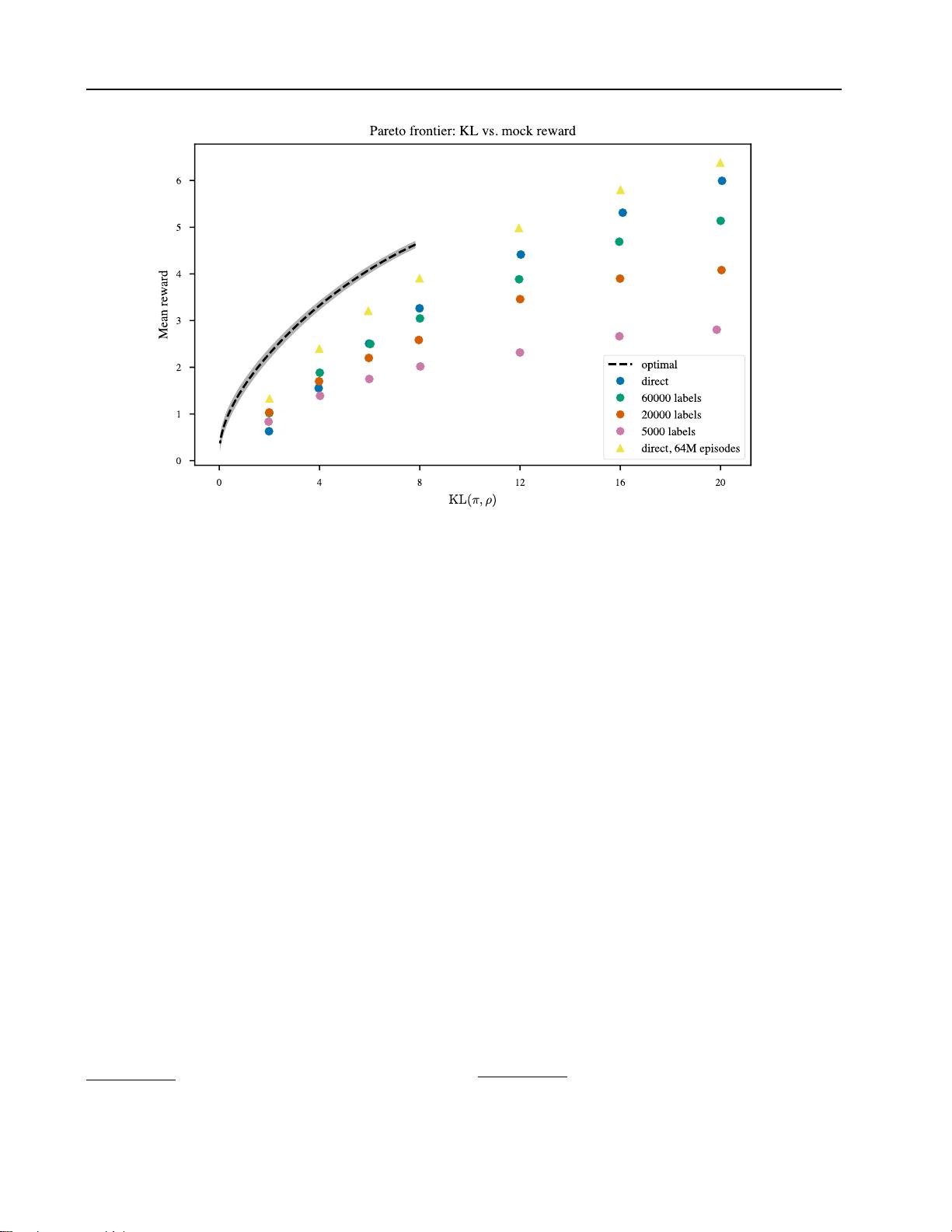

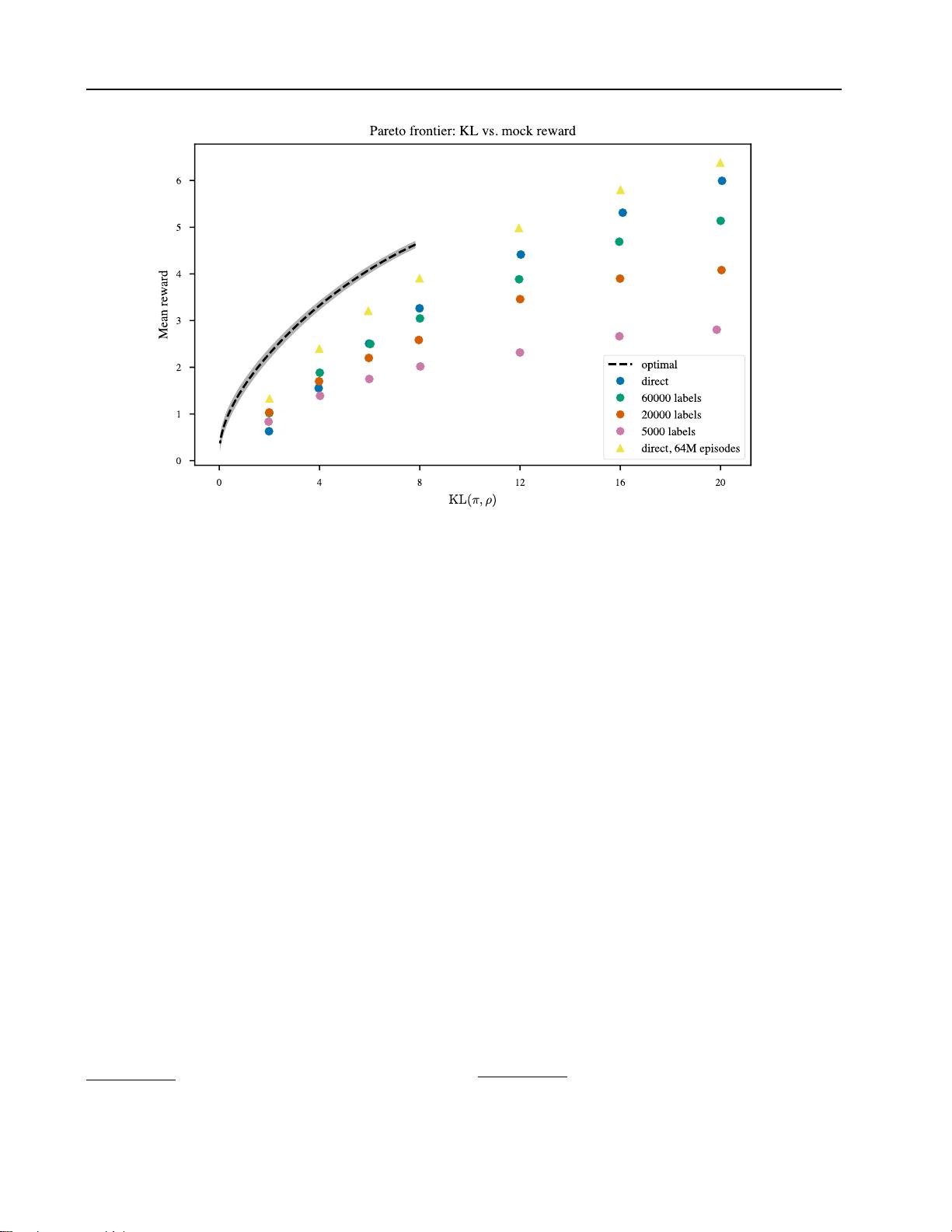

Figure 3: Allowing the policy $\pi$ to move further from the initial policy $\rho$, as measured by $\mathrm{KL}(\pi, \rho)$, achieves higher reward at the cost of less natural samples. Here we show the optimal KL vs. reward for 124M-parameter mock sentiment (as estimated by sampling), together with results using PPO. Runs used 2M episodes, except for the top series.
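The trade-off in Figure 3 can be illustrated on a toy problem. The sketch below replaces text with a small finite output space and relies on the standard result that the policy maximizing $\mathbb{E}_\pi[r] - \beta\,\mathrm{KL}(\pi, \rho)$ is $\pi_\beta(y) \propto \rho(y)\exp(r(y)/\beta)$. Sweeping the coefficient `beta` (a name chosen for this illustration) traces an optimal KL-vs-reward curve. The distribution `rho` and the rewards `r` are made up and are not the paper's data.

```python
import numpy as np

def tilted_policy(rho, r, beta):
    """Maximizer of E_pi[r] - beta * KL(pi, rho) over distributions on a finite set:
    pi(y) is proportional to rho(y) * exp(r(y) / beta)."""
    logits = np.log(rho) + r / beta
    logits -= logits.max()  # shift for numerical stability; cancels after normalizing
    pi = np.exp(logits)
    return pi / pi.sum()

def kl(pi, rho):
    """KL(pi, rho) = sum_y pi(y) * log(pi(y) / rho(y))."""
    return float(np.sum(pi * np.log(pi / rho)))

# Toy "initial policy" rho over four outcomes and a made-up reward per outcome.
rho = np.array([0.5, 0.3, 0.15, 0.05])
r = np.array([-1.0, 0.0, 1.0, 2.0])

frontier = []  # (beta, KL, expected reward) for each point on the curve
for beta in [10.0, 1.0, 0.3, 0.1]:
    pi = tilted_policy(rho, r, beta)
    frontier.append((beta, kl(pi, rho), float(pi @ r)))
    print(f"beta={beta:5.1f}  KL={frontier[-1][1]:.3f}  E[r]={frontier[-1][2]:.3f}")
```

Smaller `beta` buys more expected reward at the price of a larger KL. For a language model the same curve cannot be enumerated exactly and has to be estimated by sampling, as the caption notes.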

We release code² for reward modeling and fine-tuning in the offline data case. Our public version of the code only works with a smaller 124M-parameter model with 12 layers, 12 heads, and embedding size 768. We include fine-tuned versions of this smaller model, as well as some of the human labels we collected for our main experiments (note that these labels were collected from runs using the larger model).
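As a sanity check on the "124M" figure, the sketch below counts parameters for a GPT-2-style decoder with 12 layers and embedding size 768. The vocabulary size (50,257), context length (1,024), 4× MLP width, biases, and tying of input and output embeddings are assumptions about the architecture, not details stated here. The number of heads (12) does not enter the count, because the heads partition the same projection matrices.

```python
def gpt2_style_param_count(n_layer=12, d_model=768, n_vocab=50257, n_ctx=1024):
    """Parameter count for a GPT-2-style decoder.
    Assumed: tied input/output embeddings, 4x MLP width, biases, LayerNorm gain and bias."""
    token_emb = n_vocab * d_model  # also reused as the output projection (assumed tying)
    pos_emb = n_ctx * d_model  # learned position embeddings
    layer_norm = 2 * d_model  # gain and bias
    attn = d_model * 3 * d_model + 3 * d_model  # fused query/key/value projection
    attn += d_model * d_model + d_model  # attention output projection
    mlp = d_model * 4 * d_model + 4 * d_model  # expand to 4 * d_model
    mlp += 4 * d_model * d_model + d_model  # project back to d_model
    per_layer = 2 * layer_norm + attn + mlp
    return token_emb + pos_emb + n_layer * per_layer + layer_norm  # plus final LayerNorm

print(f"{gpt2_style_param_count():,}")  # 124,439,808
```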

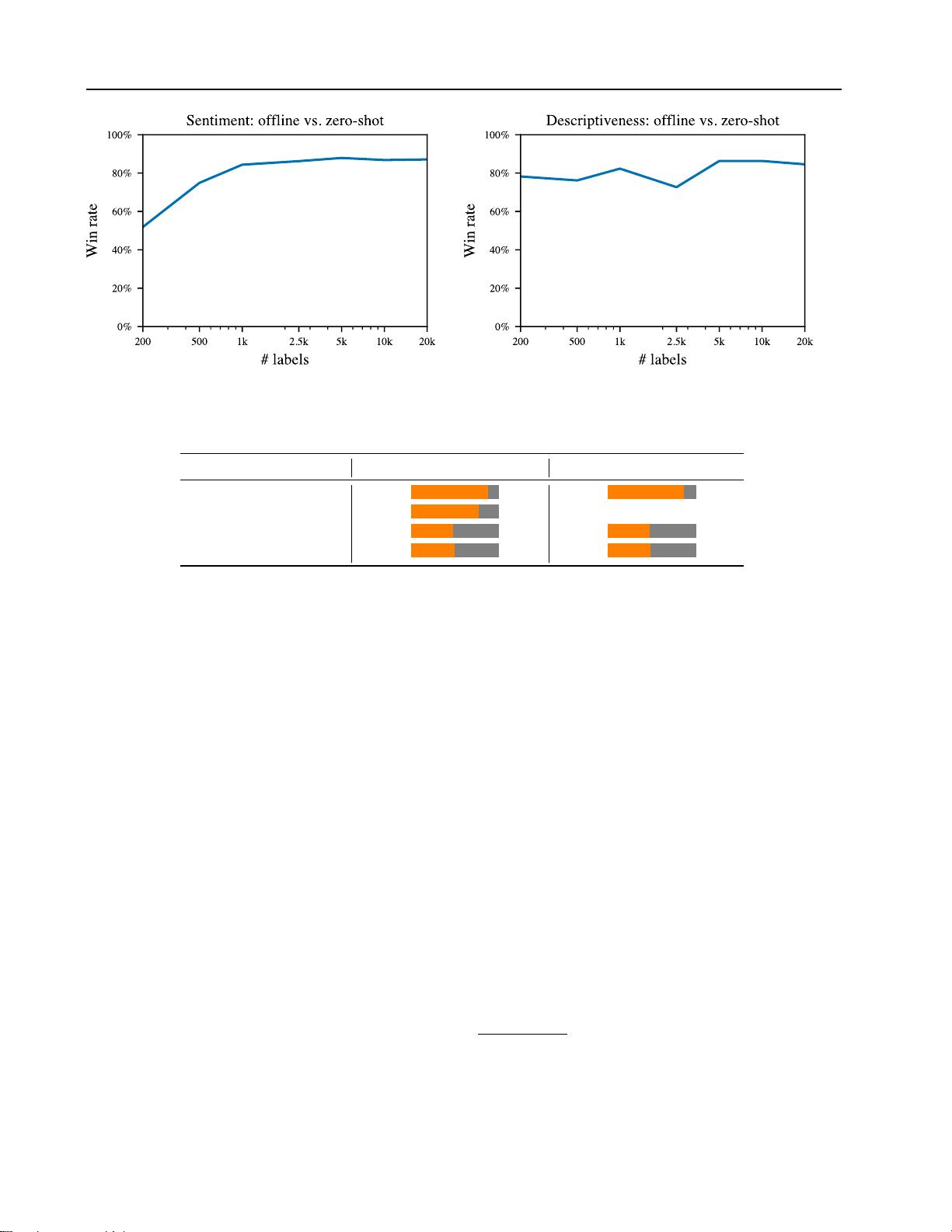

3.1. Stylistic continuation tasks

We first apply our method to stylistic text continuation tasks, where the policy is presented with an excerpt from the BookCorpus dataset (Zhu et al., 2015) and generates a continuation of the text. The reward function evaluates the style of the concatenated text, either automatically or based on human judgments. We sample excerpts with lengths of 32 to 64 tokens, and the policy generates 24 additional tokens. We set the temperature of the pretrained model to $T = 0.7$ as described in section 2.1.
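The query construction and temperature sampling described above can be sketched as follows. `next_token_logits` is a hypothetical stand-in for the pretrained language model (any callable mapping a token list to a vector of logits), and drawing the excerpt length uniformly from 32 to 64 is an assumption; the text only gives the range.

```python
import numpy as np

def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities after dividing them by the temperature T."""
    z = np.asarray(logits, dtype=float) / temperature
    z -= z.max()  # subtract the max for numerical stability
    p = np.exp(z)
    return p / p.sum()

def make_query_and_continuation(corpus_tokens, next_token_logits, rng,
                                n_new=24, temperature=0.7):
    """Crop an excerpt of 32..64 tokens, then sample n_new tokens at temperature T.
    next_token_logits is a hypothetical model callable: list of token ids -> logits."""
    length = int(rng.integers(32, 65))  # assumption: length uniform over 32..64 inclusive
    start = int(rng.integers(0, len(corpus_tokens) - length + 1))
    query = list(corpus_tokens[start:start + length])
    continuation = []
    for _ in range(n_new):
        probs = softmax_with_temperature(next_token_logits(query + continuation), temperature)
        continuation.append(int(rng.choice(len(probs), p=probs)))
    return query, continuation
```

A temperature below 1 sharpens the distribution toward the most likely tokens, trading diversity for samples that stay closer to the model's modes.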

3.1.1. MOCK SENTIMENT TASK

To study our method in a controlled setting, we first apply it to optimize a known reward function $r_s$ designed to reflect some of the complexity of human judgments. We construct $r_s$ by training a classifier³ on a binarized, balanced subsample of the Amazon review dataset of McAuley et al. (2015). The classifier predicts whether a review is positive or negative, and we define $r_s(x, y)$ as the classifier's log odds that a review is positive (the input to the final sigmoid layer).

² Code at https://github.com/openai/lm-human-preferences.
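The data preparation and reward definition for the mock sentiment task can be sketched as follows. The star-rating thresholds used to binarize reviews (4 or 5 stars positive, 1 or 2 negative, 3 dropped) are a hypothetical rule, not one stated in the text, and the classifier itself is not shown. The point of the second part is that the pre-sigmoid output is exactly the log odds $\log\frac{p}{1-p}$ of the positive class.

```python
import math
import random

def binarize_and_balance(reviews, seed=0):
    """reviews: list of (text, stars).
    Hypothetical labeling rule: stars >= 4 -> positive (1), stars <= 2 -> negative (0), 3 dropped.
    Balancing: downsample both classes to the size of the smaller one."""
    pos = [(text, 1) for text, stars in reviews if stars >= 4]
    neg = [(text, 0) for text, stars in reviews if stars <= 2]
    n = min(len(pos), len(neg))
    rng = random.Random(seed)
    data = rng.sample(pos, n) + rng.sample(neg, n)
    rng.shuffle(data)
    return data

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def mock_sentiment_reward(positive_logit):
    """r_s(x, y): the classifier's pre-sigmoid output on the concatenated text x + y.
    It equals the log odds log(p / (1 - p)), where p = sigmoid(logit) = P(positive)."""
    return positive_logit
```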

Optimizing $r_s$ without constraints would lead the policy to produce incoherent continuations, but as described in section 2.2 we include a KL constraint that forces it to stay close to a language model $\rho$ trained on BookCorpus.
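Section 2.2 is not reproduced in this section, so the sketch below assumes the KL term enters as a per-sample penalty, $R(x, y) = r_s(x, y) - \beta \log \frac{\pi(y \mid x)}{\rho(y \mid x)}$, whose average over samples $y \sim \pi$ estimates $\mathbb{E}_\pi[r_s] - \beta\,\mathrm{KL}(\pi, \rho)$. The coefficient `beta` and the per-token log-probability inputs are names chosen for illustration. The second half checks on a toy three-outcome distribution that averaging the log ratio over samples from $\pi$ recovers the KL divergence.

```python
import numpy as np

def penalized_reward(r_s, token_logp_policy, token_logp_ref, beta):
    """Assumed form: R(x, y) = r_s(x, y) - beta * log(pi(y|x) / rho(y|x)).
    log pi(y|x) is the sum of per-token log-probs of continuation y under each model."""
    log_ratio = np.sum(token_logp_policy) - np.sum(token_logp_ref)
    return r_s - beta * log_ratio

# The mean of log(pi / rho) over samples y ~ pi is a Monte Carlo estimate of KL(pi, rho).
rng = np.random.default_rng(0)
pi = np.array([0.7, 0.2, 0.1])   # toy policy
rho = np.array([0.4, 0.4, 0.2])  # toy reference model
samples = rng.choice(3, size=200_000, p=pi)
kl_estimate = float(np.mean(np.log(pi[samples] / rho[samples])))
kl_exact = float(np.sum(pi * np.log(pi / rho)))
print(f"KL estimate {kl_estimate:.4f} vs exact {kl_exact:.4f}")
```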

The goal of our method is to optimize a reward function using only a small number of queries to a human. In this mock sentiment experiment, we simulate human judgments by assuming that the "human" always selects the continuation with the higher reward according to $r_s$, and ask how many queries we need to optimize $r_s$.
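The simulated labeler described above can be sketched as a small class that answers each query by picking the highest-scoring continuation and counts the queries it has answered. `reward_fn` is a hypothetical callable standing in for $r_s$; the toy keyword-count reward in the usage lines is made up purely for illustration. With two candidates this is a pairwise comparison, and the same rule extends to any number of candidates. Ties go to the first candidate, a choice the text does not specify.

```python
class SimulatedLabeler:
    """Stand-in for a human labeler in the mock sentiment task: always picks the
    continuation with the highest r_s, and counts how many queries it has answered."""

    def __init__(self, reward_fn):
        self.reward_fn = reward_fn  # hypothetical callable: (query, continuation) -> r_s
        self.num_queries = 0

    def choose(self, query, continuations):
        self.num_queries += 1
        scores = [self.reward_fn(query, y) for y in continuations]
        return max(range(len(scores)), key=scores.__getitem__)  # first index wins ties

# Toy reward in place of the sentiment classifier: count occurrences of "great".
labeler = SimulatedLabeler(lambda x, y: y.count("great"))
choice = labeler.choose("The food was", [" fine.", " great, really great.", " great."])
print(choice, labeler.num_queries)  # 1 1
```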

Figure 2 shows how $r_s$ evolves during training, using either direct RL access to $r_s$ or a limited number of queries to train a reward model. With 20k to 60k queries, we can optimize $r_s$ nearly as well as by using RL to optimize $r_s$ directly.

³ The model is a Transformer with 6 layers, 8 attention heads, and embedding size 512.

Because we know the reward function, we can also analytically compute the optimal policy and compare it to our learned policies. With a constraint on the KL divergence
