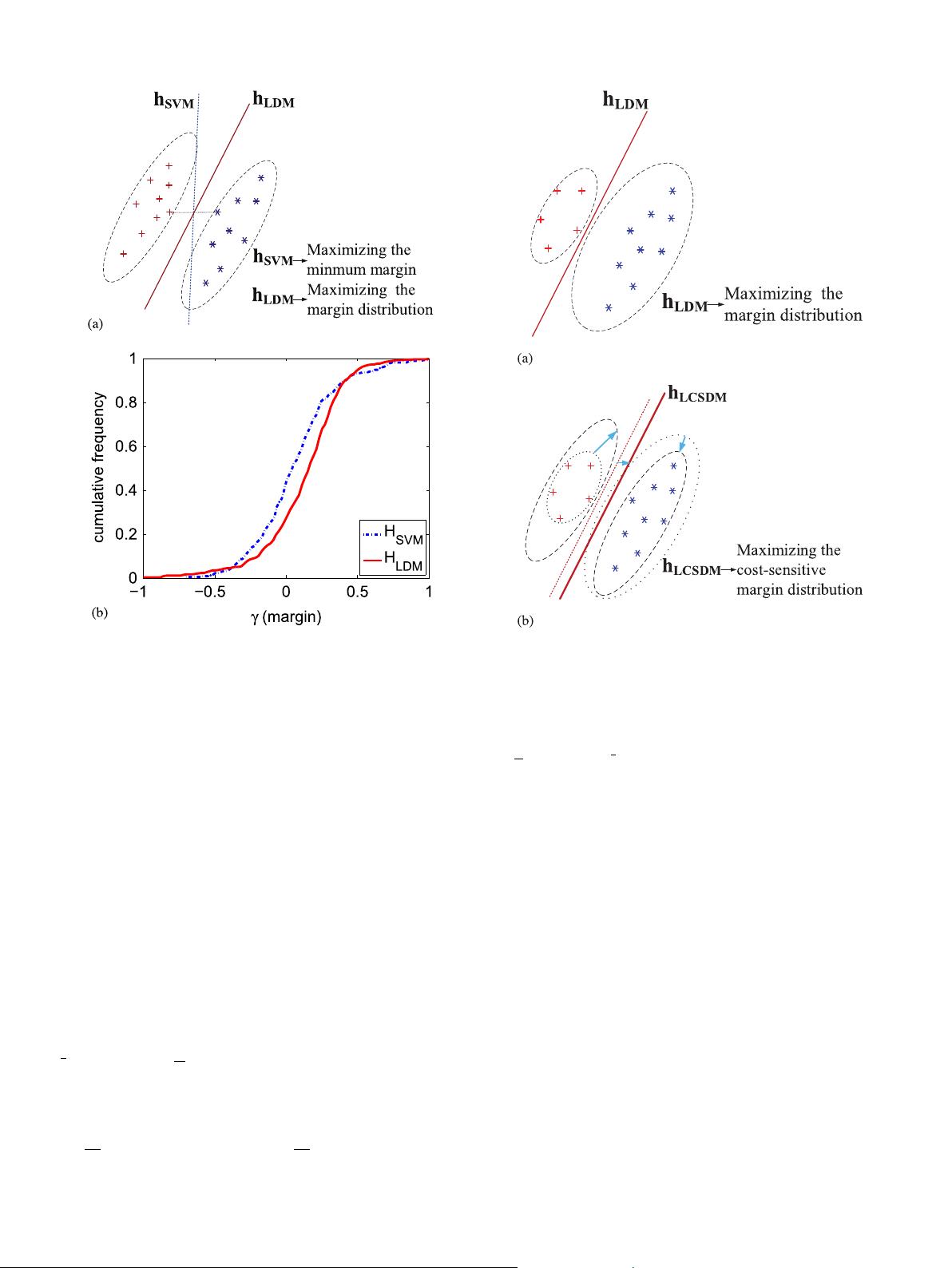

minimum margin (the intersection of the curve and the margin axis) of $H_{LDM}$ is less than that of $H_{SVM}$, but $H_{LDM}$ lies further to the right. This illustrates that SVM has a larger minimum margin, whereas LDM has a larger margin distribution. A natural question is then which of the two is more important. Gao and Zhou [8] answered it, showing that the margin distribution, rather than a single minimum margin, is critical to generalization performance. In the following, an implementation of large margin distribution learning is introduced.
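To make the distinction between a minimum margin and a margin distribution concrete, the sketch below builds an empirical cumulative frequency curve from a set of margins $y_i f(x_i)$, in the spirit of Fig. 1(b). The margin values and the helper `margin_cdf` are hypothetical and chosen only for illustration; they are not taken from the experiments in this paper.

```python
import numpy as np

def margin_cdf(margins):
    """Sort margins and pair them with their empirical cumulative frequencies.

    The first sorted value is the minimum margin, where the curve leaves the
    margin axis; a curve lying further right indicates larger margins overall.
    """
    xs = np.sort(np.asarray(margins, dtype=float))
    freqs = np.arange(1, xs.size + 1) / xs.size
    return xs, freqs

# Hypothetical margins y_i * f(x_i) of two classifiers on the same five examples
margins_a = [1.0, 1.0, 1.1, 1.2, 1.3]   # larger minimum margin (SVM-like)
margins_b = [0.8, 1.5, 1.8, 2.0, 2.2]   # smaller minimum margin, curve mostly further right

for name, margins in (("A", margins_a), ("B", margins_b)):
    xs, freqs = margin_cdf(margins)
    print(name, "minimum margin:", xs[0], "mean margin:", round(float(xs.mean()), 2))
    print("  curve:", list(zip(xs.tolist(), freqs.tolist())))
```

Classifier A plays the role of SVM (larger minimum margin), while the curve of classifier B lies further right over most of its range, mirroring the relation between $H_{SVM}$ and $H_{LDM}$.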

2.2. Large margin distribution machine

LDM, proposed by Zhang and Zhou [10], aims to achieve strong generalization performance by optimizing the margin distribution. The margin distribution is characterized by its first- and second-order statistics, namely the margin mean and the margin variance. The margin mean is formulated as

$$\bar{\gamma} = \frac{1}{m}\sum_{i=1}^{m} y_i\,\omega^T\phi(x_i) = \frac{1}{m}(Xy)^T\omega,$$

where $X = [\phi(x_1), \ldots, \phi(x_m)]$ is the example matrix and $y = [y_1, \ldots, y_m]^T$ is the label vector. The margin variance is formulated as

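$$\hat{\gamma} = \frac{1}{m^2}\sum_{i=1}^{m}\sum_{j=1}^{m}\left(y_i\,\omega^T\phi(x_i) - y_j\,\omega^T\phi(x_j)\right)^2 = \frac{2}{m^2}\left(m\,\omega^T X X^T\omega - \omega^T X y y^T X^T\omega\right).$$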
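The two closed forms can be checked numerically. The sketch below assumes a linear feature map $\phi(x) = x$ and random data (both assumptions for illustration), stores one example per column of `X` as in the definition of $X$, and compares the matrix expressions for $\bar{\gamma}$ and $\hat{\gamma}$ against the direct sums.

```python
import numpy as np

def margin_mean(w, X, y):
    """Closed form (1/m) (X y)^T w; X holds one example phi(x_i) per column."""
    m = X.shape[1]
    return (X @ y) @ w / m

def margin_variance(w, X, y):
    """Closed form (2/m^2) (m w^T X X^T w - w^T X y y^T X^T w), using y_i^2 = 1."""
    m = X.shape[1]
    f = X.T @ w              # vector of w^T phi(x_i)
    s = (X @ y) @ w          # scalar w^T X y
    return 2.0 / m**2 * (m * (f @ f) - s**2)

# Compare with the definitions, assuming a linear feature map phi(x) = x
rng = np.random.default_rng(0)
d, m = 3, 8
X = rng.normal(size=(d, m))
y = rng.choice([-1.0, 1.0], size=m)
w = rng.normal(size=d)

margins = y * (X.T @ w)                                    # y_i w^T phi(x_i)
direct_mean = margins.mean()
direct_var = ((margins[:, None] - margins[None, :]) ** 2).sum() / m**2

print(np.isclose(margin_mean(w, X, y), direct_mean))
print(np.isclose(margin_variance(w, X, y), direct_var))
```

The pairwise sum equals twice the ordinary (population) variance of the margins, which is where the factor 2 in the closed form comes from.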

LDM maximizes the margin mean and minimizes the margin variance simultaneously. The objective function of LDM is formulated as

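$$\min_{\omega,\xi}\ \frac{1}{2}\omega^T\omega + \lambda_1\hat{\gamma} - \lambda_2\bar{\gamma} + C\sum_{i=1}^{m}\xi_i \quad \text{s.t.}\quad y_i\,\omega^T\phi(x_i) \ge 1 - \xi_i,\ \ \xi_i \ge 0,\ \ i \in \mathcal{L}, \tag{4}$$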

where $\lambda_1$, $\lambda_2$ and $C$ are trade-off parameters that weight the margin variance, the margin mean and the training error, respectively, against the model complexity.
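As a rough illustration of problem (4), the sketch below minimizes the LDM objective for a linear feature map by plain subgradient descent, substituting the optimal slacks $\xi_i = \max(0, 1 - y_i\omega^T\phi(x_i))$. The linear map, the step-size schedule, the toy data and all function names are assumptions made for this example; it is not meant to reproduce the solver used in [10], and, like (4), it has no bias term.

```python
import numpy as np

def ldm_objective(w, X, y, lam1, lam2, C):
    """Objective (4) for a linear map phi(x) = x, with xi_i = max(0, 1 - y_i w^T x_i)."""
    m = X.shape[1]
    f = X.T @ w                                             # w^T phi(x_i), one per column of X
    margins = y * f                                         # y_i w^T phi(x_i)
    mean = margins.mean()                                   # margin mean
    var = 2.0 / m**2 * (m * (f @ f) - margins.sum() ** 2)   # margin variance
    xi = np.maximum(0.0, 1.0 - margins)                     # optimal slacks (hinge losses)
    return 0.5 * (w @ w) + lam1 * var - lam2 * mean + C * xi.sum()


def ldm_subgradient(w, X, y, lam1, lam2, C):
    """A subgradient of ldm_objective with respect to w."""
    m = X.shape[1]
    f = X.T @ w
    margins = y * f
    Xy = X @ y
    g = w.copy()                                                  # from 0.5 w^T w
    g += lam1 * 4.0 / m**2 * (m * (X @ f) - margins.sum() * Xy)   # from lam1 * variance
    g -= lam2 * Xy / m                                            # from -lam2 * mean
    active = (margins < 1.0).astype(float)                        # examples with xi_i > 0
    g -= C * (X @ (y * active))                                   # from C * sum(xi)
    return g


def train_linear_ldm(X, y, lam1=0.1, lam2=0.1, C=1.0, lr=0.01, iters=500):
    """Subgradient descent with a 1/sqrt(t) step size; returns the best iterate seen."""
    w = np.zeros(X.shape[0])
    best_w, best_obj = w.copy(), ldm_objective(w, X, y, lam1, lam2, C)
    for t in range(iters):
        w = w - lr / np.sqrt(t + 1) * ldm_subgradient(w, X, y, lam1, lam2, C)
        obj = ldm_objective(w, X, y, lam1, lam2, C)
        if obj < best_obj:
            best_w, best_obj = w.copy(), obj
    return best_w, best_obj


# Hypothetical, balanced two-class toy data in 2-D, one example per column
rng = np.random.default_rng(1)
X = np.hstack([rng.normal(1.5, 1.0, size=(2, 20)), rng.normal(-1.5, 1.0, size=(2, 20))])
y = np.concatenate([np.ones(20), -np.ones(20)])
w, obj = train_linear_ldm(X, y)
accuracy = np.mean(np.sign(X.T @ w) == y)
print(f"objective={obj:.3f}, training accuracy={accuracy:.2f}")
```

Because the hinge term is non-differentiable, a subgradient step is not guaranteed to decrease the objective, so the loop keeps the best iterate seen rather than the last one.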

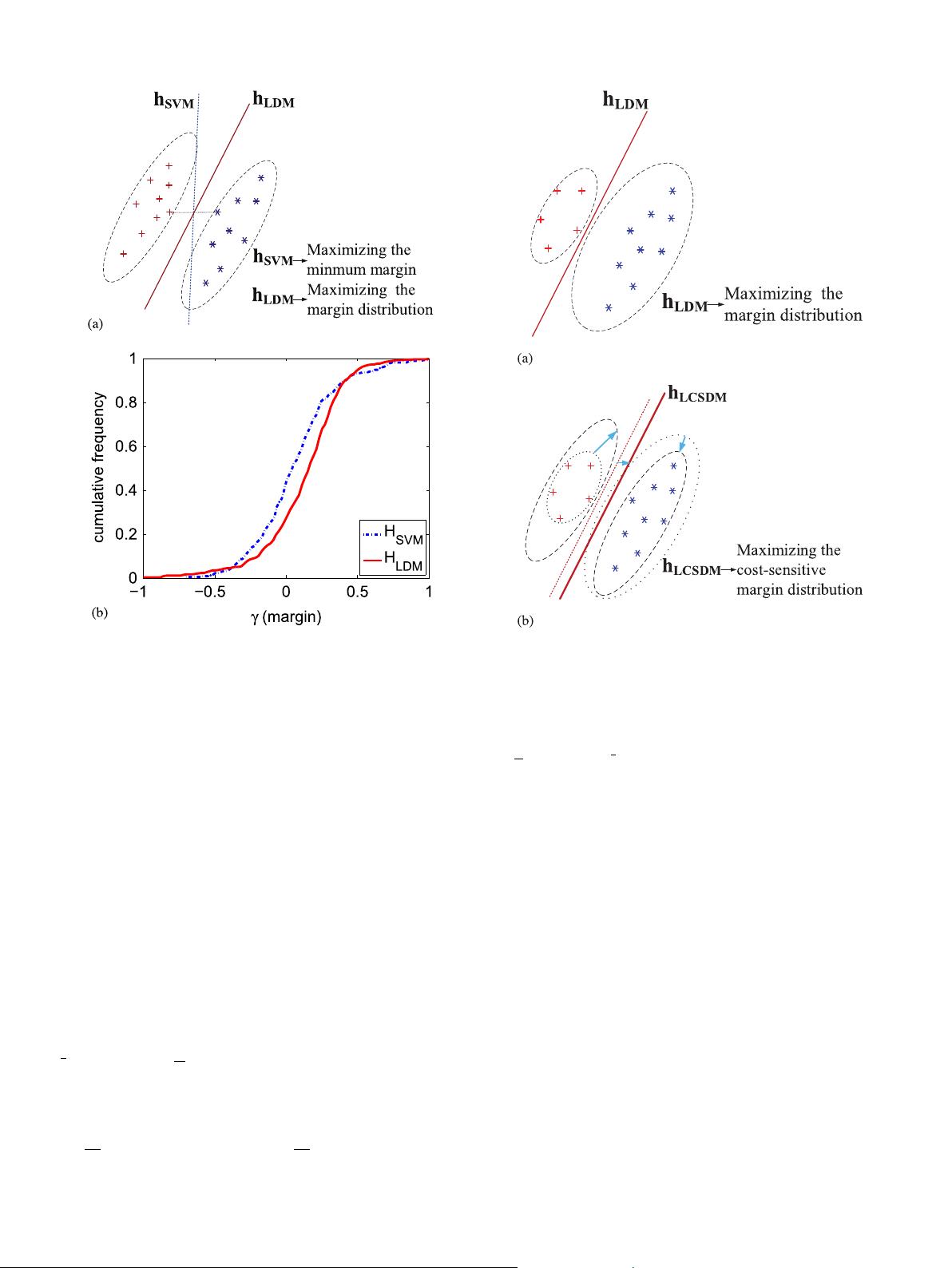

Though LDM performs well on many data sets compared with SVM and other state-of-the-art methods [10], it generally yields an imbalanced margin distribution between the two classes when the training set is imbalanced. As shown in Fig. 2(a), in order to increase the margin mean and decrease the margin variance on imbalanced training data, LDM generally pushes the separator $h_{LDM}$ close to the positive (minority) class and shrinks the region enclosed by the positive support hyperplane, depicted by the left dashed ellipse. This imbalance problem can be effectively addressed by cost-sensitive learning [29]. Therefore, cost-sensitive margin distribution learning is proposed, based on margin distribution theory, to address binary classification on imbalanced training data.

3. Cost-sensitive margin distribution learning

The idea of cost-sensitive margin distribution learning is to enlarge the margin distribution of the positive class by increasing the margin weight of the positive class in the margin mean, decreasing its margin weight in the margin variance, and raising its misclassification penalty. As shown in Fig. 2(b), cost-sensitive margin

Fig. 1. (a) Simple illustration of the separators. The red pluses represent positive examples and the blue stars represent negative examples. $h_{SVM}$ represents the separator of SVM and $h_{LDM}$ represents the separator of LDM. Examples located on different sides of a separator are classified into different categories. (b) Simple illustration of the margin distributions. $H_{SVM}$ is the cumulative frequency curve of SVM, and $H_{LDM}$ is the cumulative frequency curve of LDM. The further right a cumulative frequency curve lies, the larger the margin distribution. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

Fig. 2. (a) Simple illustration of the LDM separator on imbalanced training data. (b) Simple illustration of the LCSDM separator on imbalanced training data. $h_{LDM}$ represents the separator of LDM and $h_{LCSDM}$ represents the separator of LCSDM.

F. Cheng et al.

Neurocomputing 224 (2017) 45–57
