Adversarial Examples in Modern Machine Learning: A Review

conditions via $S^+$ or $S^-$, we identify which measure applies to the most salient pair $(p^*, q^*)$ to decide on the perturbation direction $\theta'$ accordingly. A history vector $\eta$ is added to prevent oscillatory perturbations. Similar to NT-JSMA, M-JSMA terminates when the predicted class $\hat{y}(x) = \arg\max_c f(x)^{(c)}$ no longer matches the true class $y$.
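The direction test can be illustrated with a minimal NumPy sketch. It assumes the pairwise saliency conditions of the original JSMA [80] for a single class $c$: with $\alpha = \sum_{i \in \{p,q\}} \partial f(x)^{(c)}/\partial x_i$ and $\beta = \sum_{i \in \{p,q\}} \sum_{j \neq c} \partial f(x)^{(j)}/\partial x_i$, $S^+$ applies when $\alpha > 0$ and $\beta < 0$ (increase the pair) and $S^-$ applies when $\alpha < 0$ and $\beta > 0$ (decrease it), each scored as $-\alpha\beta$. The function name `most_salient_pair` and the dense Jacobian input are hypothetical; M-JSMA's search over all classes, the history vector $\eta$, and pixel-range bookkeeping are deliberately omitted.

```python
import numpy as np


def most_salient_pair(jac: np.ndarray, c: int):
    """Pick the most salient pixel pair (p*, q*) for class c and its direction.

    jac has shape (num_classes, num_pixels), with jac[j, i] = d f(x)^(j) / d x_i.
    Returns (p, q, +1) if S+ applies, (p, q, -1) if S- applies, or None when
    no pair satisfies either condition. (Illustrative sketch, not M-JSMA itself.)
    """
    d_target = jac[c]                      # d f^(c) / d x_i for every pixel i
    d_others = jac.sum(axis=0) - d_target  # sum over j != c of d f^(j) / d x_i

    # Pairwise sums alpha[p, q] and beta[p, q] via broadcasting.
    alpha = d_target[:, None] + d_target[None, :]
    beta = d_others[:, None] + d_others[None, :]
    score = -alpha * beta                  # positive when alpha, beta have opposite signs

    s_plus = np.where((alpha > 0) & (beta < 0), score, 0.0)   # increase both pixels
    s_minus = np.where((alpha < 0) & (beta > 0), score, 0.0)  # decrease both pixels
    np.fill_diagonal(s_plus, 0.0)          # a pair needs two distinct pixels
    np.fill_diagonal(s_minus, 0.0)

    best_plus = np.unravel_index(np.argmax(s_plus), s_plus.shape)
    best_minus = np.unravel_index(np.argmax(s_minus), s_minus.shape)
    if s_plus[best_plus] == 0 and s_minus[best_minus] == 0:
        return None
    if s_plus[best_plus] >= s_minus[best_minus]:
        return int(best_plus[0]), int(best_plus[1]), +1
    return int(best_minus[0]), int(best_minus[1]), -1


# Toy Jacobian: 2 classes, 3 pixels.
jac = np.array([[1.0, 2.0, -0.5],
                [-1.0, -2.0, 0.5]])
print(most_salient_pair(jac, 0))   # (0, 1, 1): increase pixels 0 and 1
print(most_salient_pair(-jac, 0))  # (0, 1, -1): decrease pixels 0 and 1
```

The sign returned by the sketch plays the role of the perturbation direction: the same pair is pushed up or down depending on which of the two conditions it satisfies.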

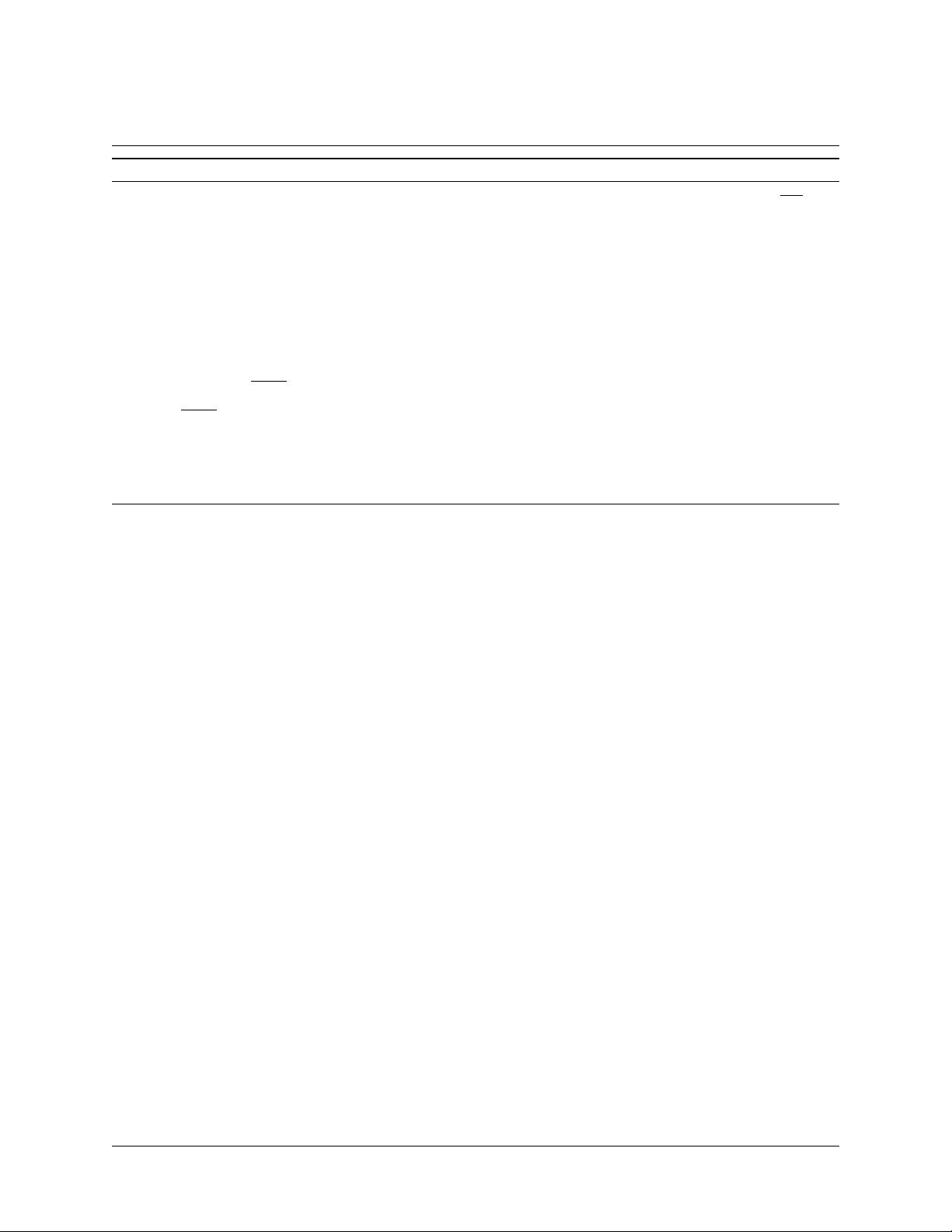

Table 4: Performance comparison of the original JSMA, non-targeted JSMA, and maximal JSMA variants (|θ| = 1, = 1): % of successful attacks, average $L_0$ and $L_2$ perturbation distances, and average entropy $H(f(x))$ of misclassified softmax prediction probabilities.

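| Attack | MNIST % | MNIST $L_0$ | MNIST $L_2$ | MNIST $H$ | F-MNIST % | F-MNIST $L_0$ | F-MNIST $L_2$ | F-MNIST $H$ | CIFAR10 % | CIFAR10 $L_0$ | CIFAR10 $L_2$ | CIFAR10 $H$ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| JSMA+F | 100 | 34.8 | 4.32 | 0.90 | 99.9 | 93.1 | 6.12 | 1.22 | 100 | 34.7 | 3.01 | 1.27 |
| JSMA-F | 100 | 32.1 | 3.88 | 0.88 | 99.9 | 82.2 | 4.37 | 1.21 | 100 | 36.9 | 2.13 | 1.23 |
| NT-JSMA+F | 100 | 17.6 | 3.35 | 0.64 | 100 | 18.8 | 3.27 | 1.03 | 99.9 | 17.5 | 2.36 | 1.16 |
| NT-JSMA-F | 100 | 19.7 | 3.44 | 0.70 | 99.9 | 33.2 | 2.99 | 0.98 | 99.9 | 19.6 | 1.68 | 1.12 |
| M-JSMA_F | 100 | 14.9 | 3.04 | 0.62 | 99.9 | 18.7 | 3.42 | 1.02 | 99.9 | 17.4 | 2.16 | 1.12 |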

Table 4 summarizes attacks carried out on correctly classified test-set instances of the MNIST [116], Fashion MNIST [119], and CIFAR10 [120] datasets, using the targeted, non-targeted, and maximal JSMA variants. For targeted attacks, we consider, for each instance, only the adversary that was misclassified in the fewest iterations across all target classes. The JSMA+F results show that on average only $34.8 / (28 \times 28) \approx 4.4\%$ of the pixels of an MNIST image (average $L_0$ distance divided by the number of pixels) needed to be perturbed to create adversaries, corroborating findings from [80]. More importantly, as evidenced by lower $L_0$ values, NT-JSMA found adversaries much faster than the fastest targeted attacks across all 3 datasets, while M-JSMA was consistently even faster and, on MNIST, perturbed on average only $14.9 / (28 \times 28) \approx 1.9\%$ of input pixels. Additionally, the adversaries found by NT-JSMA and M-JSMA were generally of higher quality, as indicated by smaller $L_2$ perceptual differences between the adversaries $x'$ and the original inputs $x$, and by lower misclassification uncertainty as reflected by the prediction entropy $H(f(x)) = -\sum_c f(x)^{(c)} \cdot \log f(x)^{(c)}$. Since M-JSMA considers all possible class targets, as well as both the $S^+$ and $S^-$ metrics and perturbation directions, these results show that it inherits the combined benefits of both the original JSMA and NT-JSMA.
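The two quantities used in this discussion can be recomputed directly. The sketch below converts the MNIST $L_0$ averages from Table 4 into pixel fractions and evaluates the prediction entropy $H$. The helper names are hypothetical, and the natural logarithm is an assumption, since the base of $\log$ is not stated here.

```python
import numpy as np

MNIST_PIXELS = 28 * 28  # 784 pixels per MNIST image


def l0_fraction(l0_distance: float, num_pixels: int = MNIST_PIXELS) -> float:
    """Fraction of input pixels modified, given an average L0 distance."""
    return l0_distance / num_pixels


def prediction_entropy(probs) -> float:
    """H(f(x)) = -sum_c f(x)^(c) * log f(x)^(c), using the natural log (assumed).

    Zero-probability classes contribute nothing (convention 0 * log 0 = 0).
    """
    p = np.asarray(probs, dtype=float)
    nz = p[p > 0]
    return float(-(nz * np.log(nz)).sum())


# Average MNIST L0 distances taken from Table 4.
for name, l0 in [("JSMA+F", 34.8), ("NT-JSMA+F", 17.6), ("M-JSMA_F", 14.9)]:
    print(f"{name}: {100 * l0_fraction(l0):.1f}% of pixels")
# JSMA+F: 4.4% of pixels
# NT-JSMA+F: 2.2% of pixels
# M-JSMA_F: 1.9% of pixels

print(f"{prediction_entropy([0.9, 0.1]):.3f}")  # 0.325: confident prediction
print(f"{prediction_entropy([0.5, 0.5]):.3f}")  # 0.693: maximally uncertain over 2 classes
```

A confidently misclassified input concentrates probability on one class and yields a low $H$, which is why lower entropy in Table 4 is read as lower misclassification uncertainty.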

4.9 Substitute Blackbox Attack

All of the techniques covered so far are whitebox attacks, relying upon access to a model’s internals. Papernot et al. [41] proposed one of the early practical blackbox methods, called the Substitute Blackbox Attack (SBA). The key idea is to train a substitute model to mimic the blackbox model and then apply whitebox attack methods to this substitute, leveraging the transferability property of adversarial examples. Concretely, the attacker first gathers a synthetic dataset, queries the targeted model for its predictions on that dataset, and then trains a substitute model to imitate those predictions.
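These three steps can be sketched on a toy problem. Everything here is a hypothetical stand-in: a 2-D linear "blackbox" whose weights the attacker never reads, Gaussian samples as the synthetic dataset, and a logistic-regression substitute trained by plain gradient descent on the oracle's labels. It only illustrates the query-then-imitate workflow, not the architectures or training setup used in [41].

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical blackbox oracle: the attacker may only query its predicted labels.
_secret_w = np.array([2.0, -1.0])
_secret_b = 0.5


def oracle_labels(x: np.ndarray) -> np.ndarray:
    """Return the blackbox model's predicted class (0.0 or 1.0) for each row of x."""
    return (x @ _secret_w + _secret_b > 0).astype(float)


def train_substitute(x: np.ndarray, y: np.ndarray, lr: float = 0.5, steps: int = 2000):
    """Fit a logistic-regression substitute to the oracle's labels by gradient descent."""
    w = np.zeros(x.shape[1])
    b = 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(x @ w + b)))  # substitute's P(class 1)
        grad = p - y                              # d(cross-entropy) / d(logit)
        w -= lr * x.T @ grad / len(y)
        b -= lr * grad.mean()
    return w, b


# 1) Gather a synthetic dataset, 2) label it by querying the oracle, 3) train the substitute.
x_syn = rng.normal(size=(200, 2))
y_syn = oracle_labels(x_syn)
w_sub, b_sub = train_substitute(x_syn, y_syn)

# How often the substitute agrees with the oracle on fresh inputs.
x_test = rng.normal(size=(1000, 2))
agreement = np.mean((x_test @ w_sub + b_sub > 0) == oracle_labels(x_test).astype(bool))
print(f"substitute/oracle agreement: {agreement:.3f}")
```

The attacker touches the blackbox only through `oracle_labels`; the substitute's weights `w_sub` and `b_sub` are fully known and can therefore be attacked with any whitebox method.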

After the substitute model is trained, adversaries can be generated using any whitebox attack, since the details of the substitute model are known (e.g., [41] used FGSM [32] (see Section 4.2) and JSMA [80] (see Section 4.8)). We name each SBA variant after the attack used against the substitute model. For example, if the attacker uses FGSM to attack the substitute model, we refer to this as FGSM-SBA.
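Once a substitute is available, FGSM-SBA reduces to running FGSM on the substitute and submitting the result to the blackbox. The toy sketch below assumes a logistic-regression substitute, for which the input gradient of the cross-entropy loss has the closed form $(\sigma(w^\top x + b) - y)\,w$. Both weight vectors are hypothetical values chosen for illustration, and the substitute's weights are simply fixed rather than trained.

```python
import numpy as np


def fgsm_logistic(x: np.ndarray, y: int, w: np.ndarray, b: float, eps: float) -> np.ndarray:
    """FGSM against a logistic-regression model with weights (w, b).

    For cross-entropy loss L and logit z = w.x + b, dL/dx = (sigmoid(z) - y) * w,
    so the adversary is x + eps * sign(dL/dx).
    """
    z = x @ w + b
    grad_x = (1.0 / (1.0 + np.exp(-z)) - y) * w
    return x + eps * np.sign(grad_x)


# Hypothetical substitute the attacker has trained (whitebox: weights known) ...
w_sub, b_sub = np.array([1.8, -1.1]), 0.4
# ... and a hypothetical blackbox target whose weights the attacker never sees.
w_bb, b_bb = np.array([2.0, -1.0]), 0.5

x = np.array([1.0, 0.5])  # blackbox logit 2.0 > 0, so it predicts class 1
x_adv = fgsm_logistic(x, y=1, w=w_sub, b=b_sub, eps=1.0)
print(x_adv)                      # [0.  1.5]
print(x_adv @ w_bb + b_bb > 0)    # False: blackbox logit is -1.0, label flips to 0
```

The adversary is crafted using only the substitute's gradient; it fools the blackbox here because the two decision boundaries are similar, which is exactly the transferability property SBA relies on.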

The success of this approach depends on choosing adequately similar synthetic data samples and a substitute model architecture, using high-level knowledge of the target classifier setup. As such, intimate knowledge of the domain and of the targeted model is likely to aid the attacker. Even in the absence of specific expertise, the transferability property suggests that adversaries generated from a well-trained substitute model are likely to fool the targeted model as well.

Papernot et al. [41] note that in practice the attacker cannot make an unlimited number of queries to the targeted model. Consequently, the authors introduced the Jacobian-based Dataset Augmentation technique, which generates a limited number of additional samples around a small initial synthetic dataset to efficiently replicate the target model’s decision boundaries. Concretely, given an initial sample $x$, one calculates the
